Cloud-Native DevOps: Your World to New Possibilities

Building applications tailored for the cloud has given rise to the cloud-native paradigm, driven by tools like Kubernetes and companies like Amazon.


In DevOps, everyone needs to trust that everyone else is doing their best for the business. This can happen only when teams trust each other, share goals, and follow standard practices. For example, Devs need to talk to Ops about the impact of their code, the risks involved, and the challenges, so that Ops is well aware and prepared to maintain the stability of the system if any unexpected incidents occur.

When you first embrace DevOps, failures are inevitable, but that doesn't mean you should stop innovating.

That's how you learn, right? If you think you can prevent failure, then you aren't developing your ability to respond. Old ways of developing and delivering software are being replaced with new, faster, easier, and more powerful alternatives, like the cloud-native approach.

This is how applications are built today, and it is the driving force behind digital transformation in modern enterprises. That is what we will dive into in this article.

“If you are not prepared to be wrong, you’ll never come up with anything original.” — Ken Robinson

Cloud-Native: What It Is and How It All Started

At a high level, cloud-native architecture means adapting to the many new possibilities the cloud offers, which empower innovation and pave the way for digital transformation. However, the cloud also imposes a very different set of architectural constraints compared to traditional on-premises infrastructure and approaches. Keep in mind that cloud-native is not just about containers, nor is it a synonym for microservices.

Cloud-native really started to grow around 2010, popularized by a couple of industry thought leaders. One of them is Paul Fremantle, who wrote about it on his blog. Rather than focusing on tools and technologies, he argued that for systems to behave well on the cloud, they need to be written for the cloud. This is where the cloud-native approach began.

The cloud-native concept was then put forward and made famous by Matt Stine at Pivotal in 2013. In 2015, Matt Stine defined several characteristics of cloud-native architectures in the book, Migrating to Cloud Native Application Architectures.

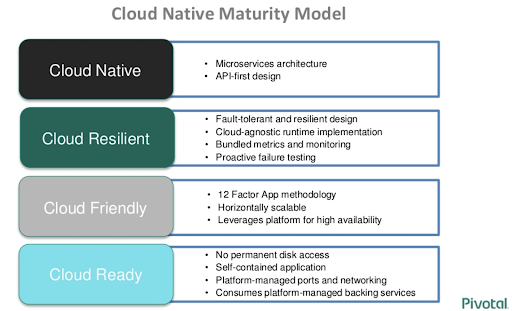

See the cloud-native maturity model below.

Image source: Pivotal Software, Inc.

Containers and microservices give a boost to the cloud-native model, but that’s not everything.

Tools and technologies power today's application deployments. Cloud-native computing takes advantage of many modern methods, including PaaS, multi-cloud, microservices, Agile methodology, containers, CI/CD, and DevOps. The movement even has its own foundation: the Cloud Native Computing Foundation (CNCF), launched in 2015 by the Linux Foundation.

It can be a bit overwhelming for newcomers who see this whole technology stack for the first time, but that is completely fine. The CNCF Landscape gives an overall view of the many projects involved and classifies them to help the cloud community understand them. Through this landscape of open source projects, the CNCF helps end-user communities find viable options for building cloud-native applications.

Image credits and source: CNCF Cloud Native Interactive Landscape

Cloud-native is about more than just microservices. Cloud-native technologies generally embrace loosely coupled architectures, resiliency, immutable infrastructure, and observability, along with deep automation capabilities that let teams make frequent, high-impact changes predictably. According to a recent CNCF survey, the use of cloud-native technologies in production has grown by over 200%.
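To make "resiliency" a little more concrete, here is a minimal Python sketch of one common resiliency pattern: retrying a failed call with capped exponential backoff. The function `call_with_retries` and its parameters are hypothetical illustrations, not part of any tool mentioned in this article; the injectable `sleep` argument exists only so the delays can be observed without actually waiting.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying failures with capped exponential backoff.

    The wait before retry k (k = 0, 1, 2, ...) is base_delay * 2**k seconds,
    capped at max_delay. Once max_attempts calls have failed, the last error
    is re-raised to the caller.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure
            sleep(min(max_delay, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")  # the loop always returns or raises


# Usage: a hypothetical dependency that fails twice, then recovers.
calls = {"count": 0}


def flaky_service() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


waits = []
result = call_with_retries(flaky_service, sleep=waits.append)
print(result, waits)  # ok [0.1, 0.2]
```

In production you would usually also add random jitter to the delays and retry only errors known to be transient, which is what the `retry_on` parameter is for.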

Cloud-Native Approach = Better Innovation + Advanced Transformation + Richer Customer Experience

As organizations mature in their cloud approaches, they should focus less on mechanics and more on business results. Containers, microservices, and serverless computing are having an increasingly positive impact on cloud-native development strategies.

According to IDC's worldwide IT industry predictions, by 2021 spending on cloud services and cloud-enabling hardware, software, and services will more than double to over $530 billion, in an increasingly diverse cloud environment that is 20% at the edge and over 90% multi-cloud.

Source: IDC

The biggest advantage of a cloud-native architecture is that it takes you from idea to app in the quickest possible time.

Cloud-native fundamentally boosts cloud automation, which means automatically managing the installation, configuration, and supervision of cloud computing services. It is about using technology to make more reliable decisions about your cloud computing resources at the right time. With cloud automation, the cloud-native approach helps you release software faster.
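A common way cloud automation works, and the model Kubernetes controllers follow, is a reconciliation loop: you declare the desired state, and the automation repeatedly compares it with the observed state and acts to close the gap. The Python sketch below shows only the comparison step for a hypothetical map of service names to instance counts; `reconcile` and the service names are illustrative, and the code does not call any real cloud API.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Return the actions that would move `observed` toward `desired`.

    Both maps are hypothetical: service name -> number of running instances.
    A service missing from `desired` is treated as wanting zero instances.
    """
    actions: List[str] = []
    for name in sorted(desired.keys() | observed.keys()):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if want > have:
            actions.append(f"start {want - have} x {name}")
        elif want < have:
            actions.append(f"stop {have - want} x {name}")
    return actions  # an empty list means the system already matches the spec


desired_state = {"api": 3, "worker": 2}
observed_state = {"api": 1, "worker": 2, "legacy-cron": 1}
print(reconcile(desired_state, observed_state))
# ['start 2 x api', 'stop 1 x legacy-cron']
```

A real controller would run this comparison continuously and actually carry out the actions. Once they succeed, rerunning the comparison returns an empty list, which is what makes the approach safe to repeat.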

Cloud Automation Helps With:

- Lowering cloud costs

- Faster deployments

- Better control over IT resources

- Achieving true DevOps

- Speedy innovation and scalability

Kubernetes – Leading the Cloud-Native Ship in the DevOps Ocean

As of now, nobody can stop Kubernetes. It has become the de facto orchestration tool in the software industry. Companies now treat Kubernetes adoption as a priority rather than a mere option. It has become a must-have, go-to platform for automating the deployment, scaling, and management of microservices and containerized applications.
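As an illustration of the scaling side, the Kubernetes Horizontal Pod Autoscaler documentation describes its core calculation as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)]. The Python function below is a minimal sketch of just that formula; the function and parameter names are ours, and the real controller additionally applies a tolerance band, min/max replica bounds, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int, current_metric: float, target_metric: float
) -> int:
    """Core Horizontal Pod Autoscaler ratio, without tolerance or bounds.

    desired = ceil(current_replicas * current_metric / target_metric)
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


# 3 pods averaging 90% CPU against a 60% target: ceil(4.5), scale out to 5.
print(desired_replicas(3, 90, 60))  # 5
# 5 pods averaging 20% CPU against a 50% target: ceil(2.0), scale in to 2.
print(desired_replicas(5, 20, 50))  # 2
```

Rounding up rather than down is the conservative choice: when the ratio is fractional, the autoscaler errs toward having enough capacity.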

After its release in 2013, Docker quickly became the de facto container standard. Released in 2014, Kubernetes stood out among many container orchestration systems and won the container orchestration war. Together, these technologies have significantly lowered the barrier to developing cloud-native applications. The software industry is rallying around Kubernetes clusters as a way to automate the deployment of container collections, and programmers can now use JFrog Artifactory as the Docker registry for their Kubernetes clusters and use containers to automate their workflows.
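To show what automating the deployment of container collections can look like, here is a hedged Python sketch that builds a minimal Kubernetes `apps/v1` Deployment and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The helper `deployment_manifest` is hypothetical, and the image reference `registry.example.com/team/web:1.0.0` is a placeholder for whatever Docker registry your cluster pulls from, such as one hosted in JFrog Artifactory.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal Kubernetes apps/v1 Deployment as a plain dict."""
    labels = {"app": name}  # the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("web", "registry.example.com/team/web:1.0.0", 3, 8080)
print(json.dumps(manifest, indent=2))
```

Saving the output as `deployment.json` and running `kubectl apply -f deployment.json` asks Kubernetes to keep three replicas of the container running. Generating manifests from code like this is one option; many teams keep hand-written YAML in version control instead.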

Datadog's recent survey data showed that Kubernetes is increasingly the first choice among container users and that adoption is growing rapidly.

Source credit: Datadog

The rise of Kubernetes has undoubtedly boosted cloud-native adoption, and Kubernetes has jumped to become the favorite first choice largely because of the pain points it solves as a platform. As a CNCF project, it has a vibrant, fast-growing community and runs on all major clouds. Many more reasons make it remarkable.

While many are preparing to adopt Kubernetes, there are some things you need to consider before proceeding. Here are some questions and tips to work through before getting your applications ready for K8s.

Recently, JFrog's KubeCon findings revealed how big Kubernetes is becoming and how quickly companies are embracing it.

Source Credits: JFrog

DevOps – The Initial Success Story

So, how did it all start?

In 2001, Amazon had a problem: the large, monolithic “big ball of mud” that ran their website, a system called Obidos, was unable to scale. The limiting factor was databases.

CEO Jeff Bezos turned this problem into an opportunity. He wanted Amazon to become a platform that other businesses could leverage, with the ultimate goal of better meeting customer needs. With this in mind, he sent a memo to technical staff, directing them to create a service-oriented architecture. This is where the cloud-native movement began at Amazon.

Meanwhile, as Amazon's teams grew, they faced severe communication problems, so Bezos instructed team leads to form two-pizza teams: teams small enough to be fed by two pizzas. From there, the culture started taking over. This initiative gave Amazon's engineering leaders tremendous confidence to disrupt the IT world. In 2012, Amazon went on record stating that it was doing, on average, 23,000 deploys per day.
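To put 23,000 deploys per day in perspective, spreading them evenly across a day's 86,400 seconds gives the average interval below. This is only an average; it says nothing about how the deploys were actually distributed over the day.

$$
\frac{86{,}400\ \text{s/day}}{23{,}000\ \text{deploys/day}} \approx 3.8\ \text{s between deploys} \qquad (\approx 16\ \text{deploys per minute})
$$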

To avoid the communication overhead that can kill productivity as software development scales, Amazon leveraged one of the most important laws of software development: Conway's Law, which observes that organizations design systems that mirror their own communication structures.
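A common back-of-the-envelope model, popularized by Fred Brooks in The Mythical Man-Month, shows why that overhead grows so quickly. If every member of a team of $n$ people may need to coordinate with every other member, the number of pairwise communication channels is:

$$
C(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad C(8) = 28, \qquad C(50) = 1{,}225.
$$

Under this illustrative model, keeping teams small (two-pizza teams) keeps $C(n)$ small inside each team, and, in the spirit of Conway's Law, well-defined service boundaries keep the channels between teams few. It is a simplification, not a measurement of Amazon's organization.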

As a result, many companies today use AWS to build cloud-native applications, and AWS has much more to offer. Here are some well-known AWS services for cloud-native development:

- Amazon Simple Storage Service (S3): A scalable, low-cost, web-based cloud storage service intended for online backup and archiving of data and application programs.

- Amazon SNS: Amazon Simple Notification Service coordinates the delivery of push notifications from apps to subscribing endpoints or clients. You can publish messages through the AWS Management Console or the APIs to push them to smart devices connected over the internet.

- Amazon CloudFront CDN: Amazon CloudFront is a global content delivery network (CDN) service that securely delivers data, videos, applications, and APIs with low latency and high transfer speeds.

- AWS Lambda: A compute service that lets developers run code without having to manage servers. It executes code only when needed and scales automatically, from a few requests per day to thousands per second.

- Amazon Machine Learning: An AWS service that lets developers build machine learning models, generate predictions, and more, even if they don't have thorough knowledge of ML methods and algorithms.

- Amazon Elastic Load Balancing: ELB is a load-balancing service for AWS deployments that automatically distributes incoming application traffic across multiple targets, such as containers, EC2 instances, and IP addresses, and scales its own capacity as demand increases.
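For a feel of the "run code without managing servers" model, here is a minimal Python handler of the kind AWS Lambda invokes with an `event` and a `context` object. As an illustrative assumption, the function sits behind an Amazon API Gateway proxy integration, which passes query-string parameters in `event["queryStringParameters"]` (or `None` when there are none) and expects a response with `statusCode`, `headers`, and `body`. The name `lambda_handler` is a common convention; the real entry point is whatever handler you configure on the function.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting in the API Gateway proxy response shape."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local usage: Lambda supplies a real context object; None suffices here.
print(lambda_handler({"queryStringParameters": {"name": "DZone"}}, None))
```

Because the handler is a plain function, it can be unit-tested locally like this before being deployed; Lambda then takes care of provisioning and scaling the instances that run it.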

Engineering Culture at Google, The Cloud-Native Way

At Google, writing code correctly, reviewing code, and testing code are all taken very seriously.

Image courtesy: screenshot from last year's swampUP keynote by Melody Meckfessel, VP of Engineering at Google Cloud, and Sam Ramji, VP of Product Management at Google Cloud.

At Google, everything from Gmail to YouTube to Google Search runs in containers – the company launches more than 2 billion new containers each week, or 200,000 each minute. That’s huge, and that’s innovation.

The Three Core Principles of Google’s Engineering Culture Boosting the Cloud-Native Approach:

- Readability – Google cares about the quality of its source code.

- Transparency – Engineers have access to the code, so they can not only find things to reuse but also help fix each other's bugs, which makes collaboration happen.

- Automation – Engineers are encouraged to automate tasks and to use a fast build/test approach.

Google itself is one of the biggest enablers of the cloud-native ecosystem, having created tools like Kubernetes, one of the most searched-for cloud-native tools on the internet, whose success the whole industry is talking about. Google Cloud supports the cloud-native ecosystem by:

- Supporting and enriching communities

- Helping cloud-native architects learn and share freely

- Improving the overall developer experience

- Creating unique and combined expertise throughout

For a more detailed explanation, you can read further: "How Google Cloud supports Kubernetes and the cloud-native ecosystem."

Successful adoption of a cloud-native approach enables organizations to bring new innovations to market faster, accelerating the digital transformation of modern enterprises. The cloud-native approach has become a boon to software-powered firms, reducing cloud overhead costs and increasing productivity and performance by fully exploiting the on-demand, virtually limitless computing power of the cloud, whether private or public.

Published at DZone with permission of Pavan Belagatti, DZone MVB. See the original article here.

