Cloud-Native Compilation: Bringing JVMs Into the Modern Cloud World

As Java continues to mature, it’s important to push for cloud-optimized capabilities that will deliver better performance and lower costs.


Across the industry, companies are trying to rein in runaway cloud costs by squeezing more carrying capacity out of the instances they run in the cloud. Especially in the Java space, developers are trying to fit workloads into smaller and smaller instances and utilize server resources with maximum efficiency. Relying on elastic horizontal scaling to deal with spikes in traffic means that Java workloads must start fast and stay fast. But some antiquated features of the JVM make it hard to effectively utilize the resources on your cloud instances.

It’s time to re-imagine how Java runs in a cloud-centric world. We started by exploring how compilation can be optimized by offloading JIT workloads to cloud resources. Can we achieve optimized code that is both more performant and takes less time to warm up?

In this blog, we will look at:

- The origins of the current JIT compilation model

- The drawbacks of on-JVM JIT compilation

- How cloud-native compilation impacts performance, warm-up time, and compute resources

A Look Back at JIT Compilation

Today’s Java JIT compilation model dates from the 1990s. A lot has changed since the 1990s! But some things, like the Java JIT compilation model, have not. We can do better.

First, a refresher on JIT compilation. When a JVM starts, it runs the program’s portable bytecode in the slower interpreter until it has built a profile of which methods are “hot” and how they are used. The JIT compiler then compiles those methods down to machine code that is highly optimized for the current usage patterns. The JVM runs that code until one of the assumptions behind the optimizations turns out to be wrong. In that case, we get a deoptimization: the optimized code is thrown out and the method runs in the interpreter again until a new optimized version can be produced. When the JVM is shut down, all the profile information and optimized methods are discarded, and on the next run, the whole process starts from scratch.
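To see this warm-up behavior for yourself, here is a minimal sketch that times repeated batches of calls to one small method. The class and method names (`WarmupDemo`, `sumOfSquares`, `timeBatch`) and the batch sizes are made up for illustration. On most JVMs the first batches tend to be slower than the later ones, but the exact numbers depend on your JVM, flags, and hardware, so treat the output as indicative only.

```java
public class WarmupDemo {
    // A small, CPU-bound method that becomes "hot" when called repeatedly.
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    // Calls sumOfSquares `calls` times and returns the elapsed nanoseconds.
    static long timeBatch(int calls, int n) {
        long sink = 0;
        long start = System.nanoTime();
        for (int c = 0; c < calls; c++) {
            sink += sumOfSquares(n);
        }
        long elapsed = System.nanoTime() - start;
        // Use the result so the JIT cannot discard the loop as dead code.
        if (sink == 42) {
            System.out.println(sink);
        }
        return elapsed;
    }

    public static void main(String[] args) {
        // Illustrative sizes only: 10 batches of 20,000 calls each.
        for (int batch = 1; batch <= 10; batch++) {
            long nanos = timeBatch(20_000, 1_000);
            System.out.printf("batch %2d: %d us%n", batch, nanos / 1_000);
        }
    }
}
```

On HotSpot-based JVMs, running the same program with `-XX:+PrintCompilation` logs each method as it is compiled, which makes the hand-off from interpreter to compiled code visible.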

When Java was created in the 90s, there was no such thing as a “magic cloud” of connected, elastic resources that we could spin up and down at will. It was therefore a logical choice to make JVMs, including the JIT compiler, completely encapsulated and self-reliant.

So what are the drawbacks of this approach? Well…

- The JIT compiler must share resources with the threads executing application logic. This caps the resources that can be devoted to optimization, which limits both the speed and the effectiveness of those optimizations. For example, the highest optimization levels of the Falcon JIT compiler in Azul Platform Prime can produce code that is 50%-200% faster on individual methods and workloads. On resource-constrained machines, however, such high optimization levels may not be practical.

- You only need resources for JIT compilation for a tiny fraction of the life of your program. However, with on-JVM JIT compilation, you must reserve that capacity forever.

- Bursts of JIT-related CPU and memory usage at the beginning of a JVM’s life can wreak havoc on load-balancers, Kubernetes CPU throttling, and other parts of your deployment topology.

- JVMs have no memory of past runs. Even if a JVM is running a workload that it has run a hundred times before, it must run it in the interpreter from scratch as if it’s the first time.

- JVMs have no knowledge of other nodes running the same program. Each JVM builds up a profile based on its traffic, but a more performant profile could be built up by aggregating the experience of hundreds of JVMs running the same code.
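The last point can be illustrated with a deliberately simplified toy model. Real JIT profiles hold far more than invocation counts (type profiles and branch frequencies, for example), and nothing below is a JVM or Azul API: the class `ProfileAggregation`, the method names, the counts, and the threshold are all hypothetical. The sketch only shows the idea that methods which look lukewarm on any single node can stand out clearly once the counts from several nodes are combined.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ProfileAggregation {
    // Sums per-method invocation counts across all nodes.
    static Map<String, Long> merge(List<Map<String, Long>> perNodeCounts) {
        Map<String, Long> total = new TreeMap<>();
        for (Map<String, Long> node : perNodeCounts) {
            for (Map.Entry<String, Long> e : node.entrySet()) {
                total.merge(e.getKey(), e.getValue(), Long::sum);
            }
        }
        return total;
    }

    // Returns, in sorted order, the methods whose count reaches the threshold.
    static List<String> hotMethods(Map<String, Long> counts, long threshold) {
        List<String> hot = new ArrayList<>();
        for (Map.Entry<String, Long> e : counts.entrySet()) {
            if (e.getValue() >= threshold) {
                hot.add(e.getKey());
            }
        }
        Collections.sort(hot);
        return hot;
    }

    public static void main(String[] args) {
        // Hypothetical counts observed by three JVMs running the same code.
        Map<String, Long> nodeA = Map.of("Cart.total", 9_000L, "Auth.check", 4_000L);
        Map<String, Long> nodeB = Map.of("Cart.total", 8_000L, "Search.rank", 7_000L);
        Map<String, Long> nodeC = Map.of("Auth.check", 5_000L, "Search.rank", 6_000L);
        long threshold = 10_000L; // illustrative "hot" threshold

        System.out.println("hot on node A alone: " + hotMethods(nodeA, threshold));
        System.out.println("hot in aggregate:    "
                + hotMethods(merge(List.of(nodeA, nodeB, nodeC)), threshold));
    }
}
```

With these made-up numbers, no method crosses the threshold on any single node, while `Cart.total` and `Search.rank` do once the three profiles are merged.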

Offloading JIT Compilation to the Cloud

Today, we do have a “magic cloud” of resources that we can use to offload JVM processes that could be done more effectively elsewhere. That’s why we built the Cloud Native Compiler, a dedicated service of scalable JIT compilation resources that you can use to warm up your JVMs.

Cloud Native Compiler runs as a Kubernetes cluster on your own servers, either on-premises or in the cloud. Because it’s scalable, you can ramp up the service to provide practically unlimited resources for JIT compilation in the short bursts when they are required, then scale it down to near zero when they are not.

When you use cloud-native compilation on Java workloads:

- CPU consumption on the client remains low and steady. You don’t have to reserve capacity for JIT compilation and can right-size your instances to fit just the resource requirements of running your application logic.

- You can afford to run the most aggressive optimizations, resulting in peak performance, regardless of the capacity of your JVM client instance.

- The wall-clock time of warming up your JVM decreases significantly because JIT compilation requests run in parallel across many more threads.
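The effect of extra compiler threads on wall-clock time can be sketched with a back-of-the-envelope model. This is not how Cloud Native Compiler schedules work. It is a simple greedy model that hands each compilation task to whichever worker is least loaded and reports when the last worker finishes. The class name `CompileQueueModel`, the task count, and the per-task cost are illustrative assumptions, and the model ignores network latency, task dependencies, and time spent in the interpreter.

```java
import java.util.Arrays;
import java.util.PriorityQueue;

public class CompileQueueModel {
    // Greedy scheduling: each task goes to the currently least-loaded worker.
    // Returns the time at which the busiest worker finishes (the makespan).
    static long wallClockMillis(long[] taskMillis, int workers) {
        if (workers < 1) {
            throw new IllegalArgumentException("workers must be >= 1");
        }
        PriorityQueue<Long> load = new PriorityQueue<>();
        for (int i = 0; i < workers; i++) {
            load.add(0L);
        }
        for (long t : taskMillis) {
            load.add(load.poll() + t); // extend the least-loaded worker
        }
        long max = 0;
        for (long l : load) {
            max = Math.max(max, l);
        }
        return max;
    }

    public static void main(String[] args) {
        // Assumption for illustration: 200 hot methods, 50 ms each to compile.
        long[] tasks = new long[200];
        Arrays.fill(tasks, 50L);

        System.out.println("2 compiler threads:  " + wallClockMillis(tasks, 2) + " ms");
        System.out.println("16 compiler threads: " + wallClockMillis(tasks, 16) + " ms");
    }
}
```

Under these assumptions, the same 10 seconds of total compilation work takes 5,000 ms of wall-clock time on 2 threads and 650 ms on 16, which is the kind of gap a scalable compilation service is meant to exploit.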

So, Does It Really Make a Difference?

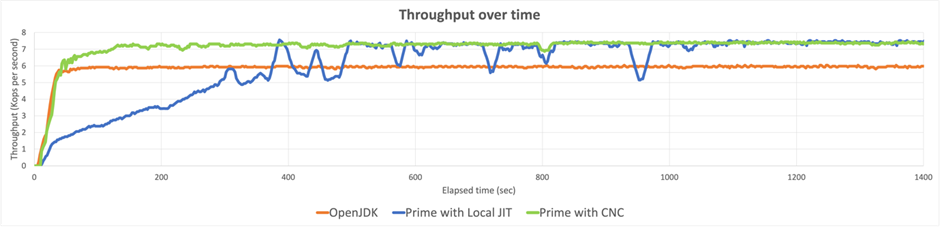

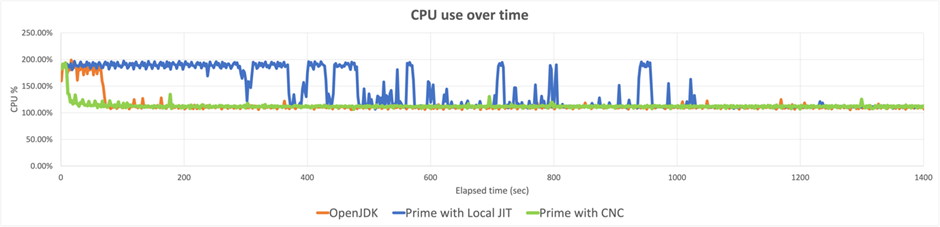

Note what happens when we run the finagle-http Renaissance workload on an extremely resource-constrained 2 vCore machine. Doing heavier optimizations means spending more resources, as shown by the long warm-up curve of Azul Platform Prime with local JIT. With cloud-native compilation engaged, this long warm-up time comes down to the same level as OpenJDK, while the optimized Falcon code continues to deliver higher throughput.

Meanwhile, CPU use on the client remains low and steady, allowing you to allocate more power to running your application logic even during warm-up.

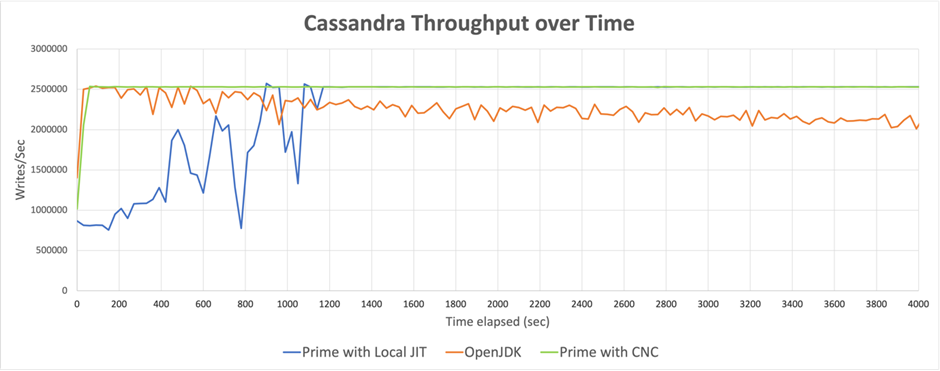

But it’s not just extremely resource-constrained machines that benefit from cloud-native compilation. Let’s look at a more realistic workload: running a three-node Cassandra cluster on an 8 vCore r5.2xlarge AWS instance. With optimization set to the highest level, resulting in high and consistent throughput, warm-up time goes from 20 minutes with local JIT to less than two minutes with cloud-native compilation.

Conclusion

As Java continues to mature, it’s important to push for cloud-optimized capabilities that will deliver better performance and lower costs to enterprise applications built in or for the cloud. Cloud-native compilation is a solid start to advancing how Java runtimes perform their work across on-premises and cloud environments.

Published at DZone with permission of John Ceccarelli. See the original article here.
