Cloud-Agnostic Automated CI/CD for Kubernetes

Take a look at some of the tools and processes that you may want to use to set up a cloud-agnostic production and development environment.

In this article, I would like to discuss one of the approaches that we took to automate end-to-end CI/CD for Kubernetes workloads across different cloud environments. There are other solutions, and cloud providers such as AWS, Microsoft Azure, and GCP offer their own frameworks for achieving the same with Kubernetes.

The core of this deployment model is Rancher, which is responsible for providing centralized administration and management capabilities to multiple Kubernetes clusters hosted on different cloud environments and bare metal environments. The tools mentioned here could be replaced with tools of one's choice, based on the applications and business needs.

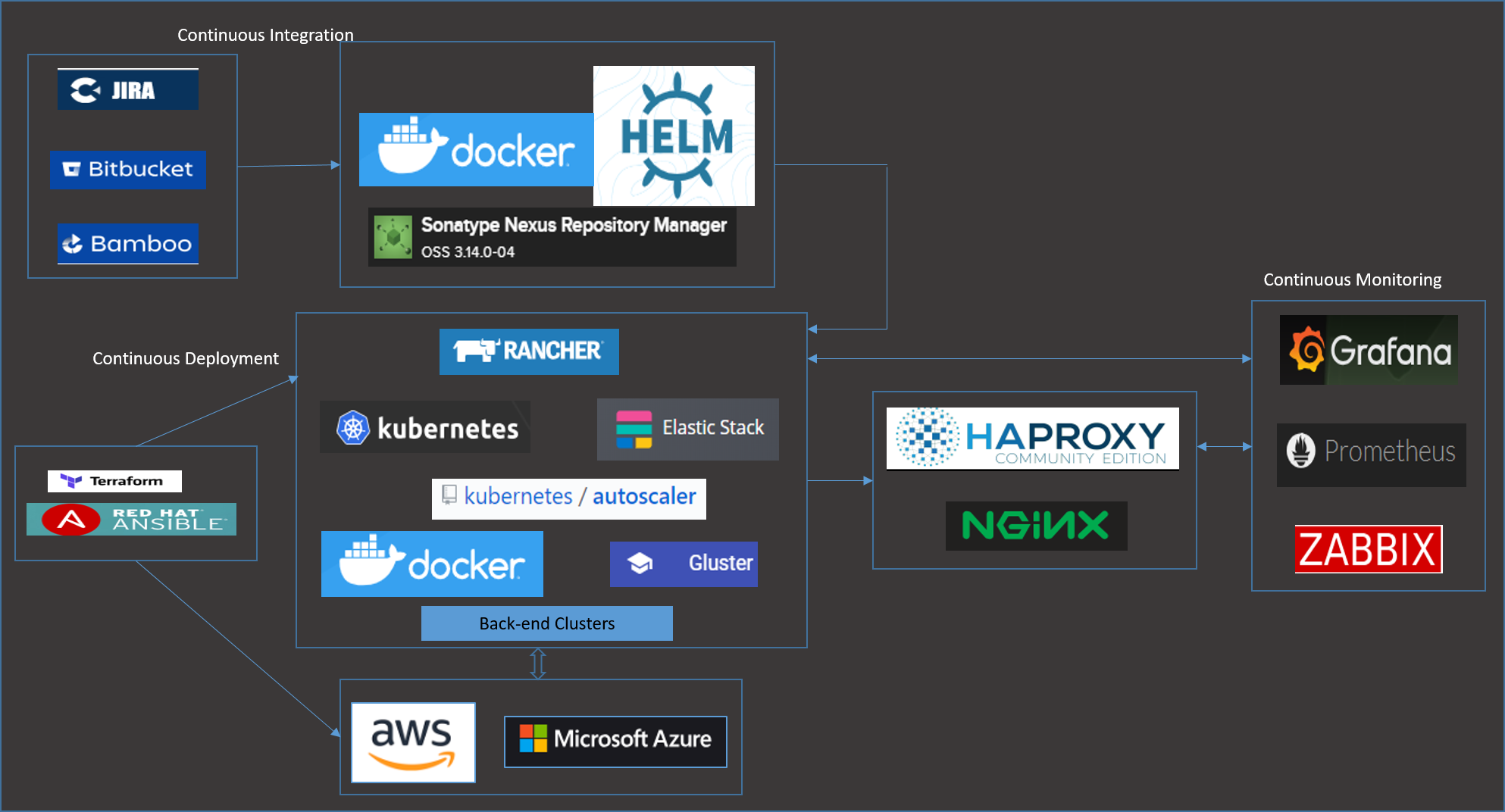

Before I get into details, here is a snapshot of the deployment model:

Continuous Integration Components

We used JIRA, BitBucket, Bamboo, and Nexus to automate the Continuous Integration component. The requirements and user stories come from JIRA; the developers commit their code to BitBucket; Bamboo builds the code and integrates it with code review and static analysis tools; and the Docker images produced by Bamboo are pushed to Nexus. The images also go through container security checks.

When you have many microservices and applications to build, handling workload deployment, upgrades, and rollbacks on a Kubernetes cluster can become complex. Versioning is another challenge to consider. Helm helps to overcome most of these challenges and simplifies deployment. If you're wondering whether to keep one chart that includes all your deployments or a separate chart for each application or microservice, we chose to keep each chart in the repository of the application or microservice it deploys. This removes the effort of maintaining two separate repositories for application code and Helm templates, and it gives developers more control over the template changes needed for any application code change.

Nexus serves as a repository for both Docker images and Helm charts (we used the helm-nexus plugin). The images and charts are pushed to Nexus, and become available there, after every successful application build.
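One way to keep images and charts in step is to derive both identifiers from the same build. The following is a minimal sketch, not the scheme we necessarily used: the function name, the registry host, and the choice of the CI build number as the SemVer patch component are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildArtifacts:
    image_ref: str      # full Docker image reference pushed to the registry
    chart_version: str  # value for `version` in Chart.yaml
    app_version: str    # value for `appVersion` in Chart.yaml


def artifacts_for_build(registry: str, service: str,
                        base_version: str, build_number: int) -> BuildArtifacts:
    """Derive matching image and chart identifiers from one CI build.

    Hypothetical convention: base_version is "MAJOR.MINOR" and the CI
    build number becomes the patch component, so every successful build
    yields a unique, ordered SemVer string for the chart.
    """
    major, minor = base_version.split(".")[:2]
    version = f"{major}.{minor}.{build_number}"
    return BuildArtifacts(
        image_ref=f"{registry}/{service}:{version}",
        chart_version=version,
        app_version=version,
    )


if __name__ == "__main__":
    # nexus.example.com:8083 and "orders" are placeholder names.
    print(artifacts_for_build("nexus.example.com:8083", "orders", "1.4", 57))
```

Tagging the image and the chart with the same version makes it easy to tell which chart release deploys which image when you need to roll back.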

Continuous Deployment Components

To achieve cloud-agnostic provisioning, we chose Terraform, as it is simple to learn and easy to deploy. We didn't find Terraform very useful for configuration management and maintenance activities after provisioning, so we also put some Ansible scripts in place. We could have considered Ansible for provisioning, too, but Terraform gave us more control over launching instances that could be added as Rancher servers or nodes and added to auto-scaling groups automatically. We achieved this using the launch scripts feature.

We thought we could reuse most of the Terraform scripts that we wrote for AWS on Azure, but this was not the case. We had to make considerable changes.

We deployed a high-availability Rancher server that runs on three different instances, with an NGINX server in front to load-balance the three. The deployment was done using Terraform and launch scripts. The scripts use RKE (Rancher Kubernetes Engine) and Rancher API calls to bring up the cluster (the HA Rancher Server).
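To make the three-node layout concrete, here is a minimal sketch of how a launch script might generate the node section of an RKE cluster configuration. The helper name, the IP addresses, and the SSH user are hypothetical, and giving every node all three roles is an assumption about the layout rather than a description of our exact files. The `nodes`, `address`, `user`, and `role` keys are the ones RKE's `cluster.yml` uses.

```python
import json


def rke_cluster_config(addresses, ssh_user="ubuntu"):
    """Build the `nodes` section of an RKE cluster.yml as a Python dict.

    Assumption: every node runs the controlplane, etcd, and worker roles.
    """
    if len(addresses) % 2 == 0:
        # etcd tolerates failures best with an odd number of members.
        raise ValueError("use an odd number of nodes for etcd quorum")
    return {
        "nodes": [
            {
                "address": addr,
                "user": ssh_user,
                "role": ["controlplane", "etcd", "worker"],
            }
            for addr in addresses
        ]
    }


if __name__ == "__main__":
    # Placeholder private IPs for the three Rancher server instances.
    config = rke_cluster_config(["10.0.1.10", "10.0.2.10", "10.0.3.10"])
    # JSON is a subset of YAML 1.2, so this output can be saved as cluster.yml.
    print(json.dumps(config, indent=2))
```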

Rancher provides various options for adding Kubernetes clusters on different cloud providers. You can choose among hosted Kubernetes providers, nodes on an infrastructure provider, or custom nodes. In this scenario, we chose to use custom nodes on AWS and Azure instead of hosted Kubernetes providers. This let us add a group of worker nodes to Auto Scaling groups and use the cluster auto-scaler for node scaling.

All this is automated through launch scripts and Rancher API calls, so that any new node added through the ASG (and auto-scaler) automatically registers as a Rancher/Kubernetes node. Some of the activities that are automated through the launch script are:

Installing and configuring the required Docker version

Installing and configuring Zabbix Agent on all the instances (which will be later used in monitoring)

Installing the required GlusterFS client components

Installing the required kubectl client

Any other custom configuration that is required for backend database clusters

Auto-mounting additional EBS volumes and GlusterFS volumes

Running the Docker container for the Rancher Agent/Kubernetes node and attaching the specific role (etcd/controlplane/worker)

Checking that the Rancher agent is available, up, and running
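The last two steps in the list above can be sketched as a small generator for the launch script. This is an illustration under assumptions, not our actual script: the function names, server URL, token, and image tag are placeholders, and the agent flags follow the node registration command that Rancher 2.x displays for custom clusters as I understand it, so copy the exact command from your own Rancher UI or API.

```python
import shlex

VALID_ROLES = ("etcd", "controlplane", "worker")


def agent_command(server_url, token, agent_image, roles):
    """Return the docker command that registers this host as a cluster node."""
    unknown = [r for r in roles if r not in VALID_ROLES]
    if unknown or not roles:
        raise ValueError(f"roles must be a non-empty subset of {VALID_ROLES}")
    args = [
        "docker", "run", "-d", "--privileged", "--restart=unless-stopped",
        "--net=host",
        "-v", "/etc/kubernetes:/etc/kubernetes",
        "-v", "/var/run:/var/run",
        agent_image,
        "--server", server_url,
        "--token", token,
    ] + [f"--{role}" for role in roles]
    # Quote each argument so the result is safe to paste into a shell script.
    return " ".join(shlex.quote(a) for a in args)


def render_launch_script(server_url, token, agent_image, roles):
    """Render a bash launch script; the install steps are left as placeholders."""
    check = ("docker ps --filter status=running --filter "
             + shlex.quote(f"ancestor={agent_image}") + " --quiet | grep -q .")
    lines = [
        "#!/bin/bash",
        "set -euo pipefail",
        "# (placeholder) install Docker, Zabbix agent, GlusterFS client, kubectl",
        "# (placeholder) mount additional volumes",
        agent_command(server_url, token, agent_image, roles),
        "# fail the launch if no agent container is running",
        check,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_launch_script(
        "https://rancher.example.com",  # placeholder Rancher URL
        "REPLACE_WITH_TOKEN",           # placeholder registration token
        "rancher/rancher-agent:v2.x",   # placeholder image tag
        ["worker"],
    ))
```

Because the role list is a parameter, the same template can serve the etcd, control plane, and worker instance groups.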

We used GlusterFS to provide ReadWriteMany volumes, an access mode that the EBS and Azure Disk volume types do not support. Many of the applications that we deployed required it.
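From the application's point of view, the requirement shows up as the access mode on a PersistentVolumeClaim. Here is a minimal sketch that builds such a claim as a Python dict; the claim name, size, and the storage class name `glusterfs` are hypothetical and depend on how the Gluster volumes are exposed in your cluster.

```python
import json


def shared_volume_claim(name, size_gi, storage_class):
    """Build a PersistentVolumeClaim manifest that many pods can mount read-write."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteMany lets pods on different nodes write to the same
            # volume; block volumes such as EBS attach to one node at a time.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # "shared-uploads" and "glusterfs" are placeholder names.
    print(json.dumps(shared_volume_claim("shared-uploads", 20, "glusterfs"), indent=2))
```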

An ELK stack (Elasticsearch, Logstash, and Kibana) is deployed on Kubernetes using Helm charts and is configured to push container logs, as well as audit and other custom logs, through Logstash.

HAProxy and NGINX are used for two different purposes. NGINX is the default ingress controller provided by Rancher during the Rancher Server HA setup, and it is used to load-balance the three Rancher servers. We deployed an HAProxy ingress controller on the Kubernetes cluster so that we can expose application endpoints through specific nodes (whose IPs are mapped to specific FQDNs). The HAProxy ingress controller is deployed as a DaemonSet, so that when the Auto Scaling groups and auto-scaler add nodes to handle additional load, the ingress controller is automatically scheduled on them.

Continuous Monitoring Components

We deployed the Prometheus, Grafana, and Alertmanager suite for capacity planning and for monitoring the status of the Rancher/Kubernetes cluster. This is again deployed through the Rancher Helm Chart Provisioner, although it could also be deployed through the regular stable Helm charts. It helped us monitor and collect metrics such as CPU utilization, memory utilization, and IO operations in the development environment, and extrapolate from them to do capacity planning for the staging and production environments.
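The extrapolation itself is simple arithmetic. The sketch below shows one way to do it, assuming a linear scaling model and a fixed headroom factor; the function name and all of the numbers are made up for illustration and are not measurements from our environments.

```python
import math


def nodes_needed(peak_per_replica, replicas, node_capacity, headroom=0.3):
    """Estimate the node count from per-replica peaks observed in development.

    peak_per_replica and node_capacity map a resource name to a quantity in
    the same unit. headroom is the fraction of each node kept free for
    spikes and system daemons. Returns (nodes, per-resource breakdown).
    """
    counts = {}
    for resource, peak in peak_per_replica.items():
        usable = node_capacity[resource] * (1 - headroom)
        counts[resource] = math.ceil(peak * replicas / usable)
    # The scarcest resource decides how many nodes are required.
    return max(counts.values()), counts


if __name__ == "__main__":
    # Hypothetical dev measurements: 0.4 cores and 1.5 GiB peak per replica.
    total, breakdown = nodes_needed(
        {"cpu_cores": 0.4, "memory_gib": 1.5},
        replicas=20,
        node_capacity={"cpu_cores": 4, "memory_gib": 16},
    )
    print(total, breakdown)
```

With these example numbers, 20 replicas need 8 cores and 30 GiB, and each node offers 2.8 usable cores and 11.2 GiB, so both resources call for three nodes.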

We also deployed Zabbix Server on our cluster, which is used to monitor various operating system-level and application-specific metrics and alerts for all the nodes that were deployed. This includes any backend database cluster nodes, Kubernetes nodes, Rancher servers, file servers, or any other servers that were provisioned through Terraform. Zabbix Server is configured for node/agent auto-registration, so that any new node added to the cluster, through the auto-scaling group or auto-scaler, is available for monitoring. Any critical alerts from these servers are sent to the required stakeholders.
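On the agent side, auto-registration depends on the agent running active checks against the server and, optionally, on host metadata that a server-side auto-registration action can match. The following is a minimal sketch of how a launch script might render those agent settings; the function name, server address, and metadata string are hypothetical, while `Server`, `ServerActive`, `HostnameItem`, and `HostMetadata` are standard `zabbix_agentd.conf` parameters.

```python
def zabbix_agent_config(server, host_metadata):
    """Render zabbix_agentd.conf lines for an agent that self-registers."""
    if "\n" in host_metadata:
        raise ValueError("host metadata must be a single line")
    lines = [
        f"Server={server}",              # server allowed to poll this agent
        f"ServerActive={server}",        # active checks trigger auto-registration
        "HostnameItem=system.hostname",  # register under the instance hostname
        f"HostMetadata={host_metadata}", # matched by the server-side action
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # zabbix.example.com and the metadata tag are placeholders.
    print(zabbix_agent_config("zabbix.example.com", "k8s-worker linux"), end="")
```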

Conclusion

This is one of the approaches that we followed for building a complete CI/CD tool chain for Kubernetes workloads. This model helped us automate the provisioning of all three environments. With Rancher, we were able to provide a dev environment that each developer could use through the project concept. Each developer gets a node and a project, which are controlled by RBAC, so that they can deploy and test their own changes. No one else can see the project or node details or disturb the Kubernetes workloads deployed by another developer. Since the node registers with the Rancher server automatically, system restarts do not affect the availability of the node. Even in the worst-case scenario, if the node is lost, it is easy to bring up a new node in a couple of minutes. The application can be deployed using the Helm charts directly or with the built-in support for Helm charts provided by Rancher.

These are a few of the high-level components that we deployed to manage the entire environment. We also considered high availability for the Rancher servers, Kubernetes clusters, Gluster file server clusters, and any other backend clusters. In arriving at this approach, we considered the changes and updates required to make the environment production-grade, as well as aspects such as secured access to cluster instances, upgrades, backup and restore, and tiered architecture recommendations as per industry standards.

Hopefully this article gives you some ideas to consider when you are planning to provision a production-grade environment across multiple cloud providers.
