Using Azure DevOps Pipeline With Gopaddle for No-Code Kubernetes Deployments

Simplify the process of building automated pipelines for Azure workloads using gopaddle, a no-code platform for Kubernetes deployments across multi-clouds.

Azure Pipelines is a cloud-based continuous integration and deployment (CI/CD) platform used to build, test, and deploy applications to cloud environments like Azure, AWS, or GCP through Kubernetes Services, Azure Web Apps, and Azure Functions. Azure DevOps pipelines can build container images, push the images to a container registry, and deploy these images to the Kubernetes environments of our choice.

Setting up a pipeline that leverages a custom container registry or deploys to multi-cloud Kubernetes environments requires cloud-specific expertise and is time-consuming. For instance, building a pipeline from scratch requires:

- Containerizing a service - Creating the Dockerfile and Kubernetes YAML files.

- Preparing the agent pool - Agent pools are the environments in which the pipeline scripts are executed, including the container builds. Either Microsoft-hosted agents or self-hosted agents can be used. In either scenario, the agents need to be configured to build and push the Docker images.

- Deployment configuration - Azure Kubernetes Service is the only target Kubernetes environment with a built-in integration; other Kubernetes services require service connections and custom deployment scripts.

Introducing gopaddle

gopaddle is a no-code platform that helps to build, deploy and maintain cloud-native applications across hybrid environments. It automatically generates Dockerfiles and Kubernetes YAML files through the intelligent scaffolding process. gopaddle exposes APIs that can be extended and integrated with other pipeline tools like Azure DevOps.

No-Code and Multi-Cloud Capabilities with Azure DevOps

By integrating gopaddle with Azure DevOps pipelines, developers get no-code automation along with the flexibility of deploying to multiple cloud platforms. The following table illustrates the additional capabilities developers get by integrating both solutions:

| Capability | Azure DevOps (without gopaddle) | Azure DevOps (with gopaddle) |
| --- | --- | --- |
| Releases and Distributions | | |
| Build Environments | Agent Pools - Microsoft-hosted agents and self-hosted agents are supported. Agent pools can be Linux, macOS, or Windows VMs, or Linux/Windows containers. | |
| Registries | Azure Container Registry, Google Container Registry (via Google Service Accounts), and Docker Hub (username/password-based authentication) are supported. For artifacts other than container images, Azure Artifacts or a NuGet repository can be used. | Get additional support for a variety of Docker registries - gopaddle supports Azure Container Registry, AWS Elastic Container Registry, Google Container Registry, Docker Hub private registry, and on-premise container registries like GitHub Registry. |
| Source Control Repos | Azure Repo/TFS, Bitbucket cloud, GitHub cloud, GitHub Enterprise, external Git repositories, and Subversion. Check the service connection types here - https://docs.microsoft.com/en-us/azure/devops/pipelines/library/service-endpoints?view=azure-devops&tabs=yaml | Get additional support for a variety of source control repositories - gopaddle supports GitHub Cloud & On-prem, GitLab Cloud & On-prem, and BitBucket Cloud. |
| Multi-Arch Build | Multi-arch build requires Linux agents along with a QEMU emulator. | Get support for multi-arch builds natively. gopaddle supports - |
| Deploy to K8s | Has integrations with Azure Kubernetes Service. Other Kubernetes services are supported through service connections (using username/password or token-based authentication) along with scripts that deploy to Kubernetes clusters using cloud-specific APIs, kubectl, or kube APIs. In either scenario, it requires scripting to deploy applications to Kubernetes. | Works seamlessly with Azure AKS, Google GKE, AWS EKS, HPE Ezmeral Runtime Enterprise, Huawei Kubernetes Service, MicroK8s, and any CNCF-compatible Kubernetes environment. |

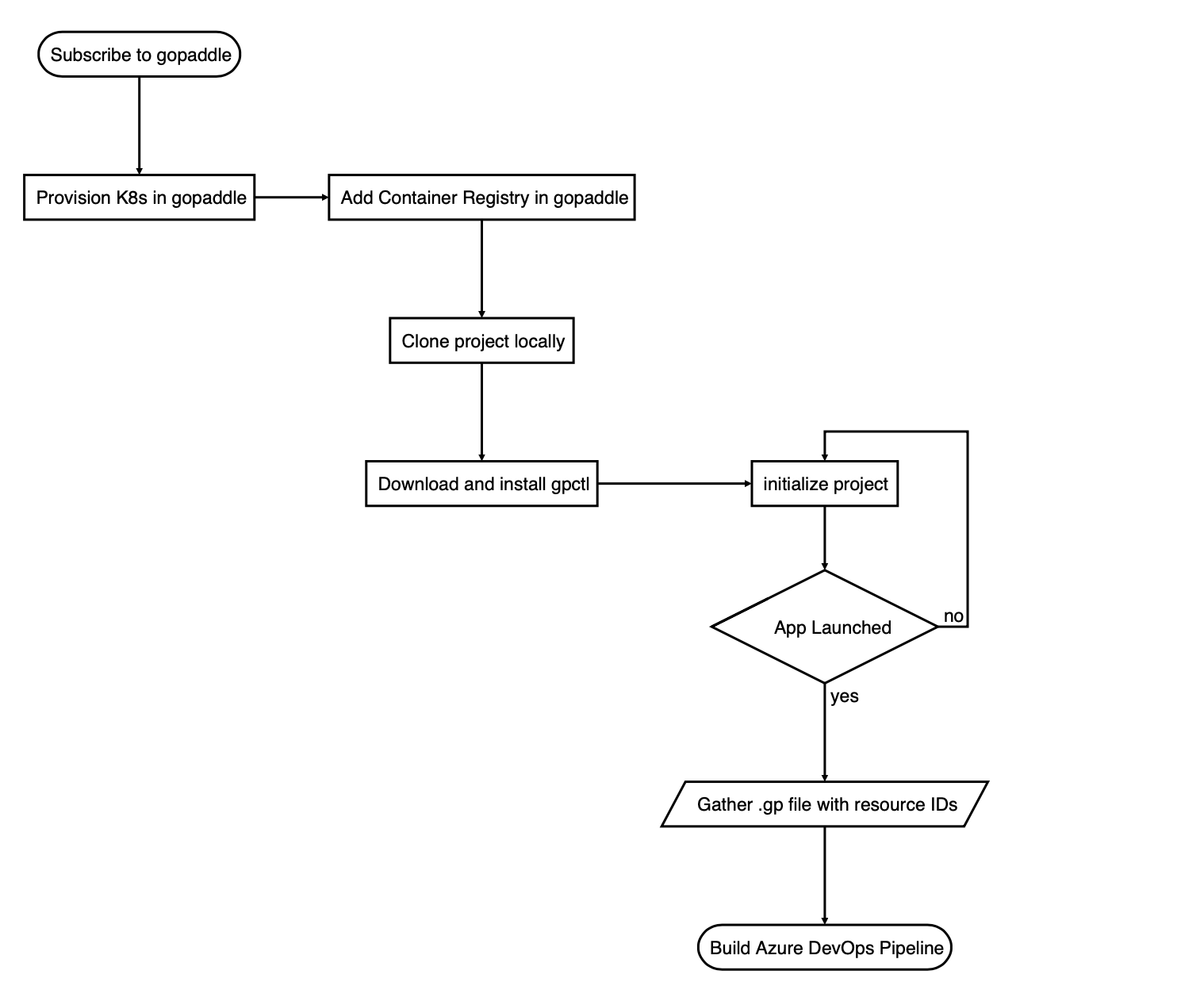

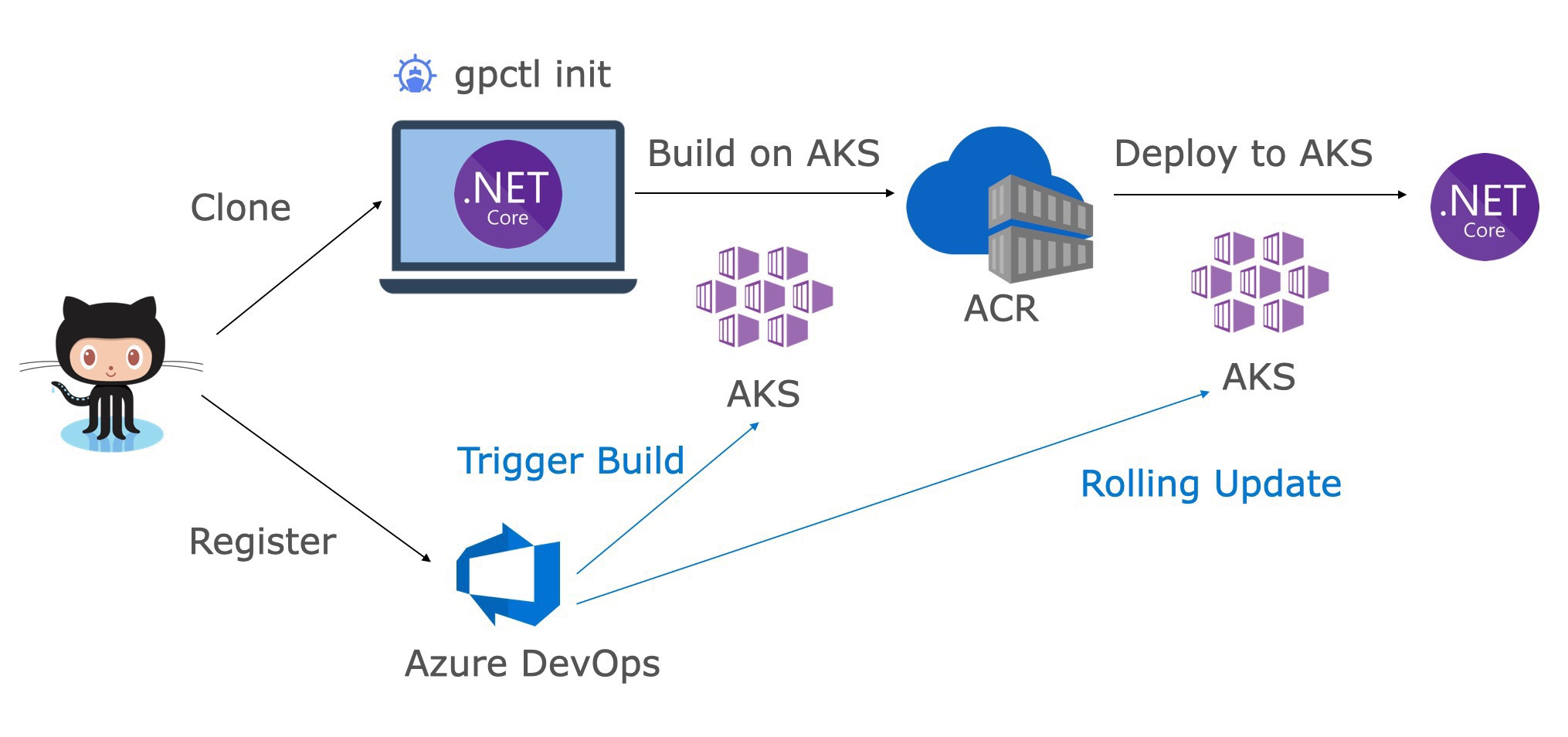

Workflow

Having explored the advantages of using gopaddle with the Azure DevOps pipeline, let us take a look at the process of integrating these two platforms. We will explore this in the context of a .NET Core application, eShopOnWeb, hosted in a GitHub repository. To build a pipeline for this application, we need to initialize the project and create Docker/Kubernetes artifacts like the Dockerfile and the Kubernetes YAML files. We can leverage gopaddle's intelligent scaffolding process to generate these artifacts.

The workflow below explains the step-by-step process of initializing the project.

a. Subscribe to gopaddle from here.

b. Provision a Kubernetes cluster: Follow the steps here to provision a Kubernetes cluster on AWS/Google/Azure. If you are provisioning a GKE cluster, the cluster needs at least the capacity specified below to build the eShopOnWeb container.

Type - N1-Standard-2

Disk - 40GB

Note: If you are using Azure Container Registry and AKS, then you need to register the Azure Cloud Account. Registering an Azure Cloud account requires Single-Sign-On (SSO) to your Azure Account. This requires an OAuth application to be created in your Azure Cloud Account, which can authenticate our SSO requests. You can find more information here on creating an OAuth application and registering an Azure Cloud account with gopaddle.

c. Create an Allocation policy in the gopaddle UI with the following minimum capacity for building the eShopOnWeb container:

CPU Requests - 500 millicore

CPU Limits - 1500 millicore

Memory Requests - 4 G

Memory Limits - 7 G

With the above node size and the allocation capacity, it takes approximately 4-5 mins to generate a Docker image. If you would like to speed up the build process, then you need to increase the node capacity and the allocation policy.
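For readers who think in Kubernetes terms, the allocation values above look much like container resource requests and limits. The sketch below prints such a fragment from the four values. It assumes that gopaddle translates an allocation policy into the standard Kubernetes `resources` fields, which this article does not state, and the function name `print_resources` is made up for illustration.

```shell
#!/bin/sh
# print_resources CPU_REQUEST CPU_LIMIT MEMORY_REQUEST MEMORY_LIMIT
# Prints a Kubernetes "resources" fragment for the given values.
# Assumption: the allocation policy maps onto these standard fields.
print_resources() {
  cat <<EOF
resources:
  requests:
    cpu: "$1"
    memory: "$3"
  limits:
    cpu: "$2"
    memory: "$4"
EOF
}

# Example with the minimum capacity listed above
# (writing "4 G" as the Kubernetes quantity "4G" is also an assumption):
#   print_resources 500m 1500m 4G 7G
```

Raising the limits here is the Kubernetes-level counterpart of increasing the allocation policy to speed up the build.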

d. Add a container registry to gopaddle by following the steps here. This registry will be used to push/pull the Docker images during the build and the deployment process.

e. Download and install gpctl command-line utility by following the steps here.

f. Fork and clone the repository locally.

git clone https://github.com/dotnet-architecture/eShopOnWeb

Note: We need to fork this project to our own GitHub account, as the Azure DevOps pipeline requires access to create a pipeline script in this repo.

g. Initialize the project from your local desktop: Create three scripts for building, starting, and checking the health of the application.

buildScript.sh

#!/bin/bash
cd src/Web
dotnet restore
dotnet publish -c Release -o out

startScript.sh

#!/bin/bash
cd src/Web/out
dotnet Web.dll

healthCheck.sh

#!/bin/sh
curl http://localhost:5000/
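The health check in healthCheck.sh makes a single request, and plain `curl` exits successfully even when the server answers with an HTTP error status. If a stricter check is wanted, one option is to retry with `curl --fail`, as in the minimal sketch below. The function names `probe` and `wait_for_health`, the retry counts, and the timeout are illustrative assumptions; whether gpctl benefits from a retrying health check is not covered here.

```shell
#!/bin/sh
# probe URL
# Succeeds only when the URL answers with a non-error HTTP status.
probe() {
  curl --silent --fail --output /dev/null --max-time 5 "$1"
}

# wait_for_health URL [ATTEMPTS] [DELAY_SECONDS]
# Retries the probe until it succeeds or the attempts run out.
wait_for_health() {
  hc_url=$1
  hc_attempts=${2:-10}
  hc_delay=${3:-3}
  hc_try=1
  while [ "$hc_try" -le "$hc_attempts" ]; do
    if probe "$hc_url"; then
      return 0
    fi
    # Pause only if another attempt is still to come.
    if [ "$hc_try" -lt "$hc_attempts" ]; then
      sleep "$hc_delay"
    fi
    hc_try=$((hc_try + 1))
  done
  echo "health check failed after $hc_attempts attempts: $hc_url" >&2
  return 1
}

# Example (same URL as healthCheck.sh):
#   wait_for_health http://localhost:5000/ 10 3
```

Retrying gives the application time to start listening on port 5000 before the check is declared a failure.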

h. Export the environment variables on your local machine.

export ASPNETCORE_ENVIRONMENT=Development

export ASPNETCORE_URLS=http://+:5000
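Before moving on, it can help to confirm that both variables are actually exported in the current shell. The following is a small sketch of such a check; the helper name `require_env` is hypothetical and is not part of gpctl.

```shell
#!/bin/sh
# require_env NAME...
# Returns non-zero, and names the culprits, if any variable is not exported.
require_env() {
  re_missing=0
  for re_name in "$@"; do
    # printenv only sees exported variables, which is what we want here.
    if ! printenv "$re_name" >/dev/null; then
      echo "environment variable not exported: $re_name" >&2
      re_missing=1
    fi
  done
  return "$re_missing"
}

# Example:
#   require_env ASPNETCORE_ENVIRONMENT ASPNETCORE_URLS
```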

i. Initialize the project using gpctl.

gpctl login --emailID=<emailId> --password=<password> --endPoint=https://portal.gopaddle.io

gpctl init --startScript=./startScript.sh --buildScript=./buildScript.sh --healthCheck=./healthCheck.sh

Note: During the init process, choose the Kubernetes cluster to build and deploy the application and choose the Docker registry to use for pushing the image.

Once the gpctl init completes, gopaddle creates a .gp file with a list of resource IDs that can be used to build the pipeline.
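Since gpctl init takes three script paths, a quick pre-flight check can catch a missing or mistyped file before the init run starts. The sketch below is one way to do that. The helper name `preflight_scripts` is hypothetical, and the executable-bit check is an assumption, since the article does not say whether gpctl requires it.

```shell
#!/bin/sh
# preflight_scripts FILE...
# Returns non-zero if any file is missing or not executable.
# Assumption: the scripts passed to gpctl init should be executable.
preflight_scripts() {
  pf_status=0
  for pf_file in "$@"; do
    if [ ! -f "$pf_file" ]; then
      echo "missing script: $pf_file" >&2
      pf_status=1
    elif [ ! -x "$pf_file" ]; then
      echo "not executable (try chmod +x): $pf_file" >&2
      pf_status=1
    fi
  done
  return "$pf_status"
}

# Example, run from the repository root before gpctl init:
#   preflight_scripts ./startScript.sh ./buildScript.sh ./healthCheck.sh
```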

Creating Azure DevOps Pipeline

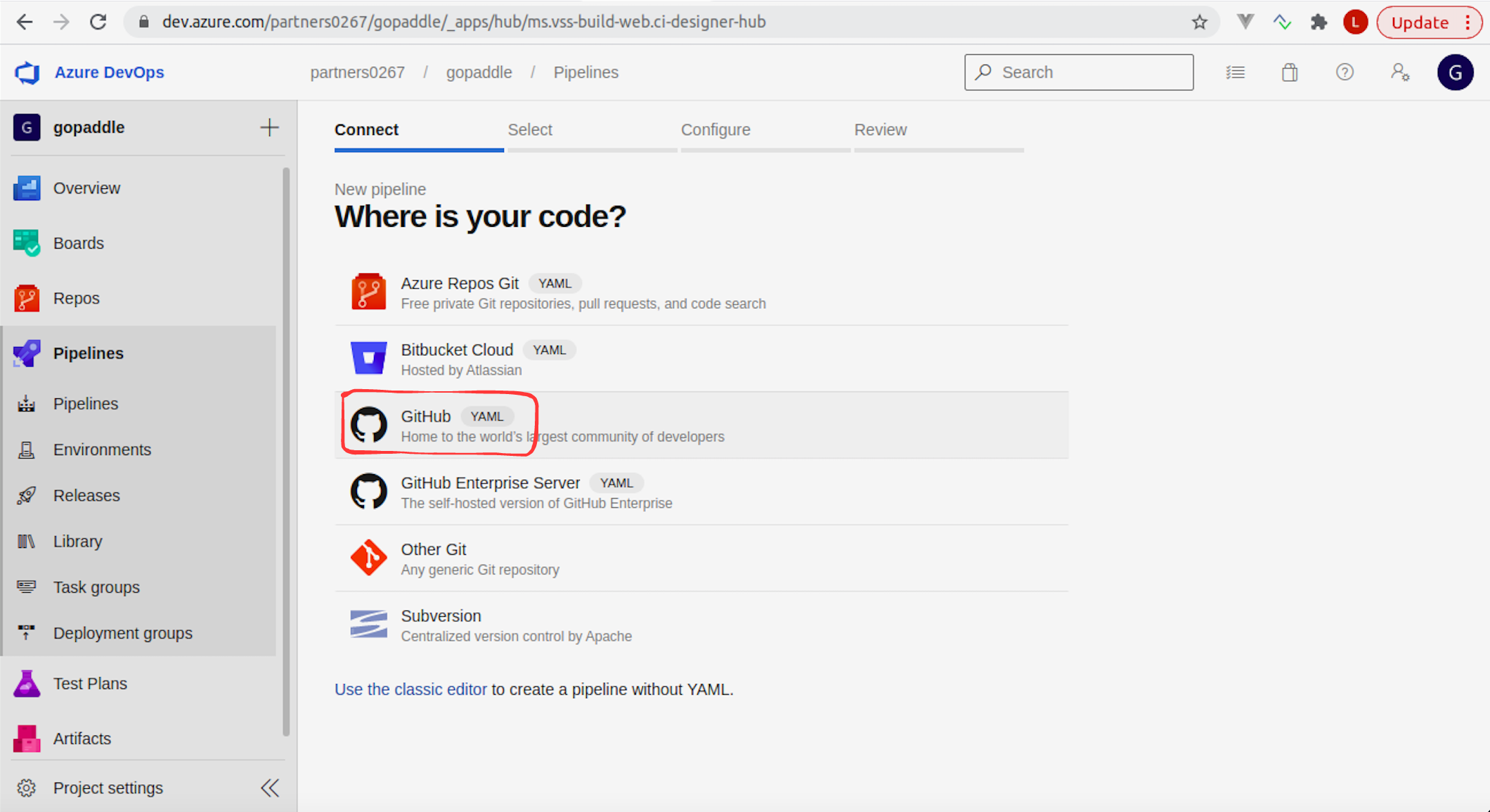

Now that we have initialized the project, it is time for us to create a pipeline in Azure DevOps.

From the Azure DevOps subscription, let us perform these steps.

a. Create a new pipeline.

b. Select the forked GitHub repository and the branch. Once you select the repo and the branch, you will be redirected to the GitHub sign-in page or, if you have already logged in to GitHub, you will see the list of repositories in your GitHub account. You can choose the repository and authenticate the request from Azure DevOps.

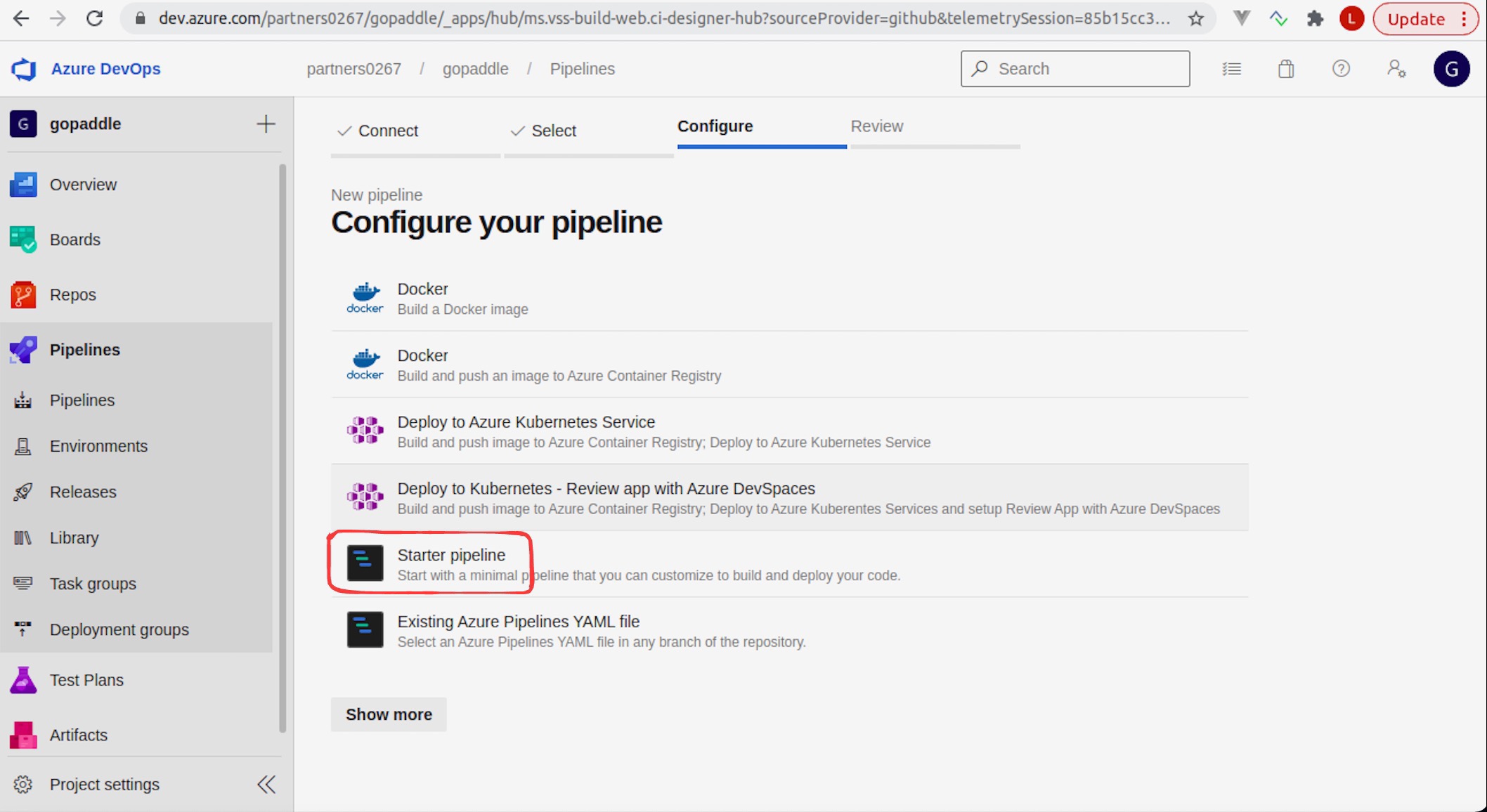

c. Create an Azure DevOps Pipeline of type 'Starter pipeline'.

Once the pipeline is created, a pipeline script file named azure-pipelines.yml gets added to the project root folder in the GitHub repository.

d. Edit this pipeline script and modify the contents based on the template script from here.

e. Create a secure variable for the gopaddle API token: under the Library option in the Azure DevOps portal, create a variable group named gp-api-key. Store the gopaddle API token in this group with the variable key GP_API_TOKEN and, as its value, the API token from the gpctl init output.

f. Replace the contents of the variables section in the pipeline script with the values from the .gp file generated during the gpctl init process.

A Closer Look at the Pipeline Script

The pipeline has two stages: build the application, and perform a rolling update once the build completes. The pipeline can be extended to include more steps, such as running a regression suite or sending an email to the project stakeholders.

As soon as code is committed to the project, Azure DevOps detects the change and triggers the pipeline. When the pipeline script is executed, it calls the gopaddle API to trigger a container build. The container build process builds the container image in the pre-configured Kubernetes cluster and pushes the Docker image to the container registry. The pipeline script waits until the build process is complete. The second stage picks up the build information from the previous step, such as the build ID and the commit description, and initiates a rolling update using the gopaddle API.
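The "wait until the build is complete" step is essentially a polling loop. The sketch below shows the general shape of such a loop. It is not the template script: `get_build_status` is a hypothetical placeholder for the actual gopaddle API call, and the status strings `Success` and `Failed`, the poll count, and the interval are all assumptions made for illustration.

```shell
#!/bin/sh
# HYPOTHETICAL placeholder: replace the body with the gopaddle API call
# from the template pipeline script. It must print the build status.
get_build_status() {
  echo "Running"
}

# wait_for_build [MAX_POLLS] [INTERVAL_SECONDS]
# Returns 0 on success, 1 on failure, 2 on timeout.
# The status strings "Success" and "Failed" are assumptions.
wait_for_build() {
  wb_max=${1:-60}
  wb_interval=${2:-10}
  wb_poll=1
  while [ "$wb_poll" -le "$wb_max" ]; do
    wb_status=$(get_build_status)
    case "$wb_status" in
      Success)
        return 0
        ;;
      Failed)
        echo "build failed" >&2
        return 1
        ;;
    esac
    sleep "$wb_interval"
    wb_poll=$((wb_poll + 1))
  done
  echo "timed out after $wb_max polls" >&2
  return 2
}
```

Returning distinct exit codes for failure and timeout lets the pipeline stop before the rolling update stage whenever the build did not finish successfully.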

Demo

You can find a brief demo of the pipeline integration:

As illustrated, the modernization process, which generates the Dockerfile and the Kubernetes YAML files, takes about 10 minutes (most of this time is spent building the container image and deploying the application), and creating the pipeline takes about another 10 minutes.

Through this simple integration of gopaddle and Azure DevOps pipelines, we get the benefit of modernizing the application and creating an end-to-end CI/CD pipeline.
