Classification With XGBoost Algorithm in a Database

Oracle 21c features support for the hot new ML algorithm on the block.

Join the DZone community and get the full member experience.

Join For FreeIn this article, we will look at how to apply the XGBoost algorithm, one of the most popular ensemble learner methods, in a database. Hopefully, it will be a useful study in terms of awareness.

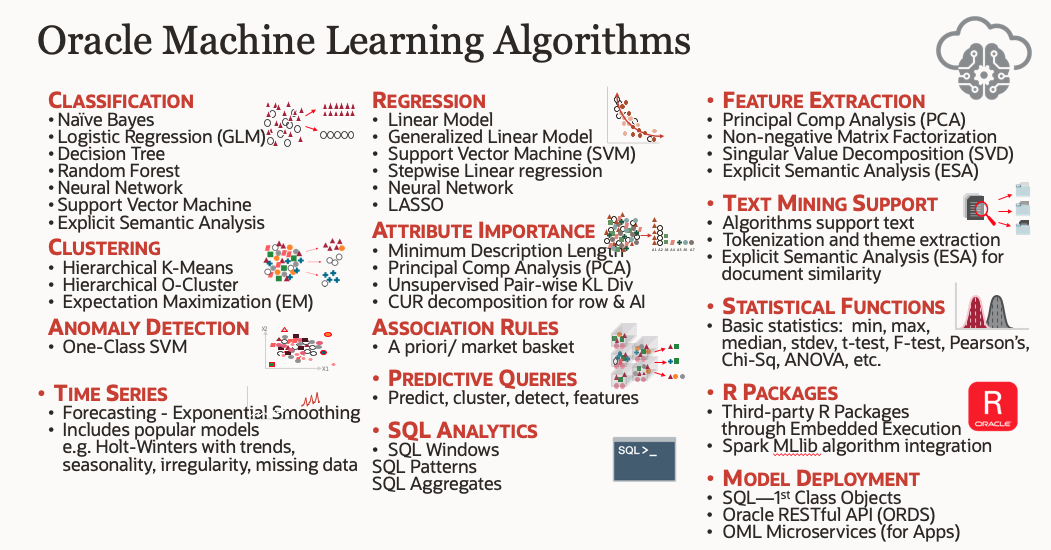

Advanced analytical applications can be developed using machine learning algorithms in Oracle database software since version 9i. As the database versions are renewed, new ones are added to these algorithm options. The current algorithm list that comes with Oracle 19c version is as follows.

With Oracle 21c, new algorithms have been added to this list. Undoubtedly, the most interesting of these algorithms was the XGBoost algorithm, one of the industry's most frequently used ensemble methods. XGBoost, which has proved its learning capacity with its success in ML competitions opened over Kaggle, is now ready for use in the Oracle database.

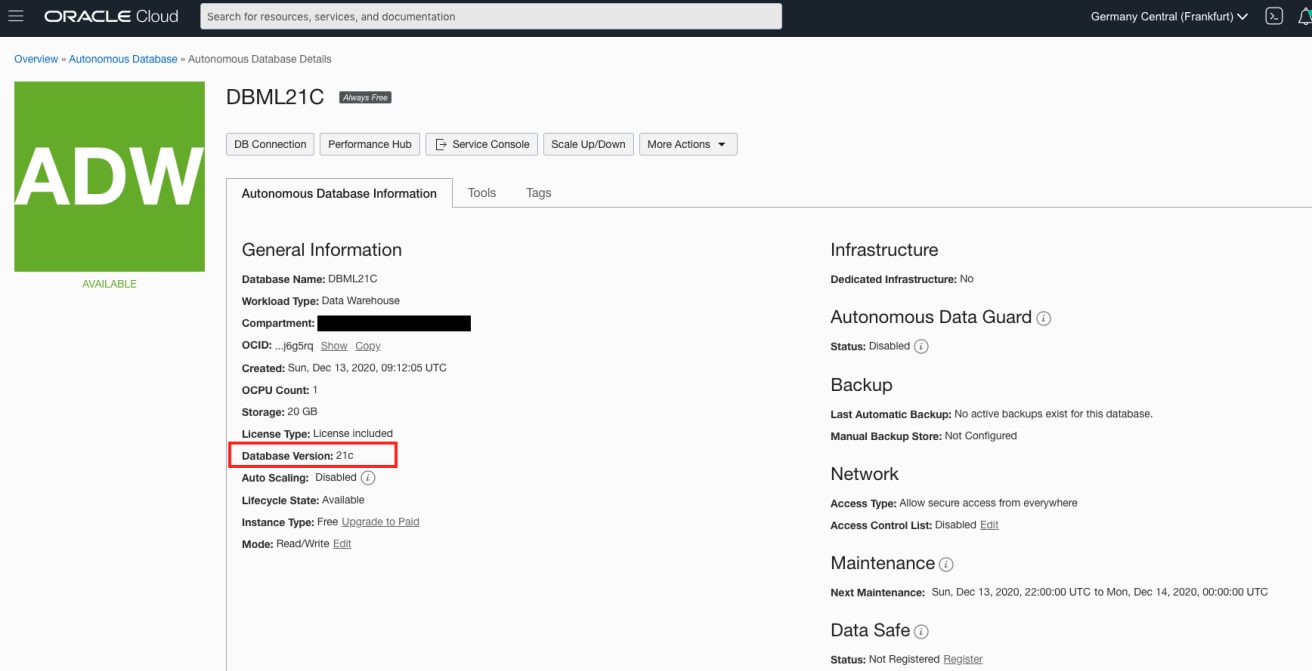

Let's create an XGBoost model with Oracle 21c and test how it is used. To do this test, I will revive an Autonomous Data Warehouse service that comes with Oracle 21c version on Oracle Cloud Infrastructure.

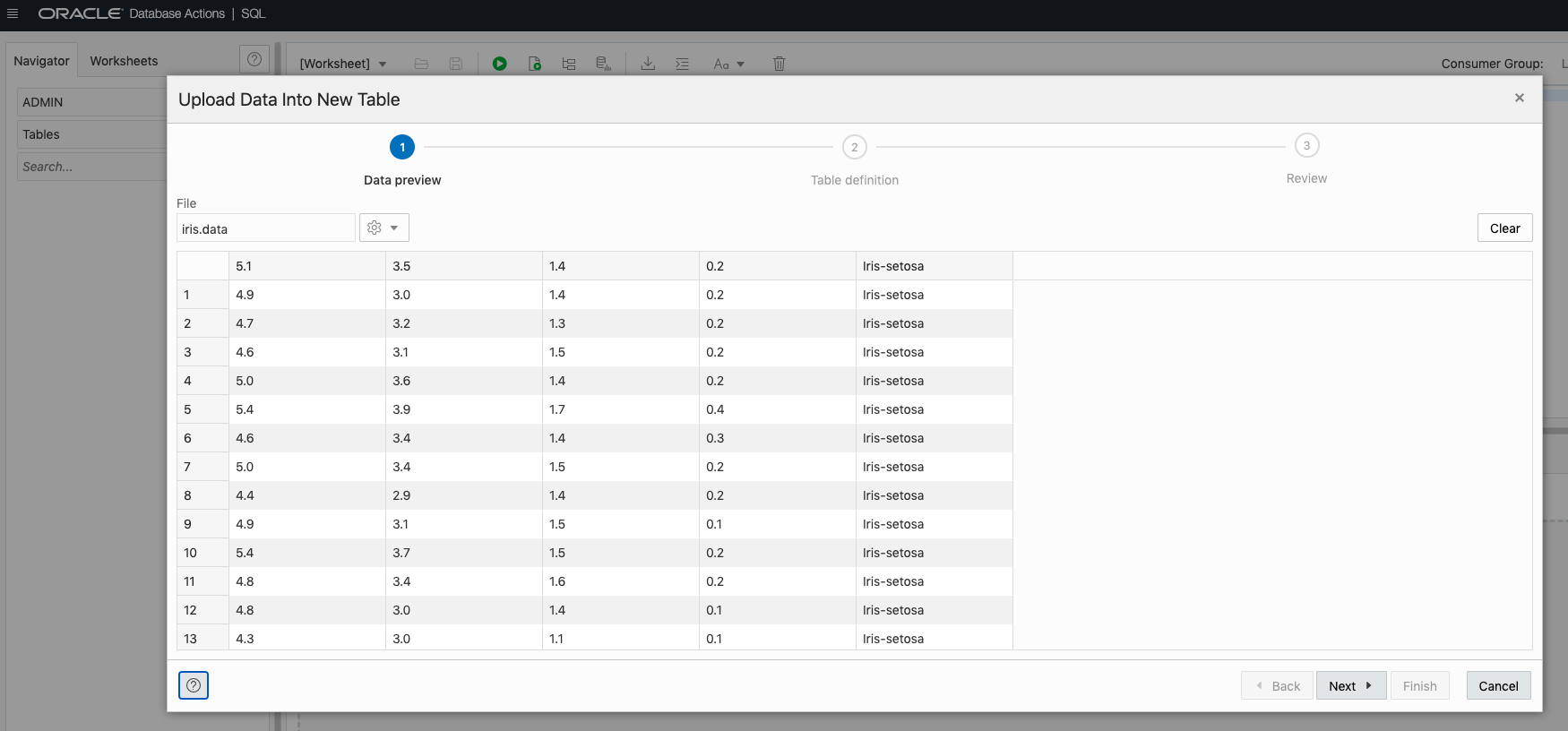

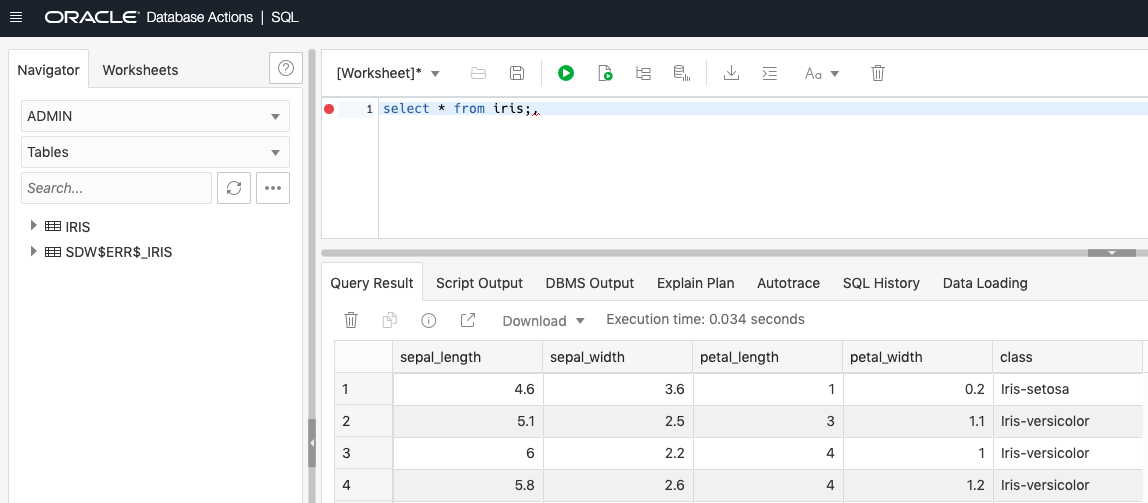

In this example, I will solve a classification problem, but it should not be forgotten that it will be done in regression with this infrastructure and algorithm. The dataset I will use will be Iris. After downloading the data set, I upload it to the database via the SQL Developer web interface.

CREATE TABLE ADMIN.IRIS

(

sepal_length FLOAT ,

sepal_width FLOAT ,

petal_length FLOAT ,

petal_width FLOAT ,

class VARCHAR2(4000)

) ;

We have loaded our data into the database, so now we can go to the editing part of our model.

We create a table to determine the algorithm parameters. We will write the parameters that will run the algorithm in this table to be used later.

xxxxxxxxxx

CREATE TABLE ADMIN.ALGO_VARIABLES (

SETTING_NAME VARCHAR2(4000),

SETTING_VALUE VARCHAR2(4000)

);

BEGIN

INSERT INTO ADMIN.ALGO_VARIABLES (setting_name, setting_value) VALUES

(dbms_data_mining.xgboost_objective, 'multi:softprob');

INSERT INTO ADMIN.ALGO_VARIABLES (setting_name, setting_value) VALUES

(dbms_data_mining.algo_name, dbms_data_mining.algo_xgboost);

INSERT INTO ADMIN.ALGO_VARIABLES (setting_name, setting_value) VALUES

(dbms_data_mining.xgboost_max_depth, '3');

INSERT INTO ADMIN.ALGO_VARIABLES (setting_name, setting_value) VALUES

(dbms_data_mining.xgboost_eta, '1');

INSERT INTO ADMIN.ALGO_VARIABLES (setting_name, setting_value) VALUES

(dbms_data_mining.xgboost_num_round, '5');

COMMIT;

END;

I have set my algorithm parameters. You can access detailed information about these parameters and options by following the link.

We loaded the data and set the values of our algorithm parameters. Now we can move on to the training phase.

xxxxxxxxxx

BEGIN

DBMS_DATA_MINING.CREATE_MODEL(

MODEL_NAME => 'XGBoost_Model',

MINING_FUNCTION => DBMS_DATA_MINING.CLASSIFICATION,

DATA_TABLE_NAME => 'iris',

CASE_ID_COLUMN_NAME => NULL,

TARGET_COLUMN_NAME => 'class',

SETTINGS_TABLE_NAME => 'algo_variables'

);

END;

SELECT *

FROM user_mining_model_attributes

WHERE model_name = 'XGBOOST_MODEL'

ORDER BY attribute_name;

Now let's move on to testing the model we developed. We can use the model in two ways. One of these might be to predict all the values in a table.

xxxxxxxxxx

select

PETAL_LENGTH,PETAL_WIDTH,SEPAL_LENGTH,SEPAL_WIDTH,

prediction (XGBOOST_MODEL using *) prediction

from iris_test t ;

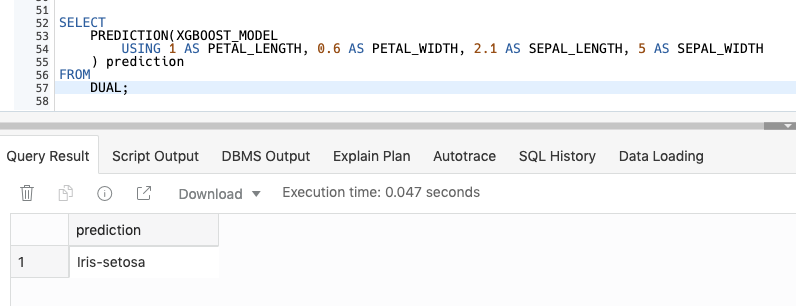

The other might be to guess for a single record.

Opinions expressed by DZone contributors are their own.

Comments