Choosing the Right Containerization and Cluster Management Tool

This comprehensive breakdown of ECS, Docker Swarm, Nomad, Kubernetes, DC/OS, and Rancher compares their pros and cons so you don't have to.


Choosing the right containerization and cloud computing cluster management tools can be a challenge. With so many on the market, deciding which one is right for your cluster computing architecture and workloads is hard.

It’s a decision organizations face regularly, so we researched the differences among leading tools, including Amazon Web Services (AWS) ECS, Kubernetes, Rancher, Docker Swarm, DC/OS, and Nomad, in an AWS infrastructure.

Each tool has a different function, but at a high level we can break them down as follows: ECS, Kubernetes, Docker Swarm, and Nomad are pure container/application schedulers, while Rancher and DC/OS are considered full infrastructure management platforms that can help organize and manage both containerized and standalone applications.

So, when should you use one over the other? What’s the best use case for each of these cluster management and container orchestration tools? Based on our research in an AWS environment, below are our findings and recommendations.

Containerizing Microservices With AWS ECS

Let’s start with ECS.

Since our test environment is hosted on AWS, we began our comparison of container orchestration and cluster management tools with EC2 Container Service (ECS).

A key objective here was to determine whether ECS could run multi-tenant workloads and use resources more efficiently than the existing microservices deployed on bare EC2. In this environment, each application (or microservice) used a full EC2 instance and shared no resources with other applications. As a result, approximately 60–70% of provisioned CPU/RAM capacity was idle (as seen via Amazon CloudWatch). To achieve better resource utilization and easier management, we planned to containerize the microservices and install an orchestration tool capable of handling service discovery, auto-scaling, secret management, and resource sharing.
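To make the consolidation idea concrete, here is a minimal Python sketch of the kind of bin packing a scheduler performs when it places several services on shared instances. It is not how ECS itself schedules tasks; the service names, resource figures, and instance size are hypothetical, and the first-fit-decreasing heuristic is chosen only because it is easy to follow.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """One shared instance; capacity is tracked in millicores and MiB."""
    cpu: int
    ram: int
    services: list = field(default_factory=list)

    def fits(self, cpu: int, ram: int) -> bool:
        return cpu <= self.cpu and ram <= self.ram

    def place(self, name: str, cpu: int, ram: int) -> None:
        self.cpu -= cpu
        self.ram -= ram
        self.services.append(name)


def pack(services: dict, host_cpu: int, host_ram: int) -> list:
    """First-fit decreasing: place the largest services first and
    open a new host only when no existing host has room."""
    hosts = []
    ordered = sorted(services.items(), key=lambda kv: kv[1], reverse=True)
    for name, (cpu, ram) in ordered:
        target = next((h for h in hosts if h.fits(cpu, ram)), None)
        if target is None:
            target = Host(host_cpu, host_ram)
            hosts.append(target)
        target.place(name, cpu, ram)
    return hosts


# Hypothetical microservices, each using roughly 30-40% of one instance
# (millicores, MiB), which mirrors the idle capacity described above.
demand = {
    "auth": (600, 1200),
    "billing": (800, 1600),
    "catalog": (700, 1400),
    "search": (800, 1500),
    "mailer": (600, 1300),
    "reports": (700, 1200),
}

hosts = pack(demand, host_cpu=2000, host_ram=4000)
print(f"{len(demand)} dedicated instances -> {len(hosts)} shared instances")
for number, host in enumerate(hosts, start=1):
    print(number, host.services)
```

With these made-up numbers, six dedicated instances collapse to three shared ones. A real scheduler also has to account for headroom, placement constraints, and failure domains.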

In the past, ECS did not support dynamic port mapping (a feature that lets you run several containers that listen on the same port on the same instance). As of 2016, however, this feature is available through the new AWS Application Load Balancer (which permits host-based routing by URL), which you can use with ECS. With this setup, random ports are generated automatically and added to the load balancer when a new ECS “service” is created.
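As an illustration of dynamic port mapping, the sketch below builds a task definition as a plain Python dictionary and prints it as JSON. It assumes the documented ECS convention that a `hostPort` of `0` in bridge network mode asks for a dynamically assigned host port. The family name, image name, port, and memory value are hypothetical placeholders.

```python
import json


def container_definition(name: str, image: str, container_port: int, memory_mib: int) -> dict:
    """Build one container definition that requests a dynamic host port."""
    return {
        "name": name,
        "image": image,
        "memory": memory_mib,
        "essential": True,
        "portMappings": [
            {
                "containerPort": container_port,
                # Assumption: hostPort 0 requests a dynamically assigned
                # host port, so several copies can share one instance.
                "hostPort": 0,
                "protocol": "tcp",
            }
        ],
    }


# Hypothetical service; the names and sizes are placeholders.
task_definition = {
    "family": "web-api",
    "networkMode": "bridge",
    "containerDefinitions": [
        container_definition("web-api", "example/web-api:1.0", 8080, 256)
    ],
}

print(json.dumps(task_definition, indent=2))
```

Because every copy of the container listens on the same container port but gets a different host port, the load balancer has to track the mapping for each task.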

Based on our experience, container management within the ECS web console remains troublesome, for the following reasons:

The interface displays many task and service GUIDs, which makes it somewhat cluttered, and you cannot manually remove instances from a pool (for maintenance and upgrades, for example).

You are also required to create new task definitions instead of modifying existing ones.

ECS also lacks a simple option to start rolling updates (gradual redeploy of a new Docker image).

The Bottom Line on ECS: Our conclusion is that ECS has merit if you’re running small and simple Docker environments. Once you scale to 20 or more microservices (or have a multi-tenant scenario with dev + test + staging + production environments per customer), it gets tricky to make everything work together seamlessly and to manage configurations, versions, rollbacks, and other operations.

We’d recommend ECS for simple “create and forget” use cases, for the simple reason that changing instances that have already been deployed is far from convenient. It involves submitting long JSON documents through the AWS command line interface or the web console. Plus, existing workloads can only be modified by creating a new task definition, which, if you modify deployments frequently, results in hundreds of definitions.
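The way definitions pile up can be shown with a small in-memory model. This is a stand-in for the behaviour described above, not the AWS API: the class and its methods are hypothetical, and only the `family:revision` naming follows the ECS convention.

```python
import copy


class TaskDefinitionRegistry:
    """Toy model: a definition is never edited in place, so every
    change is stored as a new numbered revision of its family."""

    def __init__(self):
        self._revisions = {}  # family -> list of stored snapshots

    def register(self, family: str, definition: dict) -> str:
        revisions = self._revisions.setdefault(family, [])
        revisions.append(copy.deepcopy(definition))
        return f"{family}:{len(revisions)}"

    def latest(self, family: str) -> dict:
        return copy.deepcopy(self._revisions[family][-1])

    def count(self, family: str) -> int:
        return len(self._revisions.get(family, []))


registry = TaskDefinitionRegistry()
registry.register("web-api", {"image": "example/web-api:1.0", "memory": 256})

# Changing only the image tag still means registering a whole new revision.
for build in range(1, 4):
    updated = registry.latest("web-api")
    updated["image"] = f"example/web-api:1.{build}"
    print(registry.register("web-api", updated))

print(registry.count("web-api"), "revisions after three small changes")
```

Three one-line changes leave four revisions behind, which is how frequent deployments end up producing hundreds of definitions.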

Docker Swarm vs. Nomad

Next, we reviewed Docker Swarm and Nomad, two similar container orchestration tools that seem less complex than others.

Nomad (by HashiCorp) is a single binary that acts as both master node and client. It’s a scheduler and resource allocator that doesn’t need external services to save its state (this is stored on the master nodes). Running several master nodes makes it highly available, with automatic failover. Nomad also features management abstractions that allow you to divide a cluster logically into data centers, regions, and availability zones.

However, Nomad has some drawbacks:

It lacks service discovery, so an additional tool, such as Consul, is required. Consul must be installed alongside Nomad on each host in the cluster.

Nomad does not have secret management or a custom key/value store. Integration with Vault or etcd is necessary to keep application secrets and any arbitrary data needed by your distributed services.

Unfortunately, it can be challenging to configure, manage, and monitor each of these separate systems; collect logs; recover from failures; or upgrade them (even a single Vault upgrade is a painful project).

The open source version of Nomad lacks a GUI or dashboard like those offered by Kubernetes, Rancher, and DC/OS. This limits your ability to quickly glance at the state of hosts and services. Plus, everything must be managed by automated scripts or the command line interface. A Nomad UI is expected to be released as a part of HashiCorp Atlas (the paid version), though, as of August 2016, a planned beta release date hadn’t been disclosed. There is, however, a community-developed Nomad UI.

Despite the availability of these integration tools, we do not recommend basing your production workloads on Nomad when great open source, feature-rich options such as Kubernetes exist. However, there are scenarios in which Nomad can make sense. For example, if you already rely heavily on Consul and maybe use Vault, then adding Nomad to your infrastructure may be a better choice than setting up Kubernetes, Mesos, or Rancher.

Next is Nomad’s close relation, Docker Swarm. According to its website, Docker Swarm provides native clustering capabilities to turn a group of Docker engines into a single, virtual Docker Engine. Swarm structures your cluster similarly to Nomad: several master nodes for failover, with the other hosts connected as workers. You can use “docker-compose” to launch complete interconnected Docker environments with many containers, describing them in YAML files.

With the Docker 1.12 release, Swarm is built into Docker Engine itself, and it is the native way to run a cluster of “Dockerized” applications.

A nice feature of Swarm is that it provides automatic TLS encryption between nodes and masters, plus you get a default 90-day certificate rotation out-of-the-box. This enables nodes to automatically renew their certificates with masters.
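The arithmetic behind a 90-day rotation window is simple enough to sketch. The code below only illustrates the idea and is not Swarm's implementation: the issue date is made up, and the rule of renewing once half of the lifetime has elapsed is an assumption chosen for the example.

```python
from datetime import datetime, timedelta, timezone

ROTATION_PERIOD = timedelta(days=90)  # the default rotation window cited above


def expires_at(issued: datetime) -> datetime:
    """A certificate issued at `issued` is valid for one rotation period."""
    return issued + ROTATION_PERIOD


def needs_renewal(issued: datetime, now: datetime, renew_fraction: float = 0.5) -> bool:
    """Hypothetical rule: renew once `renew_fraction` of the lifetime has passed."""
    return now - issued >= ROTATION_PERIOD * renew_fraction


issued = datetime(2016, 8, 1, tzinfo=timezone.utc)  # made-up issue date
print(expires_at(issued).date())  # 2016-10-30
print(needs_renewal(issued, issued + timedelta(days=44)))  # False
print(needs_renewal(issued, issued + timedelta(days=45)))  # True
```

Renewing well before expiry gives a node time to retry if a master is briefly unreachable.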

Swarm also gets another check in the box because creating overlay networks is easier than with Nomad or Kubernetes; the latter requires a network plugin such as Weave or Calico installed on each host. With Swarm, you can also schedule containers to particular labeled nodes (as Kubernetes does), as described here.
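Scheduling to labeled nodes boils down to filtering hosts by key/value labels. The following is a minimal sketch of that filtering step, independent of any particular orchestrator; the node names and labels are hypothetical.

```python
def eligible_nodes(nodes: dict, constraints: dict) -> list:
    """Return the names of nodes whose labels satisfy every
    `key == value` constraint, in insertion order."""
    return [
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in constraints.items())
    ]


# Hypothetical cluster inventory.
nodes = {
    "node-1": {"disk": "ssd", "zone": "us-east-1a"},
    "node-2": {"disk": "hdd", "zone": "us-east-1a"},
    "node-3": {"disk": "ssd", "zone": "us-east-1b"},
}

print(eligible_nodes(nodes, {"disk": "ssd"}))                        # ['node-1', 'node-3']
print(eligible_nodes(nodes, {"disk": "ssd", "zone": "us-east-1b"}))  # ['node-3']
```

A real scheduler would then rank the eligible nodes by free capacity before picking one.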

Swarm does have a few cons:

Like the open source version of Nomad, Swarm lacks a useful UI, except for a few basic visualizers developed by the community that only show which container is deployed to which node.

Swarm needs an external back-end to store service discovery records. Currently supported back-ends include Consul, etcd, ZooKeeper, and a plain-text file mode.

The Bottom Line on Nomad and Docker Swarm: Swarm and Nomad get a big check in the box for the simplicity of running small development-only servers or small clusters (with no security or service discovery concerns in mind). With these tools, you can begin running containerized environments in very little time, on your local machine or on a development server.

Another plus is that these tools are reported to scale to thousands of nodes without a noticeable performance impact (Nomad documentation states it was tested on up to 5,000 nodes, while Swarm was tested on 1,000 nodes with 50,000 containers). If you don’t make frequent changes to your infrastructure and your application is not highly interconnected, you can run massive, but simple, workloads successfully.

Kubernetes vs. DC/OS vs. Rancher

Now let’s have a look at Kubernetes, DC/OS (Mesosphere), and Rancher — each of which is complementary, yet distinct.

Let’s start with Kubernetes, a pure container/application scheduler, and then we’ll move on to Rancher and DC/OS which are more comprehensive infrastructure management platforms that can also support containerized and standalone applications.

Skipping the history and origins of Kubernetes, which most of us are familiar with, let’s get straight to the point of what can be done with Kubernetes in its current incarnation — 1.4.

As mentioned earlier in our comparison of Swarm and Nomad, Kubernetes wins out in terms of its UI. Feature-rich and robust, the dashboard gives you the ability to:

Launch new containers

Create services (load balancers that will route traffic to the correct containers)

Manage deployments (run rolling updates, replace a current application Docker image individually or in batches)

Scale deployments up or down

Manage secrets and config maps

Manage persistent storage volumes used by containers

View running batch jobs and daemon sets (containers that run on every worker node)

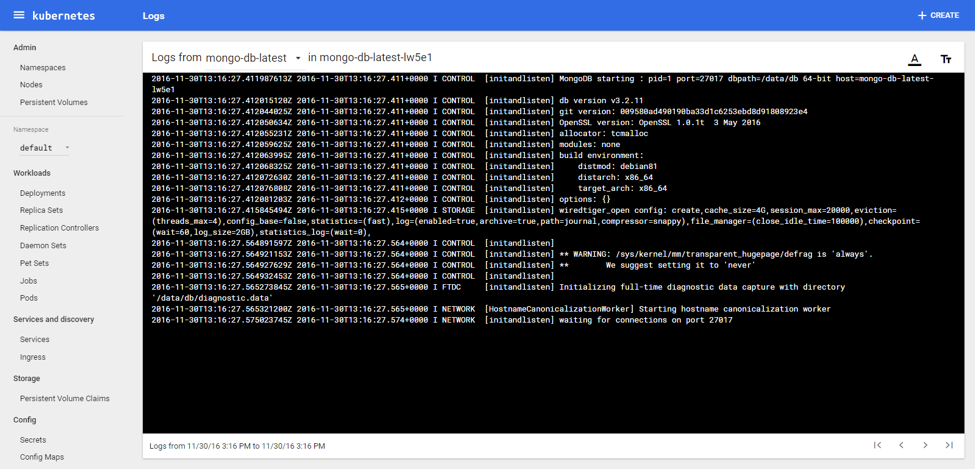

View each container log using easy pagination

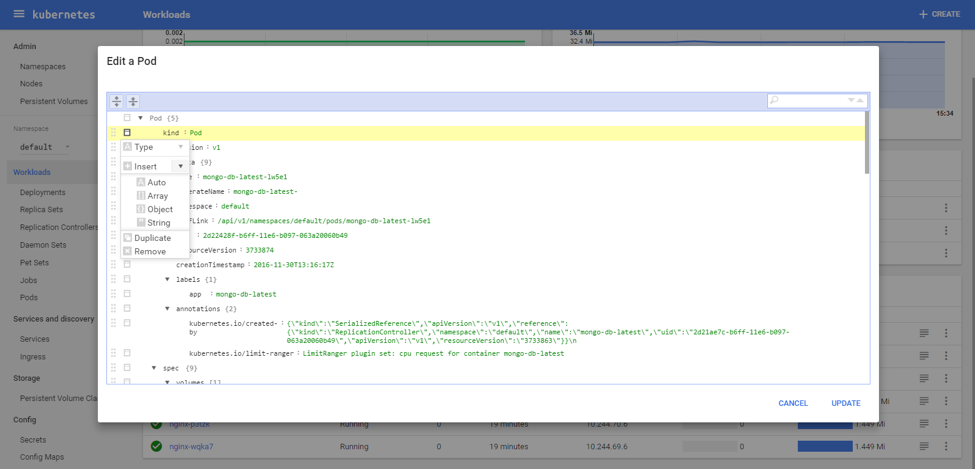

Easily view, edit, and update the YAML definitions of your services and containers

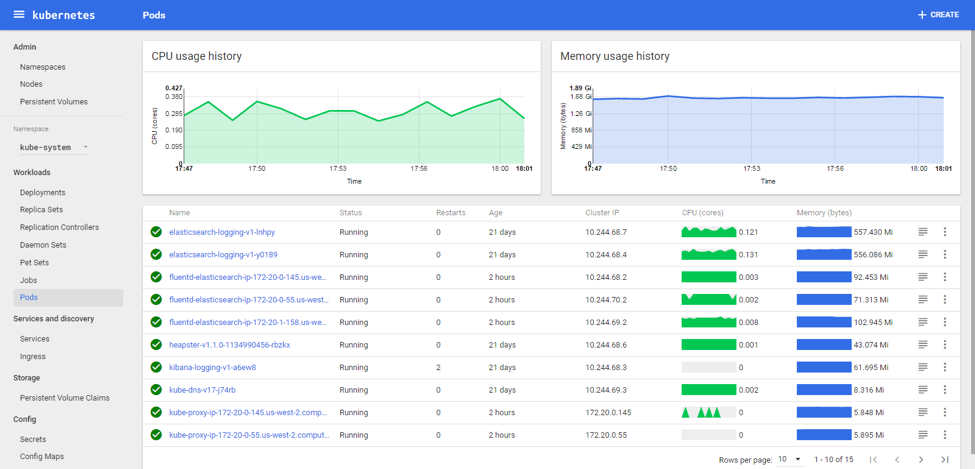

Scale the number of containers

Adjust CPU/RAM minimal requirements

(Kubernetes UI demo: Reading through the logs of MongoDB container directly in a browser)

(Kubernetes UI demo: view resource utilization and extra details of all pods in a namespace)

(Kubernetes UI demo: edit any pod, deployment, replica set, config map or service YAML definition easily in the browser)
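The rolling updates mentioned in the dashboard list above (replacing an image individually or in batches) can be sketched in a few lines of Python. This is a deliberately simplified model and not Kubernetes code: it swaps image tags in a list and ignores the health checks and surge limits a real deployment controller applies. The image names are hypothetical.

```python
def rolling_update(replicas: list, new_image: str, batch_size: int = 1):
    """Replace images in place, `batch_size` replicas at a time,
    yielding a snapshot of the fleet after each batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(replicas), batch_size):
        for index in range(start, min(start + batch_size, len(replicas))):
            replicas[index] = new_image
        yield list(replicas)


fleet = ["app:1.0"] * 5
for step, snapshot in enumerate(rolling_update(fleet, "app:1.1", batch_size=2), start=1):
    print(step, snapshot)
```

With five replicas and a batch size of two, the update finishes in three steps, and old and new versions serve traffic side by side until the last batch completes.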

Kubernetes provides a framework that covers most operational needs. A cluster can be installed in multi-master mode for high availability, and all state is kept in a distributed etcd store that can be backed up. It also includes options to install log collectors and Elasticsearch, Grafana, and Kibana for log aggregation from containers and hosts. If you’re on Google Compute Engine, logs can also be sent to Google Cloud Monitoring.

One of the most important features that we noted is the network separation between containers. In Kubernetes, there is a concept of “namespaces” which logically divide your deployments, services, and everything else. By default, all containers can talk to each other with no restrictions. To separate access between namespaces you’ll need to install one of the overlay network plugins like Calico, Weave, or Flannel. You can find a full list of supported plugins here.
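For illustration, the sketch below generates a network policy manifest that only admits traffic from pods in the same namespace. Two assumptions apply: it uses the `networking.k8s.io/v1` API of current Kubernetes releases rather than the API available in version 1.4, and it only has an effect when the installed network plugin enforces network policies. The policy name and namespace are hypothetical.

```python
import json


def same_namespace_only(namespace: str) -> dict:
    """Build a NetworkPolicy that selects every pod in `namespace`
    and allows ingress only from pods in that same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",  # assumption: current API group
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector: applies to all pods here
            "policyTypes": ["Ingress"],
            # A bare podSelector under "from" matches pods in the
            # policy's own namespace only.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


print(json.dumps(same_namespace_only("staging"), indent=2))
```

The printed JSON can be saved to a file and applied like any other manifest, since Kubernetes accepts JSON as well as YAML.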

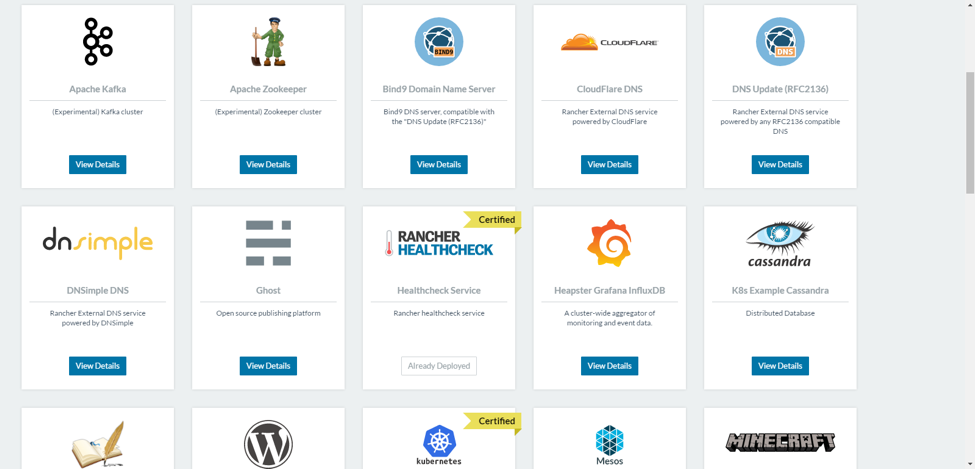

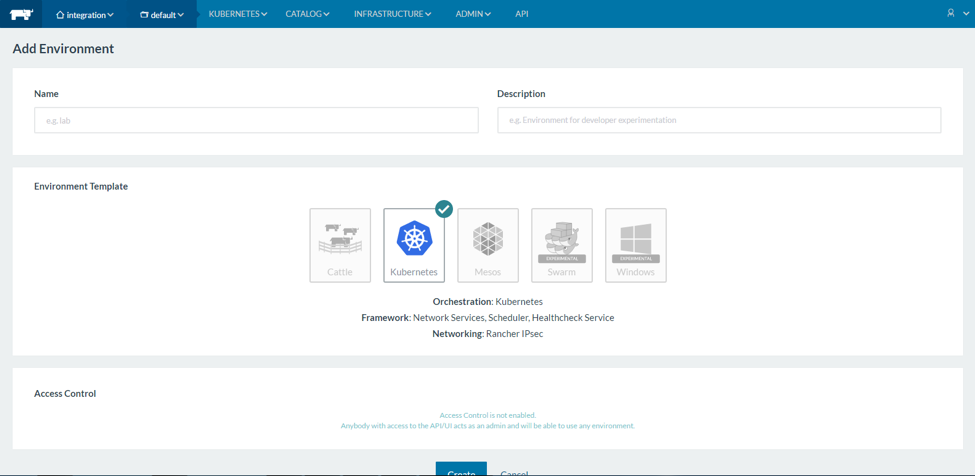

Next are the Rancher and DC/OS infrastructure platforms, both of which are open source and come in enterprise and community versions. As long as they are actively developed and maintained, these frameworks, with their rich web GUIs and multiple plugins and features, can handle the management of complex environments and multi-cloud setups. Both can also be plugged into other container schedulers such as Docker Swarm, Kubernetes, and Apache Mesos (if you need additional features beyond what their built-in schedulers provide). If Kubernetes is your preference for container management, you can install it on top of Rancher and benefit from all the tools in the Kubernetes ecosystem and Rancher at the same time. Moreover, it’s possible to launch separate environments of Swarm, Mesos, Kubernetes, and Cattle (the scheduler in Rancher) directly from the Rancher web UI with the click of a button. The latest release, Rancher 1.3, introduced experimental support for Windows-based environments.

If the concept of the DC/OS and Rancher platforms seems similar to you, you’re not alone. Both have a catalog of applications, like Elasticsearch, Hadoop, Spark, Redis, MongoDB, etc., that can easily be installed directly on top of your infrastructure from the management web UI (screenshot below).

The applications that can be installed from the catalog depend on the orchestration platform chosen for the current Rancher environment. You might see a “not compatible” label on a button, which means the item works only with another orchestration engine. You can always create an additional “environment” and select another orchestration tool, which is a great advantage of using Rancher as a “base” for your infrastructure.

In DC/OS, this catalog is named “Universe” and is also available through the command line interface tool, “dcos.” After installing an app from the catalog, you will have the option to upgrade it once a new version is released and becomes available in the catalog. This upgrade is an easy operation from the web UI. (Note: Kubernetes has a similar catalog and package manager, called “helm,” but it’s in its infancy and not yet integrated into the UI. Items in its catalog are named “charts,” which are manifests describing an application’s installation and configuration; you can write them yourself or download and run them from the official repository.)

Despite these catalog-based similarities, DC/OS and Rancher differ from other container orchestration systems, like Kubernetes, which is why we’ve separated them out.

Let’s get back to a comparison.

Of note in evaluating Rancher is its network visualizer tool, which shows how your containers and services connect with each other. Rancher also provides easy separation between clusters. Just switch between your Cattle / Kubernetes / Swarm environments in the menu, add worker hosts to a particular cluster, and run the needed workloads in no time. Forget the time-consuming manual installation of Kubernetes and Mesos; Rancher or DC/OS will do the heavy lifting for you. In addition to its pretty UI, Rancher also offers important enterprise-grade features such as an audit log, a certificate store, and role-based user authorization. You can also configure access control through Active Directory, Azure AD, GitHub, and other authentication providers to give your developers or DevOps team members fine-grained access to particular clusters and resources.

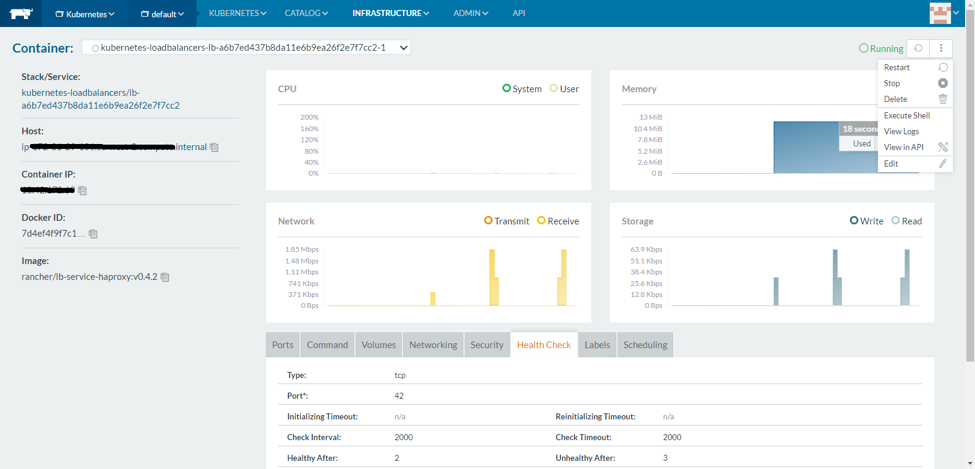

Below are a few additional screenshots of Rancher UI:

(Rancher UI demo: creating environment will launch all needed components of the orchestration system)

(Rancher UI demo: inspect any single container in detail, view its logs with “View Logs” from a menu in the top right corner, or directly open console to type commands via the “Execute Shell” option)

Moving on to DC/OS.

DC/OS is based on Apache Mesos and Marathon, which serve as its default resource allocator (Mesos) and scheduler (Marathon). However, you can also install Kubernetes, which is officially supported through the DC/OS catalog. Read more about Mesos and Kubernetes interoperability here.

When comparing Rancher and DC/OS, it becomes apparent that the community version of DC/OS is very limited in features (view a comparison here): important functions like multi-tenancy, encryption, and user authorization modules aren’t present. Compare this with the Rancher community version, which includes all the same features as the enterprise version simply because they are the same product. The downloadable version is both the community and the enterprise version; the only difference is whether you choose to purchase a support option.

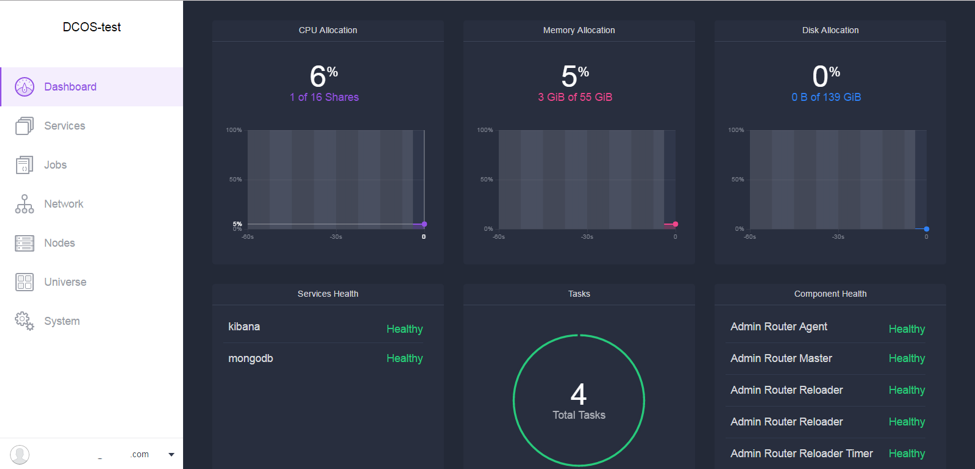

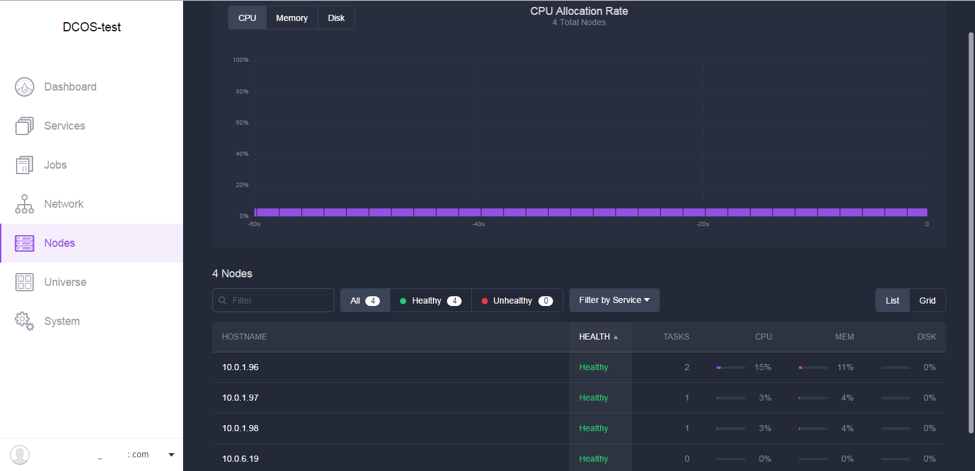

Here are a few screenshots of DC/OS web UI:

(DC/OS web UI demo: main dashboard shows overall status of the cluster)

(DC/OS web UI demo: inspect resource utilization on the “Nodes” tab)

In Conclusion

In summary, while each tool is a good option for running various workloads, much depends on your current environment. For small clusters running on AWS, ECS may be sufficient; just review the documentation to understand which features are available.

For serious, future-proof production clusters, we’d recommend Kubernetes as one of the most actively developed, feature-rich, and widely used platforms on the market.

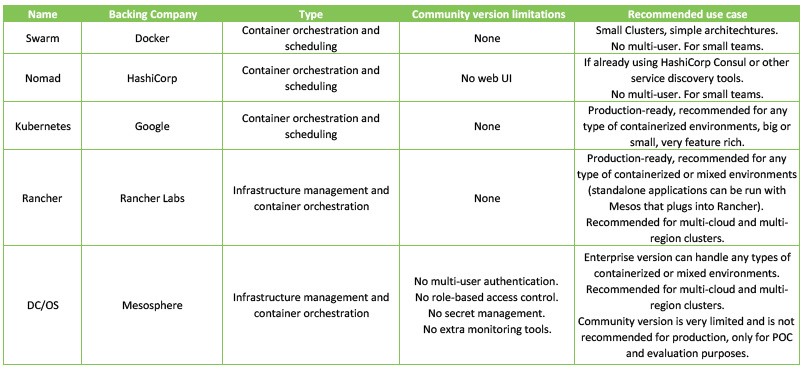

The accompanying matrix offers a quick comparison and the ideal use case for each tool mentioned.


Published at DZone with permission of Slava Koltovich. See the original article here.
