AWS ELB Log Analysis with the ELK Stack

ELB logs are a valuable resource to have on your side. But it is being able to aggregate and analyze them with ELK that really allows you to benefit from the data.


Elastic Load Balancers (ELBs) allow AWS users to distribute traffic across EC2 instances. ELB access logs are one of the options users have to monitor and troubleshoot this traffic.

ELB access logs record information about every request that passes through your load balancers. This data includes where the ELB was accessed from, which backend instances handled each request, details about the requester's client (such as the operating system and browser, taken from the user agent), and additional metrics such as processing times and traffic volume.

Here is an example of an ELB access log entry:

```
2017-03-09T21:59:57.543344Z production-site-lb 54.182.214.11:6658 172.31.62.236:80 0.000049 0.268097 0.000041 200 200 0 20996 "GET http://site.logz.io:80/blog/kibana-visualizations/ HTTP/1.1" "Amazon CloudFront"
```

ELB logs can be used for a variety of use cases: monitoring access, checking the operational health of your load balancers, and measuring how efficiently they operate, to name a few. In the context of operational health, you might want to determine whether traffic is being distributed evenly among all backend servers. For operational efficiency, you might want to identify the volume of requests arriving from different locations around the world.
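To make the field layout concrete, here is a minimal Python sketch that splits an entry like the one above into named, typed fields. It assumes the Classic Load Balancer format shown here (IPv4 client and backend addresses, an optional SSL cipher and protocol at the end) and reuses the field names from the Logstash grok pattern later in this article; `parse_elb_line`, `ELB_LINE`, and `SAMPLE` are illustrative names, not part of any AWS library.

```python
import re

# Classic Load Balancer access log layout (assumed: IPv4 addresses, optional
# trailing SSL cipher/protocol). Field names follow the Logstash grok pattern.
ELB_LINE = re.compile(
    r'(?P<timestamp>\S+) (?P<loadbalancer>\S+) '
    r'(?P<client_ip>[^\s:]+):(?P<client_port>\d+) '
    r'(?:(?P<backend_ip>[^\s:]+):(?P<backend_port>\d+)|-) '
    r'(?P<request_processing_time>-?[\d.]+) '
    r'(?P<backend_processing_time>-?[\d.]+) '
    r'(?P<response_processing_time>-?[\d.]+) '
    r'(?P<elb_status_code>\d+|-) (?P<backend_status_code>\d+|-) '
    r'(?P<received_bytes>\d+) (?P<sent_bytes>\d+) '
    r'"(?P<verb>\S+) (?P<request>\S+) (?P<http_version>[^"]*)" '
    r'"(?P<user_agent>[^"]*)"'
    r'(?: (?P<ssl_cipher>\S+) (?P<ssl_protocol>\S+))?$'
)

INT_FIELDS = ("client_port", "backend_port", "elb_status_code",
              "backend_status_code", "received_bytes", "sent_bytes")
FLOAT_FIELDS = ("request_processing_time", "backend_processing_time",
                "response_processing_time")


def parse_elb_line(line):
    """Return a dict of typed fields, or None if the line does not match."""
    match = ELB_LINE.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    for field in INT_FIELDS:
        value = record[field]
        # "-" (or a missing backend) becomes None instead of a number.
        record[field] = None if value in (None, "-") else int(value)
    for field in FLOAT_FIELDS:
        record[field] = float(record[field])
    return record


SAMPLE = ('2017-03-09T21:59:57.543344Z production-site-lb 54.182.214.11:6658 '
          '172.31.62.236:80 0.000049 0.268097 0.000041 200 200 0 20996 '
          '"GET http://site.logz.io:80/blog/kibana-visualizations/ HTTP/1.1" '
          '"Amazon CloudFront"')

if __name__ == "__main__":
    record = parse_elb_line(SAMPLE)
    print(record["client_ip"], record["elb_status_code"], record["sent_bytes"])
```

Running it prints the client IP, ELB status code, and bytes sent for the sample entry. Lines that do not fit the assumed layout (for example, IPv6 clients) return None rather than raising.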

AWS allows you to ship ELB logs into an S3 bucket, and from there you can ingest them using any platform you choose. This article describes how to use the ELK Stack (Elasticsearch, Logstash, and Kibana) to index, parse, and visualize the data logged by your ELB instances.

Enabling ELB Logging

By default, ELB access logging is turned off, so your first step is to enable it.

Before you do so, you will need to create a bucket in S3 and make sure it has the correct policy, as described in the AWS documentation:

```json
{
  "Id": "Policy1489048269282",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1489048264236",
      "Action": [
        "s3:PutObject"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::daniel-elb/AWSLogs/011173820421/*",
      "Principal": {
        "AWS": [
          "127311923021"
        ]
      }
    }
  ]
}
```

Then, in the EC2 console, open the Load Balancers page and select your load balancer from the list.

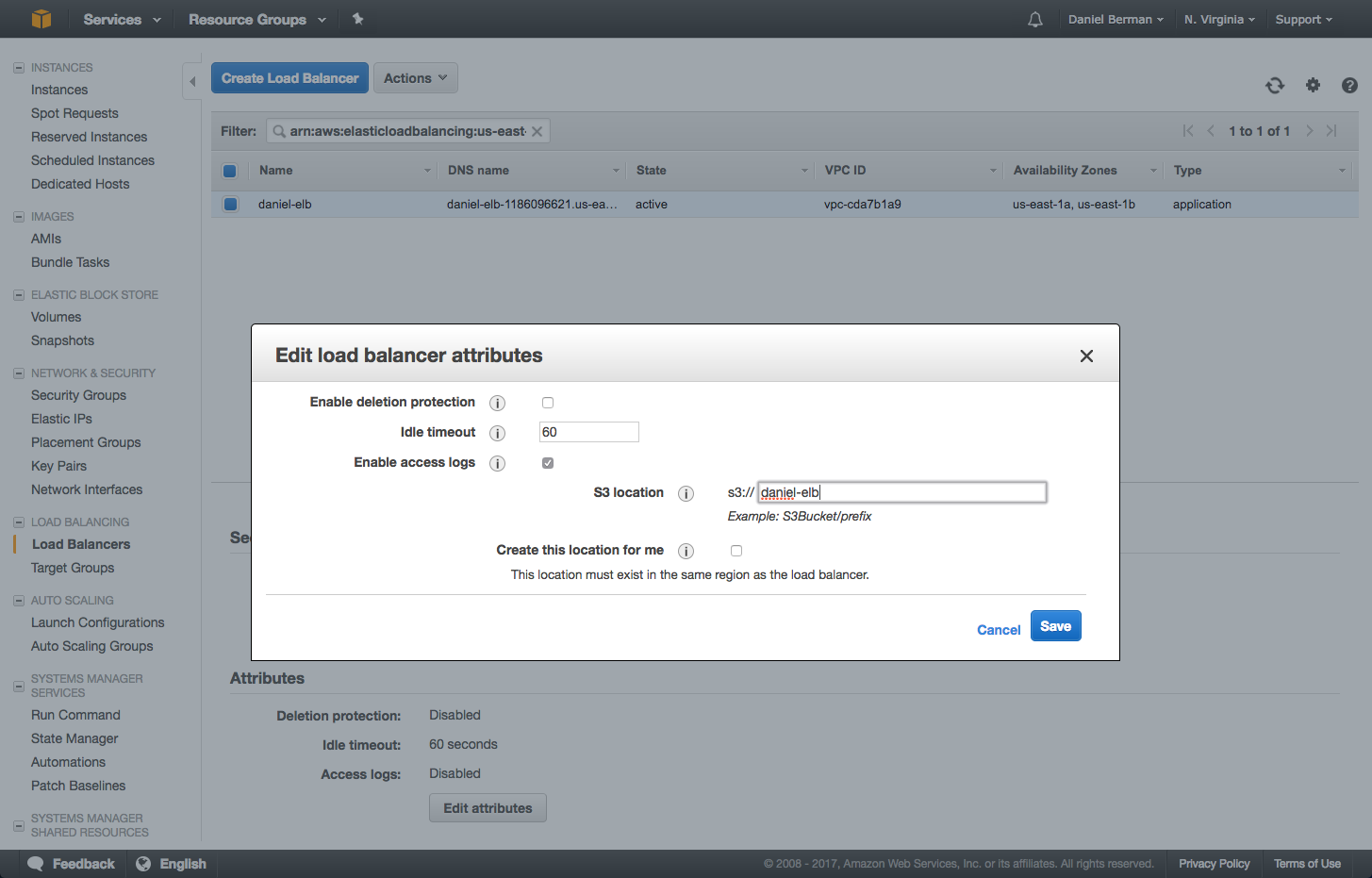

In the Description tab below, scroll down to the Attributes section and click Edit. In the dialog that pops up, enable access logs and enter the name of the S3 bucket to which you want to ship the logs.
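The bucket policy and the console steps above can also be scripted. The sketch below is an assumption-laden illustration: the helper names are hypothetical, the actual AWS calls are expected to go through boto3's Classic ELB client (`boto3.client("elb")`), and that client is passed in so the attribute-building logic can be exercised without AWS credentials. The ELB account ID in the policy's Principal is region-specific (the one in the example policy above is, to our knowledge, the us-east-1 account), so check the AWS documentation for your region.

```python
import json


def elb_log_bucket_policy(bucket, account_id, elb_account_id, prefix=""):
    """Bucket policy letting the regional ELB account write access logs (hypothetical helper)."""
    key_prefix = f"{prefix.strip('/')}/" if prefix else ""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": [elb_account_id]},
            "Action": ["s3:PutObject"],
            "Resource": f"arn:aws:s3:::{bucket}/{key_prefix}AWSLogs/{account_id}/*",
        }],
    }


def access_log_attributes(bucket, prefix="", emit_interval=60):
    """AccessLog attribute for a Classic Load Balancer; the interval is 5 or 60 minutes."""
    if emit_interval not in (5, 60):
        raise ValueError("emit_interval must be 5 or 60")
    access_log = {"Enabled": True, "S3BucketName": bucket, "EmitInterval": emit_interval}
    if prefix:
        access_log["S3BucketPrefix"] = prefix.strip("/")
    return {"AccessLog": access_log}


def enable_access_logs(elb_client, load_balancer_name, bucket, prefix="", emit_interval=60):
    """Turn on access logging; elb_client is assumed to be boto3.client("elb")."""
    return elb_client.modify_load_balancer_attributes(
        LoadBalancerName=load_balancer_name,
        LoadBalancerAttributes=access_log_attributes(bucket, prefix, emit_interval),
    )


if __name__ == "__main__":
    # Placeholder values modeled on the example policy above.
    policy = elb_log_bucket_policy("daniel-elb", "011173820421", "127311923021")
    print(json.dumps(policy, indent=2))
```

With real credentials, you could apply the two pieces with `boto3.client("s3").put_bucket_policy(Bucket="daniel-elb", Policy=json.dumps(policy))` and `enable_access_logs(boto3.client("elb"), "production-site-lb", "daniel-elb")`. Application Load Balancers use the separate `elbv2` client with different attribute keys, so this sketch applies only to Classic Load Balancers.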

If the logging pipeline is set up correctly, you should see a test log file in the bucket after a few minutes. The access logs themselves take a while longer to appear in the bucket.
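When checking the bucket, by hand or from a script, it helps to know where the files land. As far as we know, Classic Load Balancers write a test object named `ELBAccessLogTestFile` under `AWSLogs/<account-id>/` and the log files themselves under a date-partitioned `elasticloadbalancing/<region>/YYYY/MM/DD/` prefix. The sketch below builds those keys (the helper names are hypothetical) so they can be passed, for example, as the `Prefix` argument of boto3's `list_objects_v2`.

```python
from datetime import date


def _base(prefix):
    # Optional bucket prefix configured when access logging was enabled.
    return f"{prefix.strip('/')}/" if prefix else ""


def logging_test_object_key(account_id, prefix=""):
    """Key of the test object written when access logging is enabled."""
    return f"{_base(prefix)}AWSLogs/{account_id}/ELBAccessLogTestFile"


def daily_log_prefix(account_id, region, day, prefix=""):
    """Key prefix holding one day's access log files for a Classic Load Balancer."""
    return (f"{_base(prefix)}AWSLogs/{account_id}/elasticloadbalancing/"
            f"{region}/{day:%Y/%m/%d}/")


if __name__ == "__main__":
    print(logging_test_object_key("011173820421"))
    print(daily_log_prefix("011173820421", "us-east-1", date(2017, 3, 9)))
```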

Shipping Into ELK

Once in S3, there are a number of ways to get the data into the ELK Stack.

If you are using Logz.io, you can ingest the data directly from the S3 bucket, and parsing will be applied automatically before the data is indexed by Elasticsearch. If you are using your own ELK deployment, you can use a variety of shipping methods to get Logstash to pull and enhance the logs.

Let’s take a look at these two methods.

Using Logz.io

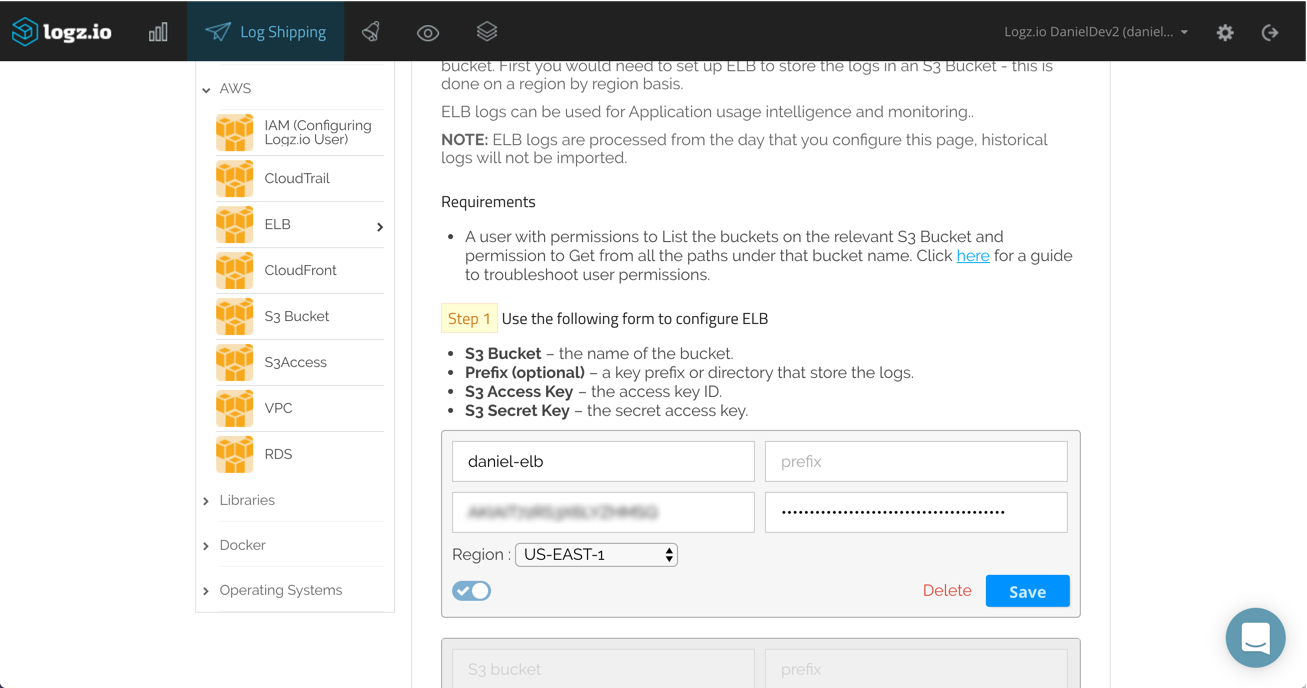

All you have to do to ship the ELB logs from S3 into the Logz.io ELK Stack is to configure, in the Logz.io UI, the S3 bucket in which the logs are stored. Before you do so, make sure the bucket has the correct ListBucket and GetObject permissions.
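A minimal sketch of such a read-only IAM policy follows, assuming it is attached to the IAM user whose access keys you enter in Logz.io. The exact policy Logz.io recommends may differ, so treat this as a starting point; `s3_read_only_policy` is a hypothetical helper. Note that `s3:ListBucket` is granted on the bucket ARN, while `s3:GetObject` is granted on the objects inside it.

```python
import json


def s3_read_only_policy(bucket, prefix=""):
    """IAM policy allowing a log shipper to list a bucket and read its objects."""
    object_prefix = f"{prefix.strip('/')}/" if prefix else ""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # Reading is granted on the objects under the (optional) prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{object_prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_read_only_policy("daniel-elb"), indent=2))
```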

Under Log Shipping, open the AWS > ELB tab. Enter the name of the S3 bucket together with the IAM user credentials (access key and secret key). Select the AWS region and click Save.

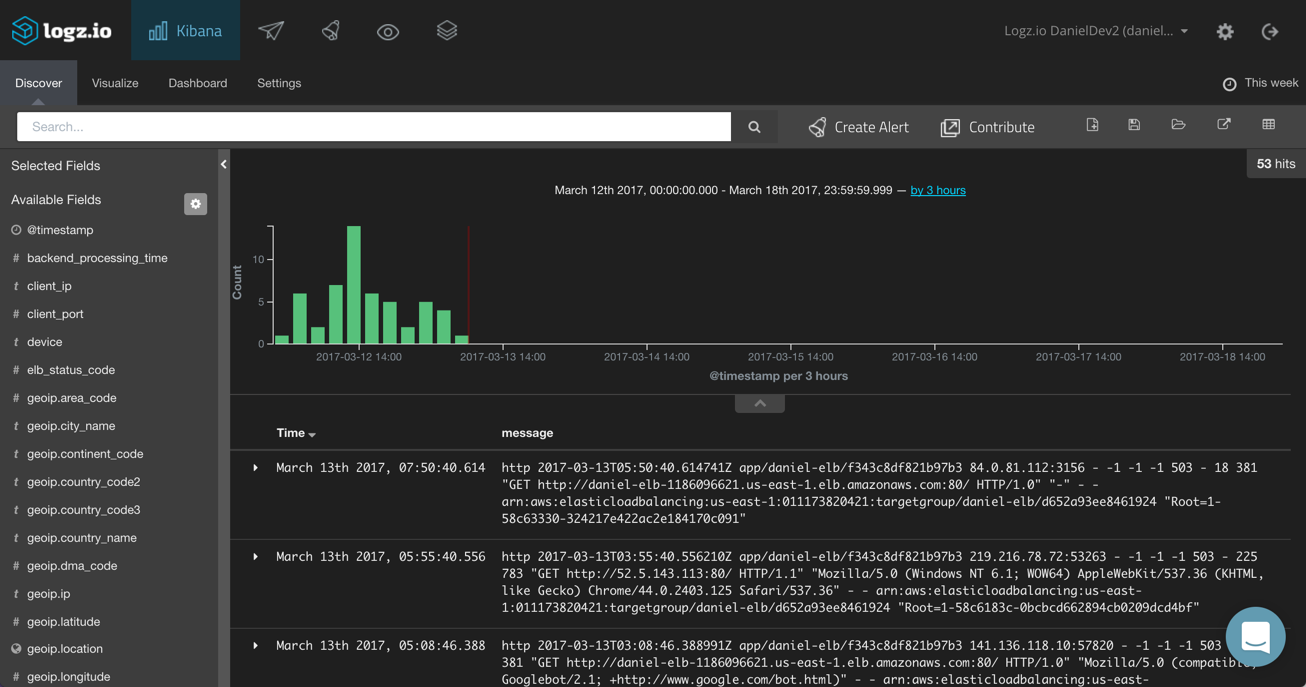

That’s all there is to it. Logz.io will identify the log type and automatically parse the logs. After a few seconds, the logs will be displayed in Kibana.

Using Logstash

As mentioned above, there are a number of ways to ship your ELB logs from the S3 bucket if you are using your own ELK deployment.

You can use the Logstash S3 input plugin or, alternatively, download the files and use the Logstash file input plugin. In the example below, I am using the latter, and I have defined grok patterns in the filter section so that Logstash parses the ELB logs correctly. A local Elasticsearch instance is defined as the output:

```
input {
  file {
    path => "/pathtofile/*.log"
    type => "elb"
    start_position => "beginning"
    sincedb_path => "log_sincedb"
  }
}

filter {
  if [type] == "elb" {
    grok {
      match => ["message", "%{TIMESTAMP_ISO8601:timestamp} %{NOTSPACE:loadbalancer} %{IP:client_ip}:%{NUMBER:client_port:int} (?:%{IP:backend_ip}:%{NUMBER:backend_port:int}|-) %{NUMBER:request_processing_time:float} %{NUMBER:backend_processing_time:float} %{NUMBER:response_processing_time:float} (?:%{NUMBER:elb_status_code:int}|-) (?:%{NUMBER:backend_status_code:int}|-) %{NUMBER:received_bytes:int} %{NUMBER:sent_bytes:int} \"(?:%{WORD:verb}|-) (?:%{GREEDYDATA:request}|-) (?:HTTP/%{NUMBER:httpversion}|-( )?)\" \"%{DATA:userAgent}\"( %{NOTSPACE:ssl_cipher} %{NOTSPACE:ssl_protocol})?"]
    }
    grok {
      match => ["request", "%{URIPROTO:http_protocol}"]
    }
    if [request] != "-" {
      grok {
        match => ["request", "(?<request>[^?]*)"]
        overwrite => ["request"]
      }
    }
    geoip {
      source => "client_ip"
      target => "geoip"
      add_tag => ["geoip"]
    }
    useragent {
      source => "userAgent"
    }
    date {
      match => ["timestamp", "ISO8601"]
    }
  }
}

output {
  elasticsearch { hosts => ["localhost:9200"] }
  stdout { codec => rubydebug }
}
```

Analyzing and Monitoring ELB Logs

Whichever method you used to pull the ELB logs from S3 into the ELK Stack, the result is the same: you can now use Kibana to analyze the logs and create a dashboard to monitor traffic.

What should you be monitoring?

ELB logs are similar to web server logs: they contain an entry for each request processed by your load balancer. Below are a few examples of the metrics and information you can monitor with visualizations.

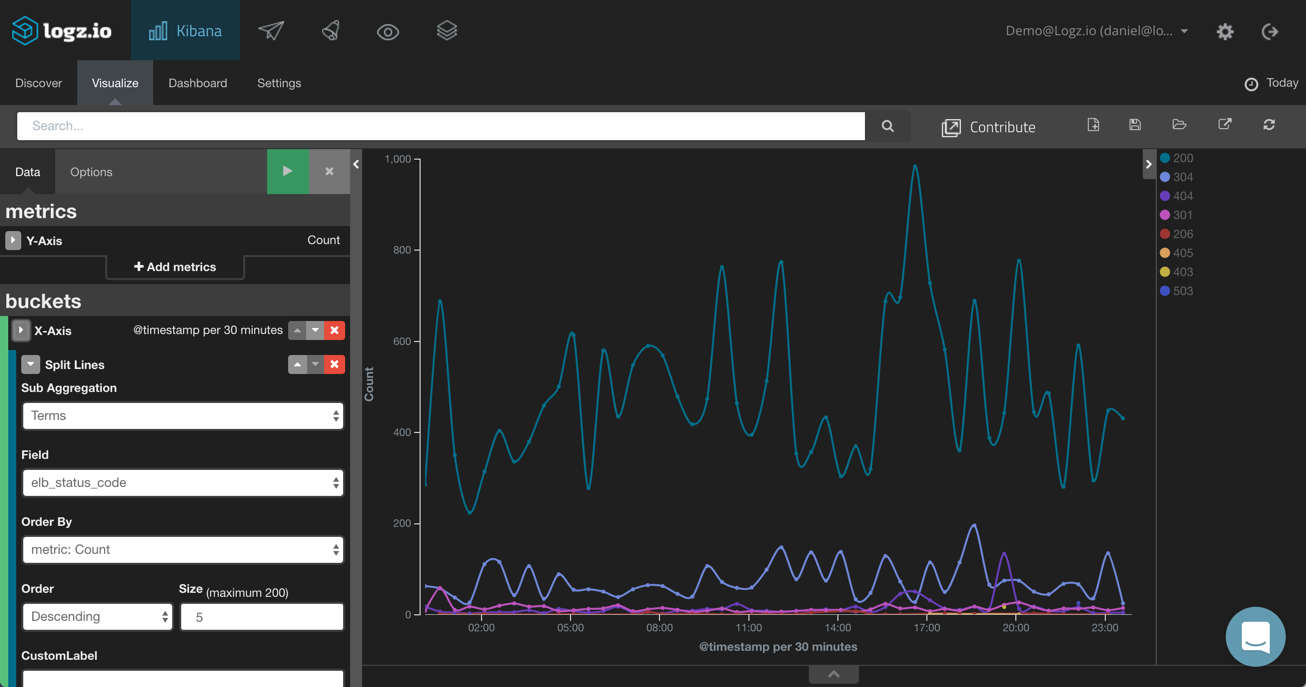

Response Codes

A useful metric to monitor is the rate of error response codes reported by our load balancer. To do this, we will use the Line Chart visualization. For the Y axis, we can leave the default Count aggregation. For the buckets, we will use a Date Histogram on the X axis and split the lines by the elb_status_code field.
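The line chart described above is essentially a count of requests per time bucket, split by elb_status_code. As a rough offline equivalent, this sketch computes the share of error responses per minute from parsed records. It assumes records shaped like the Logstash output (a `timestamp` string in the ELB format and an integer `elb_status_code`), and it treats any status of 400 or above as an error, which is our choice rather than anything Kibana does; `error_rate_per_minute` is a hypothetical name.

```python
from collections import Counter
from datetime import datetime


def error_rate_per_minute(records, threshold=400):
    """Map each minute to the fraction of requests with elb_status_code >= threshold."""
    totals, errors = Counter(), Counter()
    for record in records:
        ts = datetime.strptime(record["timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
        minute = ts.replace(second=0, microsecond=0)
        totals[minute] += 1
        status = record.get("elb_status_code")
        # A missing status ("-" in the raw log) counts toward the total only.
        if status is not None and status >= threshold:
            errors[minute] += 1
    return {minute: errors[minute] / totals[minute] for minute in sorted(totals)}


if __name__ == "__main__":
    sample = [
        {"timestamp": "2017-03-09T21:59:57.543344Z", "elb_status_code": 200},
        {"timestamp": "2017-03-09T21:59:58.000100Z", "elb_status_code": 502},
        {"timestamp": "2017-03-09T22:00:01.250000Z", "elb_status_code": 200},
    ]
    for minute, rate in error_rate_per_minute(sample).items():
        print(minute.isoformat(), f"{rate:.0%}")
```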

Geographic Distribution of Requests

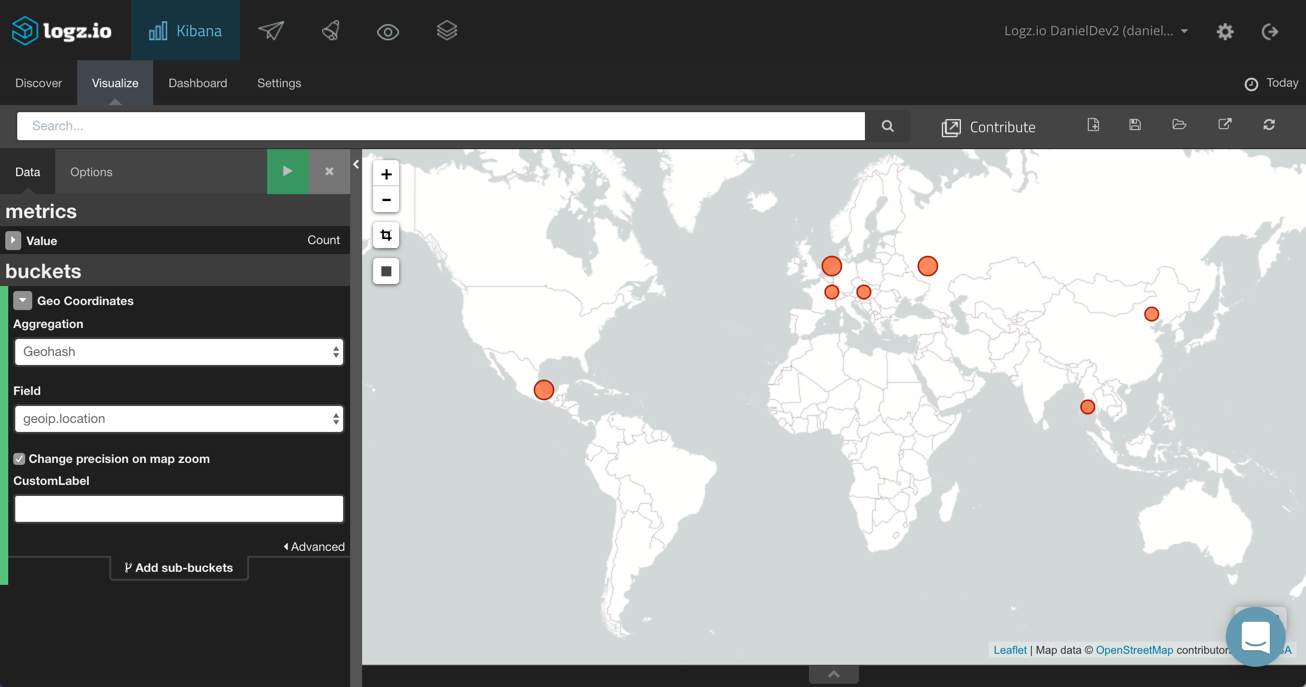

We can also see a geographical depiction of the clients sending the requests processed by our ELB. To do this, we will choose the Tile Map visualization and use the geoip.location field that the geoip filter in our Logstash configuration adds.
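In the Kibana versions of this era, the tile map is built from a geohash_grid aggregation on a geo_point field. If you want the same numbers outside Kibana, a request body like the one sketched below could be sent to the `_search` endpoint of your Logstash indices. It assumes that geoip.location is mapped as geo_point (which the default Logstash index template does for logstash-* indices) and that events carry the standard @timestamp field; `client_location_query` is a hypothetical name.

```python
import json


def client_location_query(precision=3, since="now-24h"):
    """Elasticsearch request body bucketing client locations into geohash cells."""
    if not 1 <= precision <= 12:
        raise ValueError("geohash precision must be between 1 and 12")
    return {
        "size": 0,  # only the aggregation results are needed, not the documents
        "query": {"range": {"@timestamp": {"gte": since}}},
        "aggs": {
            "client_locations": {
                "geohash_grid": {"field": "geoip.location", "precision": precision}
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(client_location_query(), indent=2))
```

Each bucket in the response carries a geohash key and a doc_count, that is, the number of requests whose client IP resolved to that cell.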

Sent vs. Received Bytes

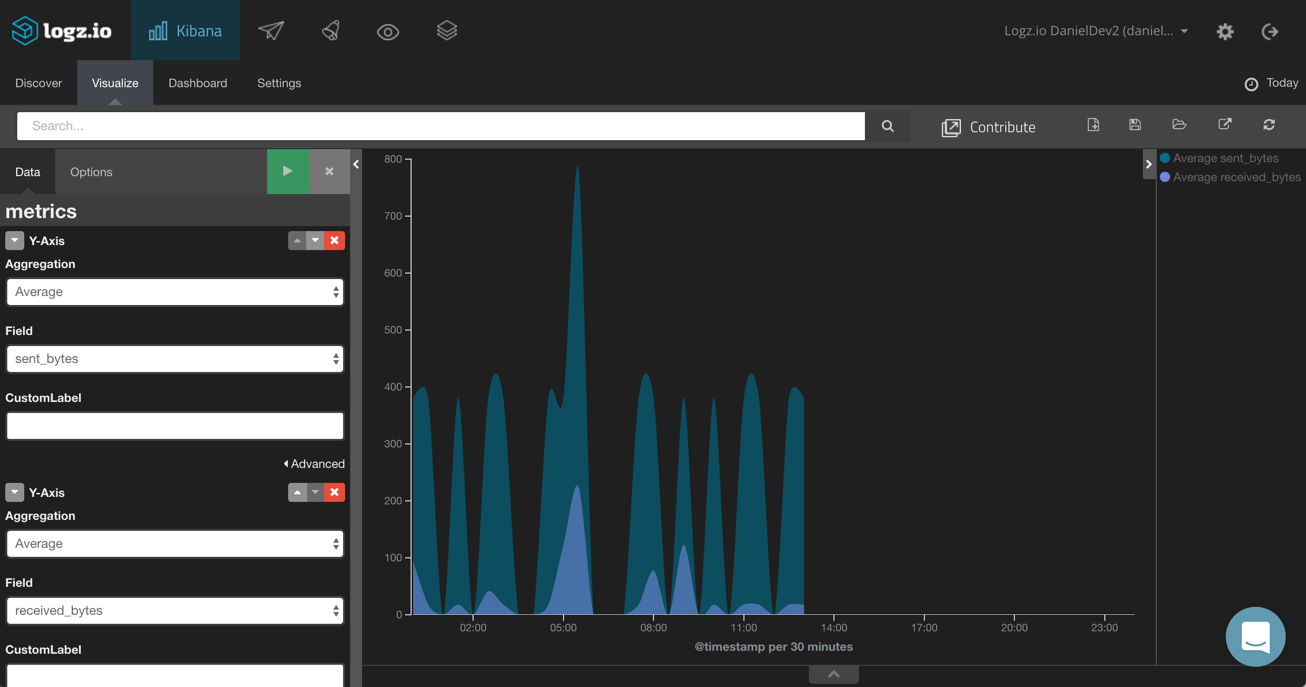

Using an Area Chart visualization, we can get a good picture of incoming versus outgoing traffic using the received_bytes and sent_bytes fields. The X axis (not shown in the image) is a date histogram.
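The area chart sums two fields per time bucket. A minimal offline sketch of the same calculation, assuming parsed records with a `timestamp` string and integer `received_bytes` (incoming) and `sent_bytes` (outgoing) fields, with one-minute buckets chosen for illustration; `bytes_per_minute` is a hypothetical name:

```python
from collections import defaultdict
from datetime import datetime


def bytes_per_minute(records):
    """Sum received_bytes (incoming) and sent_bytes (outgoing) for each minute."""
    totals = defaultdict(lambda: {"received_bytes": 0, "sent_bytes": 0})
    for record in records:
        ts = datetime.strptime(record["timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
        minute = ts.replace(second=0, microsecond=0)
        totals[minute]["received_bytes"] += record["received_bytes"]
        totals[minute]["sent_bytes"] += record["sent_bytes"]
    # Return the buckets in chronological order, as the chart would plot them.
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    sample = [
        {"timestamp": "2017-03-09T21:59:57.543344Z", "received_bytes": 0, "sent_bytes": 20996},
        {"timestamp": "2017-03-09T21:59:59.000000Z", "received_bytes": 512, "sent_bytes": 1024},
    ]
    for minute, sums in bytes_per_minute(sample).items():
        print(minute.isoformat(), sums)
```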

Requests Breakdown

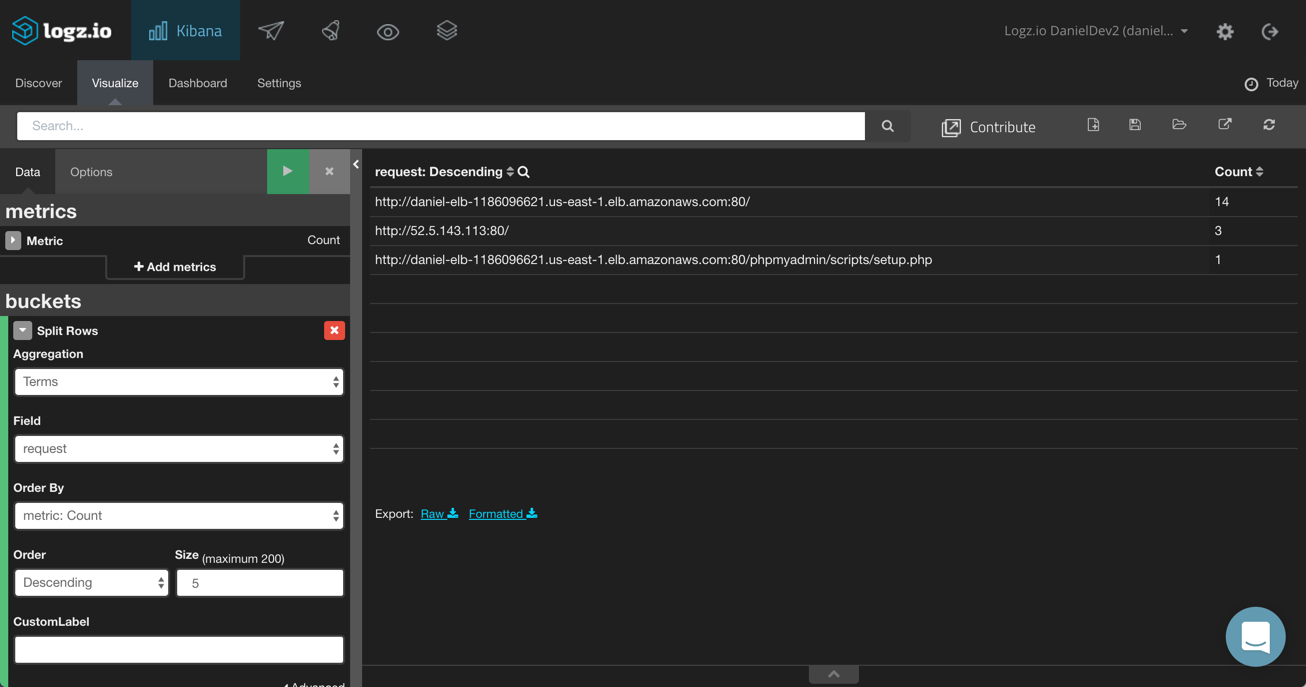

Using a Data Table visualization, you can list all the requests (using the request field) processed by your load balancer. You could also enhance this table by adding the average request_processing_time and response_processing_time for each request.
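The enhanced table can be approximated offline as well. This sketch groups parsed records by request, with the query string stripped the same way the overwrite grok in the Logstash filter above does, and reports a count plus average request and response processing times, busiest requests first. It assumes float `request_processing_time` and `response_processing_time` fields; per our reading of the ELB log format, these are -1 when the load balancer could not dispatch the request, so such values are left out of the averages. `request_breakdown` is a hypothetical name.

```python
from collections import defaultdict


def request_breakdown(records):
    """Rows of request, count, and average processing times, busiest first."""
    stats = defaultdict(lambda: {"count": 0, "timed": 0,
                                 "request_time": 0.0, "response_time": 0.0})
    for record in records:
        # Drop the query string, mirroring the (?<request>[^?]*) grok above.
        request = record["request"].split("?", 1)[0]
        entry = stats[request]
        entry["count"] += 1
        req_t = record["request_processing_time"]
        resp_t = record["response_processing_time"]
        # Skip the -1 placeholder values when averaging.
        if req_t >= 0 and resp_t >= 0:
            entry["timed"] += 1
            entry["request_time"] += req_t
            entry["response_time"] += resp_t
    rows = []
    for request, entry in stats.items():
        timed = entry["timed"]
        rows.append({
            "request": request,
            "count": entry["count"],
            "avg_request_processing_time": entry["request_time"] / timed if timed else None,
            "avg_response_processing_time": entry["response_time"] / timed if timed else None,
        })
    rows.sort(key=lambda row: row["count"], reverse=True)
    return rows


if __name__ == "__main__":
    sample = [
        {"request": "http://site.logz.io:80/blog/?page=2",
         "request_processing_time": 0.00005, "response_processing_time": 0.00004},
        {"request": "http://site.logz.io:80/blog/",
         "request_processing_time": 0.00003, "response_processing_time": 0.00002},
    ]
    for row in request_breakdown(sample):
        print(row)
```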

Endnotes

Whether for statistical analysis, diagnostics, or troubleshooting, ELB logs are a valuable resource to have on your side. But it is being able to aggregate and analyze them with ELK that really allows you to benefit from the data.

The visualization examples above were simple demonstrations of how to analyze your ELB logs, but you can slice and dice the data in any way you want. By adding all these visualizations into one comprehensive dashboard, you can equip yourself with a very useful monitoring tool for your ELB instances.

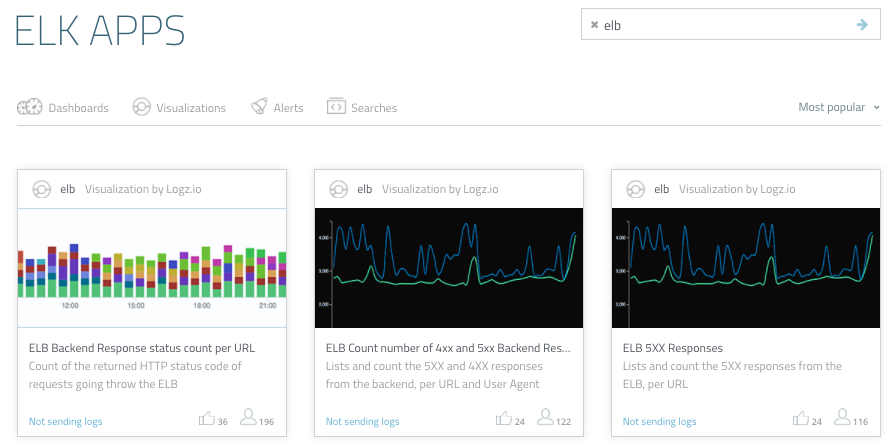

ELK Apps, Logz.io’s free library of pre-made Kibana visualizations and dashboards for a wide range of log types, contains a number of ELB dashboards you can use to get started. Just open the page and search for “elb”.

Enjoy!

Published at DZone with permission of Daniel Berman, DZone MVB. See the original article here.

