The Future of AI Art: Exploring the Capabilities of Stable Diffusion Web UI (Automatic 1111)

Automatic 1111's web UI for Stable Diffusion simplifies AI image generation. This article covers text-to-image, sketch-to-image, model training, and API integration.

What Are Stable Diffusion Models and Why Are They Important?

Stable Diffusion models are generative machine learning models used to create high-quality images, videos, and other types of data. They are based on diffusion processes, which transform a simple, usually random, initial state into a complex, structured output by gradually refining it over multiple iterations. Stable Diffusion simplifies many complex use cases, including image and video generation, data augmentation, text-to-image generation, and scientific visualization in fields such as medical imaging and astronomy.

There are various ways to access Stable Diffusion models: cloud-based services, online platforms and APIs, research and academic resources, and Stable Diffusion Web UI (Automatic 1111).

In this article, we will go into detail about Automatic 1111 and its features.

Stable Diffusion Web UI (Automatic 1111)

Automatic 1111 is a Gradio-based web UI for Stable Diffusion models that covers most AI-based image generation needs in one place. This guide is written for beginner and intermediate users. It helps with installation and with setting up the APIs used to build image generation workflows.

Key Features

There is a lot to try out with Automatic 1111 and its APIs. Its "bring your own model" capability lets you use your custom-trained or fine-tuned models in the app to generate images. Below are some of the most popular and widely used features:

- Text-to-image: Generates images from text prompts.

- Image-to-image: Allows you to modify the objects of an existing image.

- Sketch-to-image: Converts your rough sketches into photo-realistic images using ControlNet models.

- Inpaint: Restores an uploaded image by filling in missing or damaged parts with realistic content.

- Background replacement: Replaces the backgrounds of an uploaded image elegantly with simple text prompts.

- Caption generation: Generates text captions describing the objects of an uploaded image.

- Model fine-tuning: Helps you tune your model to the appropriate configuration. For instance, some models work well at higher step counts, whereas others yield good results at very low step counts. With this tool, you can benchmark such model attributes easily.

- Model training: Train an existing model or your own custom model from scratch on your custom datasets. Techniques like LoRA come in handy for training.

- Custom scripts: The "bring your own scripts" capability lets you extend the application UI with custom actions or functionality.

For more features and use cases, please refer to the official documentation.

How To Install and Run

Platform-based installation instructions can be found in their official documentation.

Follow the instructions that match your system's CPU and GPU configuration. Alternatively, you can containerize the application and run it locally.

What Are Samplers and Why Are They Important?

Samplers play a vital role in image generation. A Stable Diffusion model does not produce a clean image in a single pass: generation starts from random noise, and intermediate results still contain noise. Samplers suppress that noise iteratively, step by step. This denoising process is called sampling.

There are several types of Samplers that are widely used in the image generation world:

- Euler

- DPM++

- LMS

- Heun

and more...
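The iterative denoising described above can be illustrated with a toy version of an Euler step, following the update rule used by Euler-style samplers such as the one in k-diffusion. This is only a sketch under stated assumptions: `toy_denoiser` is a hypothetical stand-in that always returns the clean target (a real sampler calls a trained neural network), and the `sigmas` noise schedule is made up for illustration.

```python
import random


def toy_denoiser(x, sigma, target):
    """Hypothetical stand-in for a trained model: always predicts the clean target."""
    return list(target)


def euler_sample(target, sigmas, seed=0):
    """Run Euler steps from pure noise down to the last noise level in sigmas."""
    rng = random.Random(seed)
    # Start from random noise scaled by the largest noise level.
    x = [rng.gauss(0.0, 1.0) * sigmas[0] for _ in target]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = toy_denoiser(x, sigma, target)
        # Direction pointing from the predicted clean sample toward the noisy one.
        d = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        # Negative step size, because the noise level decreases.
        dt = sigma_next - sigma
        x = [xi + di * dt for xi, di in zip(x, d)]
    return x


sigmas = [10.0, 5.0, 2.0, 1.0, 0.5, 0.0]  # assumed schedule, ends at zero noise
target = [0.2, -0.7, 1.0]                 # made-up "clean" values
result = euler_sample(target, sigmas)
print(result)
```

Each step shrinks the remaining noise by the ratio of the next noise level to the current one, so with this idealized denoiser the result lands on the target once the schedule reaches zero. Real samplers differ mainly in how they choose the schedule and how they correct each step.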

How To Add Samplers

Adding samplers is easy. In this article, we will see how to add k-diffusion, one of the most popular and widely used sampler frameworks. These samplers should be available in Stable Diffusion Web UI by default. If you do not see them in your version, follow the instructions below to add them manually.

This step assumes that you already have:

- Installed Git, which provides the git clone command used below. If not, download and install it first

- Installed Automatic 1111 on your local computer and have access to the source code

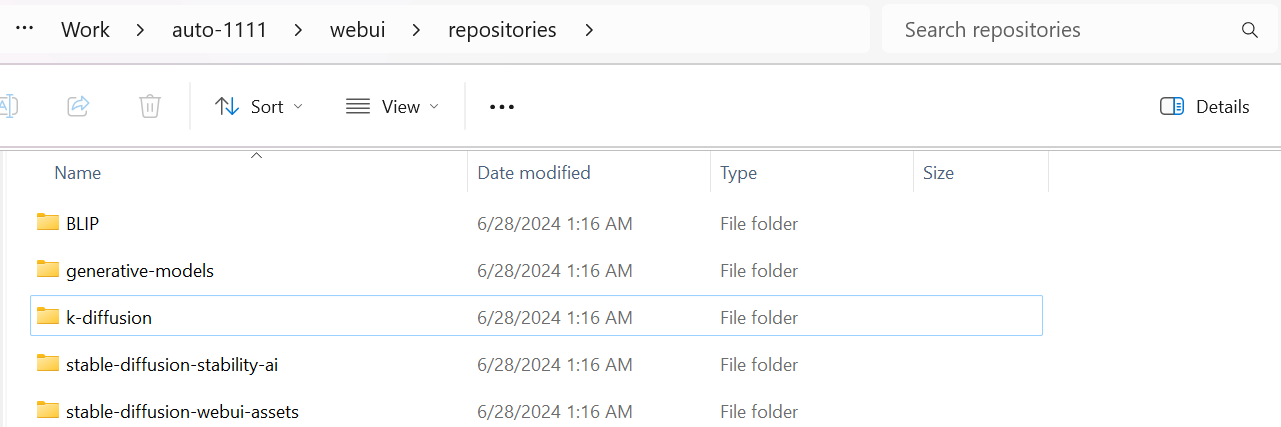

Once the prerequisites are met, open a command prompt, PowerShell, or terminal, and navigate to the webui --> repositories folder in your workspace. Run the git clone command below to download the k-diffusion samplers.

    git clone https://github.com/crowsonkb/k-diffusion.git

This command creates a folder named k-diffusion inside repositories and downloads all the contents from crowsonkb/k-diffusion.

You can follow the same process for adding any other samplers. Once the samplers are downloaded, you may need to restart the application to load the newly downloaded samplers.
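Before restarting, it can help to confirm that the clone landed where the application expects it. The helper below is a minimal sketch, assuming the webui --> repositories layout described above. The function name `sampler_repo_present` and the `webui` path in the usage line are illustrative assumptions, not part of Automatic 1111.

```python
from pathlib import Path


def sampler_repo_present(webui_root, repo_name="k-diffusion"):
    """Return True if <webui_root>/repositories/<repo_name> exists and is not empty."""
    repo_dir = Path(webui_root) / "repositories" / repo_name
    return repo_dir.is_dir() and any(repo_dir.iterdir())


# Example: adjust "webui" to the folder that contains your installation.
print(sampler_repo_present("webui"))
```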

Automatic 1111 APIs

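Automatic 1111 provides FastAPI-based RESTful APIs for various basic workflows. You can browse the API docs at the “/docs” URL of your running instance, for example http://localhost:7860/docs when the application runs on the local address used later in this article.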

Enabling APIs

By default, the Swagger docs at the “/docs” URL may not display all the available Automatic 1111 APIs. The main APIs, such as

- /sdapi/v1/txt2img

- /sdapi/v1/img2img

are not enabled by default either.

To enable these APIs, set “--api” as a command-line argument in the webui-user.bat file and then restart Automatic 1111.

    @echo off

    set PYTHON=
    set GIT=
    set VENV_DIR=
    set COMMANDLINE_ARGS=--api

    call webui.bat

After setting this up, kill the terminal/command prompt and re-run the run.bat file. Once the application comes up, you will notice all the important APIs visible in the Swagger docs.
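Instead of scanning the Swagger page by eye, you can check for the endpoints programmatically. The sketch below assumes the server exposes its OpenAPI document at /openapi.json, which is FastAPI's default location, and that the application runs at http://localhost:7860. The helper names `missing_api_paths` and `fetch_openapi` are illustrative, and the sample spec is hand-written rather than real server output.

```python
import json
from urllib.request import urlopen

REQUIRED_PATHS = ("/sdapi/v1/txt2img", "/sdapi/v1/img2img")


def missing_api_paths(openapi_spec, required=REQUIRED_PATHS):
    """Return the required paths that are absent from an OpenAPI document."""
    available = openapi_spec.get("paths", {})
    return [path for path in required if path not in available]


def fetch_openapi(base_url="http://localhost:7860"):
    """Download the OpenAPI document (assumed to be served at /openapi.json)."""
    with urlopen(f"{base_url}/openapi.json", timeout=10) as response:
        return json.load(response)


# Offline illustration with a hand-written fragment, not real server output.
sample_spec = {"paths": {"/sdapi/v1/txt2img": {}}}
print(missing_api_paths(sample_spec))
```

Against a running instance, `missing_api_paths(fetch_openapi())` should return an empty list once the application has been restarted with “--api”.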

How to Access the APIs

Accessing the Automatic 1111 APIs works the same way as accessing any other REST API. You can use a simple curl command, a Python client, or Postman.

Below is a sample Python client that calls the Text-to-Image API with a simple text prompt.

    import base64
    import os

    import requests

    url = "http://localhost:7860/sdapi/v1/txt2img"

    # Generation settings sent to the txt2img endpoint
    payload = {
        "prompt": "A beautiful girl with red hair and blue eyes",
        "negative_prompt": "fake, unreal, cartoon",
        "seed": 1,
        "steps": 20,
        "width": 512,
        "height": 512,
        "cfg_scale": 7,
        "hr_scheduler": "Karras",
        "sampler_name": "DPM++ 2M",
        "n_iter": 1,
        "batch_size": 1,
    }

    # json= serializes the payload and sets the Content-Type header
    response = requests.post(url, json=payload)
    response.raise_for_status()

    # Each generated image comes back as a base64-encoded string
    images = response.json()["images"]

    # Create the output folder if needed and give every image its own file name
    os.makedirs("output", exist_ok=True)
    for index, img in enumerate(images):
        with open(f"output/master_piece_{index}.png", "wb") as f:
            f.write(base64.b64decode(img))

    print("Done!")

This client sends a JSON payload to the /sdapi/v1/txt2img endpoint and receives the generated images as base64-encoded strings in a JSON response. It then iterates through those strings, decodes each one, and saves it as a PNG file in the output folder.
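The /sdapi/v1/img2img endpoint mentioned earlier takes a similar JSON payload, plus the source image as a base64-encoded string. The sketch below only builds such a payload. The helper name `build_img2img_payload` is illustrative, and the `init_images` and `denoising_strength` field names should be treated as assumptions to confirm against the “/docs” schema of your installation.

```python
import base64


def build_img2img_payload(image_bytes, prompt, denoising_strength=0.5, steps=20):
    """Build a JSON-serializable img2img payload from raw image bytes."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "init_images": [encoded],  # source image(s) as base64 text
        "prompt": prompt,
        # Assumed meaning: how far the result may drift from the source image
        "denoising_strength": denoising_strength,
        "steps": steps,
    }


# Placeholder bytes for illustration; in practice, read them from an image file.
payload = build_img2img_payload(b"not-a-real-image", "A watercolor landscape")
print(sorted(payload))
```

To send it, read the bytes of a real image file and POST the payload to /sdapi/v1/img2img in the same way the text-to-image client posts to /sdapi/v1/txt2img.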

Important Note:

The code base referenced in this article is from version 1.9.3 of the Stable Diffusion Web UI. In this version, the Sampler (DPM++ 2M) and Scheduler (Karras) are represented as two separate attributes in the payload. If you're using an older version of the Stable Diffusion Web UI, your payload might look like this:

"sampler_name": "DPM++ 2M Karras",

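Notice how the scheduler is appended to the sampler name, resulting in "DPM++ 2M Karras". Older versions therefore did not need a separate attribute such as hr_scheduler. Because field names can differ between versions, check the request schema in the Swagger docs of your installation.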
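If one client has to talk to both older and newer installations, the difference can be isolated in a small helper. This is a sketch, not part of the Automatic 1111 API: the function name `sampler_fields` and its parameters are hypothetical, and the default `scheduler_key` simply mirrors the attribute used in this article's payload, so confirm it against your version's schema.

```python
def sampler_fields(sampler, scheduler, separate_scheduler=True,
                   scheduler_key="hr_scheduler"):
    """Return the sampler-related payload fields for a given payload style."""
    if separate_scheduler:
        # Newer style described in this article: two separate attributes.
        return {"sampler_name": sampler, scheduler_key: scheduler}
    # Older style: scheduler appended to the sampler name.
    return {"sampler_name": f"{sampler} {scheduler}"}


new_style = sampler_fields("DPM++ 2M", "Karras")
old_style = sampler_fields("DPM++ 2M", "Karras", separate_scheduler=False)
print(new_style)
print(old_style)
```

The returned dictionary can be merged into the rest of the payload, for example with `payload.update(new_style)`.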

Older Versions vs. Latest Version (1.9.3 and above)

Key Learnings

In this article, we explored:

- Capabilities of Stable Diffusion Web UI

- Samplers

  - What are they?

  - Why are they important?

  - How to add samplers to the Automatic 1111?

- Automatic 1111 APIs

  - Enabling and accessing the APIs

I hope you found something useful in this article. Happy learning!
