Apache Kafka in the Gaming Industry: Use Cases + Architectures

How event streaming with Apache Kafka provides a scalable, reliable, and efficient infrastructure to make gamers happy and gaming companies successful.


This blog post explores how event streaming with Apache Kafka provides a scalable, reliable, and efficient infrastructure to make gamers happy and gaming companies successful. Various use cases and architectures in the gaming industry are discussed, including online and mobile games, betting, gambling, and video streaming.

Learn about:

- Real-time analytics and data correlation of game telemetry

- Monetization network for real-time advertising and in-app purchases

- Payment engine for betting

- Detection of financial fraud and cheating

- Chat functions within games and across platforms

- Monitoring the results of live operations such as weekend events or limited-time offers

- Real-time analytics on metadata and chat data for marketing campaigns

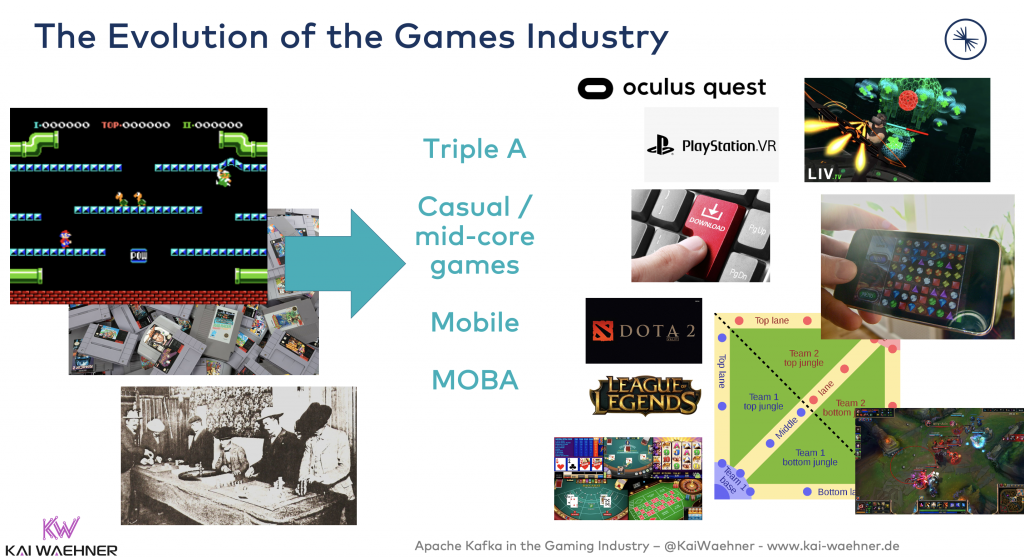

The Evolution of the Gaming Industry

The gaming industry must process billions of events per day in real time and ensure consistent and reliable data processing and correlation across gameplay interactions and backend analytics. Deployments must run globally and serve millions of users 24/7, 365 days a year.

These requirements are valid for hardcore games and blockbusters, including massively multiplayer online role-playing games (MMORPG), first-person shooters, and multiplayer online battle arenas (MOBA), but also mid-core and casual games. Reliable and scalable real-time integration with consumer devices like smartphones and game consoles is as essential as cooperating with online streaming services like Twitch and betting providers.

Business Models in the Gaming Industry

Gaming is not just about games anymore. Even within games, the ways to play are diverse: consoles and PCs, mobile games, casino games, online games, and various other options. Beyond the games themselves, people also engage via professional eSports, big-money tournaments, live video streaming, and real-time betting.

This is a crazy evolution, isn't it? Here are some of the business models relevant today in the gaming industry:

- Hardware sales

- Game sales

- Free-to-play and in-game purchases, such as skins or champions

- Gambling (loot boxes)

- Game-as-a-service (subscription)

- Seasonal in-game purchases, such as passes for themed events, mid-season invitationals and world championships, and competitive play

- Game infrastructure as a service

- Merchandise sales

- Communities including eSports broadcast, ticket sales, franchising fees

- Live betting

- Video streaming, including ads, rewards, etc.

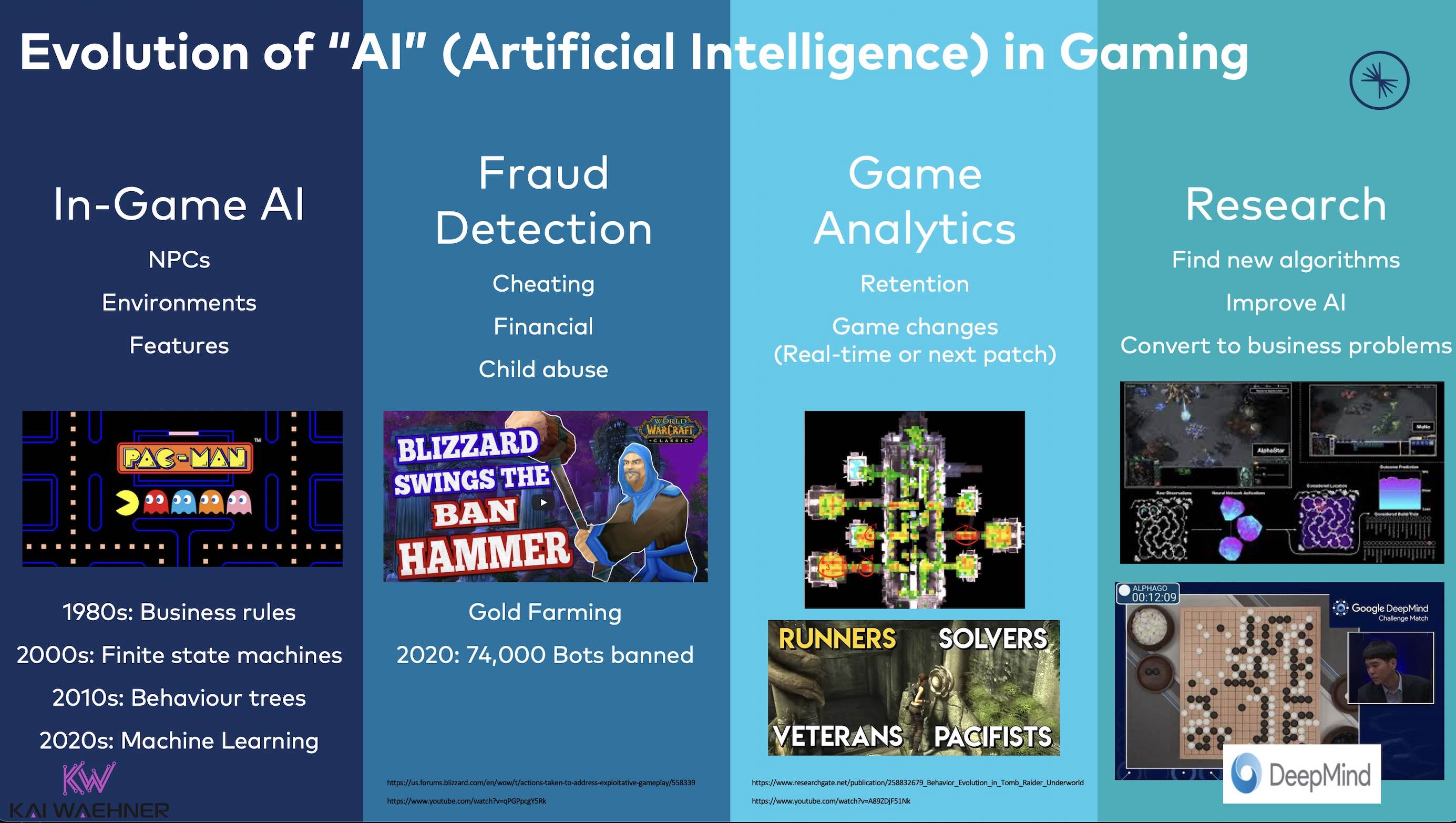

Evolution of AI in Gaming

Artificial intelligence (business rules, statistical models, machine learning, deep learning) is vital for many use cases in gaming. These use cases include:

- In-game AI: Non-player characters (NPCs), environments, features

- Fraud detection: Cheating, financial fraud, child abuse

- Game analytics: Retention, game changes (real-time delivery or via next patch/update)

- Research: Find new algorithms, improve AI, adapt to business problems

Many of the use cases I explore in the following sections combine AI with event streaming and Kafka.

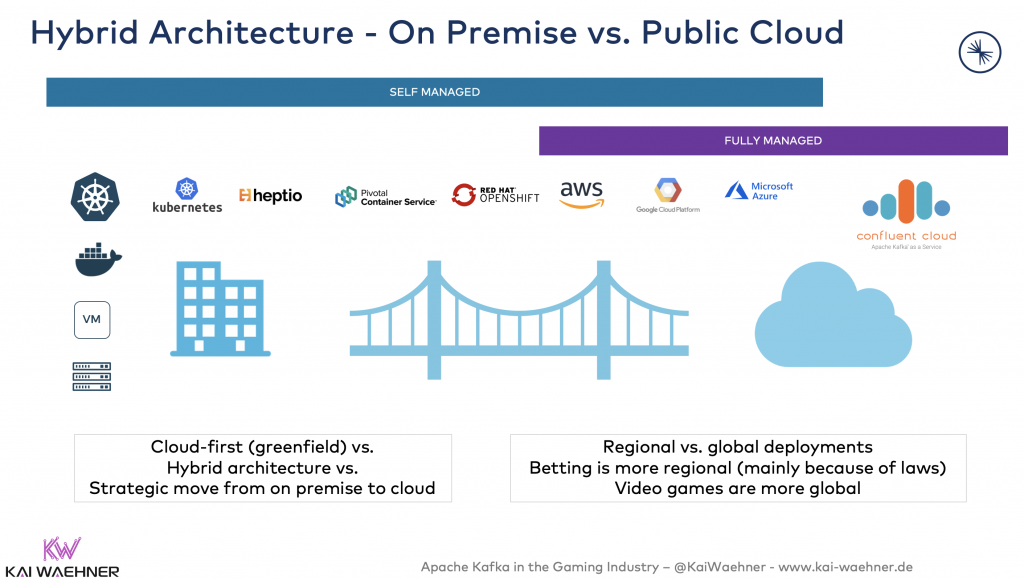

Hybrid Gaming Architectures for Event Streaming With Apache Kafka

The demand for an open, flexible, and scalable platform with real-time processing is the reason why so many gaming-related projects use Apache Kafka. I will not discuss Kafka itself here and assume you know why it became the de facto standard for event streaming.

What's more interesting are the different deployments and architectures I have seen in the wild. Infrastructures in the gaming industry are often global: sometimes cloud-only, sometimes hybrid with local on-premises installations. Betting is usually regional (mainly for legal and compliance reasons), whereas games are typically global. If a game is excellent, it gets deployed and rolled out across the world.

Let's now take a look at several different use cases and architectures in the gaming industry. Most of these examples are relevant in all gaming-related use cases, including games, mobile, betting, gambling, and video streaming.

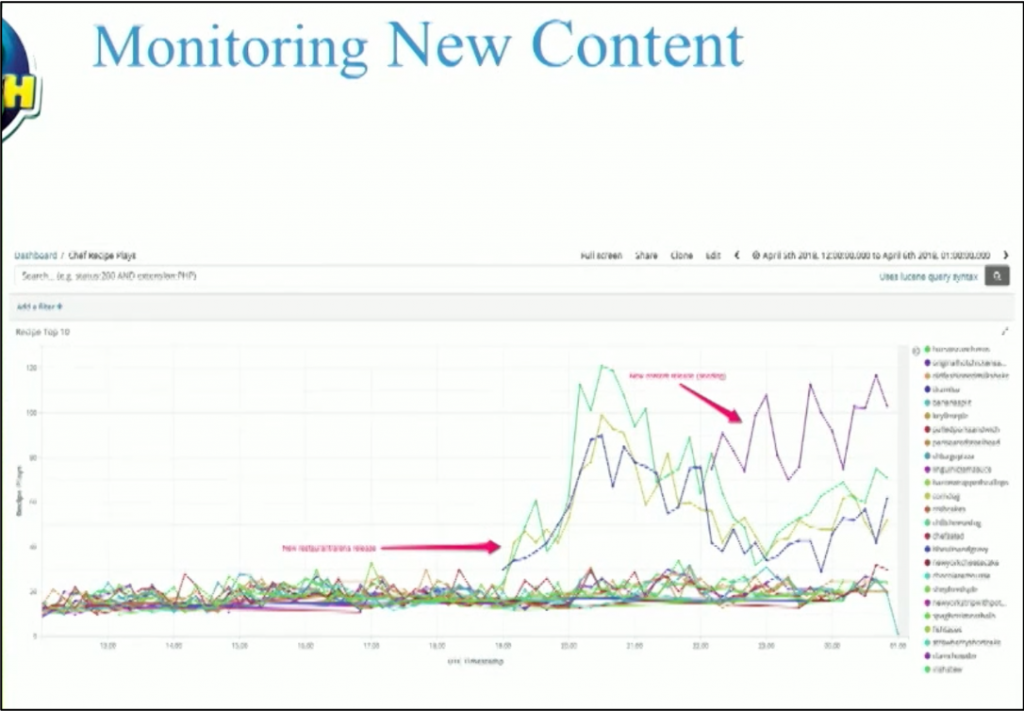

Infrastructure Operations: Live Monitoring and Troubleshooting

Monitoring the results of live operations is essential for every mission-critical infrastructure. Use cases include:

- Game clients, game servers, game services

- Service health 24/7

- Special events such as weekend tournaments, limited time offers, and user acquisition campaigns

Immediate and correct troubleshooting requires real-time monitoring. You need to be able to answer questions like, "Who creates the problem? Client? ISP? The game itself?"

Let's take a look at a typical example in the gaming industry — a new marketing campaign:

- "Play for free over the weekend"

- Scalability — huge extra traffic

- Monitoring — was the marketing campaign successful? How profitable is the game/business?

- Real-time (e.g., alerting)

- Batch (e.g., analytics and reporting of success with Snowflake)

A lot of diverse data has to be integrated, correlated, and monitored to keep the infrastructure running and to troubleshoot issues.
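To make the real-time alerting side of such a campaign concrete, here is a minimal, framework-agnostic Python sketch of tumbling-window monitoring, the kind of logic a Kafka Streams or ksqlDB job might run over game-server events during a free weekend. The event shape (`timestamp`, `ok`), the 60-second window, the 5% error threshold, and the `min_events` guard are all assumptions for illustration; no Kafka API is used.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ServerEvent:
    timestamp: float  # seconds since epoch (hypothetical field)
    ok: bool          # False if the request failed (hypothetical field)


def window_start(ts: float, size: float) -> float:
    """Align a timestamp to the start of its tumbling window."""
    return ts - (ts % size)


def error_rate_alerts(events, window_size=60.0, threshold=0.05, min_events=10):
    """Return (window_start, error_rate) for every window whose error rate exceeds threshold."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for e in events:
        w = window_start(e.timestamp, window_size)
        totals[w] += 1
        if not e.ok:
            errors[w] += 1
    alerts = []
    for w in sorted(totals):
        if totals[w] < min_events:
            continue  # too few events in this window to judge reliably
        rate = errors[w] / totals[w]
        if rate > threshold:
            alerts.append((w, rate))
    return alerts
```

This batch-style function only shows the windowing idea. A production job would also need to handle late-arriving events and emit results continuously; Kafka Streams and ksqlDB both support windows with a grace period for that.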

Elasticity Is the Key for Success in the Games Industry

A key challenge in infrastructure monitoring is the required elasticity. You cannot just provision some hardware, deploy the software, and operate it 24 hours a day, 365 days a year. Gaming infrastructures require elasticity, whether you care about online games, betting, or video streaming.

Chris Dyl, Director of Platform at Epic Games, pointed this out well at AWS Summit 2018: "We have an almost ten times difference in workloads between peak and low-peak. Elasticity is really, really important for us in any particular region at the cloud providers."

Confluent provides elasticity for any Kafka deployment, whether the event streaming platform runs self-managed at the edge or fully managed in the cloud. Check out "Scaling Apache Kafka to 10+ GB Per Second in Confluent Cloud" to see how Kafka can be scaled automatically in the cloud. Self-managed Kafka becomes elastic with tools such as Self-Balancing Kafka, Tiered Storage, and Confluent Operator for Kubernetes.
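One practical consequence of a large gap between peak and low load: within a consumer group, each partition is consumed by at most one consumer, so the partition count caps how far consumers can scale out. The Python sketch below does the back-of-the-envelope sizing; the throughput figures and the 1.5x headroom factor are hypothetical inputs you would replace with measurements from your own workload.

```python
import math


def consumers_needed(events_per_sec: float, per_consumer_eps: float) -> int:
    """Consumers required to keep up with a given load (always at least one)."""
    return max(1, math.ceil(events_per_sec / per_consumer_eps))


def plan_partitions(peak_eps: float, low_eps: float, per_consumer_eps: float, headroom: float = 1.5):
    """Size the partition count for peak load plus headroom.

    Within one consumer group, a partition is read by at most one consumer,
    so the partition count is the upper bound on consumer parallelism.
    """
    peak_consumers = consumers_needed(peak_eps, per_consumer_eps)
    low_consumers = consumers_needed(low_eps, per_consumer_eps)
    partitions = math.ceil(peak_consumers * headroom)
    return {
        "partitions": partitions,
        "peak_consumers": peak_consumers,
        "low_consumers": low_consumers,
    }
```

Partitions can be added to a topic later but not removed, and adding them changes which partition a given key maps to. That is why sizing for the peak up front, and scaling the number of consumers instead, is a common approach.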

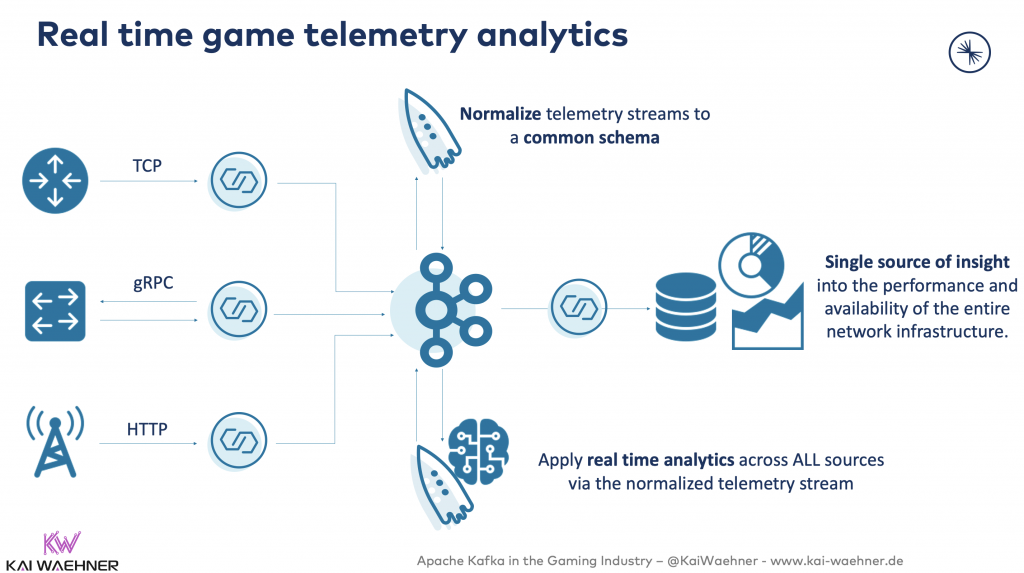

Game Telemetry: Real-time Analytics and Data Correlation With Kafka

Game telemetry describes how the player plays the game. It includes business-level data such as user actions (button clicks, shooting, using an item) or game environment metrics (quests, level-ups), as well as technical information like the login server, IP address, and location.

Global gaming requires proxies all over the world to guarantee low regional latency for millions of clients. In addition, a central analytics cluster (with anonymized data) correlates data from across the globe. Here are some use cases for game telemetry:

- Game monitoring

- How well do players progress through the game and what problems occurred

- Live operations — adjust the gameplay

- Server-side changes while the player is playing the game (e.g., time-limited event, give reward)

- Real-time updates to improve the game or align with audience needs (in other words: recommend an item/upgrade/skin/additional in-game purchase)
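As a small illustration of the first use case (how well players progress and where problems occur), the sketch below aggregates hypothetical `level_start` and `level_complete` telemetry events into a per-level completion rate and flags levels that fall below a threshold. It is plain Python standing in for a stateful aggregation you might run in Kafka Streams or ksqlDB; the event fields and the 50% threshold are assumptions.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Telemetry(NamedTuple):
    player_id: str
    event_type: str  # hypothetical values: "level_start" or "level_complete"
    level: int


def completion_rates(events: Iterable[Telemetry]) -> dict:
    """Completion rate per level = completions / starts."""
    starts, completes = Counter(), Counter()
    for e in events:
        if e.event_type == "level_start":
            starts[e.level] += 1
        elif e.event_type == "level_complete":
            completes[e.level] += 1
    return {lvl: completes[lvl] / starts[lvl] for lvl in starts}


def stuck_levels(events, threshold: float = 0.5) -> list:
    """Levels where fewer than `threshold` of attempts end in a completion."""
    rates = completion_rates(events)
    return sorted(lvl for lvl, rate in rates.items() if rate < threshold)
```

A level that shows up in `stuck_levels` is a candidate for a live-operations tweak, such as a server-side difficulty change or a time-limited reward.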

Most use cases require processing big data streams in real time.

Big Fish Games

Big Fish Games is an excellent example of live operations leveraging Apache Kafka and its ecosystem. They develop casual and mid-core games, with 2.5 billion installs on smartphones and computers in 150 countries, across more than 450 unique mobile games and more than 3,500 unique PC games.

Live operations use real-time analytics of game telemetry data. For instance, Big Fish Games increases revenue while the player plays the game by making context-specific recommendations for in-game purchases in real time. Kafka Streams is used for continuous, real-time data correlation at scale.
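This post does not show Big Fish Games' code, so what follows is only a hypothetical sketch of the general pattern: keep per-player state as events stream in, and emit an offer when the context matches a rule. The rule (offer a booster after three consecutive failures on the same level) and every name in the code are invented for illustration. In Kafka Streams, this kind of per-key state would typically live in a state store keyed by player ID.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class GameEvent:
    player_id: str
    level: int
    outcome: str  # hypothetical values: "fail" or "win"


@dataclass
class OfferEngine:
    failures_before_offer: int = 3
    # Per-player state: (current level, consecutive failures on that level).
    state: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    def process(self, event: GameEvent) -> Optional[str]:
        """Update per-player state and return an offer message if the rule fires."""
        level, fails = self.state.get(event.player_id, (event.level, 0))
        if event.level != level:
            fails = 0  # the player moved to another level: reset the counter
        if event.outcome == "fail":
            fails += 1
        else:
            fails = 0
        self.state[event.player_id] = (event.level, fails)
        # Fire exactly once, when the streak of failures reaches the limit.
        if fails == self.failures_before_offer:
            return f"offer:booster:{event.player_id}:level{event.level}"
        return None
```

Firing only when the counter equals the limit (rather than exceeds it) avoids spamming the same player with repeated offers while they keep failing.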

Check out the details in the Kafka Summit Talk "How Big Fish Games Developed Real-Time Analytics."

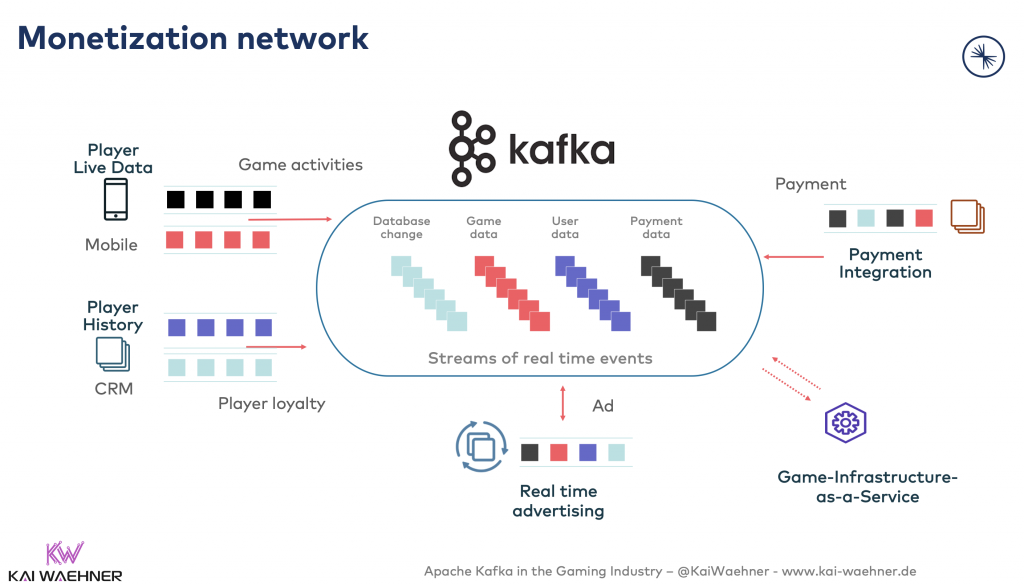

Monetization Network

Monetization networks are a fundamental component in most gaming companies. Use cases include:

- In-game advertising

- Micro-transactions and in-game purchases: Sell skins, upgrade to the next level...

- Game infrastructure as a service: Multi-platform and store integration, matchmaking, advertising, player identity and friends, cross-play, lobbies, leaderboards, achievements, game analytics, etc.

- Partner network: Cross-sell game data, game SDK, game analytics, etc.

A monetization network looks like the following:

Unity Ads

Unity is a fantastic example. The company provides a real-time 3D development platform. In 2019, content made with Unity was installed 33 billion times, reaching 3 billion devices worldwide.

Unity operates one of the largest monetization networks in the world:

- Reward players for watching ads

- Incorporate banner ads

- Incorporate augmented reality (AR) ads

- Playable ads

- Cross-promotions

Unity is a data-driven company:

- Processes about half a million events per second on average

- Handles millions of dollars of monetary transactions

- Data infrastructure based on Confluent Platform, Confluent Cloud, and Apache Kafka

A single data pipeline provides the foundational infrastructure for analytics, R&D, monetization, cloud services, etc., for real-time and batch processing leveraging Apache Kafka:

- Real-time monetization network

- Feed machine learning models in real-time

- Data lake latency reduced from two days to 15 minutes
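The "single pipeline, many consumers" idea rests on a core Kafka property: a topic is an append-only log, and each consumer group tracks its own committed offset, so real-time and batch consumers read the same events at their own pace without affecting each other. The toy in-memory model below illustrates only that property; it is not Kafka's client API, and the group names used with it are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class ToyTopic:
    """In-memory stand-in for a single-partition Kafka topic (illustration only)."""

    def __init__(self) -> None:
        self.log: List[dict] = []
        # Consumer group -> next offset that group will read.
        self.committed: Dict[str, int] = defaultdict(int)

    def produce(self, event: dict) -> int:
        """Append an event and return its offset."""
        self.log.append(event)
        return len(self.log) - 1

    def poll(self, group: str, max_records: int = 100) -> List[dict]:
        """Return the next batch for one group and advance only that group's offset."""
        start = self.committed[group]
        batch = self.log[start:start + max_records]
        self.committed[group] = start + len(batch)
        return batch

    def seek(self, group: str, offset: int) -> None:
        """Rewind a group, e.g. to replay history after fixing a bug downstream."""
        self.committed[group] = offset
```

For example, a `realtime-monetization` group can be fully caught up while a `data-lake-loader` group reads the same log in smaller batches; rewinding one group with `seek` does not disturb the other.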

If you want to learn about their success story of migrating this platform from self-managed Kafka to fully managed Confluent Cloud, read Unity's post on the Confluent Blog: "How Unity Uses Confluent for Real-Time Event Streaming at Scale."

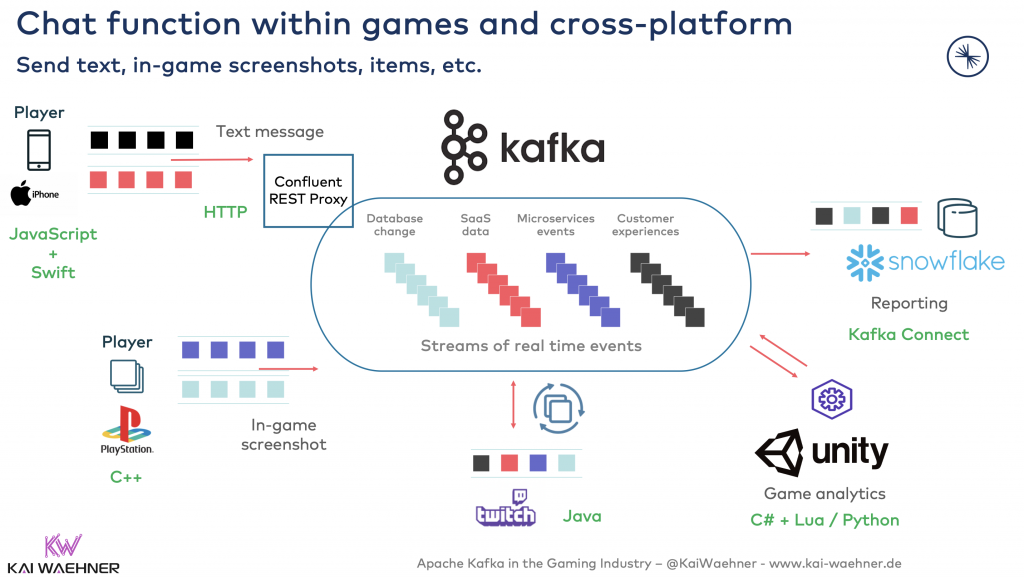

Chat Function Within Games and Cross-Platform

Building a chat platform is not a trivial task in today's world. Chatting means sending text, in-game screenshots, in-game items, and more. Millions of events have to be processed in real time. Cross-platform chat platforms need to support various technologies, programming languages, and communication paradigms such as real-time, batch, and request-response.

Kafka's characteristics make it the perfect infrastructure for chat platforms: high scalability, real-time processing, and true decoupling, including backpressure handling.
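One detail that matters for chat: Kafka guarantees ordering only within a partition, and the default partitioner sends records with the same key to the same partition. Using the chat room (or conversation) ID as the message key therefore keeps each room's messages in order while different rooms spread across partitions. The sketch below mimics that behavior with a stable hash; note that Kafka's Java client actually hashes keys with murmur2, so this CRC32 stand-in will not produce the same partition numbers, and the six-partition topic is a hypothetical size.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_PARTITIONS = 6  # hypothetical topic size


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Stable key -> partition mapping (CRC32 here; Kafka's default uses murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(messages: List[Tuple[str, str]]) -> Dict[int, List[Tuple[str, str]]]:
    """Append (room_id, text) messages to partitions in arrival order."""
    partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for room_id, text in messages:
        partitions[partition_for(room_id)].append((room_id, text))
    return partitions
```

Because all messages of a room land in one partition, any consumer reading that partition sees them in the order they were sent, no matter how many rooms share the topic.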

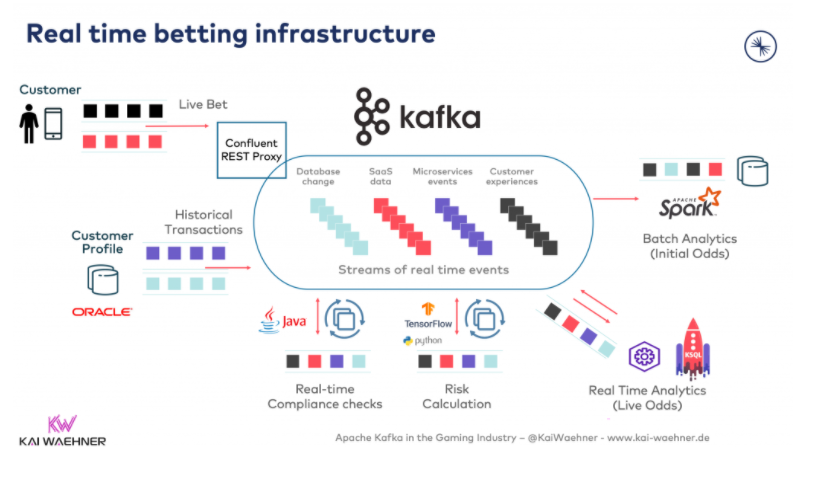

Payment Engine

Payment infrastructure needs to be real-time, scalable, reliable, and technology-independent, whether your solution is built for games, betting, casinos, 3D game engines, video streaming, or any other third-party services.

Most payment engines in the gaming industry are built on top of Apache Kafka. Many of these companies provide public information about their real-time betting infrastructure. Here is one example of an architecture:

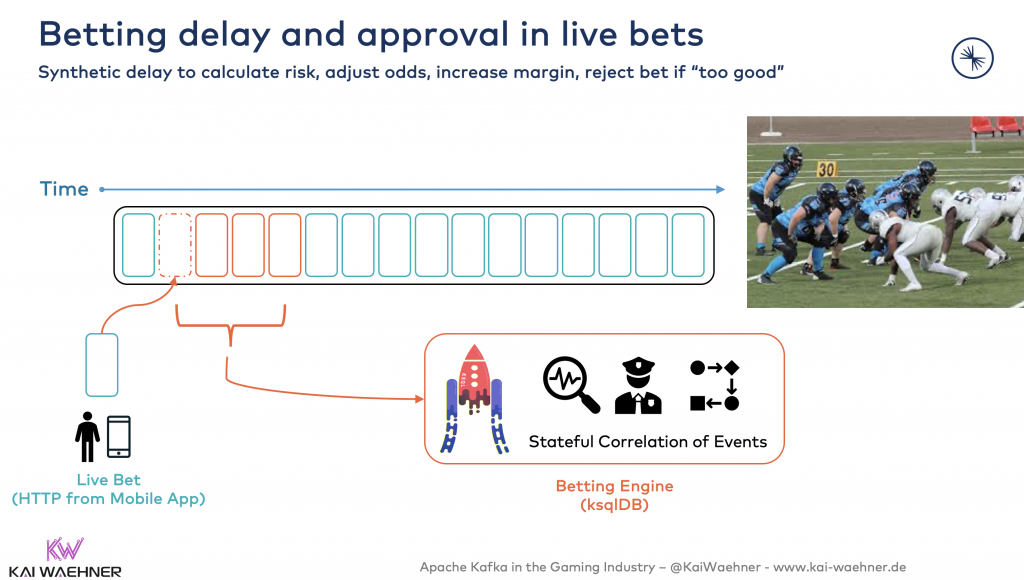

One example use case is a bet delay and approval system for live betting. Stateful streaming analytics is required to improve the margin:

Kafka-native technologies like Kafka Streams or ksqlDB enable a straightforward implementation of these scenarios.
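A live-bet delay can be sketched as a small state machine: each incoming bet is held for a delay period, and if a market-moving event (for example, a goal) for the same match arrives during that period, the bet is rejected; otherwise it is accepted when the delay expires. The Python below is a simplified, hypothetical model of that logic (the 5-second delay, field names, and method names are assumptions), not a production approval system and not a Kafka Streams API. The `advance` method plays a role similar in spirit to a Kafka Streams punctuator.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Bet:
    bet_id: str
    match_id: str
    placed_at: float  # seconds


class BetDelayProcessor:
    def __init__(self, delay: float = 5.0) -> None:
        self.delay = delay
        self.pending: Dict[str, Bet] = {}
        # match_id -> times of market-moving events (a real system would expire old ones).
        self.market_events: Dict[str, List[float]] = defaultdict(list)
        self.decisions: List[Tuple[str, str]] = []

    def on_bet(self, bet: Bet) -> None:
        self.pending[bet.bet_id] = bet

    def on_market_event(self, match_id: str, at: float) -> None:
        self.market_events[match_id].append(at)

    def advance(self, now: float) -> None:
        """Decide every pending bet whose delay period has elapsed."""
        for bet_id, bet in list(self.pending.items()):
            if now - bet.placed_at < self.delay:
                continue  # still inside the delay period
            rejected = any(
                bet.placed_at <= t <= bet.placed_at + self.delay
                for t in self.market_events[bet.match_id]
            )
            self.decisions.append((bet_id, "rejected" if rejected else "accepted"))
            del self.pending[bet_id]
```

Keeping all market events per match (rather than only the latest) ensures a later event cannot hide an earlier one that fell inside a bet's delay window.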

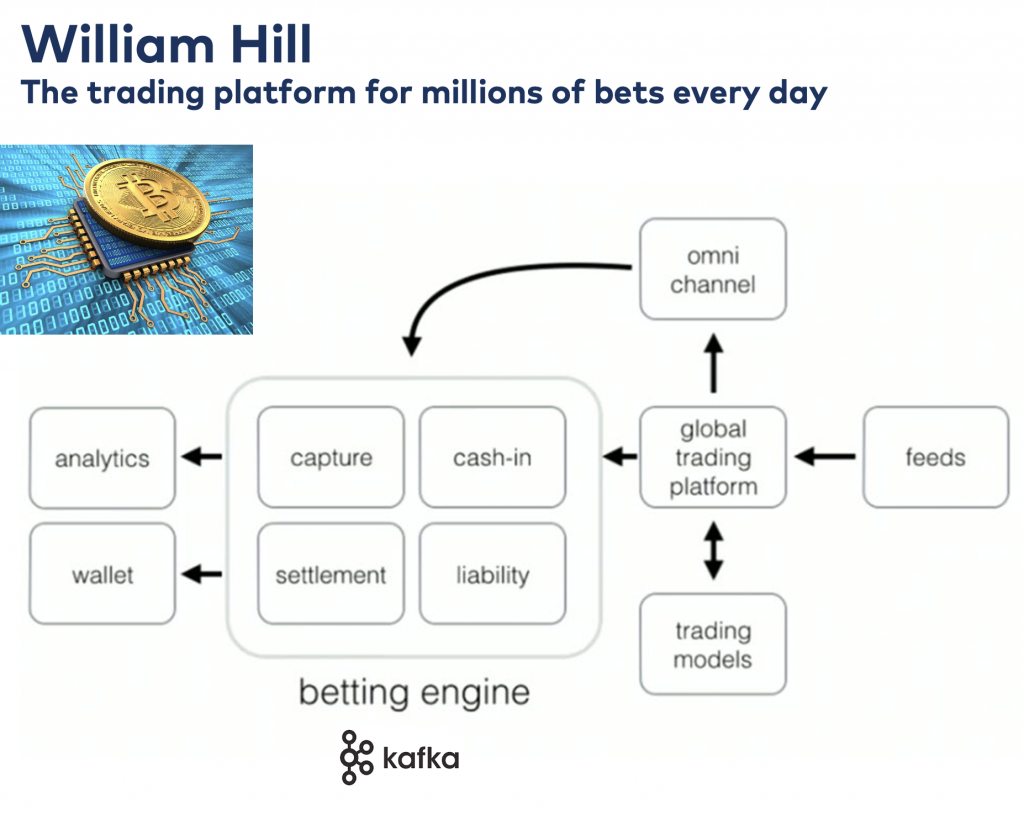

William Hill: A Secure and Reliable Real-time Microservice Architecture

William Hill went from a monolith to a flexible, scalable microservice architecture:

- Kafka as central, reliable streaming infrastructure

- Kafka for messaging, storage, cache, and processing of data

- Independent decoupled microservices

- Decoupling and replayability

- Technology independence

- High throughput + low latency + real-time

William Hill's trading platform leverages Kafka as the heart of all events and transactions:

- "Process-to-process" execution in real-time

- Integration with analytic models for real-time machine learning

- Various data sources and data sinks (real-time, batch, request-response)

Bookmaker Business Equals Banking Business (Including Legacy Middleware and Mainframes)

Not everyone can start from a greenfield. Integrating, offloading, and replacing legacy middleware and mainframes are common scenarios.

Betting is usually a regulated market. PII is often processed on premises in a regional data center, while non-PII data can be offloaded to the cloud for analytics.
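A minimal sketch of that split: the full record stays on premises, while the copy bound for the cloud drops direct identifiers and carries a pseudonymous player key instead. The field names, the list of PII fields, and the use of a keyed hash (HMAC-SHA256) for pseudonymization are assumptions for illustration. What counts as PII, and whether pseudonymized data may leave the region at all, depends on your regulator.

```python
import hashlib
import hmac
from typing import Dict, Tuple

PII_FIELDS = {"name", "email", "ip_address", "date_of_birth"}  # hypothetical schema


def split_event(event: Dict[str, object], secret: bytes) -> Tuple[Dict[str, object], Dict[str, object]]:
    """Return (on_prem_record, cloud_record) for one betting event."""
    on_prem = dict(event)  # the full record stays in the regional data center
    cloud = {k: v for k, v in event.items() if k not in PII_FIELDS and k != "player_id"}
    # Keyed hash: the same player always maps to the same pseudonym,
    # but the ID cannot be recovered without the secret, which stays on premises.
    cloud["player_key"] = hmac.new(
        secret, str(event["player_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return on_prem, cloud
```

A stable pseudonym still lets cloud analytics correlate a player's activity over time, which a random token per event would not.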

Legacy technologies like the mainframe are monolithic, inflexible, and a crucial cost factor. I covered the relationship between Kafka and the mainframe in detail in another post.

And here is the story about Kafka vs. legacy middleware (MQ, ETL, ESB).

Streaming Analytics for Retention, Compliance, and Customer Experience

Data quality is critical for legal compliance and responsible gaming compliance. Client retention is vital to sustain engagement and revenue growth.

Plenty of real-time streaming analytics use cases exist in this environment. Some examples where Kafka-native frameworks like Kafka Streams or ksqlDB can provide the foundation for a reliable and scalable solution are:

- Player winning/losing streak

- Player conversion: from registration to wager (within x minutes)

- Game achievement of the player

- Fraud detection — e.g., payment windows

- Long-running windows per player over days/months

- Tournaments

- Incentivize unhappy players with additional free credit

- Reports to regulator — replay old events in a guaranteed order

- Geolocation to enable features, limitations, or commissions
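The first item in this list, a player's winning or losing streak, is a typical example of per-key state. Below is a hypothetical Python sketch of the logic (the result events, field names, and the alert length of 5 are assumptions). A Kafka Streams application would typically keep the same per-player state in a state store and emit an event whenever a streak reaches the threshold, for instance to trigger a responsible-gaming check.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple


class BetResult(NamedTuple):
    player_id: str
    won: bool


def streak_alerts(results: Iterable[BetResult], threshold: int = 5) -> List[Tuple[str, str, int]]:
    """Emit (player_id, 'win' or 'loss', length) whenever a streak reaches `threshold`."""
    # Per-player state: (outcome of the current streak, streak length).
    streaks: Dict[str, Tuple[bool, int]] = {}
    alerts = []
    for r in results:
        last, length = streaks.get(r.player_id, (r.won, 0))
        # Same outcome extends the streak; a different outcome starts a new one.
        length = length + 1 if last == r.won else 1
        streaks[r.player_id] = (r.won, length)
        if length == threshold:
            alerts.append((r.player_id, "win" if r.won else "loss", length))
    return alerts
```

The same state-per-key shape also covers the long-running windows per player mentioned above; only the retention of the state changes.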

Stream processing is also relevant for many other use cases, including fraud detection, as you will see in the next section.

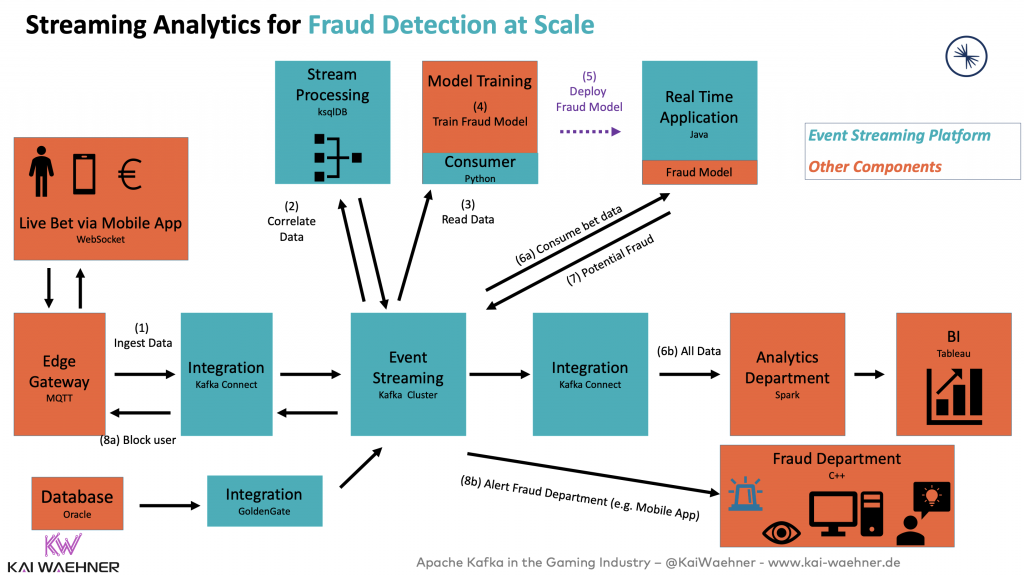

Fraud Detection in Gaming With Kafka

Real-time analytics for detecting anomalies is a widespread scenario in any payment infrastructure. In gaming, two different kinds of fraud exist:

- Cheating: Fake accounts, bots, etc.

- Financial fraud: match-fixing, stolen credit cards, etc.

Here is an example of doing streaming analytics for fraud detection with Kafka, its ecosystem, and machine learning:

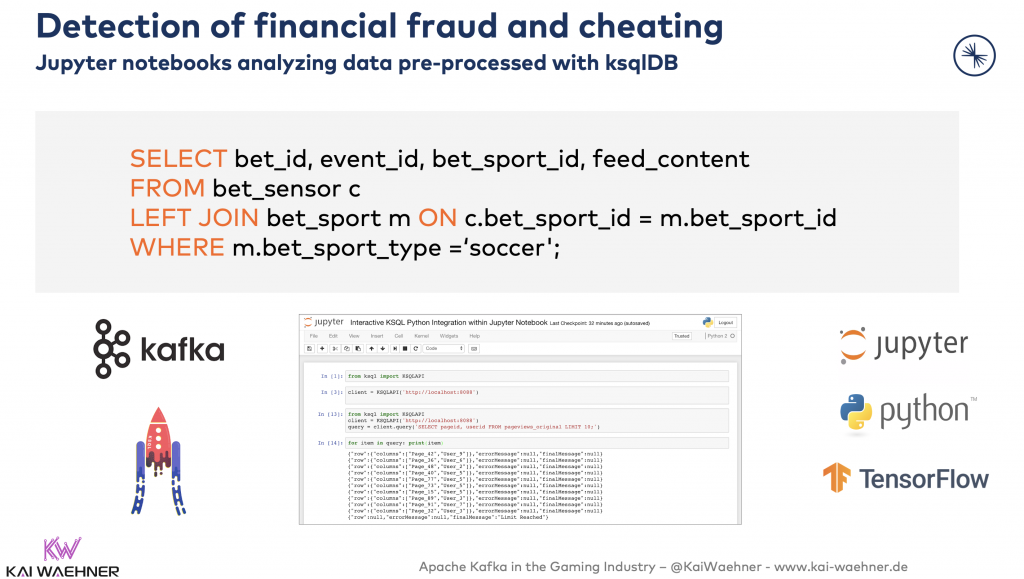

Here is an example of detecting financial fraud and cheating with Jupyter notebooks and Python to analyze data pre-processed with ksqlDB:

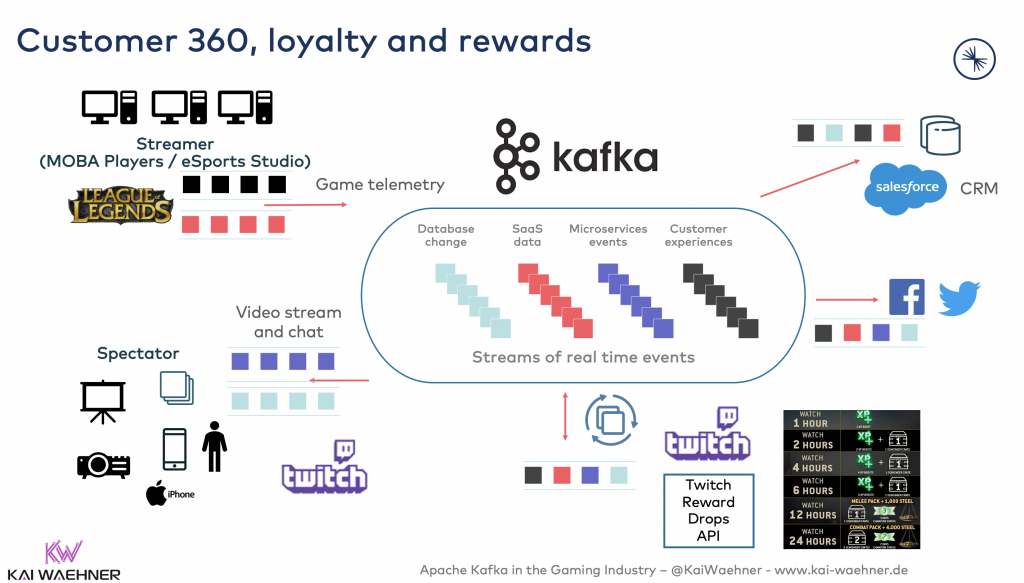
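Alongside the ML-based approaches in the figures above, a simple rule often runs first in the stream: a velocity check over a sliding time window. The sketch below flags an account that uses more than a given number of distinct payment cards within 10 minutes. It is a hypothetical, plain-Python illustration (field names, window length, and limit are assumptions), not code from the architectures shown.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, NamedTuple, Tuple


class Payment(NamedTuple):
    account_id: str
    card_fingerprint: str
    ts: float  # seconds


def velocity_flags(payments: List[Payment], window: float = 600.0, max_cards: int = 3) -> List[Tuple[str, float]]:
    """Flag (account_id, ts) when an account uses more than `max_cards`
    distinct cards within `window` seconds.

    Assumes payments arrive ordered by timestamp.
    """
    recent: Dict[str, Deque[Payment]] = defaultdict(deque)
    flags = []
    for p in payments:
        q = recent[p.account_id]
        q.append(p)
        while q and p.ts - q[0].ts > window:
            q.popleft()  # evict payments that fell out of the sliding window
        distinct = {x.card_fingerprint for x in q}
        if len(distinct) > max_cards:
            flags.append((p.account_id, p.ts))
    return flags
```

Rules like this are cheap and explainable; their output can also serve as a feature for the machine learning models in the architectures above.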

Customer 360: Recommendations, Loyalty System, Social Integration

Customer 360 is critical for real-time and context-specific acquisition, engagement, and retention. Use cases include:

- Real-time event streaming

- Game event triggers

- Personalized statistics and odds

- Player segmentation

- Campaign orchestration ("player journey")

- Loyalty system

- Rewards (e.g., upgrade, exclusive in-game content, beta keys for the announcement event)

- Avoid customer churn

- Cross-selling

- Social network integration

- Twitter, Facebook, and other social media sites

- Example: Candy Crush (I guess every Facebook user has seen ads for this game)

- Partner integration

- API Management

The following architecture depicts the relation between various internal and external components of a customer 360 solution:

Customer 360 at Sky Betting and Gaming

Sky Betting and Gaming has built a real-time streaming architecture for customer 360 use cases with Kafka's ecosystem.

When discussing why they chose Kafka-native frameworks like Kafka Streams instead of a zoo of technologies like Hadoop, Spark, Storm, and others, Kaerast states:

"Most of our streaming data is in the form of topics on a Kafka cluster. This means we can use tooling designed around Kafka instead of general streaming solutions with Kafka plugins/connectors.

"Kafka itself is a fast-moving target, with client libraries constantly being updated; waiting for these new libraries to be included in an enterprise distribution of Hadoop or any off the shelf tooling is not really an option. Finally, the data in our first use-case is user-generated and needs to be presented back to the user as quickly as possible."

Disney+ Hotstar: Telco-OTT for Millions of Cricket Fans in India

In India, people love cricket. Millions of users watch live streams on their smartphones. But they are not just watching: gambling is also part of the story. For instance, you can bet on the result of the next play. People compete with each other and can win rewards.

This infrastructure has to run at an extreme scale. Millions of actions have to be processed each second. No surprise that Disney+ Hotstar chose Kafka as the heart of this infrastructure:

IoT integration is often also part of such a customer 360 implementation. Use cases include:

- Live eSports events, TV, video streaming, and news stations

- Fan engagement

- Audience communication

- Entertaining features for Alexa, Google Home, or sports-specific hardware

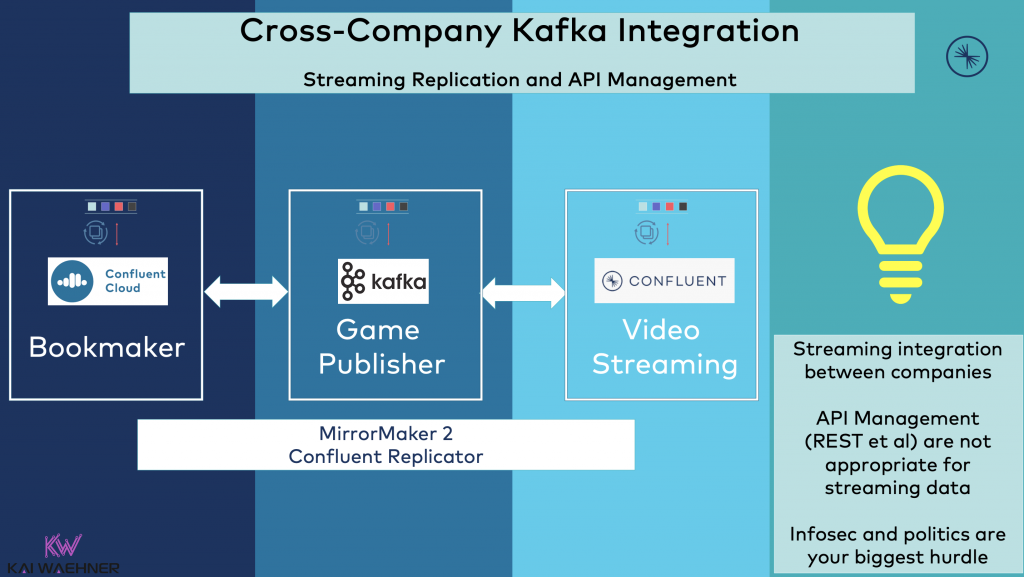

Cross-Company Kafka Integration

Last but not least, let's talk about a trend I see in many industries: Streaming replication across departments and companies.

Most companies in the gaming industry use event streaming with Kafka at the heart of their business. However, connecting to the outside world (i.e., other departments, partners, third-party services) is typically done via HTTP/REST APIs. A total anti-pattern that does not scale! Why not stream the data directly?

I see more and more companies moving to this approach.

API Management is an elaborate discussion on its own. Therefore, I have a dedicated blog post about the relation between Kafka and API Management.

Slides and Video: Kafka in the Gaming Industry

Here are the slides and on-demand video recording discussing Apache Kafka in the gaming industry in more detail:

The on-demand video recording can be watched here.

As you learned in this post, Kafka is used everywhere in the gaming industry, whether you focus on games, betting, or video streaming.

What are your experiences with modernizing the infrastructure and applications in the gaming industry? Did you or do you plan to use Apache Kafka and its ecosystem? What is your strategy? Let's connect on LinkedIn and discuss it!

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.
