Apache Kafka and SAP ERP Integration Options

Integration of Apache Kafka and SAP systems: an overview of the trade-offs of APIs, SDKs, tools, and Kafka Connect connectors for ECC, S/4HANA, and other SAP apps.


A question I get every week from customers across the globe is, "How can I integrate my SAP system with Apache Kafka?" This post explores various alternatives, including connectors, third-party tools, and custom glue code, and discusses the trade-offs between the different options.

After exploring what SAP is, I will discuss several integration options between Apache Kafka and SAP systems:

- Traditional middleware (ETL/ESB)

- Web services (SOAP/REST)

- 3rd party turnkey solutions

- Kafka-native connectivity with Kafka Connect

- Custom glue code using SAP SDKs

Disclaimer before you read on:

I am not an SAP expert. It is tough to stay up-to-date with the vast and complex ecosystem of SAP products, (re-)brands, versions, services, SDKs, and APIs. I apologize if some of the information below is inaccurate or outdated. Always double-check on the SAP website (if the links from Google still work; while researching this blog post, I ran into several pages that were "no longer available"). If you see any inaccurate or missing information, please let me know and I will update the blog post.

What is SAP?

SAP is a German multinational software corporation that makes enterprise software to manage business operations and customer relations.

It is quite interesting: Nobody asks how to integrate with IBM or Oracle. Instead, people ask more specifically how to integrate with IBM MQ, IBM DB2, IBM Mainframe (still very ambiguous), or any of the hundreds of other IBM products.

For SAP, people ask: "How can I integrate with SAP?" Let's clarify what SAP is before exploring integration options.

The company is primarily known for its ERP software. But if you check out the official "What is SAP?" page, you find out that SAP offers solutions across a wide range of areas:

- ERP and Finance

- CRM and Customer Experience

- Network and Spend Management

- Digital Supply Chain

- HR and People Engagement

- Experience Management

- Business Technology Platform

- Digital Transformation

- Small and Midsize Enterprises

- Industry Solutions

SAP's Software Portfolio

SAP's stack includes homegrown products like SAP ERP as well as acquisitions with their own codebases, including Ariba for the supplier network, hybris for e-commerce, Concur for travel and expense management, and Qualtrics for experience management.

Even if you talk only about SAP ERP, the situation is still not that simple. Most companies still run SAP ERP Central Component (ECC, formerly called SAP R/3), SAP's sophisticated (and aged) ERP product. ECC typically runs on a third-party relational database from Oracle, IBM, or Microsoft, while HANA is SAP's own in-memory database. The new ERP product is SAP S/4HANA (no, this is not just the famous in-memory database). Oh, and there is SAP S/4HANA Cloud. And before you wonder: No, it does not have the same feature set as the on-premise version!

Various interfaces exist depending on your product. An interface can be:

- an (awful) proprietary technology like BAPI or IDoc,
- an (okayish) standards-based web service API using SOAP or REST / HTTP,
- (non-scalable) JDBC database connectivity,
- or, if you are lucky, even a (scalable and real-time) event / messaging API.

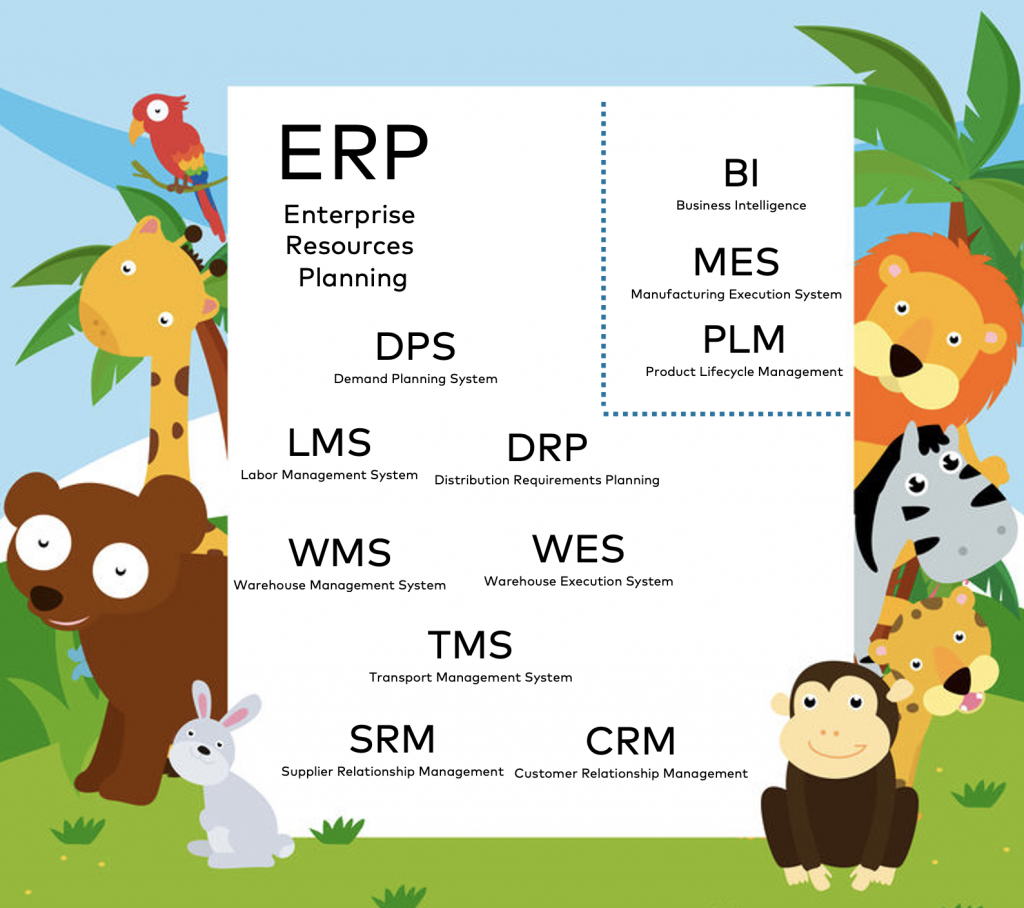

And sorry, we are still not done. Even the term "ERP system" can mean a whole zoo of products or components, depending on who you are talking to.

So, before you discuss the integration of your SAP product with Kafka, find out the product, version, and deployment infrastructure of your SAP components.

Different Integration Options Between Kafka and SAP

After this introduction, you hopefully understand that there is no silver bullet for SAP integration. The following sections explore different integration options between Kafka and SAP and their trade-offs. The main focus is on SAP ERP (the old ECC and the new S/4HANA). The overview is more generic, though, and also covers integration capabilities with other components and products.

Also, keep in mind that you typically need or want to integrate with a function or service. In most cases, direct integration with the data objects does not make much sense, because you would have to re-implement the mapping and denormalization between the data objects yourself. This is especially true for source integration, i.e., building pipelines from SAP to Kafka. For this reason, with SAP ERP you typically integrate with RFC / BAPI / IDoc or another web service interface.
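The sketch below shows what integrating with the data objects means for a single sales order. You read normalized header and item rows, and you must rebuild the business object yourself. It assumes ECC's sales document header table VBAK and item table VBAP. The field subset (VBELN, KUNNR, POSNR, MATNR, KWMENG) is heavily simplified and illustrative, and the `OrderEvent` shape is hypothetical. A BAPI or service interface would return this assembled view for you, including business logic that the tables alone do not reveal.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class OrderDenormalizer {
    private OrderDenormalizer() {}

    // Groups item rows by sales document number (VBELN) and attaches them to their header row.
    static List<OrderEvent> toEvents(List<VbakRow> headers, List<VbapRow> items) {
        Map<String, List<OrderLine>> linesByOrder = new LinkedHashMap<>();
        for (VbapRow item : items) {
            linesByOrder.computeIfAbsent(item.vbeln(), k -> new ArrayList<>())
                    .add(new OrderLine(item.posnr(), item.matnr(), item.kwmeng()));
        }
        List<OrderEvent> events = new ArrayList<>();
        for (VbakRow header : headers) {
            List<OrderLine> lines = new ArrayList<>(
                    linesByOrder.getOrDefault(header.vbeln(), List.of()));
            lines.sort(Comparator.comparing(OrderLine::item)); // stable item order
            events.add(new OrderEvent(header.vbeln(), header.kunnr(), List.copyOf(lines)));
        }
        return events;
    }

    public static void main(String[] args) {
        List<OrderEvent> events = toEvents(
                List.of(new VbakRow("0000004711", "0000100001")),
                List.of(new VbapRow("0000004711", "000020", "MAT-B", new BigDecimal("5")),
                        new VbapRow("0000004711", "000010", "MAT-A", new BigDecimal("2"))));
        events.forEach(System.out::println);
    }
}

// Simplified rows as they might be read from VBAK (header) and VBAP (items); illustrative only.
record VbakRow(String vbeln, String kunnr) {}
record VbapRow(String vbeln, String posnr, String matnr, BigDecimal kwmeng) {}

// Hypothetical business event: one self-contained order instead of normalized rows.
record OrderLine(String item, String material, BigDecimal quantity) {}
record OrderEvent(String orderId, String customerId, List<OrderLine> lines) {}
```

Even this toy version needs join, ordering, and null-handling decisions. Real ECC tables add many more fields, status tables, and customizing that you would otherwise have to reproduce.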

Traditional Middleware (ETL/ESB) for SAP Integration

Integration tools exist just for the sake of integrating different sources and sinks:

- Extract-Transform-Load (ETL) for batch integration, like Informatica, Talend, or SAP NetWeaver Process Integration

- Enterprise Service Bus (ESB) for integration via web services and messaging, like TIBCO BusinessWorks or Software AG webMethods

- Integration Platform as a Service (iPaaS) for cloud-native integration, similar to ETL/ESB tools but provided as a fully managed service, such as Boomi, MuleSoft, or SAP Cloud Integration (and some cloud-washed products from legacy middleware vendors).

Most traditional middleware products were built to integrate with the complex, proprietary systems of the last 20+ years, such as IBM Mainframe, EDIFACT, and, guess what, ERP systems like SAP ECC. In the meantime, all of them also offer a Kafka connector. Still, there are plenty of good reasons why many companies chose Kafka as a modern integration platform instead of traditional legacy middleware.

Pros:

- In place: Typically already in place, no new project is required.

- Maturity: Built over the years (because of the complexity), running in production for a long time already

- Tooling: Visual coding for the integration (required because of the complexity), directly map IDoc / BAPI / HANA / SOAP schemas to other data structures

- Integration: Not just connectors to the legacy systems but also Kafka for producing and consuming messages (due to market pressure)

Cons:

- Legacy: Products are as old as the source and sink systems

- Scalability: Monolithic, inflexible architecture

- Tight coupling: Integration has to be developed on and runs on the middleware, so there is no real decoupling and no domain-driven design (DDD) like with Kafka

- Licensing: High-cost per server, often already planned to be replaced (e.g., you can replace 100+ IBM MQ or TIBCO EMS servers with a single Kafka cluster)

- Point-to-point: No streaming architecture, most integrations are based on web services (even if the core under the hood is based on a messaging system)

TL;DR:

Traditional integration tools are mature and have great tooling, but limited scalability/flexibility and high licensing cost. Often a quick win as it is already running, and you just need to add the Kafka connector.

Custom Glue Code for Kafka Integration Using SAP SDKs

Writing your custom integration between SAP systems and Kafka is a viable option. This glue code typically leverages the same SDKs as third-party tools use under the hood:

- Legacy: SAP NetWeaver RFC SDK - a C/C++ interface for connecting to SAP systems from release R/3 4.6C up to today's SAP S/4HANA systems.

- Legacy: SAP Java Connector (SAP JCo) - the famous JCO.jar library - is a Java SDK for integration with SAP ECC / ERP (this is just a wrapper around the C/C++ SDK).

- Legacy: SAP ACO is an integrated ABAP component that is designed to consume RFC Services on remote ABAP systems.

- Legacy: JMS Adapter to integrate via the standard Java messaging API. A great option (if you get it running and working for your use case and functions). For instance, JMS integration can be done via SAP PI.

- Modern: SAP Cloud SDK allows developing applications with Java or JavaScript that communicate with SAP solutions and services such as SAP S/4HANA Cloud, SAP SuccessFactors, and others (the term 'Cloud' actually means 'Cloud-native' in this case, i.e., this SDK also works with SAP's on-premise products).

- Modern: SAP Cloud Platform Enterprise Messaging: S/4HANA provides an asynchronous messaging interface (running on Solace on Cloud Foundry under the hood). Different messaging standards are supported, including AMQP 1.0 and JMS (depending on the specific product you look at). Some examples demonstrate how to connect via the Java client using the JMS API.
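Whichever SDK you pick, custom glue code tends to follow the same loop:

1. Read a batch from the SAP side.
2. Publish it to Kafka.
3. Only then acknowledge it on the SAP side.

With this order, a crash leads to redelivery rather than data loss, which gives at-least-once delivery. The sketch below shows that loop in plain Java. `SapEventSource` and `EventSink` are hypothetical interfaces standing in for a JCo, JMS, or Cloud SDK client and for a Kafka producer. They are not part of any SAP or Kafka API. With the real Kafka client, a blocking send could be `producer.send(record).get()`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

final class SapToKafkaBridge {
    private final SapEventSource source;
    private final EventSink sink;
    private final String topic;

    SapToKafkaBridge(SapEventSource source, EventSink sink, String topic) {
        this.source = source;
        this.sink = sink;
        this.topic = topic;
    }

    // Forwards one batch and acknowledges it on the SAP side only after every send succeeded.
    // If send() throws, nothing is acknowledged and the batch is redelivered (at-least-once).
    int forwardBatch(int maxEvents) {
        List<String> batch = source.poll(maxEvents);
        for (String event : batch) {
            sink.send(topic, event);
        }
        source.acknowledge(batch.size());
        return batch.size();
    }

    public static void main(String[] args) {
        InMemorySource source = new InMemorySource();
        source.pending.addAll(List.of("order-4711-created", "order-4712-created"));
        SapToKafkaBridge bridge = new SapToKafkaBridge(
                source, (t, e) -> System.out.println(t + " <- " + e), "sap.orders");
        System.out.println("forwarded: " + bridge.forwardBatch(10));
    }
}

// Hypothetical abstraction over the SAP side (e.g. a JMS queue or an RFC-based change log).
interface SapEventSource {
    List<String> poll(int maxEvents); // returns pending events without removing them
    void acknowledge(int count);      // removes the first `count` pending events
}

// Hypothetical abstraction over a Kafka producer: send() should block until the broker
// confirms the write and throw an unchecked exception on failure.
interface EventSink {
    void send(String topic, String event);
}

// In-memory stand-in for the SAP side, only used to exercise the bridge.
final class InMemorySource implements SapEventSource {
    final Deque<String> pending = new ArrayDeque<>();

    @Override
    public List<String> poll(int maxEvents) {
        List<String> out = new ArrayList<>();
        for (String e : pending) {
            if (out.size() == maxEvents) break;
            out.add(e);
        }
        return out;
    }

    @Override
    public void acknowledge(int count) {
        for (int i = 0; i < count; i++) pending.removeFirst();
    }
}
```

Because duplicates are possible after a failure, downstream consumers should be able to handle them, for example by deduplicating on a business key.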

Pros:

- Flexibility: Custom coding allows you to implement precisely what you need.

Cons:

- Maintenance: No vendor support; you develop, maintain, operate, and support everything yourself.

- Point-to-point: No streaming architecture, most integrations are based on web services (even if the core under the hood is based on a messaging system).

TL;DR:

"Build vs. Buy" always has trade-offs. I have only seen custom glue code for SAP integration in the field if no solution from a vendor was available and affordable. SAP Cloud Platform Enterprise Messaging is the best integration pattern for Kafka.

SOAP/REST Web Services for SAP Integration

The last 15 years brought us web services for building a Service-oriented Architecture (SOA) to integrate applications. A web service typically uses SOAP or REST / HTTP as technology. I will not start yet another FUD war here. Both have their use cases and trade-offs.

Pros:

- Standards-based: Different SDKs, products, and services talk the same language (at least in theory; true for HTTP, not so true for SOAP); most middleware tools have proper support for building HTTP services.

- Simplicity (HTTP): Well-understood, supported by most programming languages and APIs, established for many use cases - middleware is just yet another one.

Cons:

- Point-to-point: No streaming architecture, most integrations are based on web services (even if the core under the hood is based on a messaging system).

- Tight coupling: Integration has to be developed on and runs on the middleware, so there is no real decoupling and no domain-driven design (DDD) like with Kafka.

- Complexity (SOAP): SOAP/WSDL is just the tip of the iceberg! Check out the list of WS-* standards to understand why this is often called the "WS star hell".

- Missing features (REST / HTTP): Representational state transfer (REST) is an architectural style, but most people mean synchronous HTTP communication. Most middleware tools (and most other applications) implement only a small fraction of the full standard. HTTP is an excellent standard, but all the tooling and features need to be built on top of it.

- Only indirect support: Several SAP products do not provide open interfaces. Although they use SOAP or HTTP under the hood, you are forced to use the licensed tooling to create web services. Examples include SAP Business Connector (a restricted-license version of webMethods Integration Server), SAP NetWeaver Process Integration (PI), SAP Process Orchestration (PO), Cloud Platform Integration (CPI), and SAP Cloud Integration.

TL;DR:

SOAP and REST web services work well for point-to-point communication and have good tool support. Both have their trade-offs, so make sure to choose the right one if your SAP product provides both interfaces. Unfortunately, you will often not have a choice. Even worse: You cannot use just any tool but are forced to use the licensed SAP tool or wrapper interface. These (legacy) integration products were not designed for large-scale, high-volume, continuous data processing.

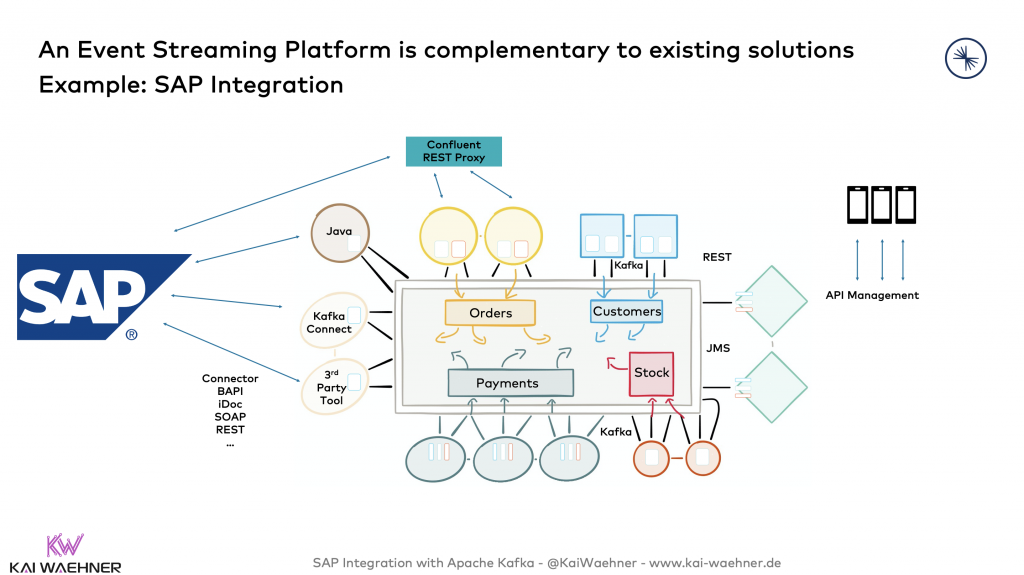

For direct HTTP(S) communication with Kafka, Confluent REST Proxy is an excellent option for producing, consuming, and administration from any HTTP client (including custom SAP applications). For instance, SAP Cloud Platform Integration (CPI) can use this integration pattern to integrate SAP and Kafka.
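The Java sketch below shows what such an HTTP call looks like. It only builds a produce request for the REST Proxy v2 API and does not send it. The request is `POST /topics/{topic}` with content type `application/vnd.kafka.json.v2+json` and a `records` array in the body. The host `rest-proxy:8082`, the topic `sap.orders`, and the payload are hypothetical. Newer REST Proxy releases also offer a v3 API with a different request format, so check which version you run.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

final class RestProxyProduce {
    private RestProxyProduce() {}

    // Builds a produce request for the REST Proxy v2 API (JSON embedded format).
    static HttpRequest request(String baseUrl, String topic, String key, String valueJson) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/topics/" + topic))
                .header("Content-Type", "application/vnd.kafka.json.v2+json")
                .header("Accept", "application/vnd.kafka.v2+json")
                .POST(HttpRequest.BodyPublishers.ofString(body(key, valueJson), StandardCharsets.UTF_8))
                .build();
    }

    // One record with a string key; valueJson must already be a valid JSON value.
    static String body(String key, String valueJson) {
        return "{\"records\":[{\"key\":" + jsonString(key) + ",\"value\":" + valueJson + "}]}";
    }

    // Minimal JSON string escaping (quotes, backslashes, control characters).
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    public static void main(String[] args) {
        String value = "{\"orderId\":\"4711\",\"status\":\"CREATED\"}";
        HttpRequest req = request("http://rest-proxy:8082", "sap.orders", "4711", value);
        System.out.println(req.method() + " " + req.uri());
        System.out.println(body("4711", value));
        // To send it: HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString())
    }
}
```

Keying records by a business identifier such as the order number keeps all events for one order in the same partition, and therefore in order.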

SAP-specific Third-Party Tools for Kafka

SAP integration is a huge market globally. Plenty of software vendors have built specific integration software for SAP systems.

A few examples I have seen in the field recently:


- ASAPIO: Their Cloud Integrator is designed for SAP ERP and SAP S/4HANA. It enables the replication of required data between system environments based on SAP NetWeaver technology.

- Advantco: The Kafka Adapter is fully integrated with the SAP Adapter Framework, which means the Kafka connections can be configured, managed, and monitored using the standard SAP PI/PO & CPI tools. Includes support for Confluent Schema Registry (+ Avro / Protobuf).

- Workato: The company provides SAP OData integration to Kafka and various pre-built recipe templates.

These are just three examples. Many more exist for on-premise, cloud, and hybrid integration with different SAP products and interfaces.

Some of these tools are natively integrated into SAP's integration tools instead of being completely independent runtimes. This can be good or bad. One advantage of this approach is that you can leverage the SAP-native features for complex IDoc / BAPI mappings and the integrated third-party connector for Kafka communication.
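Some of these tools support Confluent Schema Registry (see the Advantco entry above). On the wire, the Confluent serializers do not embed the full schema in every record. Instead, they prefix the serialized bytes with a magic byte `0` and a 4-byte big-endian schema ID, which consumers use to fetch the schema. The sketch below only illustrates that framing. In practice you would use the Confluent serializers (e.g. `KafkaAvroSerializer`) instead of hand-rolled code. For Protobuf, the serializer also writes message indexes after the schema ID, which this sketch does not cover.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class ConfluentWireFormat {
    static final byte MAGIC_BYTE = 0;
    private static final int HEADER_SIZE = 5; // 1 magic byte + 4-byte schema ID

    private ConfluentWireFormat() {}

    // Prepends the magic byte and the schema ID to an already serialized payload.
    static byte[] frame(int schemaId, byte[] payload) {
        return ByteBuffer.allocate(HEADER_SIZE + payload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId) // ByteBuffer is big-endian by default
                .put(payload)
                .array();
    }

    // Reads the schema ID that a consumer would look up in Schema Registry.
    static int schemaId(byte[] message) {
        if (message.length < HEADER_SIZE || message[0] != MAGIC_BYTE) {
            throw new IllegalArgumentException("not in Schema Registry wire format");
        }
        return ByteBuffer.wrap(message, 1, 4).getInt();
    }

    // Returns the serialized payload (e.g. Avro binary) that follows the header.
    static byte[] payload(byte[] message) {
        schemaId(message); // validates the header
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }

    public static void main(String[] args) {
        byte[] framed = frame(42, new byte[] {10, 20, 30});
        System.out.println("schema id: " + schemaId(framed) + ", payload bytes: " + payload(framed).length);
    }
}
```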

Pros:

- Turnkey solution: Built for SAP integration, often combined with other additional helpful features beyond just doing the connectivity, more lightweight than traditional generic middleware.

- Focus: Many 3rd party solutions focus on a few specific use cases and/or products and technologies. It is much harder to integrate with "SAP in general" than focusing on a particular niche, e.g., Human Resources processes and related HTTP interfaces.

- Maturity: Built over the years

- Tooling: Visual coding for the integration (required because of the complexity), directly map IDoc / BAPI / HANA / SOAP schemas to other data structures

- Integration: Not just connectors to the legacy systems but also modern technologies such as Kafka

Cons:

- Scalability: Often monolithic, inflexible architecture (but focusing on SAP integration only, therefore often "okayish")

- Tight coupling: Integration has to be developed on and runs on the tool. However, it is separated from other middleware, which still enables decoupling and domain-driven design (DDD) in conjunction with Kafka

- Licensing: Moderate cost per server (typically cheaper than the traditional generic middleware)

- Point-to-point: No streaming architecture, most integrations are based on web services (even if the core under the hood is based on a messaging system)

TL;DR:

A turnkey solution is an excellent choice in many scenarios. I see this pattern of combining Kafka with a dedicated third-party solution for SAP integration very often. I like it because the architecture is still decoupled, but no vast effort is required for a (complex) SAP integration. And there is still hope that SAP itself will release a nice Kafka-native integration platform.

Kafka-native SAP Integration with Kafka Connect

Kafka Connect, an open-source component of Apache Kafka, is a framework for connecting Kafka with external systems such as databases, key-value stores, search indexes, and file systems.

Kafka Connect connectors are available for SAP ERP databases: the Confluent HANA connector and SAP's HANA connector for S/4HANA, and the Confluent JDBC connector for R/3 / ECC running on Oracle / IBM DB2 / MS SQL Server.
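For the ECC case, the Java sketch below assembles the JSON body for a JDBC source connector definition, to be sent to the Kafka Connect REST API (`POST /connectors`). The configuration keys are those of the Confluent JDBC source connector. The JDBC URL, the user, the view name `ZV_SALES_ORDERS`, and its `CHANGED_AT` column are all hypothetical. I assume a custom database view because many ECC tables have no single timestamp column suitable for incremental polling. The `${file:...}` password placeholder assumes a `FileConfigProvider` is configured on the Connect worker.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class JdbcSourceConfig {
    private JdbcSourceConfig() {}

    // Config for the Confluent JDBC source connector, polling one table or view by timestamp.
    static Map<String, String> config(String jdbcUrl, String user, String table, String timestampColumn) {
        Map<String, String> c = new LinkedHashMap<>();
        c.put("connector.class", "io.confluent.connect.jdbc.JdbcSourceConnector");
        c.put("connection.url", jdbcUrl);
        c.put("connection.user", user);
        // Placeholder resolved at runtime by a FileConfigProvider, so the secret is not stored here.
        c.put("connection.password", "${file:/etc/kafka/secrets/sap-db.properties:password}");
        c.put("table.whitelist", table);
        c.put("mode", "timestamp");
        c.put("timestamp.column.name", timestampColumn);
        c.put("topic.prefix", "sap.ecc.");
        c.put("poll.interval.ms", "10000");
        c.put("tasks.max", "1");
        return c;
    }

    // JSON body for POST /connectors; assumes values contain no characters that need JSON escaping.
    static String createRequestBody(String name, Map<String, String> config) {
        String entries = config.entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\":\"" + e.getValue() + "\"")
                .collect(Collectors.joining(","));
        return "{\"name\":\"" + name + "\",\"config\":{" + entries + "}}";
    }

    public static void main(String[] args) {
        Map<String, String> cfg = config("jdbc:oracle:thin:@//ecc-db.example.com:1521/ECC",
                "KAFKA_READONLY", "ZV_SALES_ORDERS", "CHANGED_AT");
        System.out.println(createRequestBody("sap-ecc-sales-orders", cfg));
    }
}
```

With `table.whitelist` and `topic.prefix` set as above, records land in the topic `sap.ecc.ZV_SALES_ORDERS`. This is still table-level access, with the drawbacks listed in the cons.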

Pros:

- Kafka-native: Kafka under the hood, providing real-time processing for high volumes of data with high scalability and reliability.

- Simplicity: Just one infrastructure for messaging and data integration, much easier to develop, test, operate, scale, and license than using different frameworks or products (e.g., Kafka for messaging plus an ESB for data integration).

- Real decoupling: Kafka's architecture uses smart endpoints and dumb pipes by design, one of the key design principles of microservices. Not just for the applications, but also for the integration components. Leverage all the benefits of a domain-driven architecture for your Kafka-native middleware.

- Custom connectors: Kafka Connect provides an open template. If no connector is available, you (or your favorite system integrator or Kafka-vendor) can build an SAP-specific connector once, and you can roll it out everywhere.

Cons:

- Only database connectors: At the time of writing, no connectors are available beyond database integration via JDBC.

- Anti-pattern of direct database access: In most cases, you want or need to integrate with a function or service, not with the data objects. Often, the database admin will not grant you direct database access anyway.

- Efforts: Build your own SAP-native (i.e., non-JDBC) connector or ask (and pay) your favorite SI or Kafka vendor.

TL;DR:

Kafka Connect is a great framework and is used in most Kafka architectures for various good reasons. For SAP integration, the situation is different because no connectors are available beyond direct database access. It took third-party vendors many years to implement RFC / BAPI / IDoc integration in their tools. Such an implementation will probably not happen again for Kafka, because it is very complex and these proprietary legacy interfaces are dying anyway.

A Kafka Connect connector for SAP Cloud Platform Enterprise Messaging using its Java client would be feasible and would be the best option. I assume we will see such a connector on the market sooner or later.

Embedded Kafka in SAP Products

We have seen various integration options between SAP and Kafka. Unfortunately, all of them are based on the principle of "data at rest", in contrast to Kafka processing "data in motion". The closest fit so far is integration via SAP Cloud Platform Enterprise Messaging, because it at least provides an asynchronous messaging API.

The real added value comes when Kafka is leveraged not just for real-time messaging but for event streaming. Kafka provides a combination of messaging, data integration, data processing, and real decoupling using its distributed storage infrastructure.
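The distributed storage is what separates event streaming from point-to-point messaging. The toy model below is not Kafka's API, and all names in it are hypothetical. It sketches the idea for a single partition. Producers append to a retained log, and each consumer group tracks its own offset. As a result, a new consumer such as an analytics service can be added, or can replay history, without any change to the SAP-facing producer.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of a single Kafka partition: a retained, append-only log plus per-group offsets.
final class ToyPartition {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> committedOffsets = new HashMap<>();

    // Producers only append; they do not know which consumers will read the event.
    int append(String event) {
        log.add(event);
        return log.size() - 1; // offset of the new event
    }

    // Each group reads from its own offset; reading does not remove anything from the log.
    List<String> poll(String group, int maxEvents) {
        int from = committedOffsets.getOrDefault(group, 0);
        int to = Math.min(log.size(), from + maxEvents);
        List<String> events = new ArrayList<>(log.subList(from, to));
        committedOffsets.put(group, to); // commit right away to keep the sketch short
        return events;
    }

    // A group can rewind and reprocess history, e.g. after fixing a bug in its logic.
    void seek(String group, int offset) {
        committedOffsets.put(group, Math.max(0, Math.min(offset, log.size())));
    }

    public static void main(String[] args) {
        ToyPartition orders = new ToyPartition();
        orders.append("order-4711-created");
        orders.append("order-4711-shipped");
        System.out.println("billing:   " + orders.poll("billing", 10));
        System.out.println("analytics: " + orders.poll("analytics", 1));
        orders.seek("billing", 0);
        System.out.println("billing replay: " + orders.poll("billing", 10));
    }
}
```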

Native Event Streaming with Kafka in SAP Products

Interestingly, some of SAP's acquisitions leverage Kafka under the hood for event streaming. Two public examples:

- SAP Concur: Wanny Morellato, a director of engineering, led the effort of refactoring Concur's monolithic travel and expense backend into an event-driven distributed system of microservices using Kafka: Breaking Down a SQL Monolith with Change Tracking, Kafka and KStreams/KSQL

- SAP Qualtrics: A particular challenge amidst their growth was implementing standardizations around different types of data. They were working out how to blend numerical data - about companies' subscriptions, or sales, or content engagement - with experience data collected from surveys. To do that, they utilize technologies like Kafka and Spark and really fast data stores to create a real-time engine to transform data into actionable observational reports.

Obviously, people are also waiting for a Kafka-native SAP S/4HANA interface. With it, they could process events in real time as data in motion and correlate real-time and historical data. A native Kafka integration with SAP S/4HANA should be the next step!

Having said this, current SAP blogs (from mid-2019) still talk about replacing the 20+ year-old BAPI and RFC integration style with SOAP and OData APIs in SAP S/4HANA Public Cloud. OData (Open Data Protocol) is an open protocol that allows the creation and consumption of queryable and interoperable REST APIs.
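If you integrate via OData today, getting data into Kafka typically means polling the service with standard OData system query options such as `$filter`, `$orderby`, and `$top`. The sketch below builds such a query URL. The service path, entity set, and property names (`API_SALES_ORDER_SRV`, `A_SalesOrder`, `SalesOrganization`) are modeled on SAP's published sales order API, but treat them as assumptions and check them against your system. The syntax for date literals in `$filter` differs between OData V2 and V4, so the filter is passed in as-is.

```java
import java.net.URI;
import java.net.URISyntaxException;

final class ODataQuery {
    private ODataQuery() {}

    // Builds an OData query URL from standard system query options.
    // The multi-argument URI constructor percent-encodes illegal characters such as spaces;
    // an '&' inside a filter value would still need manual encoding.
    static URI build(String host, String servicePath, String entitySet,
                     String filter, String orderBy, int top) {
        String query = "$filter=" + filter + "&$orderby=" + orderBy + "&$top=" + top;
        try {
            return new URI("https", host, servicePath + "/" + entitySet, query, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("invalid OData URL parts", e);
        }
    }

    public static void main(String[] args) {
        URI uri = build("s4.example.com", "/sap/opu/odata/sap/API_SALES_ORDER_SRV", "A_SalesOrder",
                "SalesOrganization eq '1010'", "SalesOrder", 100);
        System.out.println(uri);
    }
}
```

Each poll result would then be produced to Kafka, for example via one of the options described earlier. This is still request/response polling rather than real event streaming.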

My personal feeling and hope is that a native Kafka interface is just a matter of time. The market demand is everywhere across the globe (I talk to many customers in EMEA, the US, and APAC), and several non-S/4HANA SAP products already use Kafka internally.

I have also seen a two-fold approach from some other vendors:

1. Provide a Kafka-native interface to the outside world first. In SAP terms, you could, e.g., provide a Kafka interface on top of BAPIs.
2. At a later point, re-engineer the internal architecture away from the non-scalable technology to Kafka under the hood. In SAP terms, you could replace RFC / BAPI functions with a more scalable Kafka-native version, even using the same API interface and message structure.
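The first step of that approach puts a Kafka interface on top of an existing synchronous function. It can be sketched as a request/reply facade. The facade consumes a command event, calls the function, and publishes the outcome as a reply event that carries the same correlation ID. In the minimal Java sketch below, a plain `Function` stands in for a BAPI/RFC call and an in-memory list stands in for the reply topic. `CommandEvent`, `ReplyEvent`, and `FunctionEventFacade` are hypothetical names, not SAP or Kafka APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

final class FunctionEventFacade {
    private final Function<String, String> syncCall;              // stand-in for a BAPI/RFC invocation
    private final List<ReplyEvent> replyTopic = new ArrayList<>(); // stand-in for a reply topic

    FunctionEventFacade(Function<String, String> syncCall) {
        this.syncCall = syncCall;
    }

    // Consumes one command, invokes the synchronous function, and publishes the outcome.
    void onCommand(CommandEvent command) {
        try {
            String result = syncCall.apply(command.payload());
            replyTopic.add(new ReplyEvent(command.correlationId(), true, result));
        } catch (RuntimeException e) {
            replyTopic.add(new ReplyEvent(command.correlationId(), false, e.getMessage()));
        }
    }

    List<ReplyEvent> replies() {
        return List.copyOf(replyTopic);
    }

    public static void main(String[] args) {
        FunctionEventFacade facade = new FunctionEventFacade(orderJson -> "created:" + orderJson);
        facade.onCommand(new CommandEvent("req-1", "{\"material\":\"MAT-A\"}"));
        facade.replies().forEach(System.out::println);
    }
}

// Hypothetical event shapes; the correlation ID links a reply to its command.
record CommandEvent(String correlationId, String payload) {}
record ReplyEvent(String correlationId, boolean success, String result) {}
```

Failures become reply events instead of exceptions, so callers on the Kafka side see every outcome. Swapping the implementation behind the facade later does not change this contract.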

Native Streaming Replication between Products, Departments, and Companies

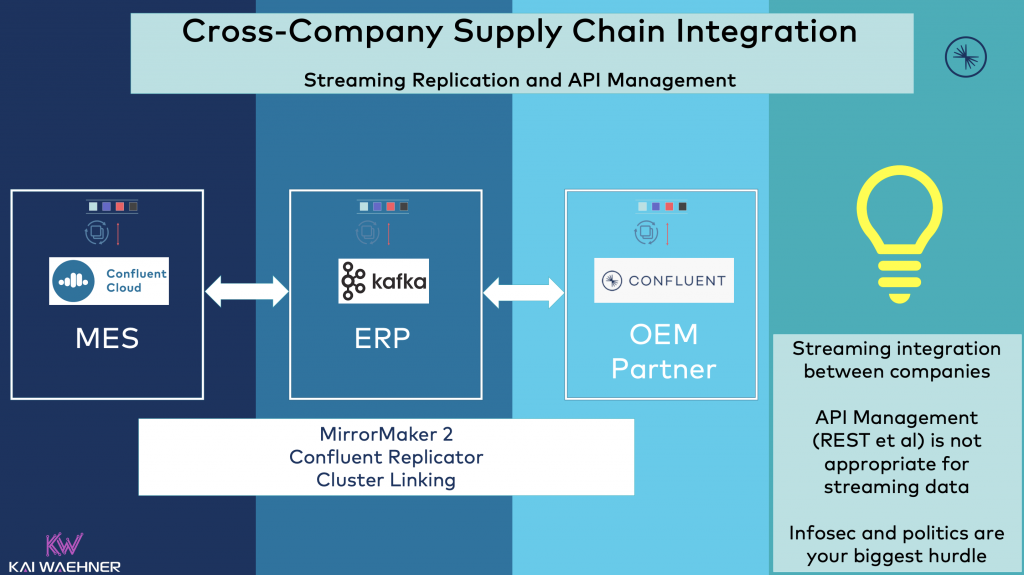

Native Kafka integration does not just happen within a product or company. A widespread trend I see on the market in different industries is to integrate with partners via Kafka-native streaming replication instead of REST APIs:

Think about it: If you use Kafka in different application infrastructures, but the interface is just a web service or database, then all the benefits might go away because scalability and/or real-time data correlation capabilities go away.

More and more vendors of standard software use Kafka as the backbone of their internal architecture. Consider the interface between products, say SAP's ERP system, SAP's MES system, and the SCM application of an OEM customer. If that interface is just a SOAP or REST API, it does not scale and perform well enough for the digital transformation and Industry 4.0 use cases that depend on it.

Hence, more and more companies leverage Kafka not just internally but also between departments or even different companies. Streaming replication between companies is possible with tools like MirrorMaker 2.0 or Confluent Replicator. Alternatively, you can use the much simpler Cluster Linking from Confluent, which enables hybrid, multi-cloud, and third-party integration using the Kafka protocol under the hood.
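The Java sketch below assembles a MirrorMaker 2.0 configuration. It replicates topics matching a pattern from a partner cluster into an internal cluster. It also shows how the default replication policy names the replicated topic: the source alias, then `.`, then the original name. The cluster aliases `partner` and `internal`, the bootstrap addresses, and the topic pattern are hypothetical.

```java
import java.io.IOException;
import java.util.Properties;

final class MirrorMakerSketch {
    private MirrorMakerSketch() {}

    // MirrorMaker 2.0 config: replicate topics matching topicRegex from source to target.
    static Properties config(String source, String sourceServers,
                             String target, String targetServers, String topicRegex) {
        Properties p = new Properties();
        p.setProperty("clusters", source + ", " + target);
        p.setProperty(source + ".bootstrap.servers", sourceServers);
        p.setProperty(target + ".bootstrap.servers", targetServers);
        p.setProperty(source + "->" + target + ".enabled", "true");
        p.setProperty(source + "->" + target + ".topics", topicRegex);
        return p;
    }

    // With the default replication policy, a replicated topic is prefixed with the source alias.
    static String remoteTopic(String sourceAlias, String topic) {
        return sourceAlias + "." + topic;
    }

    public static void main(String[] args) throws IOException {
        config("partner", "kafka.partner.example.com:9092",
               "internal", "kafka.internal.example.com:9092", "sap\\..*")
                .store(System.out, "MirrorMaker 2.0 sketch");
        System.out.println(remoteTopic("partner", "sap.orders"));
    }
}
```

`Properties.store` writes the file in the properties format that `connect-mirror-maker.sh` expects as its argument.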

SAP + Apache Kafka = The Future for ERP et al

There is huge demand across the globe to integrate SAP applications with Apache Kafka for real-time messaging, data integration, and data processing at scale. The demand exists for SAP ERP (ECC and S/4HANA) and also for most other products from the vast SAP portfolio.

Kafka is deployed in many modern and innovative use cases for supply chain management, manufacturing, customer experience, and so on. Edge, hybrid, and multi-cloud Kafka deployments are the norm, not the exception.

Kafka integrates well with SAP systems. Different integration options are available via SAP SDKs and third-party products, covering proprietary interfaces, open standards, and modern messaging and event streaming concepts. Choose the right option for your needs and get started with Kafka SAP integration.

What are your experiences with SAP Kafka integration? How did it work? What challenges did you face and how did you or do you plan to solve this? What is your strategy? Let's connect on LinkedIn and discuss it!

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.

