Apache Kafka and API Management — How to Use Mulesoft

Kafka and API Management tools like Mulesoft or Apigee are complementary, not competitive!

Join the DZone community and get the full member experience.

Join For FreeEvent Streaming with Apache Kafka and API Management / API Gateway solutions (Apigee, Mulesoft Anypoint, Kong, TIBCO Mashery, etc.) are complementary, not competitive! Read this blog post to understand the relation between these two components in your enterprise architecture.

API Management is relevant for many years already. I talked about "A New Front for SOA: Open API and API Management as Game Changer" in 2014 when SOAP Web Services and Service-Oriented Architectures (SOA) were cutting-edge technologies and concepts. Exposing APIs and monetization were still in its infancy at that time. EDI / EDIFACT and similar complex technologies were used for B2B communication. B2C communication was just starting with smartphones and mobile apps. Internally billing was done with estimations and Excel sheets instead of automated and accurate information systems.

Let's start this blog post with an overview about the current market situation. Use cases and the relation between event streaming with Apache Kafka and API Management with tools like Mulesoft Anypoint Platform are discussed afterwards. The last part of the post explores the future of API Management for streaming technologies (and how you can even solve this use case today already).

Market Situation — One Middleware Tool to Solve All Your Problems?

Microservices became the new black in enterprise architectures. APIs provide functions to other applications or end users. Even if your architecture uses another pattern than microservices, like SOA (Service-Oriented Architecture) or Client-Server communication, APIs are used between the different applications and end users.

Apache Kafka plays a key role in modern architectures to build open, scalable, flexible and decoupled real time applications. API Management complements Kafka by providing a way to implement and govern the full life cycle of the APIs. This blog post explores how event streaming with Apache Kafka and API Management (including API Gateway and Service Mesh technologies) complement each other, and why they are still not always a perfect match.

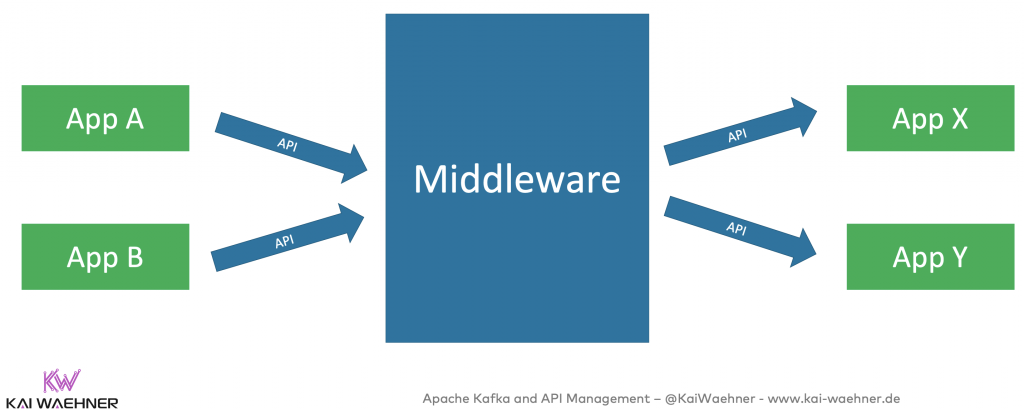

In the middleware market, every software vendor is the best one and puts itself into the middle of the enterprise architecture; at least if you trust marketing graphics. No matter which vendor's website you visit, you will see something similar to this:

Middleware, Event Streaming and API Management Vendors

Here are some examples of global middleware vendors providing software to glue together applications and to provide APIs:

- Universal Players offer various products. Vendors like Red Hat / IBM, Oracle, Software AG, TIBCO even offer different overlapping and competing solutions. For instance, IBM has 10+ products for integration middleware (not included are the rebranded product names).

- Cloud Providers like AWS, GCP, Azure and Alibaba provide a vast number of services for gluing together applications and services.

- Some companies focus just on Messaging, for instance Solace or Synadia (the company behind nats.io).

- Event Streaming Platforms like Confluent or Streamlio (the company behind Pulsar; acquired by Splunk recently) are relative new on the market (compared to the above categories), but get more and more traction these days.

- API Management solutions like Mulesoft, Apigee or Kong focus on the creation, life cycle management on monetization of APIs.

- New startups focus on specific niches or cutting edge technologies, like solo.io providing an API Gateway on top of Envoy Proxy Service Mesh.

MQ, ETL, ESB, Kafka, API Management — When to Use Which Tool(s)?

Obviously this market situation creates an important question: When to use which tool(s)? How to they overlap with each other? When are they complementary?

I covered the discussion about traditional middleware and Kafka already in detail. Check out "Event streaming with Apache Kafka vs. traditional middleware using MQ, ETL, ESB".

It is also relative easy to explain the relation between traditional middleware and API Management: Build a SOAP or REST based application (aka web service) and put an API Gateway or API Management tool in front of it to manage its lifecycle and monetize it.

How do Apache Kafka and API Management relate to each other? This question is harder to answer because both solve very different problems based on different technologies. Let's discuss this topic in more detail in the following.

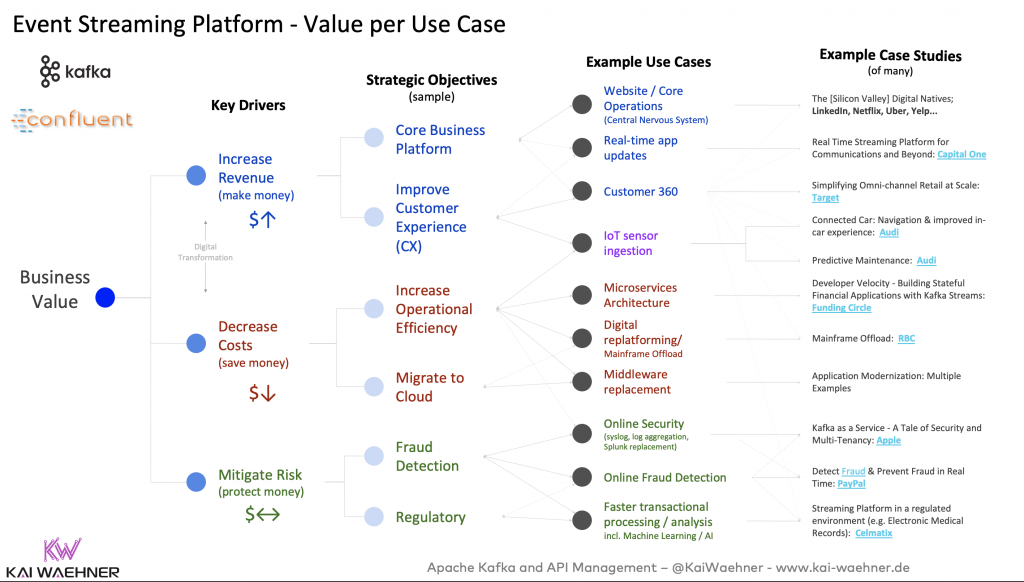

Use Cases for Event Streaming and Apache Kafka

First of all, it is very important to understand what 'Event Streaming' is and why this is different from the "traditional API approach" providing REST or SOAP web services.

Apache Kafka Is Used in All Industries and Verticals

Some use cases can also be done with other technologies, but it is easier and a simpler architecture with Kafka. That is true for integration layers and microservice architectures — and all the use cases around this like real time monitoring or customer 360.

Some other use cases cannot be done easily with other technologies because others don’t provide the combination of messaging + storage + processing in one single platform in a scalable, reliable and fault tolerant way — which is e.g. required to build a connected car infrastructure or sensor processing and analytics at scale in real time.

In the early era of Apache Kafka, many companies just used it for data ingestion into Hadoop or another data lake. The significant difference today - and this is what i would define as innovative - is that companies today use Apache Kafka as Event Streaming Platform to build mission-critical infrastructures and core operations platforms.

To be fair, Kafka is not the best solution for every problem. If you need point-to-point messaging, use something like RabbitMQ or IBM MQ. If you need to transfer large files, evaluate the market for MFT (Managed File Transfer) products. And... If you need to manage and monetize APIs, then evaluate API Management solutions.

Kafka's Ecosystem to Build Mission-Critical and Scalable Platforms and Real-Time Applications

Apache Kafka is more than just data ingestion or messaging. Apache Kafka (which includes Kafka Connect and Kafka Streams) and its open ecosystem (Schema Registry, ksqlDB, etc.) established a complete event streaming platform for many innovative use cases.

Here are some examples:

An interesting trend can be seen here: More and more Kafka deployments are mission-critical focusing on business transactions. These deployments cannot be down for an hour because the company behind it would be in huge trouble then.

Many more use cases from companies in almost all existing verticals and industries can be found at the Kafka Summit website. Videos and slides from all past talks are available for free. This includes success stories from tech giants, traditional companies and cutting edge startups.

Why Event Streaming With Apache Kafka?

Kafka has a few unique characteristics:

- Combination of messaging, storage, integration and processing of data

- Event-based architecture for real time processing, supporting modern design patterns like Event Sourcing and CQRS

- Built for high availability, high throughput and cloud-native DevOps and CI/CD integration

- Open source with a huge community and ecosystem

For these and other reasons, Kafka became the de facto standard for Microservice architectures and many other application infrastructures. Many of these use cases cannot be built with traditional middleware due to various limitations of scalability, non-flexible architectures or simply too high cost for building a highly available deployment.

So, what is the relation between event streaming with Kafka and API Management? Let's explore this in the next section.

What Is an API and its Relation to Event Streaming?

Event Streaming is changing from ground up how applications are built. More scalable, more reliable, decoupled, real time. In many new innovative use cases, there is no way around using event streaming instead of web services and traditional APIs.

This brings up several questions. Why do we still need to create and manage APIs? Does it make sense to put an API on top of streaming data? What technology and interface should this API use?

Let's cover the basics first...

API (Application Programming Interface)

An API (application programming interface) is a computing interface which defines interactions between multiple software intermediaries. It defines the kinds of calls or requests that can be made, how to make them, the data formats that should be used, the conventions to follow, etc.

API Technologies

From a technical perspective, most people and products mean REST (HTTP) or SOAP (XML) web services when talking about APIs. Most API Gateway and API Management tools just support these technologies.

These two technologies are established in most companies for many years and are very mature. Some people prefer the one, some the other. Some people don't like either one but have to use them because REST and SOAP web services are the de facto standard in enterprises today.

In fact, many other API technologies are available. Many of these other APIs do not use synchronous request-response patterns, but asynchronous communication.

Examples: WebSocket, MQTT, Server-side Events (SSE), or the Kafka protocol (the underlying wire protocol implemented in Kafka). So why are more and more technologies emerging?

Synchronous Request-Response vs. Asynchronous Event Streaming

Two very different communication paradigms exist: Request-response and event streaming.

Request-Response communication has the following characteristics:

- Low latency

- Typically synchronous

- Point-to-point

- “Bespoke API”

- e.g. HTTP, SOAP, gRPC

Event streams are based on these concepts:

- Messaging / Pub Sub (sending data from A to B and C)

- Continuous data processing (filtering, transformations, aggregations, business logic)

- Often asynchrounous

- Event-driven, supporting patterns like Event Sourcing and CQRS

- General-purpose events

- e.g. Apache Kafka

Both approaches have their trade-offs. Most architectures need request-response and event streams!

REST and SOAP Web Services typically use synchronous communication. This is not the full story, you could e.g. also use JMS-based SOAP communication, but the reality in most cases is synchronous request-response. Event streaming is asynchronous, but you can implement request-reply patterns, too.

Event Streaming instead of REST / SOAP Web Services

So what are the most important reasons why event streaming with technologies like Apache Kafka is often used for new projects instead of REST / SOAP web services?

REST / SOAP web services do not provide characteristics to build a scalable, reliable real time infrastructure for a high throughput of events. Period!

The other big advantage of Kafka is that it decouples microservices from each other. The storage of Kafka and the asynchronous (i.e. decoupled) communication keeps every microservice independent from each other. Microservice A does not need to know Microservice B, but they can still communicate with each other. Even if one of them is down while the other one is producing data. There can still be a contract (a term used in API Management a lot) between the producers and consumers, for instance using the Confluent Schema Registry.

One thing to point out here is that most API Management solutions and API Gateway today don't support Event Streams but only Web Service APIs, unfortunately.

But let's go one step back first and understand what API Management actually is.

What Is API Management?

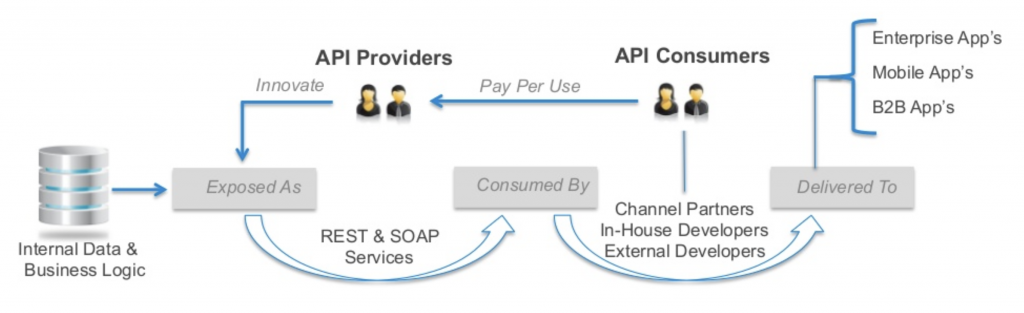

API management is the process of creating and publishing web application programming interfaces (APIs), enforcing their usage policies, controlling access, nurturing the subscriber community, collecting and analyzing usage statistics, and reporting on performance. API Management components provide mechanisms and tools to support developer and subscriber community.

Use Cases for API Management

API Management can be used for different scenarios:

- Open API: Developer portal and API Gateway

- Partner Gateway: Access control for well-known external parties

- Mobile App Gateway: Access control for apps deployed externally

- Cloud Integration Gateway: Governance and mediation control for SaaS

- Internal Governance: Manage, monetize and bill internal services and applications

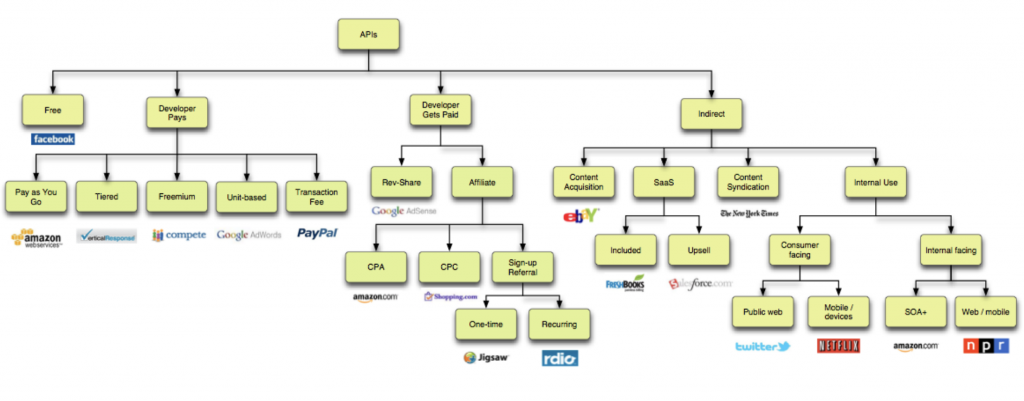

Various different API business models are possible as John Musser explained very well in 2013 already:

What changed since 2013? Not that much! The main idea is the same: APIs are provided for the public, external partners or internal teams. However, technically speaking, more and more of these interfaces need to use a technology for real time streaming data at scale. REST APIs are not ideal or sometimes not even possible at all with its limitations regarding scalability.

No matter if the API Management solution supports just REST / SOAP web services or modern streaming technologies, the API development workflow looks like this:

While API Management solutions vary, components that provide the following functionalities are typically found in products:

API Gateway

A server that acts as an API front-end, receives API requests, enforces throttling and security policies, passes requests to the back-end service and then passes the response back to the requester. A gateway often includes a transformation engine to orchestrate and modify the requests and responses on the fly. A gateway can also provide functionality such as collecting analytics data and providing caching. The gateway can provide functionality to support authentication, authorization, security, audit and regulatory compliance.

API Life Cycle Management and Publishing Tools

A collection of tools that API providers use to define APIs, for instance using the OpenAPI or RAML specifications, generate API documentation, manage access and usage policies for APIs, test and debug the execution of API, including security testing and automated generation of tests and test suites, deploy APIs into production, staging, and quality assurance environments, and coordinate the overall API lifecycle.

Developer Portal/API Store

Community site, typically branded by an API provider, that can encapsulate for API users in a single convenient source information and functionality including documentation, tutorials, sample code, software development kits, an interactive API console and sandbox to trial APIs, the ability to subscribe to the APIs and manage subscription keys such as OAuth2 Client ID and Client Secret, and obtain support from the API provider and user and community.

Reporting and Analytics

Functionality to monitor API usage and load (overall hits, completed transactions, number of data objects returned, amount of compute time and other internal resources consumed, volume of data transferred). This can include real-time monitoring of the API with alerts being raised directly or via a higher-level network management system, for instance, if the load on an API has become too great, as well as functionality to analyze historical data, such as transaction logs, to detect usage trends.

Functionality can also be provided to create synthetic transactions that can be used to test the performance and behavior of API endpoints. The information gathered by the reporting and analytics functionality can be used by the API provider to optimize the API offering within an organization's overall continuous improvement process and for defining software Service-Level Agreements for APIs.

Monetization and Billing

Functionality to support charging for access to commercial APIs. This functionality can include support for setting up pricing rules, based on usage, load and functionality, issuing invoices and collecting payments including multiple types of credit card payments.

As you can see: An API Management solution has some exciting features to build and operate APIs! So what is the relation to Kafka? As discussed earlier, many innovative use cases require a scalable, reliable event streaming platform. That's what Kafka is.

Kafka and API Management — Friends, Enemies or Frenemies?

To be very clear

- Apache Kafka does not provide out-of-the-box capabilities of an API Management solution.

- API Management solutions do not provide event streaming capabilities to continuously send, process, store and handle millions of events in real time (aka stream processing / streaming analytics).

Therefore, the combination of Kafka and API Management solution makes a lot of sense in many scenarios. It is NOT a competitive situation (like many people think - or are "taught" by some vendors).

Unique API Management Features

Some of the unique features of API Management products are:

- API Developer Portal and Publishing Tools

- API Life Cycle Management

- Billing and Monetization

These components can be provided as standalone services respectively products (e.g. from a cloud provider) or within a complete platform (like Mulesoft Anypoint Platform).

Domain-Driven Design (DDD), Decoupling and Anti-Patterns

Some features from API Management tools overlap with other solutions. You should question if API Management is the right spot for doing this. This is not a 'yes or no' discussion. But I think in many cases, the API Management solution should not be used for tasks where other platforms provide the better capabilities regarding scalability, tooling, reliability, performance, and other characteristics.

A clear separation of concerns is important to simple and flexible enterprise architecture. Don't couple things too tightly. This was a key issue of ESB deployments in the past. Don't do the same fault with API Management. It is not a surprise and should be a warning that several vendors even built their API Management product on top of their ESB to couple things together.

Martin Fowler taught us several years ago "not to recreate ESB Anti Patterns with Kafka". Keep this in mind for your API strategy, too! My article "Microservices, Apache Kafka, and Domain-Driven Design" should also help you understanding how important the separation of concerns and decoupling is for your enterprise architecture. This is true for Kafka, APIs and other business applications.

Overlapping Features Between Kafka and API Management

Kafka provides a messaging and storage solution for event-based processing as its core. In addition, Kafka Connect (for integration) and Kafka Streams (for stream processing) are part of the open source project.

API Management exists for completely different use cases as discussed in detail in the above section: To create, publish, manage and monetize APIs.

Nevertheless, some overlapping features exist between Kafka and API Gateways and API Management solutions. Here are some examples:

- Protocol conversion: One consumer or client requires JSON while the other one can only process Avro, Protobuf or XML.

- ETL (Extract Transform Load): Transformations, filtering, sorting and similar tasks.

- Connectivity: Integration with back-end systems like databases, data warehouses, data lakes, messaging systems, business applications.

- API Gateway: Routing, public endpoints, single entry point, access control, encryption, throttling, etc. are common features. This can either be configured/implemented by a dedicated API Gateway (like Amazon API Gateway) or with a Kafka-based platform (like Confluent Platform providing features such as RBAC, Rest Proxy, etc).

Who should solve these overlapping tasks? The Event Streaming Platform or the API Management solution? Well, each vendor will tell you that they can do it the best way. Think about your architecture and requirements. What makes most sense? As so often: It depends!

If you want to build a scalable, reliable integration pipeline, Kafka is probably the better choice. If you need to provide a flexible Gateway interface for REST web services with routing configurations, a dedicated API Gateway is probably the best choice. Try to keep the architecture as simple as possible.

Let's now take a look at an architecture to understand how Kafka and API Management solutions play together very well.

Microservices, API Management (Mulesoft Anypoint) and Event Streaming (Kafka)

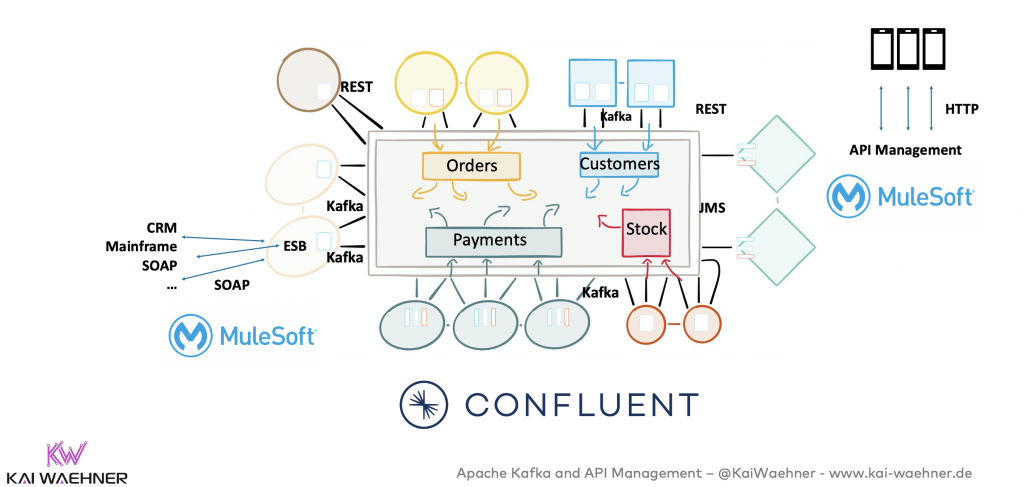

The following examples shows a microservices architecture leveraging Event Streaming and API Management. It uses a combination of Confluent Platform for the event-based nervous system and Mulesoft Anypoint Platform for API Management and integration with some legacy applications:

There are different options to combine Kafka and Event Streaming with API Management solutions:

- Event Streaming is used to process data continuously at scale in real time

- Event Streaming is used to directly integrate with various data sources and data sinks (databases, messaging systems, business applications, etc.)

- The heart of many companies is Event Streaming, gluing together streaming applications with batch, request-response and other platforms.

- API Management is used to provide an API interface (including lifecycle management, monetization, etc) on top of Kafka applications, e.g. using services via Confluent REST Proxy, the REST API of Confluent Cloud to provision a new Kafka cluster, or the REST API running on top of a custom Kafka Streams / ksqlDB application or microservice using Interactive Queries.

- Kafka is used as backend infrastructure. A proxy or business application is used in between Kafka and business applications. API Management is not directly used with Kafka interfaces, but one layer higher on top of the applications which use Kafka under the hood.

Most enterprise architectures require event streaming, request-response and API management. I hope if you read this far in this blog post, you agree and now understand why Apache Kafka and API Management platforms are complementary, not competitive.

But it is also clear that event streaming and today's API Management tools don't fit together perfectly because in many cases it does not make sense to put a REST or SOAP API on top of event streaming data.

The Missing Killer Feature: Native Kafka Integration in API Management and API Gateway

The last section explored options how Kafka and API Management work together very well.

In an ideal world, an API could be put directly on top of the Kafka protocol. In the real world, almost all API Management products today only support REST / SOAP web services. This means you (have to) build a web service on top of event streaming to provide the API Management capabilities.

Envoy proxy, one of the established proxies for building a Service Mesh, actually supports the Kafka protocol natively. On TCP level, no need to use HTTP REST APIs. This is huge from scalability and performance perspective. HTTP / synchronous request-response is an anti-pattern for streaming data and will not work if large scale is required for the streaming application. Check out "Service Mesh and Cloud-Native Microservices with Apache Kafka, Kubernetes and Envoy, Istio, Linkerd" for more details on this topic.

Unfortunately, examples like Envoy's support for the Kafka protocol are very rare today. What if you get native Kafka support in your API Management solution?

Streaming-Based API Management for Cross Companies Communication

API Management using REST or SOAP web services is not appropriate for streaming data and large scale use cases. Therefore, more and more enterprises build streaming applications. How strange is it that almost all of these enterprises use the anti-pattern of providing a request-response based REST API on top of the streaming services for API Management?

Support for the Kafka protocol would be very helpful to make API Management even more complementary than it is today. Think about the huge opportunities if you could build life cycle management and monetization / billing on top of a streaming Kafka service.

Cross-Company Streaming Replication

Even without proper support for event streaming in most API Management tools, I have seen many customers doing Kafka-native real time communication at scale between different business units or projects. Check out "Architecture patterns for distributed, hybrid, edge and global Apache Kafka deployments" to understand various different options.

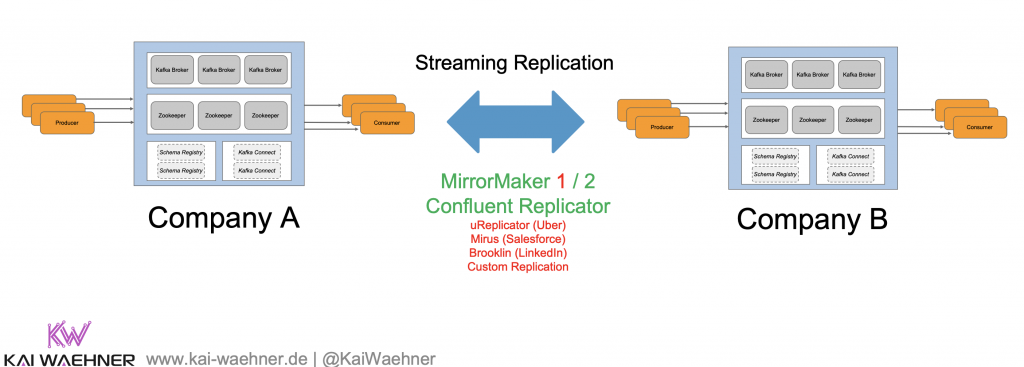

Here is the most exciting use case: Streaming replication between different enterprises:

Different tools enable streaming replication between business units, regions or companies:

- MirrorMaker 1

- MirrorMaker 2

- Confluent Replicator

- uReplicator (Uber)

- Mirus (Salesforce)

- Brooklin (LinkedIn)

- Custom Replication

If you want to rely on a mature and battle-tested product, then Confluent Replicator is the way to go today in 2020 for real time streaming replication. MirrorMaker 1 should never be an option. MirrorMaker 2 will be a great option in some quarters, but today it is very new and probably not the best option for a mission-critical project yet. All other options are only recommended if you want to dive deep into the project.

Tools like Confluent Schema Registry provide governance for the "streaming API interface". Technologies like Avro, Protobuf or JSON Schema are used to define the "API contract" and process large data volumes efficiently and in real time.

Event Streaming Internally and REST API to the Outside World?

A cross-company streaming architecture has one key drawback: Information security and politics are your biggest enemy! :-) But I have seen customers running this setup in production with a partner company. So it is doable, and even without API Management in the middle, you can leverage event streaming at scale with your partner. Think about use cases like airline ticketing, retail transactions or financial services.

Why would you build everything in real time at scale internally, but only provide a non-scalable synchronous HTTP interface to the outside world? And your external partners are asking themselves exactly the same question...

API Management for event streaming would make this easier from security and monetization / billing perspective. I hope this feature will be implemented soon by various API Management software vendors.

The Future — Streaming-Based API Management for Apache Kafka?

Most architectures require request-response based communication (typically REST) and event streaming (typically Kafka). API Management helps making applications accessible; no matter if the heart of the infrastructure is event-based or a point-to-point communication.

I think (and hope) the future will provide streaming-based API Management solutions for Apache Kafka. Envoy's support for the Kafka protocol is a first example. A few other frameworks also provide some "first hacks" already.

I hope this blog post helped you understanding the relation between Event Streaming with Apache Kafka and API Management solutions such as Kong or Mulesoft Anypoint Platform. They are complementary, not competitive.

How do you think about API Management in conjunction with event streaming and Apache Kafka? What is your strategy? Let’s connect on LinkedIn and discuss!

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments