The challenge of real-time data processing cannot be managed by architectural understanding alone. We must also consider a series of fundamental concepts.

Data Challenges

The “Three Vs” (Volume, Velocity, Variety) concept represents some of the bigger challenges in handling data in real-time environments:

- Volume: Managing and storing large volumes of data is sometimes more difficult with a real-time solution because large amounts of data can delay processing and cause missed opportunities or other business impacts due to delayed responses.

- Velocity: The speed at which new data is generated and collected can lead to bottlenecks, thus impacting the time in which the data can begin to be processed.

- Variety: Managing diverse data types requires a versatile solution to process and store different formats and structures. Often, a diverse range of data types is required to provide comprehensive insights.

On top of the "Three Vs," other fundamental characteristics that are causing data solution challenges include those in the table below:

Table 1: Common data solution challenges

| Challenge |

Description |

| Data freshness |

Related to data velocity, data freshness refers to the “age” of the data, or how up to date the data is. Having the latest information is crucial to providing real-time insights. Imagine making decisions in real time based on yesterday's prices and stocks. The velocity of the end-to-end solution will provide constraints and bottlenecks for the availability of the updated data. It is also important to be able to detect, predict, and notify on the data freshness used for making decisions and/or providing insights. |

| Data quality |

A crucial aspect of data solutions that refers to the accuracy, completeness, relevance, timeliness, consistency, and validity of the data. Data quality is an unresolved problem due to the complexity of being able to analyze and detect these cases in large distributed solutions. Imagine we are providing a real-time analysis of sales during Black Friday and the data is not complete because the North American store data is not being sent; if it is not detected that the information is incomplete, any decision we make will be incorrect. |

|

Schema management

|

Schemas need to evolve continuously and in alignment with the business' evolution, including new attributes or data types. The challenge is managing this evolution without disrupting existing applications or losing data. Solutions must be designed to detect schema changes in real time and to support backward and forward compatibility, which helps to mitigate issues. As an example, a common approach is to use schema registry solutions. |

Performance

The performance of a solution lies in the attributes of efficiency, scalability, elasticity, availability, and reliability that are typically considered essential:

Table 2: Key components of real-time data performance

| Component |

Description |

| Efficiency |

Refers to the optimal use of resources to achieve output targets in terms of work performed and response times, using the least amount of resources possible and at an effective cost. |

| Scalability |

A solution's ability to increase its capacity as well as handle the growth of a workload. Traditionally, scalability has been categorized into vertical scalability (e.g., adding more resources to existing nodes) and horizontal scalability (e.g., adding more nodes); although nowadays, with serverless solutions in the cloud, this type of scaling is often transparent and automatic. |

|

Elasticity |

The capacity of a solution to adapt resources with the workload changes. This is closely related to scalability. A solution is elastic when it can provision and deprovision resources in an automated way to match current demands as quickly as possible. |

| Availability |

How long the solution is operational, accessible, and functional. This is usually expressed as a percentage where higher values indicate more reliable access. |

| Reliability |

Ensures solution capacity to continue working correctly in the event of a failure (fault tolerance) and the ability to recover from failures quickly and restore normal operation (recoverability). This is closely related to the availability of a solution: The challenge of durability and avoiding data loss is greatest in distributed real-time data solutions. |

Security and Compliance

Nowadays, with the rise of cloud solutions, the exposure of services to public networks and data protection laws, such as the General Data Protection Regulation (GDPR), make security and compliance two core components of any data solution.

Table 3: Security and compliance considerations for real-time data architectures

| Consideration |

Description |

| Access control |

Secures resources by managing who or what can view or use any given resources. There are several types of approaches: role-based access control (RBAC), attribute access control (ABAC), and rule-based access control. |

| Data in transit |

Refers to data that is being transferred over a network from one location to another. When data comes out of a vulnerable system, it is crucial to secure the transmission of data to protect sensitive information or guarantee that the data cannot be manipulated. Cost, complexity, and performance are often the key challenges faced. |

| Data retention |

The capacity to collect, store, and keep data for a duration. Data retention policies are crucial for organizations due to reasons like compliance with legal regulations or operational necessity. In addition to having the capacity to define these policies, the challenge lies with having simple mechanisms needed to manage them. |

| Data masking |

Provides the ability to obfuscate or anonymize data to protect sensitive information in both production and non-production systems. In real-time data solutions where data is continuously generated, processed, and analyzed, data masking is very complex due to the impact on the performance. |

Architecture Patterns

Architecture patterns are key to effectively managing and capitalizing on the power of instantaneous data. These architecture patterns can be categorized into two segments: base patterns and specific architectures. While base patterns, like stream-to-stream, batch-to-stream, and stream-to-batch, lay the foundational framework for managing and directing data flow, specific architectures like Lambda, Kappa, and other streaming architectures build upon these foundations, offering specialized solutions tailored to varied business needs.

Base Patterns

The following patterns are fundamental to building complex data architectures like Lambda or Kappa, which are designed to manage scenarios of modern data processing.

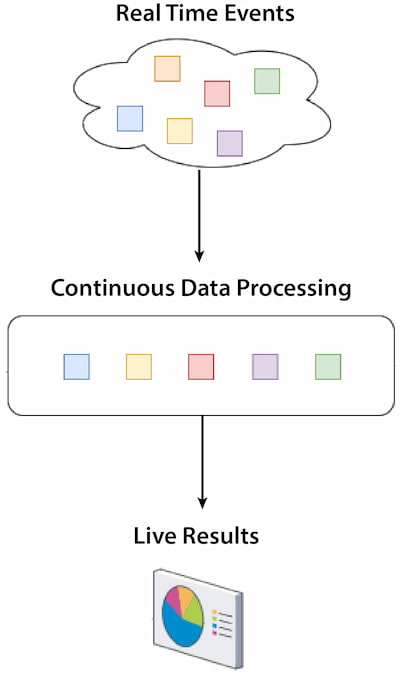

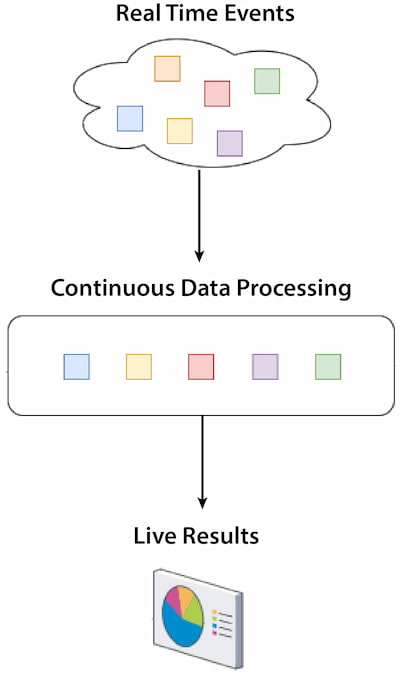

Stream-to-Stream

Stream-to-stream refers to a type of real-time data processing where the input and the output data streams are generated and processed continuously. This approach enables high reactivity, analysis, and actions.

Figure 1: Stream-to-stream

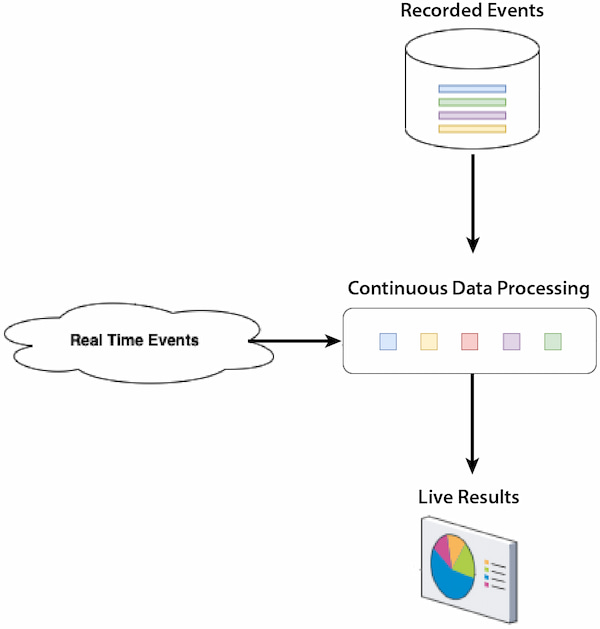

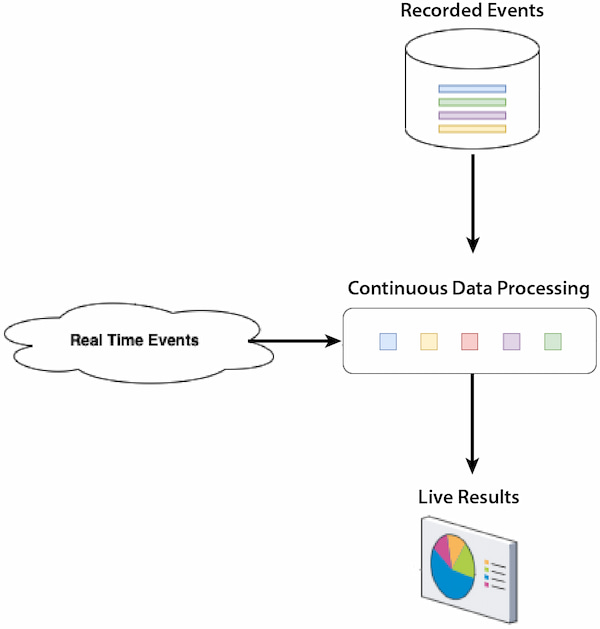

Batch-to-Stream

Batch-to-stream typically involves the conversion of any data accumulated (batch) in a data repository into a structure that can be processed in real time. There are several use cases for this implementation like integrating historical data insights with real-time data or sending consolidated data in small time windows from a relational database to a real-time platform.

Figure 2: Batch-to-stream

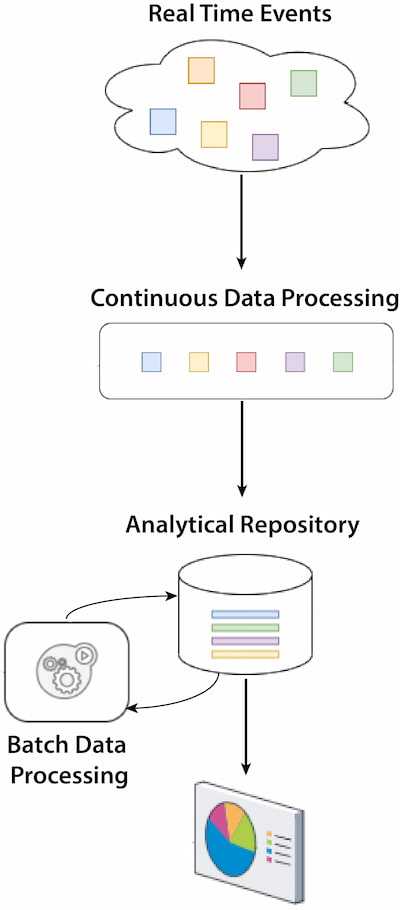

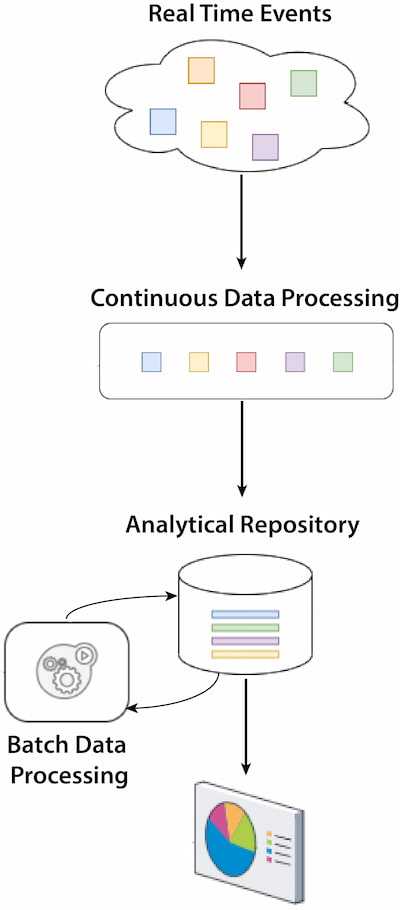

Stream-to-Batch

This pattern is oriented toward scenarios where real time is not required; bulk data processing is more efficient and meets most business requirements. Often, the incoming data stream is accumulated for a time and then processed in batches at scheduled intervals.

The stream-to-batch pattern usually happens in architectures where the operational systems are event- or streaming-based and where one of the use cases requires advanced analytics or a machine learning process that uses historical data. An example is sales or stock movements that require real-time and heavy processing of historical data for forecast calculation.

Figure 3: Stream-to-batch

It is essential to understand the requirements and behavior of any end-to-end solution. This will allow us to identify which architectures are best suited for our use cases — and consider any limitations and/or restrictions.

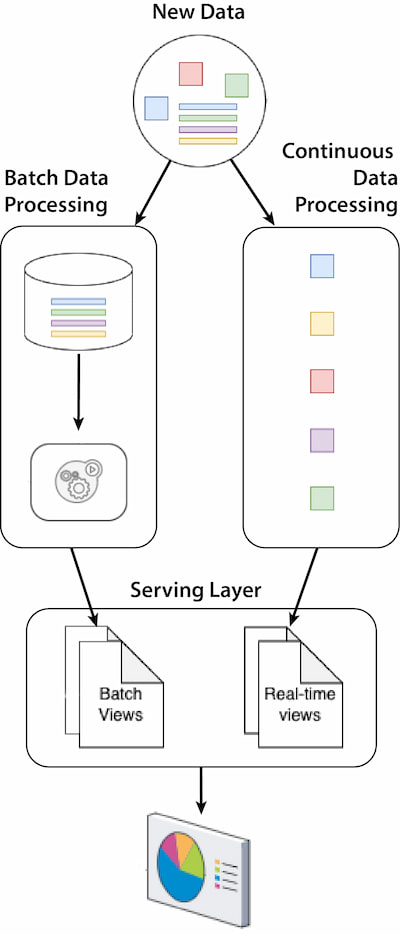

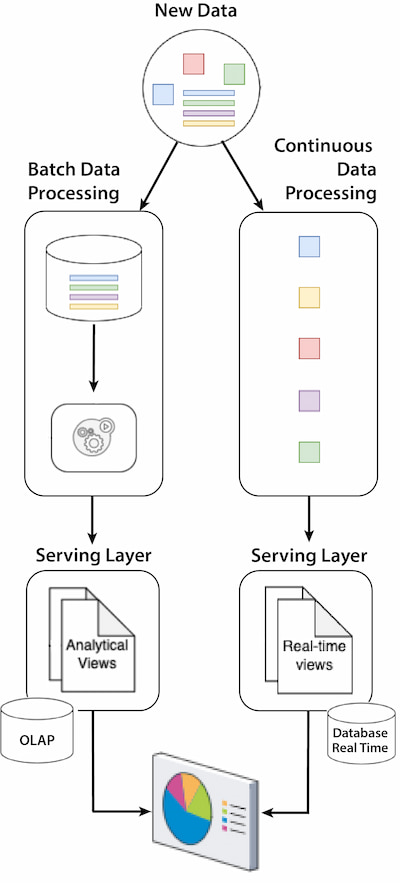

Lambda Architecture

Lambda architecture is a hybrid data pattern that combines batch and real-time processing. This approach enables complex decisions with high reactivity by providing real-time information and big data analysis.

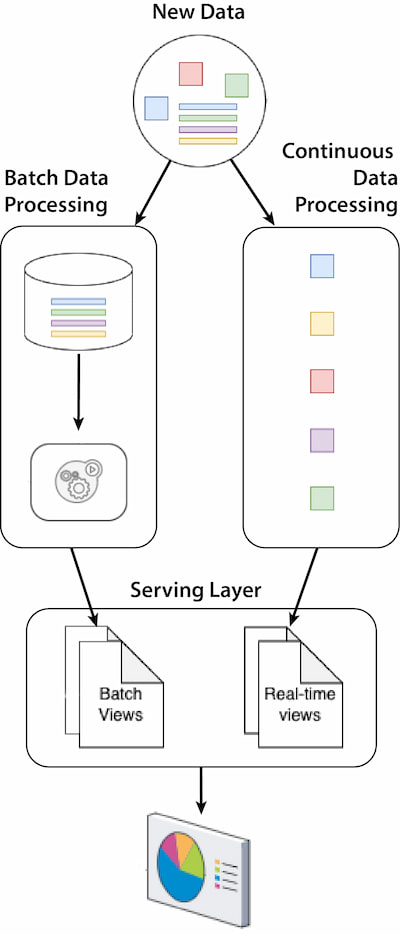

Figure 4: Lambda architecture — Unified Serving Layer

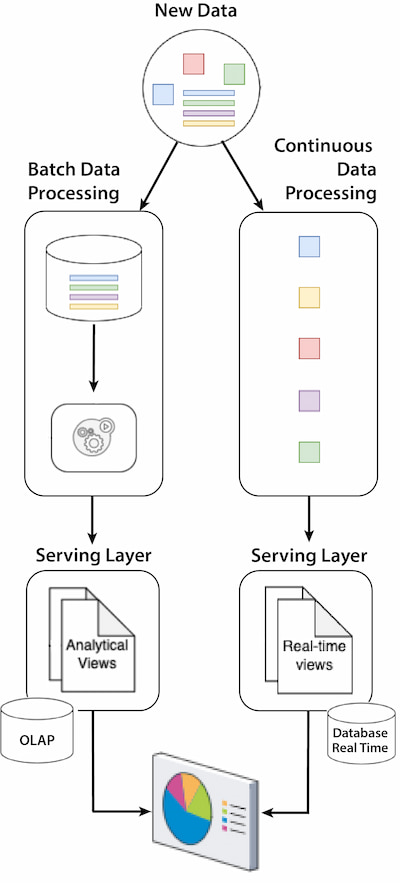

Figure 5: Lambda architecture — Separate Serving Layer

Components:

- Batch layer: Manages the historical data and is responsible for running batch jobs, providing exhaustive insights.

- Speed layer: Processes the real-time data streams, providing insights with the most up-to-date data.

- Serving layer: Merges the results obtained from the batch and speed layers to respond to queries with different qualities of freshness, accuracy, and latency.

- There are two scenarios: one in which both views can be provided from the same analytical system using solutions like Apache Druid and another using two components like PostgreSQL as a data warehouse for analytical views and Apache Ignite as an in-memory database for real-time views.

Advantages:

- Scalability: This has a higher scalability because real-time and batch layers are decoupled so that they can scale independently — both vertically and horizontally.

- Fault tolerance: The batch layer provides immutable data (raw data) that allows recomputing information and insights from the start of the pipeline. The recomputation capabilities ensure data accuracy and availability.

- Flexibility: This allows the management of a variety of data types and processing needs.

Challenges:

- Complexity: Managing and maintaining two layers increases the effort and resources required.

- Consistency: This refers to the eventual consistency between both layers that can be challenging for the data quality process.

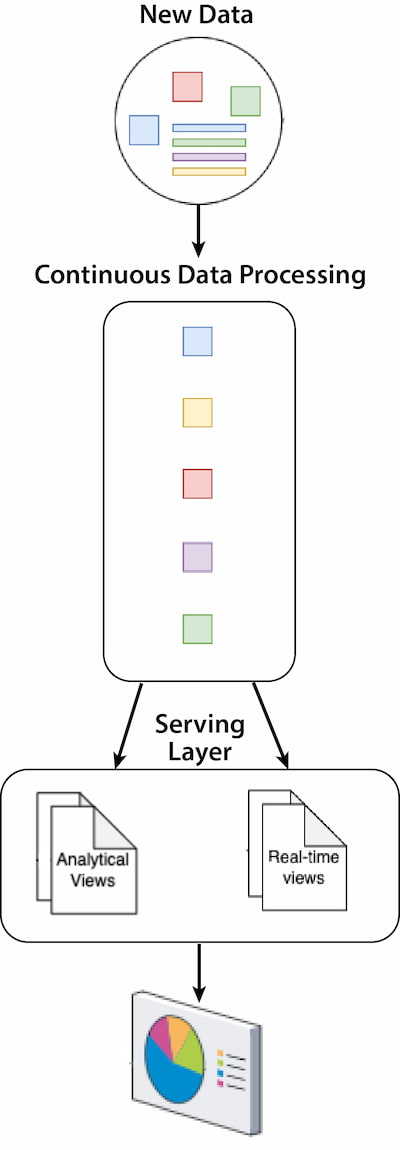

Kappa Architecture

Kappa architecture is designed to represent the principles of simplicity and real-time data processing by eliminating the batch processing layer. It is designed to handle large datasets in real time and process data as it comes in. The goal is to be able to process both real-time and batch processes with a single technology stack — and as far as possible with the same code base.

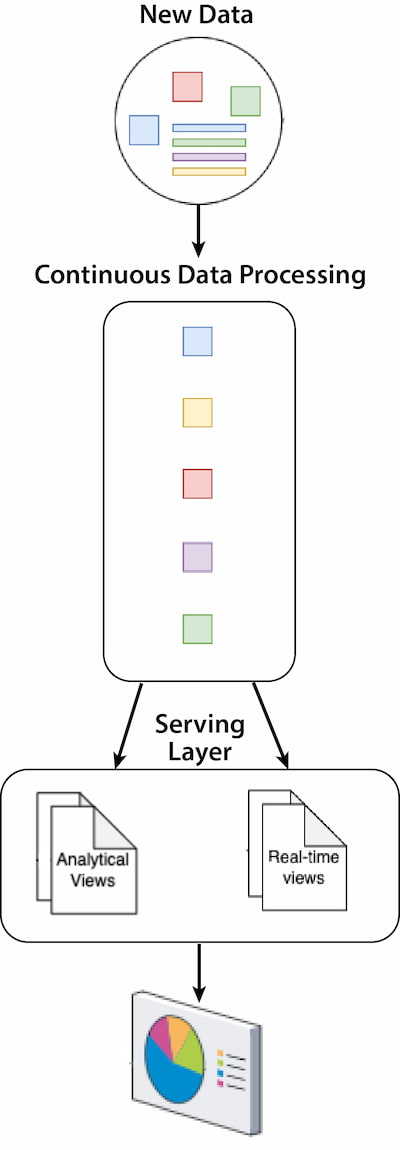

Figure 6: Kappa architecture

Components:

- Streaming processing layer: This layer manages the historical data and is responsible for running batch jobs, providing exhaustive insights. It allows the reprocessing of historical data and, therefore, becomes the source of truth.

- Serving layer: This layer provides results to the users or other applications via APIs, dashboards, or database queries. The serving layer is, indeed, typically composed of a database engine, which is responsible for indexing and exposing the processed stream to the applications that need to query it.

Advantages:

- Simplicity: Reduces the complexity of an architecture like Lambda by handling processing with a single layer and one code base.

- Consistency: Eliminates the need to manage the consistency of data between multiple layers.

- Enhanced user experience: Provides crucial information as the data comes in, improving responsiveness, decision-making, and user satisfaction.

Challenges:

- Historical analysis: Focused on real time and may not have the capabilities to perform in-depth analyses on large historical data.

- Data quality: Ensuring exactly-once processing and avoiding duplicate information can be challenging in some architectures.

- Data ordering: Maintaining the order of real-time data streams can be complex in distributed solutions.

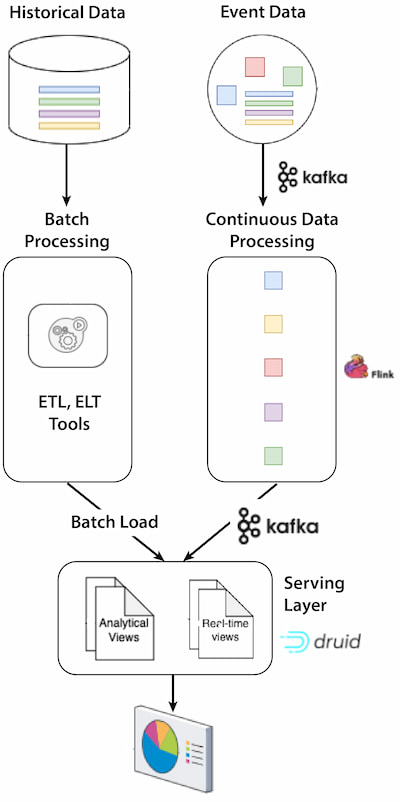

Streaming Architecture

Streaming architecture is focused on continuous data stream processing that allows for providing insights and making decisions in or near real time. It is similar to the Kappa architecture but has a different scope and capabilities; it covers a broader set of components and considerations for real-time scenarios from IoT, analytics, or smart systems. On the other hand, Kappa architecture can be considered an evolution of Lambda architecture, aiming to simplify patterns; although, it is often the precursor to streaming data architectures.

Components: The continuous data processing layer of a streaming architecture is more complex than other architectures like Kappa as new products and capabilities have emerged over the years.

- Stream ingestion layer: This layer is responsible for collecting, ingesting, and forwarding the incoming data streams to processing layers and is composed of producers and source connectors. For example, nowadays, there are layers of connectors to enable seamless and real-time ingestion of data from various external systems into streaming platforms like Debezium for databases, Apache Kafka Connect for several sources, Apache Flume for logs, and many others. This layer plays a crucial role that can impact a solution's overall performance and reliability.

- Stream transport layer: This layer is the central hub/message broker for data streaming that handles large volumes of real-time data efficiently and ensures data persistence, replicability, fault tolerance, and high availability, making it easier to integrate various data sources and data consumers, like analytics tools or databases. Apache Kafka is, perhaps, the most popular and widely adopted open-source streaming platform; although, there are other alternatives like Apache Pulsar or other private cloud-based solutions.

- Streaming processing layer: This layer is responsible for processing, transforming, enriching, and analyzing real-time-ingested data streams using solutions and frameworks like Kafka Streams, Apache Flink, or Apache Beam.

- Serving layer: Once data has been processed, it may need to be stored to provide both analytical and real-time views. In contrast to Kappa, the goal of this solution is not to simplify the stack but to provide business value so that the serving layer can be composed of different data repositories like data warehouses, data lakes, time series databases, or in-memory databases depending on the use case.

Advantages:

- Real-time insights: Enables users and organizations to analyze and respond as the data comes in, providing real-time decision-making.

- Scalability: Usually, these are highly scalable platforms that allow for managing large volumes of data in real time.

- Enhanced user experience: Provides crucial information as the data comes in, improving responsiveness, decision-making, and user satisfaction.

Challenges:

- Historical analysis: Focused on real time and may not have the capabilities to perform in-depth analyses on large historical data.

- Data quality: Ensuring exactly-once processing and avoiding duplicate information can be challenging in some architectures.

- Data ordering: Maintaining the order of real-time data streams can be complex in distributed solutions.

- Cost-effectiveness: It is, indeed, a significant challenge because this architecture requires continuous processing and often the storage of large volumes of data in real time.

Change Data Capture Streaming Architecture

Change data capture (CDC) streaming architecture is focusing on capturing data changes in the databases, such as inserts, updates, or deletes. Then, these changes are converted to streams and sent to other streaming platforms. This architecture is used in several cases such as synchronized data repositories, real-time analytics (RTA), or maintaining data integrity across diverse environments.

Components:

- Capture layer: This layer captures changes made to the source data, identifies data modifications, and extracts changes from the source database.

- Processing layer: This layer processes the data and can apply transformations, conversion, mapping, filtering, or enrichment to prepare data for the target data repository.

- Serving layer: This layer provides access to the stream data to be consumed, processed, and analyzed, thus triggering business processes and/or persisted data in the target system to be analyzed in real time.

Advantages:

- Real-time synchronization: Enables the synchronization of data across different data repositories, ensuring data consistency and integrity.

- Reduce load on source database: By capturing only the changes on the source data and sending them to several targets, the load on the source database decreases significantly compared to full data extractions or multiple extractions.

- Operational efficiency: Automate data synchronization processes, minimizing the risk of errors.

Challenges:

- Data consistency: Ensuring data quality across a distributed system can be challenging when the data is distributed to many destinations.

- Error handling: Providing a robust mechanism to handle errors across the different layers is a complex solution when the data is sent to several systems.