Why and How We Built a Primary-Replica Architecture of ClickHouse

Take a deep dive into the challenges and solutions one company experienced while implementing a primary-replica architecture for ClickHouse.


Our company uses artificial intelligence (AI) and machine learning to streamline the comparison and purchasing process for car insurance and car loans. As our data grew, we ran into problems with AWS Redshift, which was slow and expensive. Switching to ClickHouse made our queries faster and greatly cut our costs, but it also introduced storage challenges such as disk failures and data recovery.

To avoid extensive maintenance, we adopted JuiceFS, a high-performance distributed file system. We use its snapshot feature in an unconventional way to implement a primary-replica architecture for ClickHouse. This architecture ensures high availability and stability of the data while significantly improving system performance and data recovery capabilities. Over more than a year, it has operated without downtime or replication errors and has delivered the expected performance.

In this post, I’ll dive deep into our application challenges, the solution we found, and our future plans. I hope this article provides valuable insights for startups and small teams in large companies.

Data Architecture: From Redshift to ClickHouse

Initially, we chose Redshift for analytical queries. However, as data volume grew, we encountered severe performance and cost challenges. For example, when generating funnel and A/B test reports, we faced loading times of up to tens of minutes. Even on a reasonably sized Redshift cluster, these operations were too slow. This effectively made our data service unavailable.

Therefore, we looked for a faster, more cost-effective solution, and we chose ClickHouse despite its limitations on real-time updates and deletions. The switch to ClickHouse brought significant benefits:

- Report loading times were reduced from tens of minutes to seconds. We’re able to process data much more efficiently.

- The total expenses were cut to no more than 25% of what they had been.

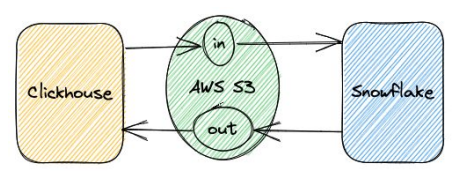

Our design was centered on ClickHouse, with Snowflake serving as a backup for the 1% of data processes that ClickHouse couldn't handle. This setup enabled seamless data exchange between ClickHouse and Snowflake.

Jerry data architecture

ClickHouse Deployment and Challenges

We initially maintained a stand-alone deployment for a number of reasons:

- Performance: Stand-alone deployments avoid the overhead of clusters and perform well under equal computing resources.

- Maintenance costs: Stand-alone deployments have the lowest maintenance costs. This covers not only integration maintenance costs but also application data settings and application layer exposure maintenance costs.

- Hardware capabilities: Current hardware can support large-scale stand-alone ClickHouse deployments. For example, we can now get EC2 instances on AWS with 24 TB of memory and 448 vCPUs. This surpasses many deployed ClickHouse clusters in scale. These instances also offer the disk bandwidth to meet our planned capacity.

Therefore, considering memory, CPU, and storage bandwidth, stand-alone ClickHouse is an acceptable solution that will be effective for the foreseeable future.

However, a stand-alone ClickHouse deployment has certain inherent issues:

- Hardware failures can cause long downtime for ClickHouse. This threatens the application's stability and continuity.

- ClickHouse data migration and backup are still difficult tasks. They require a reliable solution.

After we deployed ClickHouse, we ran into the following problems:

- Scaling and maintaining storage: Maintaining appropriate disk utilization rates became difficult due to the rapid expansion of data.

- Disk failures: ClickHouse is designed to make aggressive use of hardware resources to deliver the best query performance. As a result, read and write operations happen frequently and often exceed disk bandwidth, which increases the risk of disk hardware failures. When such failures occur, recovery can take from several hours to more than ten hours, depending on the data volume. We've heard that other users had similar experiences. Although data in analytical systems is typically considered a replica of other systems' data, the impact of these failures is still significant, so we need to be ready for any hardware failure. Data migration, backup, and recovery are extremely difficult operations that take considerable time and energy to complete successfully.

Our Solution

We selected JuiceFS to address our pain points for the following reasons:

- JuiceFS was the only available POSIX file system that could run on object storage.

- Unlimited capacity: We haven't had to worry about storage capacity since we started using it.

- Significant cost savings: Our expenses are significantly lower with JuiceFS than with other solutions.

- Strong snapshot capability: JuiceFS effectively implements the Git branching mechanism at the file system level. When two different concepts merge so seamlessly, they often produce highly creative solutions. This makes previously challenging problems much easier to solve.

Building a Primary-Replica Architecture of ClickHouse

We came up with the idea of migrating ClickHouse to a shared storage environment based on JuiceFS. The article Exploring Storage and Computing Separation for ClickHouse provided some insights for us.

To validate this approach, we conducted a series of tests. The results showed that with caching enabled, JuiceFS read performance was close to that of local disks. This is similar to the test results in this article.

Although write performance dropped to 10% to 50% of disk write speed, this was acceptable for us.

The tuning adjustments we made for JuiceFS mounting are as follows:

- To write asynchronously and prevent possible blocking problems, we enabled the writeback feature.

- In cache settings, we set attrcacheto to “3,600.0 seconds” and cache-size to “2,300,000.” We enabled the metacache feature.

- Considering the possibility of longer I/O runtime on JuiceFS than on local drives, we introduced the block-interrupt feature.
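As a rough illustration, the settings in the list above could be collected into a single mount command. The sketch below only assembles an argument list and does not run anything. The volume name `ch-data`, the mount point `/jfs`, and the exact flag spellings (derived from the option names in the text) are assumptions to check against the JuiceFS documentation for your edition. The block-interrupt setting is left out because the text gives no value for it.

```python
def build_mount_argv(volume, mountpoint, options):
    """Turn an options dict into a `juicefs mount` argument list.

    True becomes a bare flag, False is skipped, and any other value
    becomes `--name value`.
    """
    argv = ["juicefs", "mount", volume, mountpoint]
    for name, value in options.items():
        if value is True:
            argv.append(f"--{name}")
        elif value is False:
            continue
        else:
            argv.extend([f"--{name}", str(value)])
    return argv


# Values quoted in the text; the flag spellings are assumptions.
TUNING = {
    "writeback": True,      # asynchronous writes
    "attrcacheto": 3600,    # attribute cache timeout, in seconds
    "cache-size": 2300000,  # cache size exactly as quoted in the text
    "metacache": True,      # metadata caching
}

if __name__ == "__main__":
    # "ch-data" and "/jfs" are hypothetical names used for illustration.
    print(" ".join(build_mount_argv("ch-data", "/jfs", TUNING)))
```

Keeping the options in one dictionary makes it easier to review the tuning in one place and to reuse the same settings when mounting the file system on another server.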

Increasing cache hit rates was our optimization goal. We used JuiceFS Cloud Service to increase the cache hit rate to 95%. If we need further improvement, we’ll consider adding more disk resources.

The combination of ClickHouse and JuiceFS significantly reduced our operational workload. We no longer need to frequently expand disk space. Instead, we focus on monitoring cache hit rates. This greatly alleviated the urgency of disk expansion. Furthermore, data migration is not necessary in the event of hardware failures. This lowered possible risks and losses considerably.

We greatly benefited from the easy data backup and recovery options that the JuiceFS snapshot capability offered. Thanks to snapshots, we can view the original state of the data and resume database services at any time in the future. This approach addresses issues that were previously handled at the application level by implementing solutions at the file system level. In addition, the snapshot feature is very fast and economical, since only one copy of the data is stored. Users of JuiceFS Community Edition can use the clone feature to achieve similar functionality.

Moreover, without the need for data migration, downtime was dramatically reduced. We could quickly respond to failures or allow automated systems to mount directories on another server, ensuring service continuity. It’s worth mentioning that ClickHouse startup time is only a few minutes, which further improves system recovery speed.

Furthermore, our read performance remained stable after the migration. The entire company noticed no difference. This demonstrated the performance stability of this solution.

Finally, our costs significantly decreased.

Why We Set up a Primary-Replica Architecture

After migrating to ClickHouse, we encountered several issues that led us to consider building a primary-replica architecture:

- Resource contention caused performance degradation. In our setup, all tasks ran on the same ClickHouse instance. This led to frequent conflicts between extract, transform, and load (ETL) tasks and reporting tasks, which affected overall performance.

- Hardware failures caused downtime. Our company needed to access data at all times, so long downtime was unacceptable. This led us to a primary-replica architecture.

JuiceFS supports multiple mount points in different locations. We tried to mount the JuiceFS file system elsewhere and run ClickHouse at the same location. During the implementation, however, we encountered some issues:

- ClickHouse uses a file lock mechanism that allows only one instance to run on a given set of files, which presented a challenge. Fortunately, this issue was easy to solve by modifying the ClickHouse source code to handle the locking.

- Even during read-only operations, ClickHouse retained some state information, such as write-time cache.

- Metadata synchronization was also a problem. When running multiple ClickHouse instances on JuiceFS, some data written by one instance might not be recognized by others. Fixing the problem required restarting instances.
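To make the file lock issue in the list above more concrete, here is a minimal sketch of how an advisory lock on a file can keep a second process out of a shared directory. It illustrates the general mechanism only and is not ClickHouse's actual locking code. The lock file name `status` is a hypothetical choice, and the example relies on the POSIX-only `fcntl` module.

```python
import fcntl
import os
import tempfile


def try_lock(path):
    """Open `path` and try to take an exclusive, non-blocking lock.

    Returns the open file object if the lock was acquired, else None.
    The lock is held for as long as the returned file stays open.
    """
    handle = open(path, "a+")
    try:
        fcntl.flock(handle, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        handle.close()
        return None
    return handle


if __name__ == "__main__":
    data_dir = tempfile.mkdtemp()
    lock_path = os.path.join(data_dir, "status")  # hypothetical lock file name

    first = try_lock(lock_path)   # stands in for the first instance
    second = try_lock(lock_path)  # stands in for a second instance
    print("first acquired:", first is not None)
    print("second acquired:", second is not None)
    first.close()
```

On Linux, the second attempt is expected to fail while the first handle is open, which mirrors the situation of two instances pointed at the same data directory.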

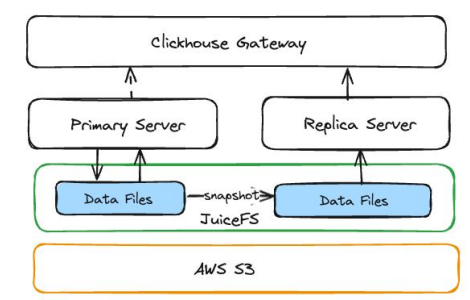

So we used JuiceFS snapshots to set up a primary-replica architecture. This method works like a regular primary-backup system. The primary instance handles all data updates, including synchronization and extract, transform, and load (ETL) operations. The replica instance focuses on query functionality.

ClickHouse primary-replica architecture

How We Created a Replica Instance for ClickHouse

1. Creating a Snapshot

We used the JuiceFS snapshot command to create a snapshot directory from the ClickHouse data directory on the primary instance, and then deployed a ClickHouse service on this directory.
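A minimal sketch of this step might look like the following. It assumes the `juicefs snapshot SRC DST` form for JuiceFS Cloud Service and `juicefs clone SRC DST` for the Community Edition; verify the exact syntax for your version. The paths in the usage note are hypothetical, and the function defaults to a dry run that only returns the command.

```python
import subprocess


def snapshot_argv(src, dst, edition="cloud"):
    """Build the command that snapshots directory `src` into `dst`.

    edition="cloud" uses `juicefs snapshot`; edition="community" uses
    `juicefs clone`.
    """
    subcommand = {"cloud": "snapshot", "community": "clone"}[edition]
    return ["juicefs", subcommand, src, dst]


def create_snapshot(src, dst, edition="cloud", dry_run=True):
    """Return the snapshot command and, unless dry_run is set, execute it."""
    argv = snapshot_argv(src, dst, edition)
    if not dry_run:
        subprocess.run(argv, check=True)  # raises if the command fails
    return argv
```

For example, `create_snapshot("/jfs/clickhouse", "/jfs/clickhouse-replica")` returns the command without running it, which is a convenient way to review what an automated job would do.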

2. Pausing Kafka Consumer Queues

Before starting the ClickHouse instance, we had to stop it from consuming stateful content from other data sources. For us, this meant pausing the Kafka consumer queues so that the replica would not compete with the primary instance for Kafka data.

3. Running ClickHouse on the Snapshot Directory

After starting the ClickHouse service, we injected some metadata to tell users when this ClickHouse instance was created.

4. Deleting ClickHouse Data Mutations

On the replica instance, we deleted all data mutations to improve system performance.
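The article does not say how the mutations were deleted. One possibility at the SQL level, sketched below as an assumption, is to list unfinished mutations from ClickHouse's `system.mutations` table and cancel each one with `KILL MUTATION`. The helper only builds the statements; running them through a client is left out, and the example row in the usage note is hypothetical.

```python
# Query that lists mutations that have not finished yet.
PENDING_MUTATIONS_SQL = (
    "SELECT database, table, mutation_id "
    "FROM system.mutations WHERE is_done = 0"
)


def quote(value):
    """Quote a string literal for SQL, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def kill_statements(rows):
    """Build one KILL MUTATION statement per (database, table, mutation_id) row."""
    return [
        "KILL MUTATION WHERE database = {} AND table = {} AND mutation_id = {}".format(
            quote(database), quote(table), quote(mutation_id)
        )
        for database, table, mutation_id in rows
    ]
```

Given a row such as `("default", "events", "mutation_3.txt")`, the helper produces a single statement scoped to that database, table, and mutation ID, so nothing outside the listed mutations is touched.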

5. Performing Continuous Replication

Snapshots only capture the state at the moment they are created. To ensure that the replica serves recent data, we periodically replace the existing replica instance with one built from a new snapshot. This method is simple and efficient because each replica instance begins with two copies and a pointer to one of them. Although a replication run can take ten minutes or more, we typically run it every hour, which suits our needs.
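The article does not describe how the pointer is implemented. One common way to realize "two copies and a pointer", shown here purely as an assumption, is a symbolic link that is switched atomically from the old copy to the freshly prepared one. The directory names are hypothetical.

```python
import os
import tempfile


def switch_pointer(pointer, new_target):
    """Atomically repoint the symlink `pointer` at `new_target`."""
    tmp = pointer + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)            # clean up a leftover from an earlier run
    os.symlink(new_target, tmp)   # build the new link under a temporary name
    os.replace(tmp, pointer)      # rename it over the old link in one step


if __name__ == "__main__":
    root = tempfile.mkdtemp()
    copy_a = os.path.join(root, "replica-a")  # hypothetical copy names
    copy_b = os.path.join(root, "replica-b")
    os.mkdir(copy_a)
    os.mkdir(copy_b)

    current = os.path.join(root, "current")
    switch_pointer(current, copy_a)  # serve queries from copy A
    switch_pointer(current, copy_b)  # after a refresh, switch to copy B
    print(os.readlink(current))
```

Because the rename replaces the link in a single step, readers that resolve the pointer see either the old copy or the new one, never a missing path.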

Our ClickHouse primary-replica architecture has been running stably for over a year. It has completed more than 20,000 replication operations without failure, demonstrating high reliability. Workload isolation and the stability of data replicas are key to improving performance. We successfully increased overall report availability from less than 95% to 99%, without any application-layer optimizations. In addition, this architecture supports elastic scaling, greatly enhancing flexibility. This allows us to develop and deploy new ClickHouse services as needed without complex operations.

What’s Next

Our plans for the future:

- We’ll develop an optimized control interface to automate instance lifecycle management, creation operations, and cache management.

- We also plan to optimize write performance. From the application layer, given the robust support for the Parquet open format, we can directly write most loads into the storage system outside ClickHouse for easier access. This allows us to use traditional methods to achieve parallel writes, thereby improving write performance.

- We noticed a new project, chDB, which allows users to embed ClickHouse functionality directly in a Python environment without requiring a ClickHouse server. By combining chDB with our current storage solution, we could achieve a completely serverless ClickHouse. This is a direction we are currently exploring.

Published at DZone with permission of Tao Ma. See the original article here.
