What Powers Similarity Search in Milvus Vector Database

This post introduces what Knowhere is, how it is different from Faiss, and how the code of Knowhere is structured.


As the core vector execution engine, Knowhere to Milvus is what an engine is to a sports car. This post introduces what Knowhere is, how it is different from Faiss, and how the code of Knowhere is structured.

The Concept of Knowhere

Narrowly speaking, Knowhere is an operation interface between the services in the upper layers of the system and the vector similarity search libraries (such as Faiss, Hnswlib, and Annoy) in the lower layers. In addition, Knowhere is in charge of heterogeneous computing: it controls which hardware (e.g., CPU or GPU) executes index building and search requests. This is how Knowhere gets its name: knowing where to execute the operations. More types of hardware, including DPU and TPU, will be supported in future releases.

In a broader sense, Knowhere also incorporates other third-party index libraries like Faiss. Therefore, as a whole, Knowhere is recognized as the core vector computation engine in the Milvus vector database.

From the concept of Knowhere, we can see that it only processes data computing tasks. Tasks like sharding, load balancing, and disaster recovery are beyond its scope.

Starting from Milvus 2.0.1, Knowhere (in the broader sense) became independent from the Milvus project.

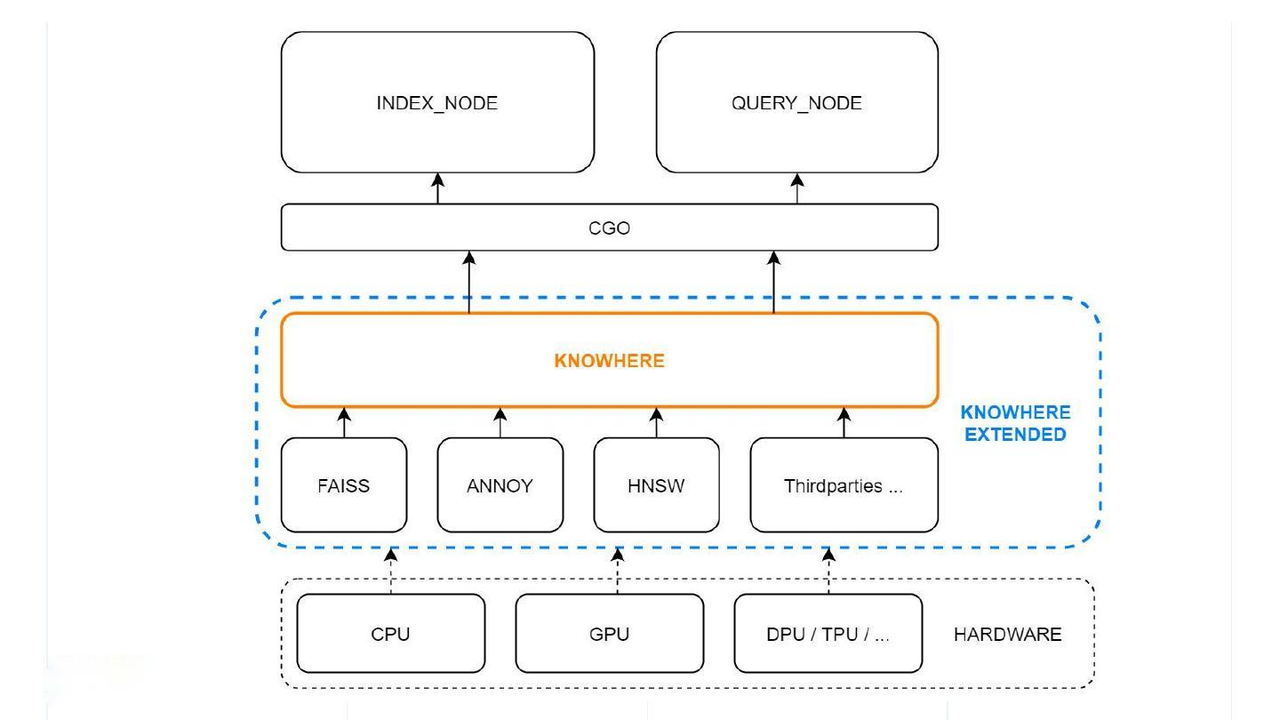

Knowhere in the Milvus Architecture

Computation in Milvus mainly involves vector and scalar operations. Knowhere only handles the operations on vectors in Milvus. The figure above illustrates the Knowhere architecture in Milvus.

The bottom-most layer is the system hardware. The third-party index libraries are on top of the hardware. Then Knowhere interacts with the index node and query node on the top via CGO.

This article talks about Knowhere in its broader sense, as marked within the blue frame in the architecture illustration.

Knowhere Vs Faiss

Knowhere not only further extends the functions of Faiss but also optimizes the performance. More specifically, Knowhere has the following advantages.

1. Support for BitsetView

Initially, bitset was introduced in Milvus for the purpose of “soft deletion”. A soft-deleted vector still exists in the database but will not be computed during a vector similarity search or query. Each bit in the bitset corresponds to an indexed vector. If a vector is marked as “1” in the bitset, it means this vector is soft-deleted and will not be involved during a vector search.

The bitset parameter is added to all the exposed Faiss index query APIs in Knowhere, including CPU and GPU indexes.
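To make the soft-deletion idea concrete, here is a minimal sketch of a brute-force search that consults a bitset before scoring each vector. `SimpleBitsetView` and `NearestLive` are hypothetical names invented for this illustration; they are not Knowhere's actual BitsetView class or query API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical, simplified stand-in for a bitset view: one bit per indexed vector.
// A bit set to 1 marks the vector at that offset as soft-deleted.
class SimpleBitsetView {
public:
    explicit SimpleBitsetView(std::size_t num_bits)
        : num_bits_(num_bits), bytes_((num_bits + 7) / 8, 0) {}

    void Set(std::size_t i) {
        assert(i < num_bits_);
        bytes_[i / 8] |= static_cast<std::uint8_t>(1u << (i % 8));
    }

    bool Test(std::size_t i) const {
        assert(i < num_bits_);
        return ((bytes_[i / 8] >> (i % 8)) & 1u) != 0;
    }

private:
    std::size_t num_bits_;
    std::vector<std::uint8_t> bytes_;
};

// Brute-force nearest-neighbor search (squared L2) that skips soft-deleted rows.
// Returns the offset of the closest live vector, or -1 if every vector is deleted.
long NearestLive(const std::vector<std::vector<float>>& data,
                 const std::vector<float>& query,
                 const SimpleBitsetView& deleted) {
    long best = -1;
    float best_dist = std::numeric_limits<float>::max();
    for (std::size_t i = 0; i < data.size(); ++i) {
        if (deleted.Test(i)) continue;  // soft-deleted: still stored, never scored
        assert(data[i].size() == query.size());
        float dist = 0.0f;
        for (std::size_t d = 0; d < query.size(); ++d) {
            const float diff = data[i][d] - query[d];
            dist += diff * diff;
        }
        if (dist < best_dist) {
            best_dist = dist;
            best = static_cast<long>(i);
        }
    }
    return best;
}
```

Setting a bit changes the search result without touching the stored vectors, which is what makes the deletion "soft".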

2. Support for More Similarity Metrics for Indexing Binary Vectors

Apart from Hamming, Knowhere also supports Jaccard, Tanimoto, Superstructure, and Substructure. Jaccard and Tanimoto can be used to measure the similarity between two sample sets while Superstructure and Substructure can be used to measure the similarity of chemical structures.
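As an illustration of how two of these metrics behave on packed binary vectors, the sketch below computes the Hamming distance and the Jaccard distance using their textbook definitions. The function names and the byte-packed `BinaryVector` type are assumptions made for this example, not Knowhere code.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using BinaryVector = std::vector<std::uint8_t>;  // 8 dimensions packed per byte

// Number of set bits in one byte.
inline std::size_t PopCount(std::uint8_t byte) {
    return std::bitset<8>(byte).count();
}

// Hamming distance: number of bit positions where the two vectors differ.
std::size_t HammingDistance(const BinaryVector& a, const BinaryVector& b) {
    assert(a.size() == b.size());
    std::size_t diff = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        diff += PopCount(static_cast<std::uint8_t>(a[i] ^ b[i]));
    }
    return diff;
}

// Jaccard distance: 1 - |intersection| / |union|, treating set bits as set members.
double JaccardDistance(const BinaryVector& a, const BinaryVector& b) {
    assert(a.size() == b.size());
    std::size_t inter = 0;
    std::size_t uni = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        inter += PopCount(static_cast<std::uint8_t>(a[i] & b[i]));
        uni += PopCount(static_cast<std::uint8_t>(a[i] | b[i]));
    }
    if (uni == 0) return 0.0;  // convention chosen here: two empty sets are identical
    return 1.0 - static_cast<double>(inter) / static_cast<double>(uni);
}
```

For the bytes 0xF0 and 0xCC, four bit positions differ, two bits are shared, and six bits are set in the union, so the Hamming distance is 4 and the Jaccard distance is 1 - 2/6.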

3. Support for AVX512 Instruction Set

Faiss itself supports multiple instruction sets including AArch64, SSE4.2, and AVX2. Knowhere further extends the supported instruction sets by adding AVX512, which can improve the performance of index building and query by 20% to 30% compared to AVX2.

4. Automatic SIMD-instruction Selection

Knowhere is designed to work well on a wide spectrum of CPU processors (both on-premises and on cloud platforms) with different SIMD instruction sets (e.g., SSE, AVX, AVX2, and AVX512). The challenge is this: given a single software binary (i.e., Milvus), how can it automatically invoke the suitable SIMD instructions on any CPU? Faiss does not support automatic SIMD instruction selection, so users need to specify the SIMD flag (e.g., “-msse4”) manually during compilation. Knowhere, by contrast, is built by refactoring the Faiss codebase. Common functions (e.g., similarity computing) that rely on SIMD acceleration are factored out. For each function, four versions (i.e., SSE, AVX, AVX2, and AVX512) are implemented, each in a separate source file, and each source file is compiled individually with the corresponding SIMD flag. At runtime, Knowhere then chooses the best-suited SIMD instructions based on the current CPU flags and links the right function pointers using hooking.
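The hooking pattern described above can be sketched roughly as follows. Everything here is an assumption for illustration: the `CpuFeatures` struct, the kernel names, and the `InstallHooks` function are hypothetical, and the per-instruction-set kernels simply forward to a scalar loop so that the sketch compiles on any machine. In a real build, each kernel would live in its own source file compiled with its own SIMD flag, and the feature flags would come from CPU detection. Only three SIMD variants plus a scalar fallback are shown to keep the example short.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical feature flags. A real build would fill these in from CPUID.
struct CpuFeatures {
    bool sse4_2 = false;
    bool avx2 = false;
    bool avx512 = false;
};

using L2SqrFn = float (*)(const float* x, const float* y, std::size_t dim);

// Portable scalar kernel, used as the fallback.
float L2SqrRef(const float* x, const float* y, std::size_t dim) {
    assert(x != nullptr && y != nullptr);
    float sum = 0.0f;
    for (std::size_t i = 0; i < dim; ++i) {
        const float diff = x[i] - y[i];
        sum += diff * diff;
    }
    return sum;
}

// Stand-ins for kernels that would each be compiled with a different SIMD flag.
// They forward to the scalar kernel here so the sketch runs anywhere.
float L2SqrSse(const float* x, const float* y, std::size_t dim) { return L2SqrRef(x, y, dim); }
float L2SqrAvx2(const float* x, const float* y, std::size_t dim) { return L2SqrRef(x, y, dim); }
float L2SqrAvx512(const float* x, const float* y, std::size_t dim) { return L2SqrRef(x, y, dim); }

// The "hook": callers always go through this pointer, never a specific kernel.
L2SqrFn g_l2_sqr = L2SqrRef;

// Pick the widest kernel the CPU supports and install it in the hook.
void InstallHooks(const CpuFeatures& cpu) {
    if (cpu.avx512) {
        g_l2_sqr = L2SqrAvx512;
    } else if (cpu.avx2) {
        g_l2_sqr = L2SqrAvx2;
    } else if (cpu.sse4_2) {
        g_l2_sqr = L2SqrSse;
    } else {
        g_l2_sqr = L2SqrRef;
    }
}
```

Because the selection happens once at startup and callers only ever dereference `g_l2_sqr`, the same binary can run on CPUs with different capabilities without recompilation.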

5. Other Performance Optimization

Read Milvus: A Purpose-Built Vector Data Management System for more about Knowhere’s performance optimization.

Understanding the Knowhere Code

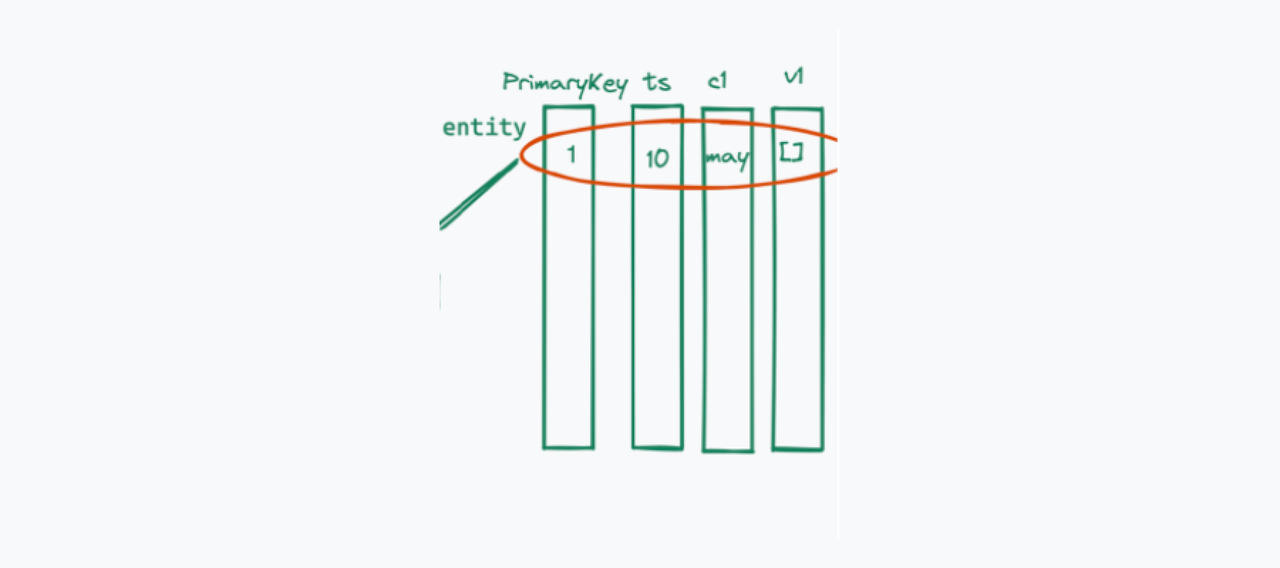

As mentioned in the first section, Knowhere only handles vector search operations. Therefore, Knowhere only processes the vector field of an entity (currently, only one vector field for entities in a collection is supported). Index building and vector similarity search are also targeted at the vector field in a segment.

Index

An index is a data structure that is independent of the original vector data. Indexing requires four steps: create an index, train data, insert data, and build an index.

For some AI applications, dataset training is a separate process from vector search. In this type of application, data from datasets are first trained and then inserted into a vector database like Milvus for similarity search. Open datasets like sift1M and sift1B provide data for training and testing. In Knowhere, however, the data for training and searching are mixed together: Knowhere trains on all the data in a segment, then inserts all the trained data and builds an index for them.
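The train-then-insert flow on a single segment can be illustrated with a toy example. The sketch below uses a one-dimensional scalar quantizer as the "index": training learns the value range, and insertion encodes each value into one byte using that range. `TinySqIndex` and `BuildIndexForSegment` are hypothetical names, and the quantizer is a deliberately simple stand-in rather than anything taken from Knowhere.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 1-D scalar-quantizer "index", for illustration only.
class TinySqIndex {
public:
    // Train: learn the value range from the data.
    void Train(const std::vector<float>& data) {
        assert(!data.empty());
        const auto mm = std::minmax_element(data.begin(), data.end());
        min_ = *mm.first;
        max_ = *mm.second;
        trained_ = true;
    }

    // Insert: encode each value into one byte using the learned range.
    void Add(const std::vector<float>& data) {
        assert(trained_);
        for (float v : data) {
            const float clamped = std::min(std::max(v, min_), max_);
            const long code = std::lround((clamped - min_) / Range() * 255.0f);
            codes_.push_back(static_cast<std::uint8_t>(code));
        }
    }

    // Approximate reconstruction of the i-th inserted value.
    float Decode(std::size_t i) const {
        return min_ + static_cast<float>(codes_.at(i)) / 255.0f * Range();
    }

    std::size_t Count() const { return codes_.size(); }

private:
    // Avoid dividing by zero when all training values are equal.
    float Range() const { return (max_ > min_) ? (max_ - min_) : 1.0f; }

    float min_ = 0.0f;
    float max_ = 0.0f;
    bool trained_ = false;
    std::vector<std::uint8_t> codes_;
};

// The same segment data is used both for training and for insertion.
TinySqIndex BuildIndexForSegment(const std::vector<float>& segment) {
    TinySqIndex index;     // create the index
    index.Train(segment);  // train on all data in the segment
    index.Add(segment);    // insert the same data
    return index;          // the index is now built
}
```

Since the quantizer is trained on exactly the data it later stores, the minimum and maximum values decode without error, and everything in between lands within one quantization step.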

Knowhere Code Structure

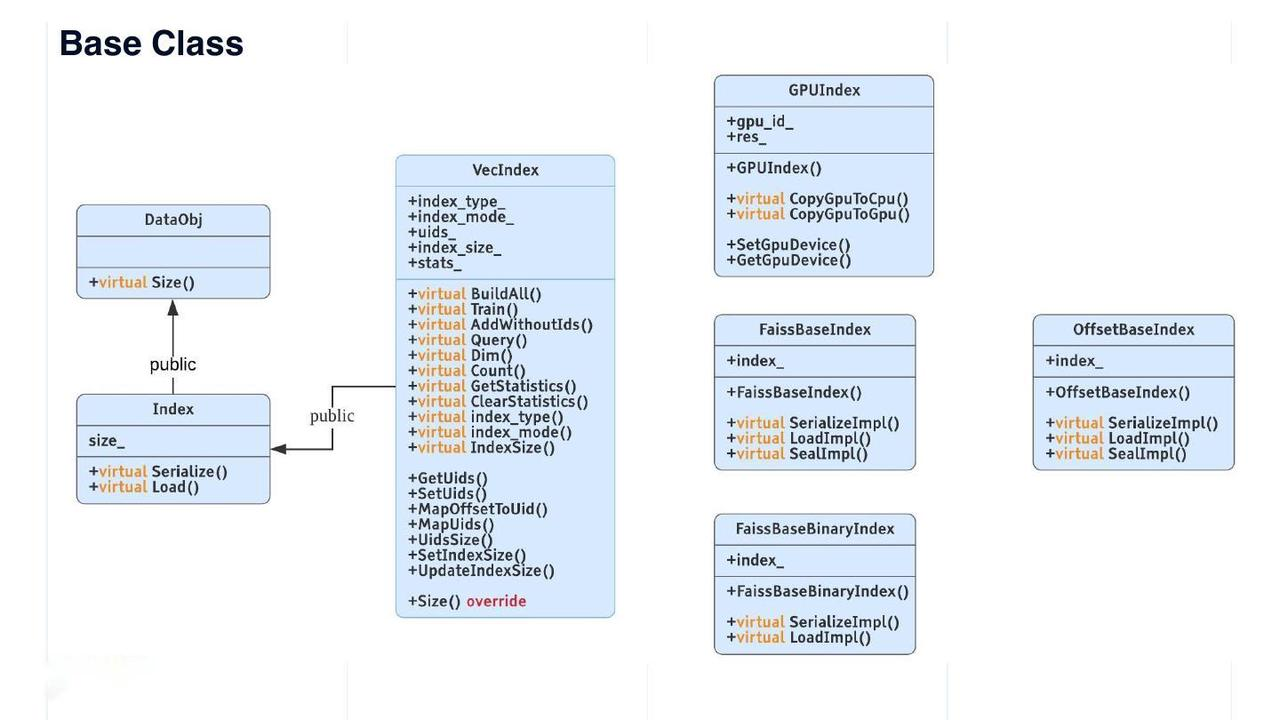

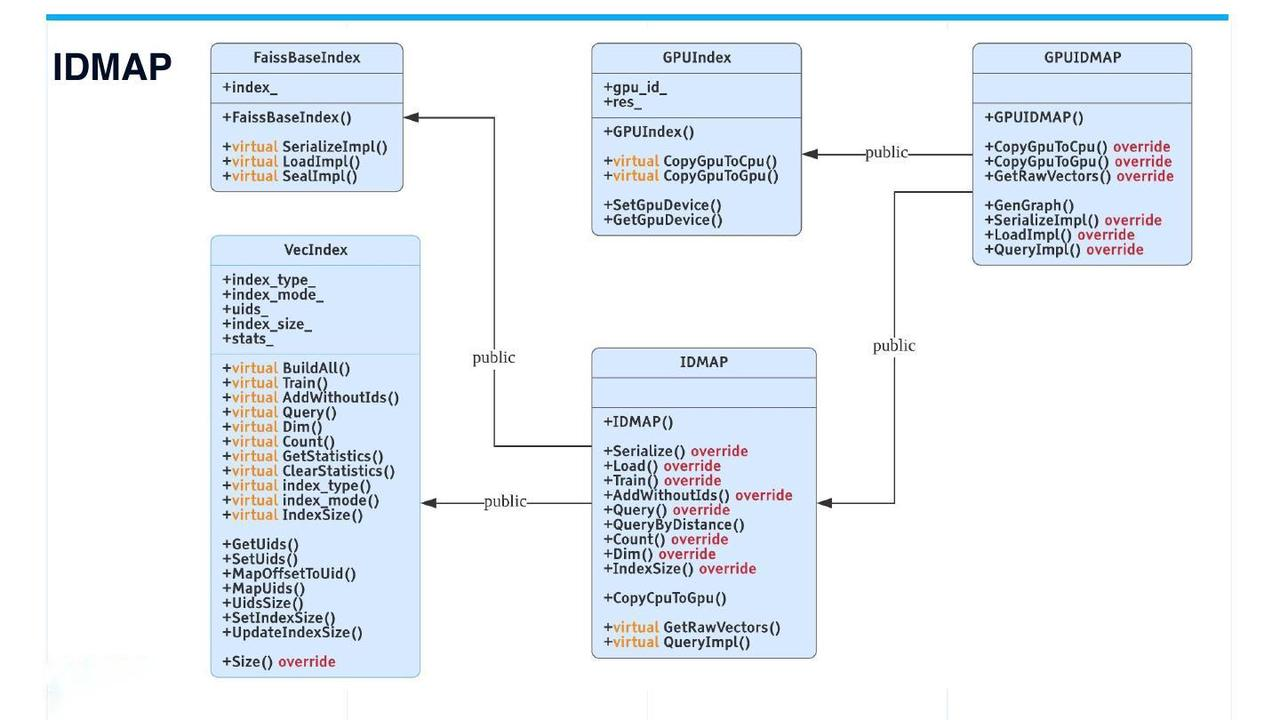

DataObj is the base class of all data structures in Knowhere. Size() is the only virtual method in DataObj. The Index class inherits from DataObj and adds a field named "size_". The Index class also has two virtual methods: Serialize() and Load(). The VecIndex class, derived from Index, is the virtual base class for all vector indexes. VecIndex provides methods including Train(), Query(), GetStatistics(), and ClearStatistics().

Knowhere base classes.
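The base-class chain described above might look roughly like the following sketch. The class and method names come from the text, but the signatures are simplified assumptions (the real classes work with dataset and configuration objects), the statistics methods are omitted, and `ToyIndex` is a hypothetical one-dimensional index added only so that the hierarchy can be instantiated.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

using BinaryBlob = std::vector<std::uint8_t>;  // simplified serialized form

class DataObj {
public:
    virtual ~DataObj() = default;
    virtual std::size_t Size() const = 0;
};

class Index : public DataObj {
public:
    std::size_t Size() const override { return size_; }
    virtual BinaryBlob Serialize() const = 0;
    virtual void Load(const BinaryBlob& blob) = 0;

protected:
    std::size_t size_ = 0;  // size of the index data in bytes
};

class VecIndex : public Index {
public:
    virtual void Train(const std::vector<float>& data) = 0;
    virtual std::size_t Query(float q) const = 0;  // offset of the nearest value
};

// Hypothetical concrete index over 1-D values, used only to exercise the interfaces.
class ToyIndex final : public VecIndex {
public:
    void Train(const std::vector<float>& data) override {
        data_ = data;
        size_ = data_.size() * sizeof(float);
    }

    std::size_t Query(float q) const override {
        assert(!data_.empty());
        std::size_t best = 0;
        for (std::size_t i = 1; i < data_.size(); ++i) {
            if (std::fabs(data_[i] - q) < std::fabs(data_[best] - q)) best = i;
        }
        return best;
    }

    BinaryBlob Serialize() const override {
        BinaryBlob blob(data_.size() * sizeof(float));
        if (!blob.empty()) std::memcpy(blob.data(), data_.data(), blob.size());
        return blob;
    }

    void Load(const BinaryBlob& blob) override {
        assert(blob.size() % sizeof(float) == 0);
        data_.resize(blob.size() / sizeof(float));
        if (!blob.empty()) std::memcpy(data_.data(), blob.data(), blob.size());
        size_ = blob.size();
    }

private:
    std::vector<float> data_;
};
```

Code that only needs the size of an object can hold a `DataObj` reference, while search code works against `VecIndex` without knowing the concrete index type.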

Other index types are listed on the right in the figure above.

- The Faiss index has two subclasses: FaissBaseIndex for all indexes on floating-point vectors, and FaissBaseBinaryIndex for all indexes on binary vectors.

- GPUIndex is the base class for all Faiss GPU indexes.

- OffsetBaseIndex is the base class for all self-developed indexes. Only vector ID is stored in the index file. As a result, an index file size for 128-dimensional vectors can be reduced by 2 orders of magnitude. We recommend taking the original vectors into consideration as well when using this type of index for vector similarity search.

Technically speaking, IDMAP is not an index but is instead used for brute-force search. When vectors are inserted into the vector database, neither data training nor index building is required. Searches are conducted directly on the inserted vector data.

However, for the sake of code consistency, IDMAP also inherits from the VecIndex class with all its virtual interfaces. The usage of IDMAP is the same as other indexes.
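A minimal sketch of this idea: a flat "index" that exposes the same Train/Add/Query shape as a real index, but whose training step does nothing and whose query scans every stored vector. `FlatIndex` and its signatures are hypothetical and are not the actual IDMAP class.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical flat index: stores raw vectors and searches them exhaustively.
class FlatIndex {
public:
    explicit FlatIndex(std::size_t dim) : dim_(dim) { assert(dim > 0); }

    // Kept for interface consistency; brute-force search needs no training.
    void Train(const std::vector<float>& /*unused*/) {}

    // Appends vectors stored back to back (row-major, dim_ floats each).
    void Add(const std::vector<float>& vectors) {
        assert(vectors.size() % dim_ == 0);
        data_.insert(data_.end(), vectors.begin(), vectors.end());
    }

    // Returns the ids of the k nearest vectors by squared L2 distance, nearest first.
    std::vector<std::size_t> Query(const std::vector<float>& q, std::size_t k) const {
        assert(q.size() == dim_);
        const std::size_t n = data_.size() / dim_;
        std::vector<std::pair<float, std::size_t>> scored;
        scored.reserve(n);
        for (std::size_t i = 0; i < n; ++i) {
            float dist = 0.0f;
            for (std::size_t d = 0; d < dim_; ++d) {
                const float diff = data_[i * dim_ + d] - q[d];
                dist += diff * diff;
            }
            scored.emplace_back(dist, i);
        }
        k = std::min(k, n);
        std::partial_sort(scored.begin(),
                          scored.begin() + static_cast<std::ptrdiff_t>(k),
                          scored.end());
        std::vector<std::size_t> ids;
        for (std::size_t i = 0; i < k; ++i) ids.push_back(scored[i].second);
        return ids;
    }

private:
    std::size_t dim_;
    std::vector<float> data_;
};
```

Keeping the no-op `Train()` means calling code can treat the flat index exactly like any other index type.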

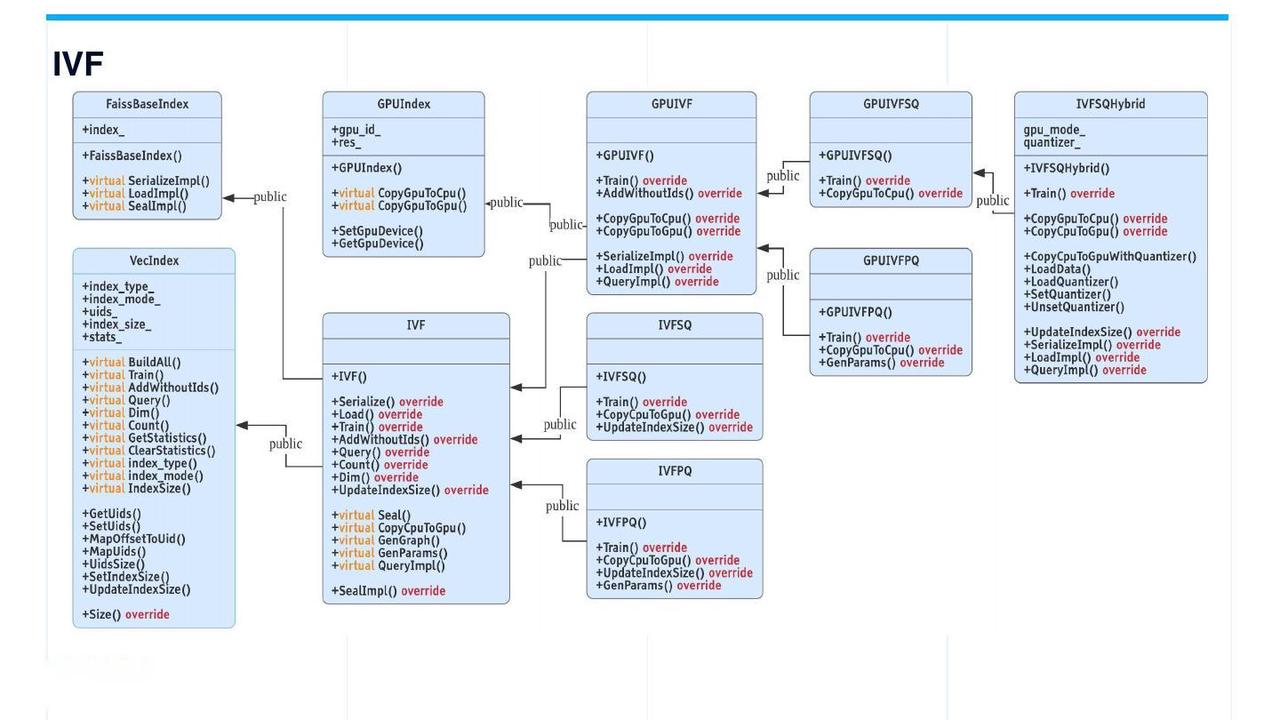

The IVF (inverted file) indexes are the most frequently used. The IVF class is derived from VecIndex and FaissBaseIndex and further extends to IVFSQ and IVFPQ. GPUIVF is derived from GPUIndex and IVF. Then GPUIVF further extends to GPUIVFSQ and GPUIVFPQ.

IVFSQHybrid is a class for a self-developed hybrid index in which coarse quantization is executed on the GPU and the search within the bucket is executed on the CPU. This type of index leverages the computing power of the GPU while reducing the number of memory copies between CPU and GPU. IVFSQHybrid has the same recall rate as GPUIVFSQ but delivers better performance.

The base class structure for binary indexes is relatively simple. BinaryIDMAP and BinaryIVF are derived from FaissBaseBinaryIndex and VecIndex.

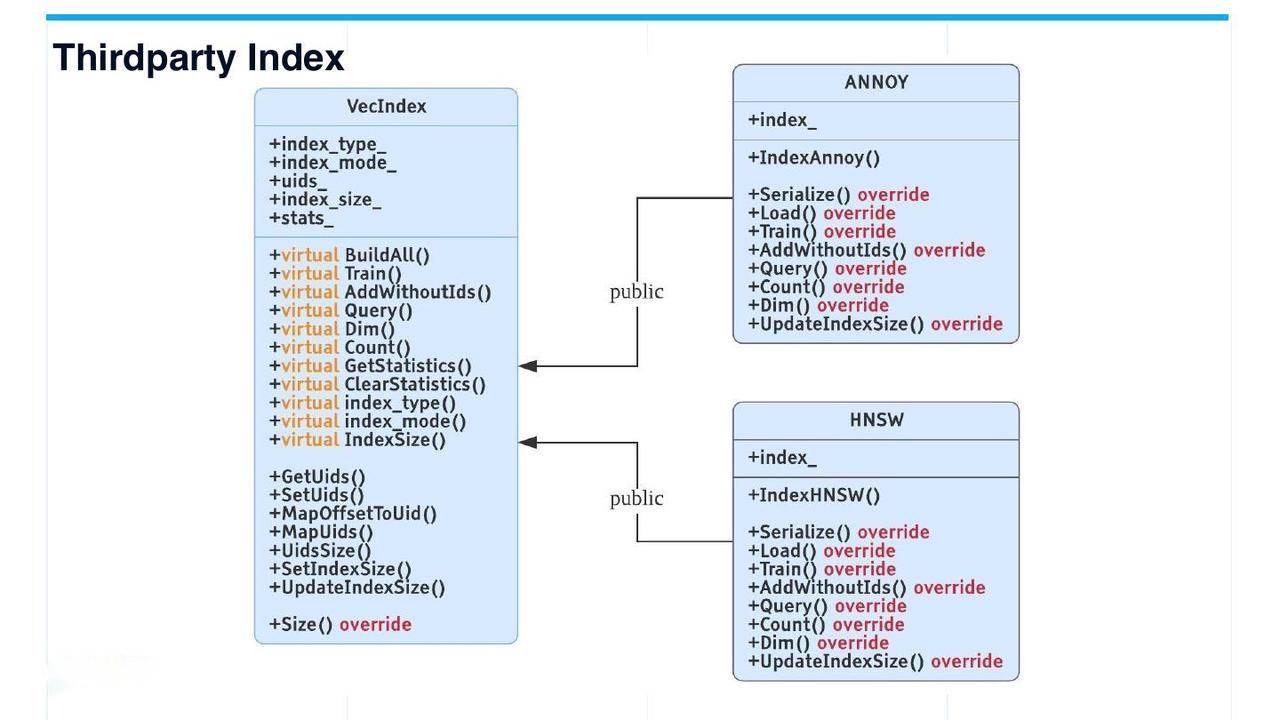

Currently, only two types of third-party indexes are supported apart from Faiss: tree-based index Annoy, and graph-based index HNSW. These two common and frequently used third-party indexes are both derived from VecIndex.

Adding Indexes to Knowhere

If you want to add new indexes to Knowhere, you can refer to existing indexes first:

- To add a quantization-based index, refer to IVF_FLAT.

- To add a graph-based index, refer to HNSW.

- To add a tree-based index, refer to Annoy.

After referring to the existing index, you can follow the steps below to add a new index to Knowhere.

- Add the name of the new index in IndexEnum. The data type is a string.

- Add a data validation check for the new index in the file ConfAdapter.cpp. The validation check mainly validates the parameters for data training and query.

- Create a new file for the new index. The new index should derive from VecIndex and implement the necessary virtual interfaces of VecIndex.

- Add the index building logic for the new index in VecIndexFactory::CreateVecIndex().

- Add a unit test under the unittest directory.
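The steps above can be sketched in miniature as follows. This is a toy model under stated assumptions: the `index_enum` namespace, the `Config` map, `CheckMyNewIndexConfig`, `MyNewIndex`, and the free function `CreateVecIndex` are all hypothetical stand-ins for IndexEnum, ConfAdapter.cpp, the new index file, and VecIndexFactory::CreateVecIndex(), and their real signatures differ.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Step 1: register the index name as a string (stand-in for IndexEnum).
namespace index_enum {
const char* const kIdmap = "IDMAP";
const char* const kMyNewIndex = "MY_NEW_INDEX";  // hypothetical new index
}  // namespace index_enum

// Step 2: validate the parameters (stand-in for a check in ConfAdapter.cpp).
using Config = std::map<std::string, int>;

bool CheckMyNewIndexConfig(const Config& cfg) {
    const auto it = cfg.find("dim");
    return it != cfg.end() && it->second > 0;
}

// Step 3: the new index derives from the common base class.
class VecIndex {
public:
    virtual ~VecIndex() = default;
    virtual std::string Type() const = 0;
};

class IdmapIndex : public VecIndex {
public:
    std::string Type() const override { return index_enum::kIdmap; }
};

class MyNewIndex : public VecIndex {
public:
    std::string Type() const override { return index_enum::kMyNewIndex; }
};

// Step 4: teach the factory how to build the new index.
std::unique_ptr<VecIndex> CreateVecIndex(const std::string& type) {
    if (type == index_enum::kIdmap) {
        return std::unique_ptr<VecIndex>(new IdmapIndex());
    }
    if (type == index_enum::kMyNewIndex) {
        return std::unique_ptr<VecIndex>(new MyNewIndex());
    }
    throw std::invalid_argument("unsupported index type: " + type);
}

// Step 5: a unit test covering the new index.
void TestMyNewIndex() {
    Config good;
    good["dim"] = 128;
    assert(CheckMyNewIndexConfig(good));
    assert(!CheckMyNewIndexConfig(Config{}));
    assert(CreateVecIndex(index_enum::kMyNewIndex)->Type() == "MY_NEW_INDEX");
}
```

Routing creation through a single factory keyed by the string name means callers never need to include the header of a specific index type.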

Published at DZone with permission of Yudong Cai. See the original article here.
