Unveiling Vulnerabilities via Generative AI

In this article, learn more about code scanning and reporting exposure of security-sensitive parameters in MuleSoft APIs.

Code scanning to detect exposed security-sensitive parameters is a crucial practice in MuleSoft API development.

Code scanning involves the systematic analysis of MuleSoft source code to identify vulnerabilities. These vulnerabilities range from security-sensitive parameters, such as password or accessKey, hardcoded in the source code to the same values stored in plain text in property files. Malicious actors might exploit them to compromise the confidentiality, integrity, or availability of the applications.

Lack of Vulnerability Auto-Detection

Neither MuleSoft Anypoint Studio nor the Anypoint Platform provides a feature to govern the vulnerabilities mentioned above. They can be managed through design-time governance, which requires a manual review of the code. However, many tools are available that can scan the deployed code or the code repository to find such vulnerabilities. You can also write a custom script in any language to perform the same task, although custom code adds another layer of complexity and maintenance.

Using Generative AI To Review the Code for Detecting Vulnerabilities

In this article, I present how Generative AI can be leveraged to detect such vulnerabilities. I have used the OpenAI foundation model “gpt-3.5-turbo” to demonstrate a code scan that finds the aforementioned vulnerabilities. However, any foundation model can be used to implement this use case.

This can be implemented in Python or any other language. The Python code can be used in the following ways:

- Python code can be executed manually to scan the code repository.

- It can be integrated into the CI/CD build pipeline, which can scan and report the vulnerabilities and fail the build if any are present.

- It can be integrated into any other program, such as the Lambda function, which can run periodically and execute the Python code to scan the code repository and report vulnerabilities.
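For the build pipeline option, the script mainly has to turn scan findings into a readable report and a non-zero exit code. The following is a minimal sketch under assumptions: the function names `report` and `exit_code_for` and the shape of a finding (a dict with `file` and `key`) are hypothetical and not part of the original code.

```python
def exit_code_for(findings):
    """Return 1 when at least one finding exists so the build fails, else 0."""
    return 1 if findings else 0


def report(findings):
    """Build a plain-text summary with one line per finding."""
    if not findings:
        return "No exposed security-sensitive parameters found."
    lines = [f"{len(findings)} potential exposure(s) found:"]
    for finding in findings:
        # Each finding is assumed to be {"file": ..., "key": ...}
        lines.append(f"  {finding['file']}: {finding['key']}")
    return "\n".join(lines)
```

In a pipeline step, the script would print `report(findings)` and end with `sys.exit(exit_code_for(findings))`, since most CI systems treat a non-zero exit code as a failed step.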

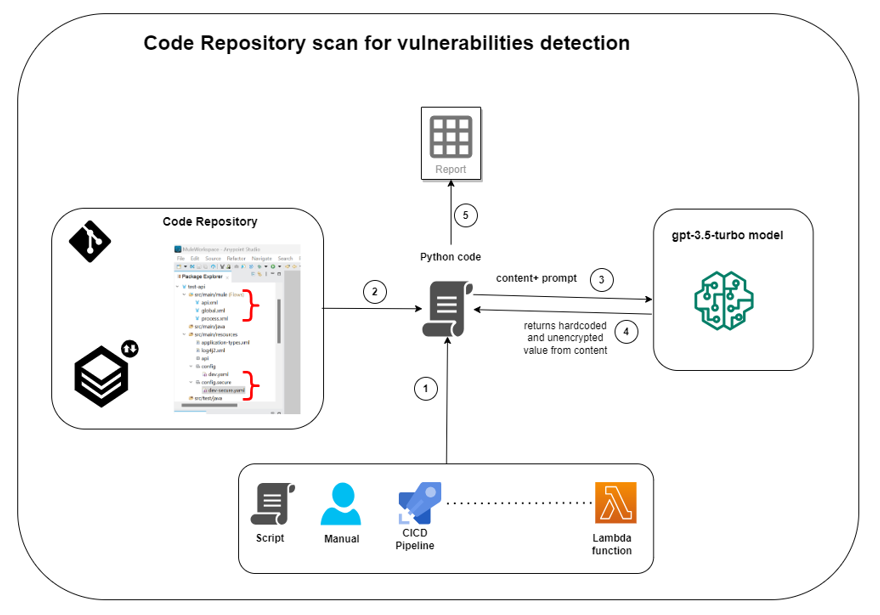

High-Level Architecture

Architecture

- There are many ways to execute the Python code. The most practical one is to integrate it into the CI/CD build pipeline, so that the pipeline executes the Python code.

- Python code reads the MuleSoft code XML files and property files.

- Python code sends the MuleSoft code content and a prompt to the OpenAI gpt-3.5-turbo model.

- OpenAI model returns the hardcoded and unencrypted values.

- Python code generates the report of vulnerabilities found.
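The file-reading step above can be sketched as follows. This is an illustration under assumptions: `collect_files` is a hypothetical helper, the folder layout is the standard one described in this article, and the suffix list is an assumption about which files count as property files. Separating XML files from property files lets the script pick the matching prompt for each group.

```python
from pathlib import Path

# Assumed layout: flows under src/main/mule, property files under src/main/resources
XML_DIR = Path("src/main/mule")
RESOURCES_DIR = Path("src/main/resources")
PROPERTY_SUFFIXES = {".yaml", ".yml", ".properties"}


def collect_files(project_root):
    """Return (xml_files, property_files) found in a MuleSoft project folder."""
    root = Path(project_root)
    # Flow and configuration XML files, including sub-folders
    xml_files = sorted((root / XML_DIR).rglob("*.xml"))
    # Property files only; other resources such as log4j2.xml are skipped
    property_files = sorted(
        path
        for path in (root / RESOURCES_DIR).rglob("*")
        if path.is_file() and path.suffix.lower() in PROPERTY_SUFFIXES
    )
    return xml_files, property_files
```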

Implementation Details

A MuleSoft API project has two major sections where security-sensitive parameters can be exposed as plain text. The src/main/mule folder contains all the XML files, which hold the process flows, connection details, and exception handling. A MuleSoft API project may also have custom Java code. However, in this article, I have not considered the custom Java code used in the MuleSoft API.

The src/main/resources folder contains the environment property files. These can be .properties or .yaml files for development, quality, and production. They contain property keys and values, for example, user, password, host, port, accessKey, and secretAccesskey; sensitive values are expected to be stored in an encrypted format.

Based on the MuleSoft project structure, implementation can be achieved in two steps:

MuleSoft XML File Scan

The actual code is defined as process flows in MuleSoft Anypoint Studio. We can write Python code that uses the OpenAI foundation model with a prompt to scan the MuleSoft XML files containing the code implementation and find hardcoded parameter values. For example:

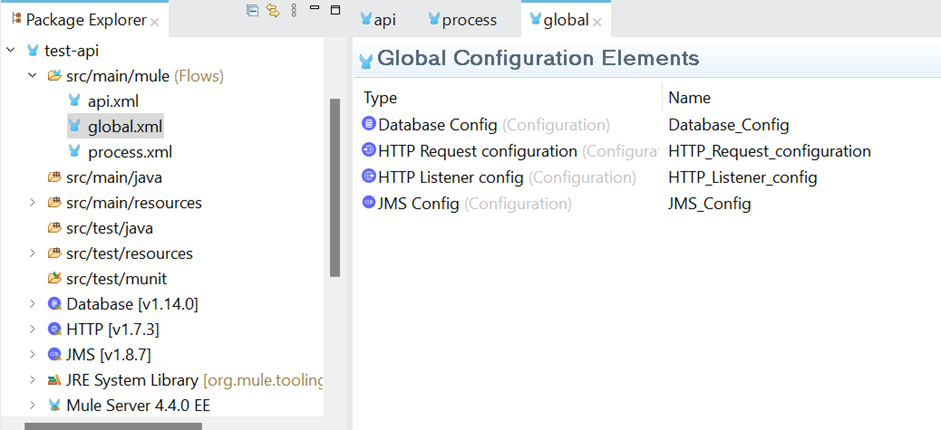

- Global.xml/Config.xml file: This file contains all the connector configurations. This is the standard recommended by MuleSoft. However, it may vary depending on the standards and guidelines defined in your organization. A generative AI foundation model can use this content to find hardcoded values.

- Other XML files: These files may contain custom code or process flows that make API calls, DB calls, or calls to any other system. They may have connection credentials hardcoded by mistake. A generative AI foundation model can use this content to find hardcoded values.
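To make the distinction between hardcoded and non-hardcoded values concrete, here is a small deterministic sketch that could serve as a cross-check on the model output. It is not the approach used in this article: `classify_attributes` is a hypothetical helper, and the `SENSITIVE` set of attribute names is an assumption that would need to be extended for the connectors in your project.

```python
import re
import xml.etree.ElementTree as ET

# A property placeholder such as ${db.password} or ${secure::db.password}
PLACEHOLDER = re.compile(r"\$\{[^}]+\}")
# Assumed list of sensitive attribute names (lowercase); extend as needed
SENSITIVE = {"password", "accesskey", "secretkey", "clientsecret"}


def classify_attributes(xml_text):
    """Split sensitive attributes into (hardcoded, placeholders) lists."""
    hardcoded, placeholders = [], []
    for element in ET.fromstring(xml_text).iter():
        for name, value in element.attrib.items():
            if name.lower() not in SENSITIVE:
                continue  # ignore attributes such as name or host
            target = placeholders if PLACEHOLDER.search(value) else hardcoded
            target.append((name, value))
    return hardcoded, placeholders
```

For a connection element with `password="admin123"`, the pair lands in the first list; with `password="${secure::db.password}"`, it lands in the second.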

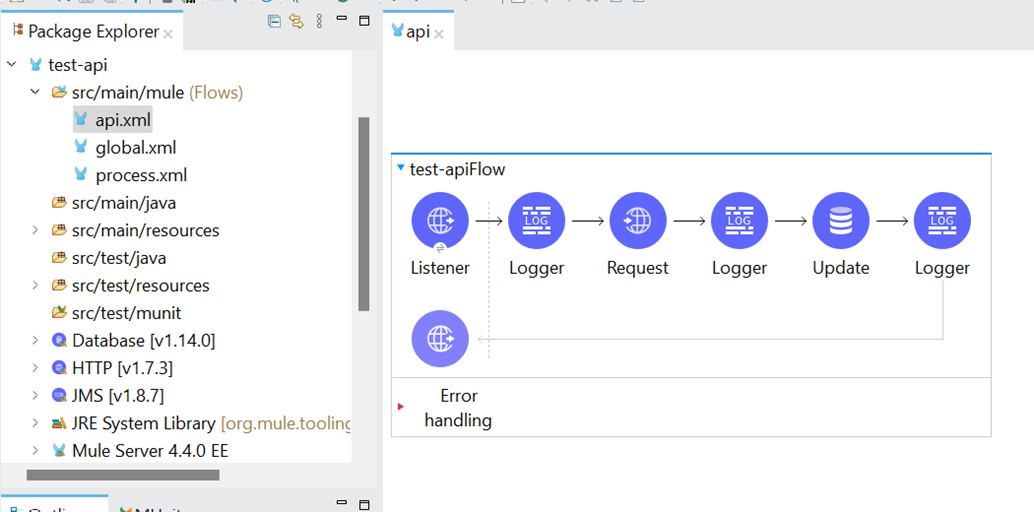

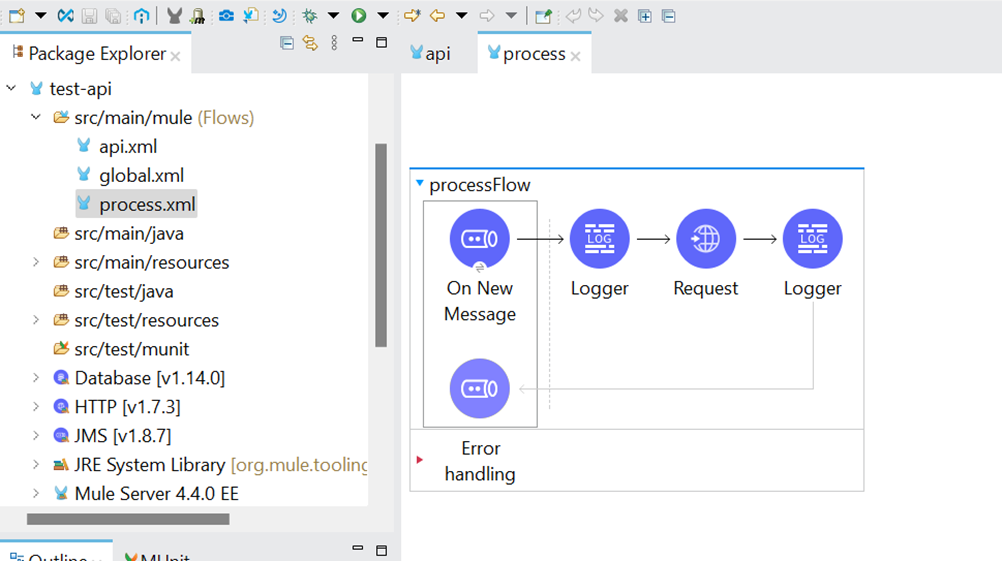

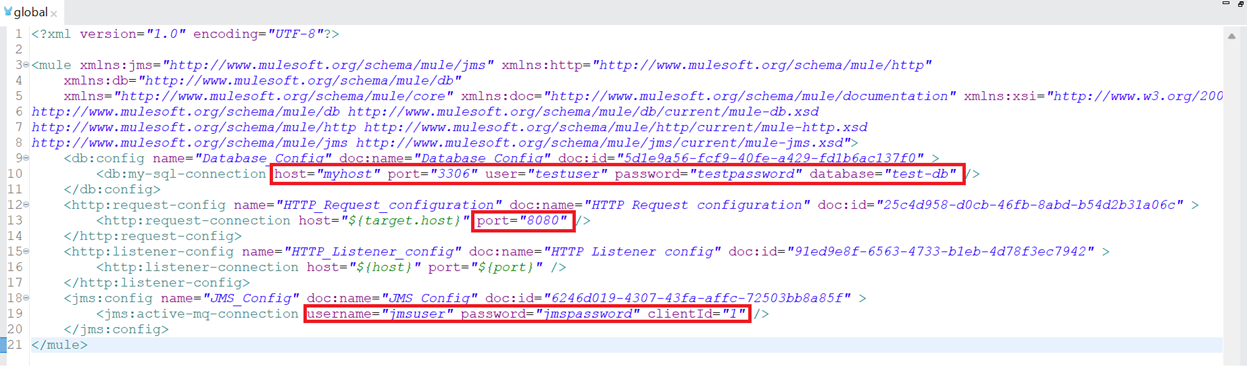

I have provided screenshots of a sample MuleSoft API project. The project has three XML files: api.xml contains the REST API flow, process.xml has a JMS-based asynchronous flow, and global.xml has all the connection configurations.

api.xml

process.xml

global.xml

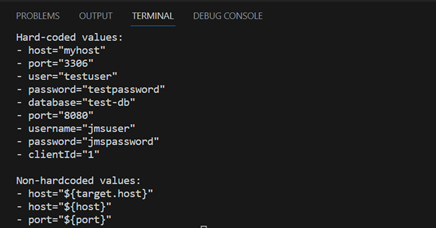

For demonstration purposes, I have used a global.xml file. The code snippet has many hardcoded values for demonstration. Hardcoded values are highlighted in red boxes:

![Hardcoded values]()

Python Code

The Python code below uses the OpenAI foundation model to scan the above XML files and find the hardcoded values.

    import os
    import sys

    import openai  # openai.ChatCompletion is the pre-1.0 interface of the openai package
    from dotenv import load_dotenv

    # Load API_KEY from a local .env file
    load_dotenv()
    APIKEY = os.getenv('API_KEY')
    openai.api_key = APIKEY

    file_path = "C:/Work/MuleWorkspace/test-api/src/main/mule/global.xml"

    # Read the MuleSoft XML file; stop if it cannot be read,
    # because there is nothing to send to the model
    try:
        with open(file_path, 'r') as file:
            file_content = file.read()
        print(file_content)
    except FileNotFoundError:
        sys.exit("File not found.")
    except Exception as e:
        sys.exit(f"An error occurred: {e}")

    # The system message describes the task; the user message carries the XML
    message = [
        {"role": "system", "content": (
            "You will be provided with xml as input, and your task is to list the "
            "non-hard-coded value and hard-coded value separately. "
            "Example: For instance, if you were to find the hardcoded values, "
            "the hard-coded value look like this: name=\"value\". "
            "if you were to find the non-hardcoded values, "
            "the non-hardcoded value look like this: host=\"${host}\" "
        )},
        {"role": "user", "content": f"input: {file_content}"}
    ]

    # temperature=0 keeps the answer as repeatable as possible
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=message,
        temperature=0,
        max_tokens=256
    )

    result = response["choices"][0]["message"]["content"]
    print(result)

Once this code is executed, we get the following outcome:

The result from the Generative AI Model

Similarly, we can provide api.xml and process.xml to scan the hard-coded values. You can even modify the Python code to read all the XML files iteratively and get the result in sequence for all the files.
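One way to iterate over all the XML files is sketched below. The names `build_messages`, `scan_folder`, and `complete` are hypothetical, and the system prompt is shortened for readability. The model call is passed in as a function so that the loop can be tried without network access.

```python
from pathlib import Path

# Shortened version of the system prompt used in the script above
SYSTEM_PROMPT = (
    "You will be provided with xml as input, and your task is to list the "
    "non-hard-coded value and hard-coded value separately."
)


def build_messages(xml_text):
    """Build the chat messages for one XML file."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"input: {xml_text}"},
    ]


def scan_folder(folder, complete):
    """Call `complete` once per XML file in `folder`; return {file name: reply}."""
    results = {}
    for path in sorted(Path(folder).glob("*.xml")):
        xml_text = path.read_text(encoding="utf-8")
        results[path.name] = complete(build_messages(xml_text))
    return results
```

Here `complete` would wrap the same `openai.ChatCompletion.create(...)` call as in the script above and return `response["choices"][0]["message"]["content"]`. Large files may need a higher `max_tokens` value than 256 so that the reply is not cut off.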

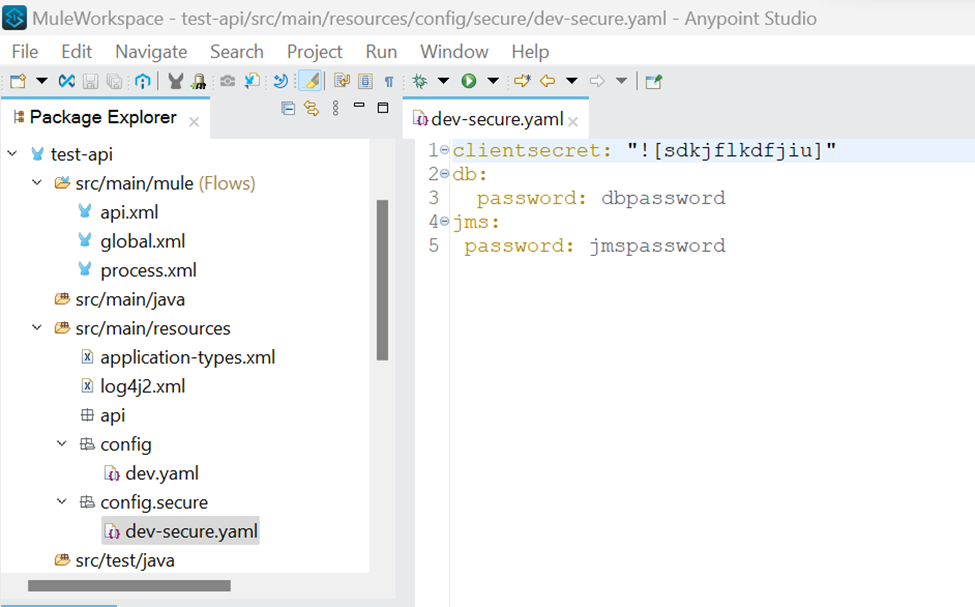

Scanning the Property Files

We can use the Python code to send another prompt to the AI model to find the plain text passwords kept in property files. In the following screenshot, the dev-secure.yaml file has client_secret as an encrypted value, while db.password and jms.password are kept as plain text.

config file

Python Code

The Python code below uses the OpenAI foundation model to scan config files and find the unencrypted values.

import openai,os,glob

from dotenv import load_dotenv

load_dotenv()

APIKEY=os.getenv('API_KEY')

openai.api_key= APIKEY

file_path = "C:/Work/MuleWorkspace/test-api/src/main/resources/config/secure/dev-secure.yaml"

try:

with open(file_path, 'r') as file:

file_content = file.read()

except FileNotFoundError:

print("File not found.")

except Exception as e:

print("An error occurred:", e)

message = [

{"role": "system", "content": "You will be provided with xml as input, and your task is to list the encrypted value and unencrypted value separately. Example: For instance, if you were to find the encrypted values, the encrypted value look like this: ""![asdasdfadsf]"". if you were to find the unencrypted values, the unencrypted value look like this: ""sdhfsd"" "},

{"role": "user", "content": f"input: {file_content}"}

]

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=message,

temperature=0,

max_tokens=256

)

result=response["choices"][0]["message"]["content"]

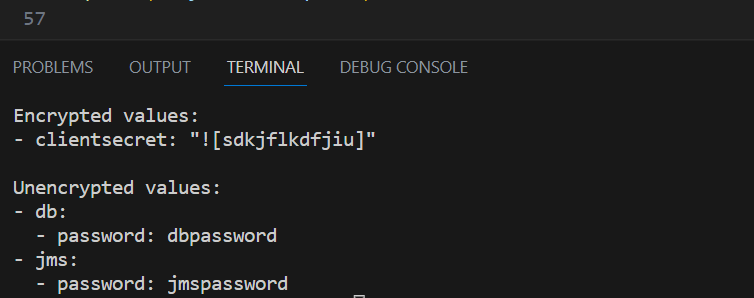

print(result)Once this code is executed, we get the following outcome:

result from Generative AI
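Because encrypted values follow the `![...]` pattern shown in the prompt, the model's answer for property files can be cross-checked with a simple pattern match. The sketch below is an assumption-laden illustration, not the method used in this article: `find_plaintext_secrets` is a hypothetical helper, `SENSITIVE_HINTS` is an assumed list of key-name fragments, and the line-by-line parsing only handles simple `key: value` and `key=value` lines.

```python
import re

# Encrypted values are wrapped as ![...]
ENCRYPTED = re.compile(r"^!\[.+\]$")
# Matches simple "key: value" (YAML) and "key=value" (.properties) lines
KEY_VALUE = re.compile(r"^([\w.\-]+)\s*[:=]\s*(.+)$")
# Assumed fragments that mark a key as security-sensitive
SENSITIVE_HINTS = ("password", "secret", "accesskey")


def find_plaintext_secrets(text):
    """Return (line number, key) for sensitive keys whose value is not ![...]."""
    findings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        match = KEY_VALUE.match(raw.strip())
        if not match:
            continue  # comments, blank lines, YAML parent keys without a value
        key = match.group(1)
        value = match.group(2).strip().strip("\"'")
        sensitive = any(hint in key.lower() for hint in SENSITIVE_HINTS)
        if sensitive and not ENCRYPTED.match(value):
            findings.append((number, key))
    return findings
```

A disagreement between this check and the model output is a useful signal that a finding needs a manual look.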

Impact of Generative AI on the Development Life Cycle

Generative AI can have a significant impact on the development life cycle, and it can be leveraged for several related use cases.

Efficient and Comprehensive Analysis

Generative AI models like GPT-3.5 have the ability to comprehend and generate human-like text. When applied to code review, they can analyze code snippets, provide suggestions for improvements, and even identify patterns that might lead to bugs or vulnerabilities. This technology enables a comprehensive examination of code in a relatively short span of time.

Automated Issue Identification

Generative AI can assist in detecting potential issues such as syntax errors, logical flaws, and security vulnerabilities. By automating these aspects of code review, developers can allocate more time to higher-level design decisions and creative problem-solving.

Adherence To Best Practices

Through analysis of coding patterns and context, Generative AI can offer insights on adhering to coding standards and best practices.

Learning and Improvement

Generative AI models can "learn" from vast amounts of code examples and industry practices. This knowledge allows them to provide developers with contextually relevant recommendations. As a result, both the developers and the AI system benefit from a continuous learning cycle, refining their understanding of coding conventions and emerging trends.

Conclusion

In conclusion, conducting a code review to find security-sensitive parameters exposed as plain text using OpenAI's technology has proven to be a valuable and efficient process. Leveraging OpenAI for code review not only accelerated the review process but also contributed to producing more robust and maintainable code. However, it's important to note that while AI can greatly assist in the review process, human oversight and expertise remain crucial for making informed decisions and fully understanding the context of the code.
