Unlocking the Potential of IoT Applications With Real-Time Alerting Using Apache Kafka Data Streams and KSQL

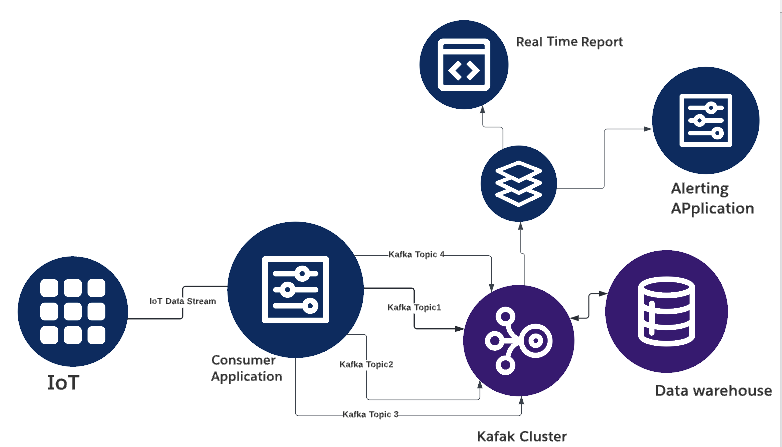

This article takes the reader through the use of Kafka data streams in IoT devices with the presentation of real-time analysis.

Join the DZone community and get the full member experience.

Join For FreeIoT devices have revolutionized the way businesses collect and utilize data. IoT devices generate an enormous amount of data that can provide valuable insights for informed decision-making. However, processing this data in real-time can be a significant challenge, particularly when managing large data volumes from numerous sources. This is where Apache Kafka and Kafka data streams come into play.

Apache Kafka is a distributed streaming platform that can handle large amounts of data in real-time. It is a messaging system commonly used for sending and receiving data between systems and applications. It can also be used as a data store for real-time processing. Kafka data streams provide a powerful tool for processing and analyzing data in real-time, enabling real-time analytics and decision-making.

One of the most important applications of Kafka data streams is real-time monitoring. IoT devices can be used to monitor various parameters, such as temperature, humidity, and pressure. By using Kafka data streams, this data can be processed and analyzed in real-time, allowing for early detection of issues and immediate response. This can be particularly beneficial in manufacturing, where IoT devices can monitor machine performance and alert maintenance personnel to potential problems.

Another application of Kafka data streams is predictive maintenance. By analyzing IoT data in real-time using Kafka data streams, it is possible to predict when maintenance will be required on devices. This can help to prevent downtime and reduce maintenance costs. For instance, sensors in vehicles can monitor engine performance and alert the driver to potential problems before they cause a breakdown.

Energy management is another area where IoT devices can be leveraged using Kafka data streams. IoT devices can be used to monitor energy consumption in real-time. By using Kafka data streams, this data can be analyzed to identify energy-saving opportunities and optimize energy usage. For example, smart buildings can use sensors to monitor occupancy and adjust heating and cooling systems accordingly.

Smart cities are another application of Kafka data streams for IoT devices. IoT devices can be used to monitor and control various aspects of city life, such as traffic flow, air quality, and waste management. By using Kafka data streams, this data can be processed and analyzed in real-time, allowing for quick response to changing conditions and improved quality of life for residents. For example, sensors in smart traffic lights can adjust the timing of the lights to reduce congestion and improve traffic flow.

One of the advantages of using Kafka data streams for IoT devices is that it enables real-time analytics and decision-making. This is important because it allows businesses to respond quickly to changing conditions and make informed decisions based on current data. The real-time nature of Kafka data streams means that businesses can monitor and analyze data as it is generated rather than waiting for batch processing to occur. This enables businesses to be more agile and responsive.

We are using Apache Camel to consume IoT data and write it to a Kafka topic:

import org.apache.camel.builder.RouteBuilder;

import org.apache.camel.component.kafka.KafkaConstants;

import org.apache.camel.model.dataformat.JsonLibrary;

public class RestApiToKafkaRoute extends RouteBuilder {

@Override

public void configure() throws Exception {

// Set up Kafka component

from("kafka:{{kafka.bootstrap.servers}}")

.routeId("kafka")

.to("log:received-message");

// Set up REST API component

from("timer://rest-api-timer?period={{rest.api.timer.period}}")

.routeId("rest-api")

.to("rest:get:{{rest.api.url}}")

.unmarshal().json(JsonLibrary.Jackson, DeviceData.class)

.split(body())

.process(exchange -> {

// Extract device ID from data and set Kafka topic header

DeviceData deviceData = exchange.getIn().getBody(DeviceData.class);

String deviceId = deviceData.getDeviceId();

exchange.getMessage().setHeader(KafkaConstants.TOPIC, deviceId);

})

.marshal().json(JsonLibrary.Jackson)

.to("kafka:{{kafka.topic}}");

}

}

KSQL is a streaming SQL engine for Apache Kafka. It enables real-time data processing and analysis by providing a simple SQL-like language for working with Kafka data streams. KSQL makes it easy to create real-time dashboards and alerts that can be used for monitoring and decision-making. Real-time dashboards are an important tool for monitoring IoT devices using Kafka data streams. Dashboards can be used to display key performance indicators (KPIs) in real-time, allowing businesses to monitor the health and performance of their IoT devices. Dashboards can also be used to visualize data trends and patterns, making it easier to identify opportunities for optimization and improvement. Alerts are another important tool for monitoring IoT devices using Kafka data streams. Alerts can be used to notify businesses when certain conditions are met, such as when a device exceeds a certain threshold or when a potential issue is detected. Alerts can be sent via email, SMS, or other means, allowing businesses to respond quickly to potential issues.

sample Kql query for dashboard for IOT data alerts:

CREATE TABLE pressure_alerts AS

SELECT device_id, pressure

FROM iot_data_stream

WHERE pressure > 100;

CREATE STREAM pressure_alerts_stream (device_id VARCHAR, pressure INT, alert_type VARCHAR)

WITH (kafka_topic='pressure_alerts', value_format='JSON');

CREATE TABLE pressure_alert_count AS

SELECT alert_type, COUNT(*)

FROM pressure_alerts_stream

WINDOW TUMBLING (SIZE 1 MINUTE)

GROUP BY alert_type;

SELECT * FROM pressure_alert_count;KSQL also provides a real-time dashboard for monitoring and visualizing data in Kafka data streams. The dashboard can display real-time data streams and visualizations and can be used to track performance metrics and detect anomalies in real-time. This enables users to gain real-time insights and make informed decisions based on the data.

Below is a sample program that enables the consumption of data from a Kafka topic and issues an alert based on a predetermined threshold limit, as shown in the example where the pressure level exceeds 100.

import com.twilio.Twilio;

import com.twilio.rest.api.v2010.account.Message;

import com.twilio.type.PhoneNumber;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.kstream.KStream;

import org.apache.kafka.streams.kstream.Produced;

import java.util.Properties;

public class AlertTrigger {

// Set Twilio Account SID and Auth Token

public static final String ACCOUNT_SID = "your_account_sid_here";

public static final String AUTH_TOKEN = "your_auth_token_here";

// Set Twilio phone number and mobile app endpoint

public static final String TWILIO_PHONE_NUMBER = "+1234567890";

public static final String MOBILE_APP_ENDPOINT = "https://your.mobile.app/endpoint";

public static void main(String[] args) {

// Set up properties for Kafka Streams

Properties props = new Properties();

props.put(StreamsConfig.APPLICATION_ID_CONFIG, "alert-trigger");

props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

props.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

props.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

// Build Kafka Streams topology

StreamsBuilder builder = new StreamsBuilder();

// Read data from Kafka topic

KStream<String, String> input = builder.stream("iot-data");

// Define KSQL query for alert trigger

String ksql = "SELECT device_id, pressure FROM iot-data WHERE pressure > 100";

// Create Kafka Streams application and start processing

KafkaStreams streams = new KafkaStreams(builder.build(), props);

streams.start();

// Set up Twilio client

Twilio.init(ACCOUNT_SID, AUTH_TOKEN);

// Process alerts

input.filter((key, value) -> {

// Execute KSQL query to check for alert condition

// If pressure is greater than 100, trigger alert

// Replace this with your actual KSQL query

return true;

})

.mapValues(value -> {

// Create alert message

String message = "Pressure has exceeded threshold value of 100!";

return message;

})

.peek((key, value) -> {

// Send notification to mobile app endpoint

Message message = Message.creator(new PhoneNumber(MOBILE_APP_ENDPOINT), new PhoneNumber(TWILIO_PHONE_NUMBER), value).create();

})

.to("alert-topic", Produced.with(Serdes.String(), Serdes.String()));

// Gracefully shut down Kafka Streams application

Runtime.getRuntime().addShutdownHook(new Thread(streams::close));

}

}

Overall, Apache Kafka and Kafka data streams, combined with Kafka Connect and KSQL, offer a powerful toolset for processing and analyzing real-time data from IoT devices. By integrating IoT devices with Kafka data streams, organizations can gain real-time insights and improve decision-making, leading to significant improvements in efficiency, cost savings, and quality of life. The KSQL dashboard provides a powerful way to visualize and monitor the data in real-time, allowing users to quickly identify trends, anomalies, and potential issues. With the continued growth of IoT devices and the increasing demand for real-time analytics, Kafka data streams and KSQL are likely to become even more important in the years to come.

Use Case for IoT Applications With Real-Time Alerting Using Apache Kafka Data Streams:

- Instantaneous Monitoring of Aero Engine Performance

- Aero Engine Compliance Test Evaluations.

Opinions expressed by DZone contributors are their own.

Comments