Universal Semantic Layer: Going Meta on Data, Functionality, Governance, and Semantics

What is a universal semantic layer, and how is it different from a semantic layer? Is there actual semantics involved? Who uses that, how, and what for?

Join the DZone community and get the full member experience.

Join For FreeWhat is a universal semantic layer, and how is it different from a semantic layer? Is there actual semantics involved? Who uses that, how, and what for?

When Cube Co-founder Artyom Keydunov started hacking away a Slack chatbot back in 2017, he probably didn't have answers to those questions. All he wanted to do was find a way to access data using a text interface, and Slack seemed like a good place to do that.

Keydunov had plenty of time to experiment, validate, and develop Cube, as well as get insights along the way. We caught up and talked about all of the above, as well as Cube's latest features and open-source core.

Back in 2017, chatbots were fashionable. The time between 2017 and 2024 saw chatbots go out of fashion and then make a huge comeback with the advent of ChatGPT. Not much came out of Keydunov’s Slack chatbot in 2017, but that was the springboard to launch into developing Cube. Marking the full circle, Cube is now announcing new Large Language Model integrations.

The state of the art in Natural Language Processing in 2017 was not what it is today. But the problem that caught Keydunov's attention was a different one. How do you go from natural language to some multidimensional data model representation, and then from that to SQL?

That intermediate layer that Keydunov started building to solve that problem is what gradually evolved to Cube: a universal semantic layer. But what exactly is a semantic layer, and how is a universal semantic layer different?

Semantic Layers and the One Layer To Rule Them All

As per Keydunov's definition, even though multidimensional data models are at the heart of semantic layers, you don't have to know or care about them to benefit from using tools that build on them. For Keydunov, when you drag and drop metrics into a canvas on a Business Intelligence (BI) tool, you are using a semantic layer.

"Today's semantic layers live inside of BI tools. They essentially take measures and dimensions, all of these high-level business objects such as revenue and active users, all of these definitions, and translate them down to the underlying SQL queries to run against the back end", Keydunov said.

The idea is not new, and Keydunov elaborated on the history. But what's changed in the last few years is scale and diversification. Where relying on a single BI tool used to be the rule, today it's the exception. So what happens when you have an explosion of different data visualization and consumption tools used in organizations?

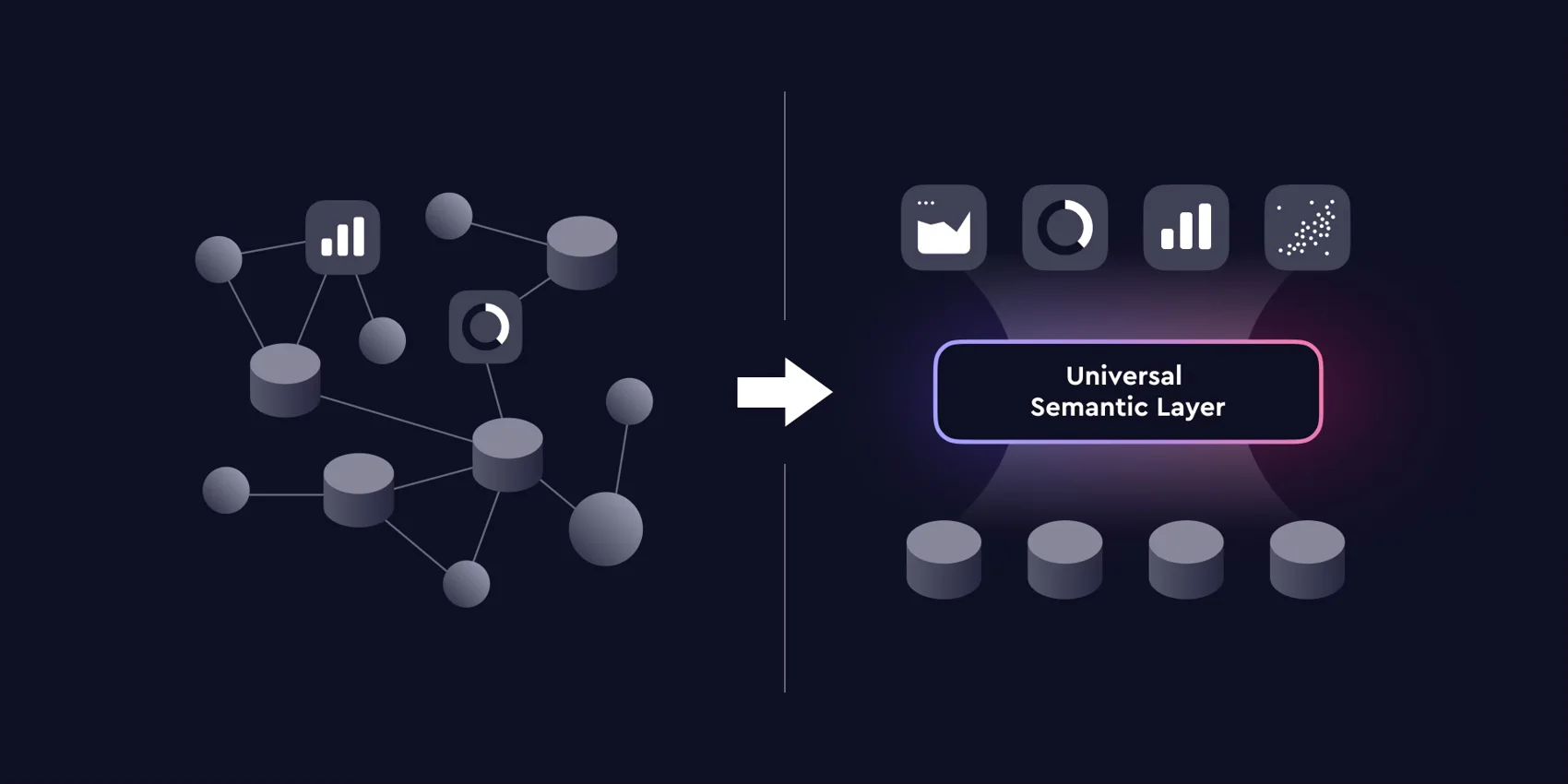

A mess, that's what. Even if semantic layers exist in each tool, their definitions soon start drifting apart. This defeats the purpose of having a semantic layer in the first place, and that's what gave birth to the notion of a universal semantic layer.

"You have all these tools. They all have semantic layers. They all have definitions. You probably don't want to repeat yourself and build the same metric across multiple systems. So let's take out the definition and put it in one place, where you can have it under version control.

You can apply all the best practices, and then just use that metric across all of these BI tools. And if you wanna add 10 more BI tools, just add them. You still have your metrics in a single place", as Keydunov put it.

Going Meta

That sounds like a reasonable approach, but it also raises some questions. In a way, that's a timeless problem that universal semantic layers are trying to address: unifying access over a number of data sources, aka data integration. The difference here is that instead of working directly on the data layer, integrating BI tools is like data integration gone meta.

When integrating relational databases, for example, there is a standard that can be used to query across all of them — SQL. But what about BI tools — how do you query across them? In Cube, that can be done in 3 ways.

The first one is actually SQL, as Cube offers the equivalent of virtual tables internally. The second one is JSON, be it vanilla or the GraphQL flavor. And the third one is MDX for the Microsoft ecosystem.

As far as metric definition goes, in Cube that can be done using YAML or Python. As Keydunov shared, this reflects Cube's philosophy of treating everything as code. That may sound cool, but it also comes with some implications.

Going Full Circle

The idea of treating data and definitions as software artifacts and applying version control to them is gaining momentum. However, it's hard to imagine non-technical users writing YAML or Python code.

Intuitively, one might expect a tool like Cube that offers a universal semantic layer over BI tools to be addressed by business users. Notably, BI tools have been through the "self-service" wave.

The key idea there was to enable business users to use BI tools with little or no intervention from the technologically savvy. Cube, however, is used primarily by data engineering types who mediate on behalf of business users as per Keydunov.

If you need data engineers to access BI tools, does that mean we've come full circle? Keydunov acknowledges the concern.

On the one hand, he believes that there needs to be some kind of balance between "moving fast and breaking things" — in this case, metric definitions — and slow and cumbersome change cycles. People should be empowered to experiment and make ad-hoc changes, but incorporating them into organizational repositories should be properly governed.

On the other hand, Keydunov also thinks that the answer to making things more accessible for non-technical users may be Large Language Model-powered co-pilots. "If you think about data models as just being code, why wouldn't LLMs be able to generate that code in the future?", as he puts it.

Large Language Models and Context

Using LLMs to generate data models is an interesting idea, even though the long-term effects of LLM-generated code remain to be seen. Cube's latest release uses LLMs and semantic layer context to help users access data in data warehouses.

This, too, takes a meta approach. Instead of aiming to query the data store in the backend directly, the queries are hitting Cube's semantic layer. This, Keydunov noted, makes those queries simpler to produce. Cube takes the queries as input and uses the context stored in the form of metric definitions to expand the queries and direct them to the data store.

Universal Semantic Layer. Image: Cube

Keydunov's experience in implementing this points to an important takeaway. The hard part was not getting the LLM to produce SQL. It was getting it to produce the right SQL, taking into account all the context — aka schema — required for this.

The team at Cube took a mixed approach to pass on this context to the LLM, using both context windows and RAG. At some point, Keydunov referred to this context as "just text." At another point, he referred to it as "a knowledge graph."

Semantics, Knowledge Graphs, and Semantic Layers

This brings up the question of whether semantics in the form of knowledge graphs are actually used in semantic layers. Semantics about semantics has always been a challenge, Keydunov said.

A Data Cube vocabulary and a metric ontology do exist, but it doesn't look like they're used in the semantic layer ecosystem. Just getting convergence on terminology among vendors was hard enough as per Keydunov.

Recent research points to the fact that using context for RAG in the form of knowledge graphs yields better results compared to using the same context in the form of database schemas. And Graph RAG is gaining traction. So perhaps this could be an idea worth considering for semantic layers as well.

Keydunov noted that people in the Cube community built on these experiments by extending them to run using Kube's semantic layer instead of knowledge graphs, purportedly getting even better results. Going from a DDL to a knowledge graph is an improvement, and then going from a knowledge graph to a semantic layer may be another improvement as per Keydunov.

Cube Features and Availability

Cube was designed to work with all SQL-enabled data sources, including cloud data warehouses, query engines, and application databases. It also supports other data sources such as Elasticsearch, MongoDB, Druid, Parquet, CSV, and JSON. Most of the drivers for data sources are supported either directly by the Cube team or by their vendors.

There are also community-supported drivers, as Cube's core is open source. People can create and run data models on the open-source version. The commercial SaaS version of Cube comes with features such as a caching engine and the newly released LLM integration and chart prototyping.

Published at DZone with permission of George Anadiotis. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments