Understanding and Reducing PostgreSQL Replication Lag

Replication lag in PostgreSQL is the delay in synchronizing changes from the primary server to replicas, affecting performance and consistency.

Join the DZone community and get the full member experience.

Join For FreeReplication lag in PostgreSQL occurs when changes made on the primary server take time to reflect on the replica server. Whether you use streaming or logical replication, lag can impact performance, consistency, and system availability. This post covers the types of replication, their differences, lag causes, mathematical formulas for lag estimation, monitoring techniques, and strategies to minimize replication lag.

Types of Replication in PostgreSQL

Streaming Replication

Streaming replication continuously sends Write-Ahead Log (WAL) changes from the primary to one or more replica servers in near real-time. The replica applies the changes sequentially as they're received. This method replicates the entire database and ensures replicas stay synchronized.

Advantages

- Low latency with near real-time synchronization.

- Efficient for full database replication.

Disadvantages

- Replicas are read-only, so all write transactions must go to the primary node.

- If the network connection breaks, lag can increase significantly.

Logical Replication

Logical replication transfers data-level changes rather than low-level WAL data. It enables selective replication, where only specific tables or parts of a database are replicated. Logical replication uses a logical decoding process to convert WAL changes into SQL-like changes.

Advantages

- Allows selective replication of specific tables or schemas.

- Supports writable replicas with conflict resolution options.

Disadvantages

- Higher latency due to logical decoding overhead.

- It is less efficient than streaming replication for large datasets.

How Replication Lag Occurs

Replication lag occurs when the rate at which changes are generated on the primary server exceeds the rate at which they can be processed and applied to the replica server. This imbalance can occur due to various underlying factors, each contributing to delays in data synchronization. The most common causes of replication lag are:

Network Latency

Network latency refers to the time data travels from the primary server to the replica server. WAL (Write-Ahead Log) segments are continuously transmitted over the network during streaming replication. Even minor delays in network transmission can accumulate, causing the replica to lag.

Causes

- High network round-trip times (RTT).

- More bandwidth to handle high volumes of WAL data.

- Network congestion or packet loss.

If the primary server generates significant changes during peak traffic, a slow or overloaded network can cause a bottleneck, preventing the replica from receiving WAL changes.

Solution

Use low-latency, high-bandwidth network connections and enable WAL compression (wal_compression = on) to reduce data size during transmission.

I/O Bottlenecks

I/O bottlenecks occur when a replica server's disk is too slow to write incoming WAL changes. Streaming replication relies on writing changes to disk before they are applied, so any delays in the I/O subsystem may cause lag to build up.

Causes

- Slow or overloaded hard drives (HDDs).

- Insufficient disk write throughput.

- Disk contention from other processes.

- If the replica server uses spinning disks (HDD) rather than solid-state drives (SSD), WAL changes may not be written fast enough to keep up with data changes, causing the replica to fall behind the primary.

Solution

To optimize a replica's disk I/O, use SSDs for faster write speeds and isolate replication processes from other disk-intensive tasks.

CPU/Memory Constraints

Replication processes require CPU and memory to decode, write, and apply changes. If a replica server lacks sufficient processing power or memory, it may struggle to keep up with incoming modifications, resulting in replication lag.

Causes

- Limited CPU cores or slow processors.

- Insufficient memory for WAL buffers.

- Other processes consume CPU or memory resources.

- If the replica is processing large transactions or running queries alongside replication, the CPU may become saturated, slowing the replication process.

Solution

Allocate more CPU cores and memory to the replica server. Increase the size of the wal_buffers to improve WAL processing efficiency.

Heavy Workloads on the Primary Server

Replication lag can also occur when the primary server generates too many changes too quickly for the replica to handle. Large transactions, bulk inserts, or frequent updates can overwhelm replication.

Causes

- Bulk data imports or large transactions.

- High-frequency updates to large tables.

- High concurrency workloads on the primary.

- The transaction load may be too heavy if the primary server processes multiple large transactions simultaneously, such as during a bulk data import. The volume of WAL data can exceed what the replica can process in real-time, increasing lag.

Solution

Optimize transactions by batching more minor updates and avoiding long-running transactions. If strict synchronization isn’t critical, use asynchronous replication to reduce the replication burden.

Resource Contention

Resource contention occurs when multiple processes compete for the same resources, such as CPU, memory, or disk I/O. This can happen on either the primary or replica server and lead to delays in replication processing.

Causes

- Other processes consume disk I/O, CPU, or memory.

- Background tasks such as backups or analytics running concurrently.

- Network contention between replication traffic and other data transfers.

- If the replica server also runs backups or analytical queries, competition for CPU and disk resources might slow the replication process.

Solution

Isolate replication workloads from other resource-intensive processes. Schedule backups and analytics during off-peak hours to prevent interference with replication.

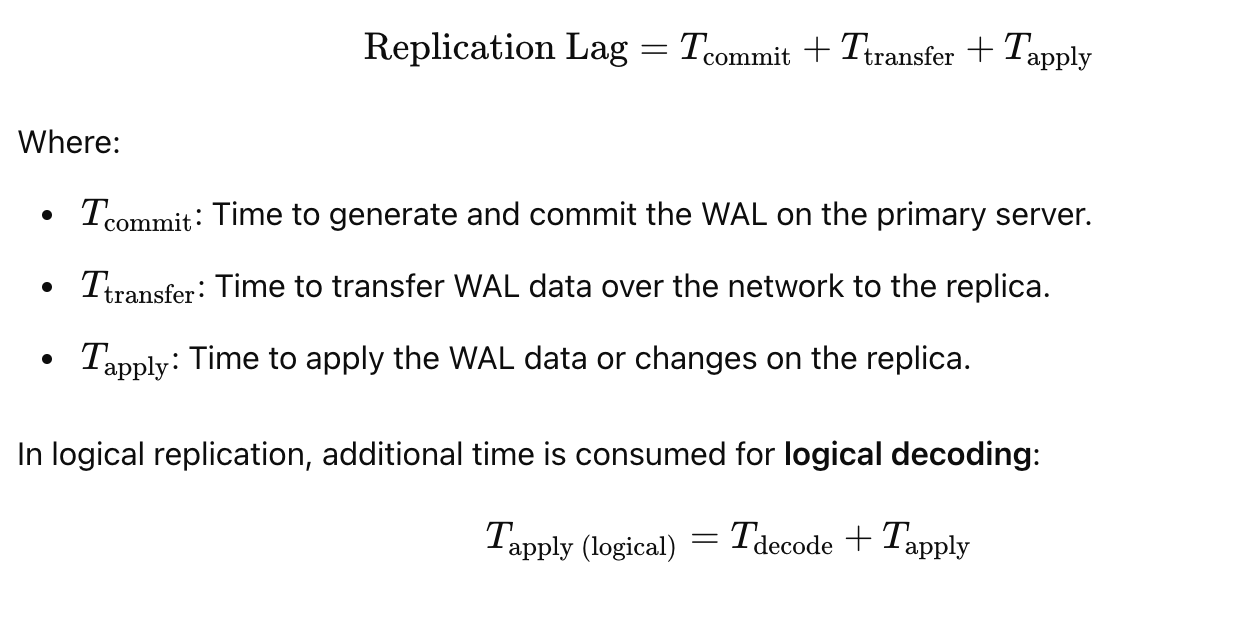

Mathematical Formula for Replication Lag

Use the following formula to calculate replication lag:

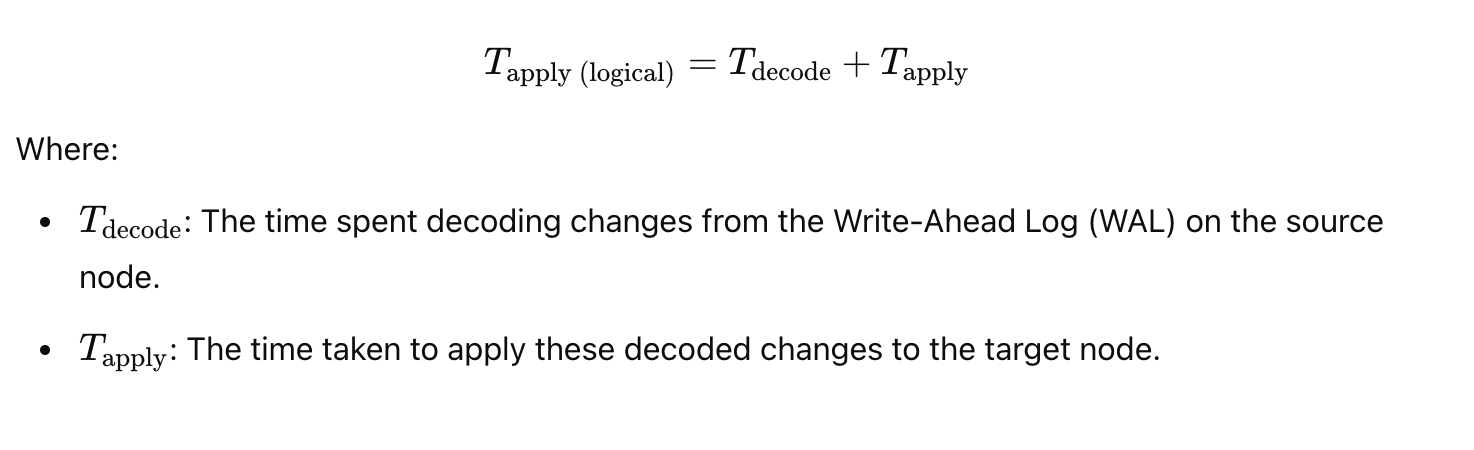

In logical replication, additional time is consumed by logical decoding:

Monitoring Replication Lag

Streaming Replication Monitoring

The pg_stat_replication view can be used to monitor streaming replication lag. It provides insights into the state and lag between the primary and replica servers.

SELECT application_name, state,

pg_size_pretty(sent_lsn - write_lsn) AS lag_bytes,

sync_state

FROM pg_stat_replication;

sent_lsn: Last WAL location sent to the replica.write_lsn: Last WAL location written on the replica.lag_bytes: The difference between the two indicates lag.

Logical Replication Monitoring

Logical replication lag can be monitored using the pg_stat_subscription view.

SELECT subscription_name, active,

pg_size_pretty(pg_current_wal_lsn() - replay_lsn) AS lag_bytes

FROM pg_stat_subscription;

Example: Visualizing Replication Lag

The following Python code snippet visualizes streaming and logical replication lag over time.

import matplotlib.pyplot as plt

time = ['12:00', '12:10', '12:20', '12:30', '12:40', '12:50', '13:00']

streaming_lag = [0.5, 0.6, 0.4, 0.7, 1.0, 0.9, 0.6]

logical_lag = [2.0, 2.5, 3.0, 2.8, 3.5, 4.0, 3.2]

plt.plot(time, streaming_lag, label='Streaming Replication Lag', marker='o')

plt.plot(time, logical_lag, label='Logical Replication Lag', marker='s')

plt.xlabel('Time')

plt.ylabel('Lag (seconds)')

plt.title('Replication Lag Over Time')

plt.legend()

plt.grid(True)

# Save the plot as an image file

plt.savefig('replication_lag_plot.png')

print("Plot saved as replication_lag_plot.png")

The resulting graph compares the performance of streaming and logical replication. Logical replication tends to have more variable lag due to decoding and processing overhead.

How to Reduce Replication Lag

1. Optimize WAL Configuration

- Increase

wal_buffersto hold more WAL data in memory. - Set

wal_writer_delayto a lower value (e.g., 10ms) to write WAL data faster.

wal_buffers = 64MB

wal_writer_delay = 10ms2. Improve Network Performance

- Use low-latency, high-bandwidth network connections between primary and replicas.

- Compress WAL data during transmission to reduce transfer time:

wal_compression = on.

3. Use Asynchronous Replication (When Possible)

-

Asynchronous replication reduces lag by not waiting for the replica to confirm changes but introduces a data loss risk.

ALTER SYSTEM SET synchronous_commit = 'off';4. Enable Parallel Apply in Logical Replication

-

PostgreSQL 14+ allows parallel application of logical changes, reducing lag for large transactions.

ALTER SUBSCRIPTION my_subscription SET (parallel_apply = on);5. Allocate More Resources to Replicas

- Ensure the replica has enough CPU and memory to process WAL changes quickly.

- Use SSDs for faster disk I/O on the replica.

6. Batch Transactions

-

Group multiple minor updates into fewer transactions to minimize overhead.

Real-World Examples

Reducing Streaming Replication Lag

A company running a high-traffic PostgreSQL cluster faced replication lag during peak hours. They halved the replication lag by increasing wal_buffers to 64MB and reducing wal_writer_delay to 10ms. Switching to a high-speed network connection reduced the lag to less than a second.

Reducing Logical Replication Lag

A system with multiple logical subscriptions experienced lag during high write workloads. Enabling parallel application in PostgreSQL 14 distributed the workload across numerous workers, reducing the replication lag from 4 seconds to under 1 second.

Conclusion

Replication lag is a critical issue that affects the performance and consistency of PostgreSQL systems. Streaming replication offers low latency but requires the entire database to be replicated, while logical replication provides flexibility but with higher overhead. Regular monitoring using pg_stat_replication and pg_stat_subscription allows administrators to detect and mitigate lag.

Optimizing WAL configurations, improving network performance, using parallel applications, and allocating sufficient resources can significantly reduce lag. Proper tuning ensures replicas remain synchronized and the system maintains high availability and performance.

Published at DZone with permission of Dr. Ibrar Ahmed. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments