Transaction Management with Spring and MySQL

This article examines the different types of transaction management method that can take place in a database and examples of how MySQL uses isolation.

Join the DZone community and get the full member experience.

Join For FreeA transaction is a unit of program execution that accesses and possibly updates various data items. It contains multiple steps that must appear to a user as a single, indivisible unit which either executes in its entirety or not at all. We are going to discuss different aspects of transaction management in the MySQL InnoDB environment and how Spring implements those aspects using proxies.

Table of Contents

- ACID

- Serializability

- Isolation levels

- How MySQL implements each level of isolation

- Examples with Spring

- References

ACID

Database system maintains the following properties of the transactions:

- Atomicity: Either all the operations of the transaction are reflected properly in the database or none are. MySQL ensures atomicity using undo logs that store information about the updates that are made by each transaction.

- Consistency: If the database is consistent before the execution of the transaction, the database remains consistent after the execution of the transaction. There are two types of consistency. The first is ensured by the database system; for example, the data integrity constraints while the other is the responsibility of the programmer who codes the transaction to ensure the application-dependent consistency constraints.

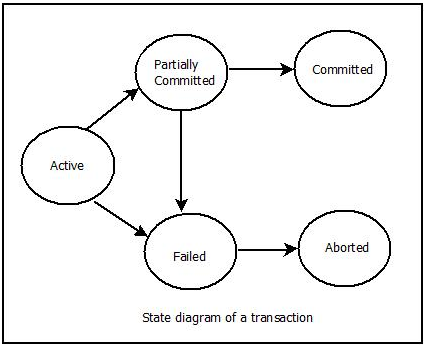

3. Durability: To understand durability we first must understand the state diagram of a transaction.

In the above diagram, we notice the “Partially Committed” state. The transaction enters this state after the execution of the last statement. In fact, all those updates are made on memory so any system failure (e.g. power outage) can result in a loss of changes corresponding to this transaction. Durability means that after a transaction commits, the updates should be saved despite any possible system failure, and for that reason, information about the updates should be saved on stable storage (MySQL uses redo logs). After that, the transaction moves from “Partially Committed” state to the “Committed” state so that database system can reconstruct updates whenever they are needed.

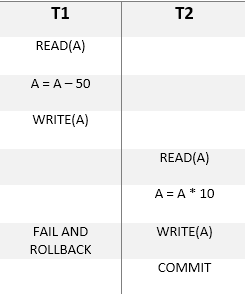

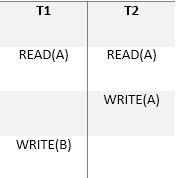

4. Isolation: The basic idea behind isolation that each transaction should be unaware of other transactions running at the same time. Let T1, T2 be two transactions running at the same time

You will notice that T2 read a value that is never committed and this will leave the database inconsistent.

The best solution for this problem is to run transactions serially (one after the other). We will talk about this solution in the next section, “Serializability” but this solution has negative performance effects where transactions should wait for each other so that, many solutions have been developed to compromise between performance and consistency, and we will talk about those solutions in the “Isolation levels” section.

Serializability

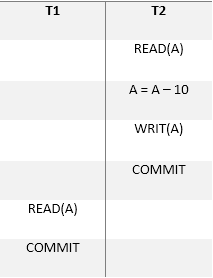

As we see in table above, transactions running at the same time may leave the database inconsistent, but there is a type of schedule, called a Serial Schedule that doesn’t affect the database consistency:

A Serial Schedule consists of a sequence of instructions from various transactions, where the instructions belonging to one single transaction appear together in that schedule.

Many database systems can’t generate such a schedule to execute concurrently transactions. Imagine that T1 and T2 started at the same time and the database system chose T2 then T. In this case, T1 will wait for T2 to be committed which may remain active for hours!

A more efficient type of schedule that also preserves the consistency and isolation properties is the Serializable Schedule.

To understand serializable schedules we should first discuss the situations where two concurrent transactions conflict:

Please note that the examples used below are part of a schedule so it isn’t a complete one and each example may contains multiple additional instructions and each transaction may have rolls back or commits

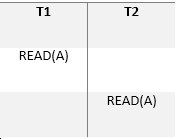

1- Read/Read situation:

T2 reads record A after T1 but in fact, order here does not matter since the same value of A is read by T1 and T2, regardless of the order. so there is no conflict here and we can swap between the two operations and obtains a new schedule that is equivalent to the original one.

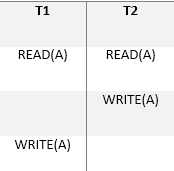

2. Read/Write situation:

If T1 reads the value of A before it is written by T2 (the situation above) we will get a different value from the situation where T1 reads the value of A after it is written by T2. There is a conflict here and we can’t swap between the two operations.

3. Write/Read situation:

Same (Read/Write) situation and there is a conflict here and we can’t swap between the two operations.

4. Write/Write situation (A.K.A Dirty Write):

The value of A will be different if it is written finally by T1 or T2 and if the schedule above contains an additional READ(A) operation it will be affected by the order so there is a conflict here and we can’t swap between the two WRITE/WRITE operations.

We say that two transactions T1 and T2 conflict if they contain two operations (one for each transaction) on the same data item, and at least one of these instructions is a write operation.

Thus, only in the case of (read/read) does the relative order of their execution not matter and we can swap between the two instructions. By doing such a swap the database system generates (conflict equivalence) schedules to the original one.

Now we can define the Serializable Schedule: as a schedule that is conflict equivalent to a Serial Schedule, and this is the type of schedule that is generated when you set the isolation level to Serializable.

Isolation Levels

As we discussed before, serializability has its own performance issues where any case of conflict will cause one of the transactions to wait for the other to be committed. so many solutions have been developed to compromise between consistency and performance and they are called isolation levels.

Note that dirty write in all the following solutions will cause the conflicting transaction to wait until the other to be committed.

- Serializable: where the database system generates a serializable schedule to execute the concurrent transactions.

- Repeatable Read: a transaction eliminates the (read/write) and (write/read) conflicts by ignoring any database update made by another concurrent transaction. So the only conflict that forces a transaction to wait is the dirty write.

- Read Committed: a transaction eliminates the (read/write) and (write/read) conflicts by reading only the updates that are made by a committed concurrent transaction and ignoring updates that are made by uncommitted transactions. So the only conflict that forces a transaction to wait is the dirty write.

- Read Uncommitted: a transaction eliminates the (read/write) and (write/read) conflicts by reading the updates that are made by a committed/uncommitted concurrent transaction. So the only conflict that forces a transaction to wait is the dirty write.

You can notice how the last three solutions affect the consistency, especially the Read Uncommitted solution, where the transaction can read uncommitted data and depends on it to do other operations. It is the responsibility of the application developers to ensure consistency when they choose one of these three solutions.

How MySQL Implements Each Level of Isolation

To understand how MySQL implements each level of isolation we should discuss some definitions:

- Shared Lock: Permits the transaction that holds the lock to read a row. Multiple active transactions may have a shared lock on the same row.

- Exclusive Lock: Permits the transaction that holds the lock to update or delete a row. A transaction can exclusively lock a row only if it isn’t (shared or exclusive) locked by another transaction.

- Snapshot: A representation of data at a particular time, which remains the same even as changes are committed by other transactions. Used by certain isolation levels to allow consistent reads.

- Consistent Nonlocking Reads: A consistent read means that MySQL InnoDB uses multi-versioning to present to a query a snapshot of the database at a point in time (it depends on the database and the information stored in the undo log to build that snapshot). The query sees the changes made by transactions that committed before that point of time, and no changes made by later or uncommitted transactions. The exception to this rule is that the query sees the changes made by earlier statements within the same transaction.

- Locking Reads: Select records with a shared lock or an exclusive lock.

- Dirty Reads: An operation that retrieves unreliable data, data that was updated by another transaction but not yet committed.

- Serializable: MySQL uses Locking Reads with this type of isolation to ensure that the generated schedule is a serializable one. So if transaction T1 reads a record A another one T2 can’t update A until T1 commits.

- Repeatable Read: MySQL uses Consistent Nonlocking Reads with this type of isolation, where the transaction obtains only one snapshot with the first consistent read operation that didn’t change until the transaction commits. So it ignores any database update made by other transactions.

- Read Committed: MySQL uses Consistent Nonlocking Reads with this type of isolation, but the difference from Repeatable Read level is that each consistent read within a transaction sets and reads its own fresh snapshot. So it reads the updates made by committed concurrent transactions.

- Read Uncommitted: MySQL uses Dirty Reads with this type of isolation depending on the database and changes on the memory log buffer.

Examples with Spring

We will depend on the following entity:

public class TestTransaction implements Serializable {

(strategy = GenerationType.IDENTITY)

private Long id; (length = 50)

private String name; public Long getId() {

return id;

} public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

}

Serializable Isolation Level:

xxxxxxxxxx

private TestService testService;

public TestService(TestTransactionRepository repository) {

this.repository = repository;

}

(isolation = Isolation.SERIALIZABLE)

public void T1() {

TestTransaction t = repository.findById(1L).get();

String name = String.format("Name - %s", new Date().getTime());

t.setName(name);

// we used saveAndFlush to flush the mysql instructions

// to the database system so the row get locked

repository.saveAndFlush(t);

testService.T2();

}

// We use propagation = REQUIRES_NEW to start a new transaction T2

// different from T1

(propagation = Propagation.REQUIRES_NEW,

isolation = Isolation.SERIALIZABLE)

public void T2() {

// Transaction T2 will stuck here

// because it needs a shared lock on the same row that is already exclusively locked by T1

TestTransaction t = repository.findById(1L).get();

}

Repeatable Read:

xxxxxxxxxx

private EntityManager entityManager;

private TestService testService;

public TestService(TestTransactionRepository repository) {

this.repository = repository;

}

(isolation = Isolation.REPEATABLE_READ)

public void T1() {

TestTransaction t = repository.findById(1L).get();

System.out.print(t.getName()); // output: X

testService.T2(); // updates the record then commits

// we used the entity manager proxy provided by spring

// So that it will manage the persistence context related

// to the current active transaction (T1)

entityManager.detach(t);

// fetch the record another time

t = repository.findById(1L).get();

System.out.print(t.getName()); // output: X/* Output: X

* Why? T1 obtains only one snapshot

* with the first consistent read operation

* that didn't change until the transaction commits.

* So it ignores any database update made by other transactions.

*/}

(propagation = Propagation.REQUIRES_NEW,

isolation = Isolation.READ_COMMITTED)

public void T2() {

TestTransaction t = repository.findById(1L).get();

t.setName("Y");

}

Read Committed:

xxxxxxxxxx

private EntityManager entityManager;

private TestService testService;

public TestService(TestTransactionRepository repository) {

this.repository = repository;

}

(isolation = Isolation.READ_COMMITTED)

public void T1() {

TestTransaction t = repository.findById(1L).get();

System.out.print(t.getName()); // output: X

testService.T2(); // updates the record then commits

// we used the entity manager proxy provided by spring

// So that it will manage the persistence context related

// to the current active transaction (T1)

entityManager.detach(t);

// fetch the record another time

t = repository.findById(1L).get();

System.out.print(t.getName()); // output: Y/* Output: Y

* Why? Each consistent read within a transaction T1

* sets and reads its own fresh snapshot. So it reads

* the updates made by committed concurrent transactions.

*/}

(propagation = Propagation.REQUIRES_NEW,

isolation = Isolation.READ_COMMITTED)

public void T2() {

TestTransaction t = repository.findById(1L).get();

t.setName("Y");

}

Published at DZone with permission of Ali Ali. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments