The State of Data Streaming for Digital Natives (Born in the Cloud)

Explore how digital natives born in the cloud leverage Apache Kafka for innovation and new business models, and discover trends, architectures, and case studies.


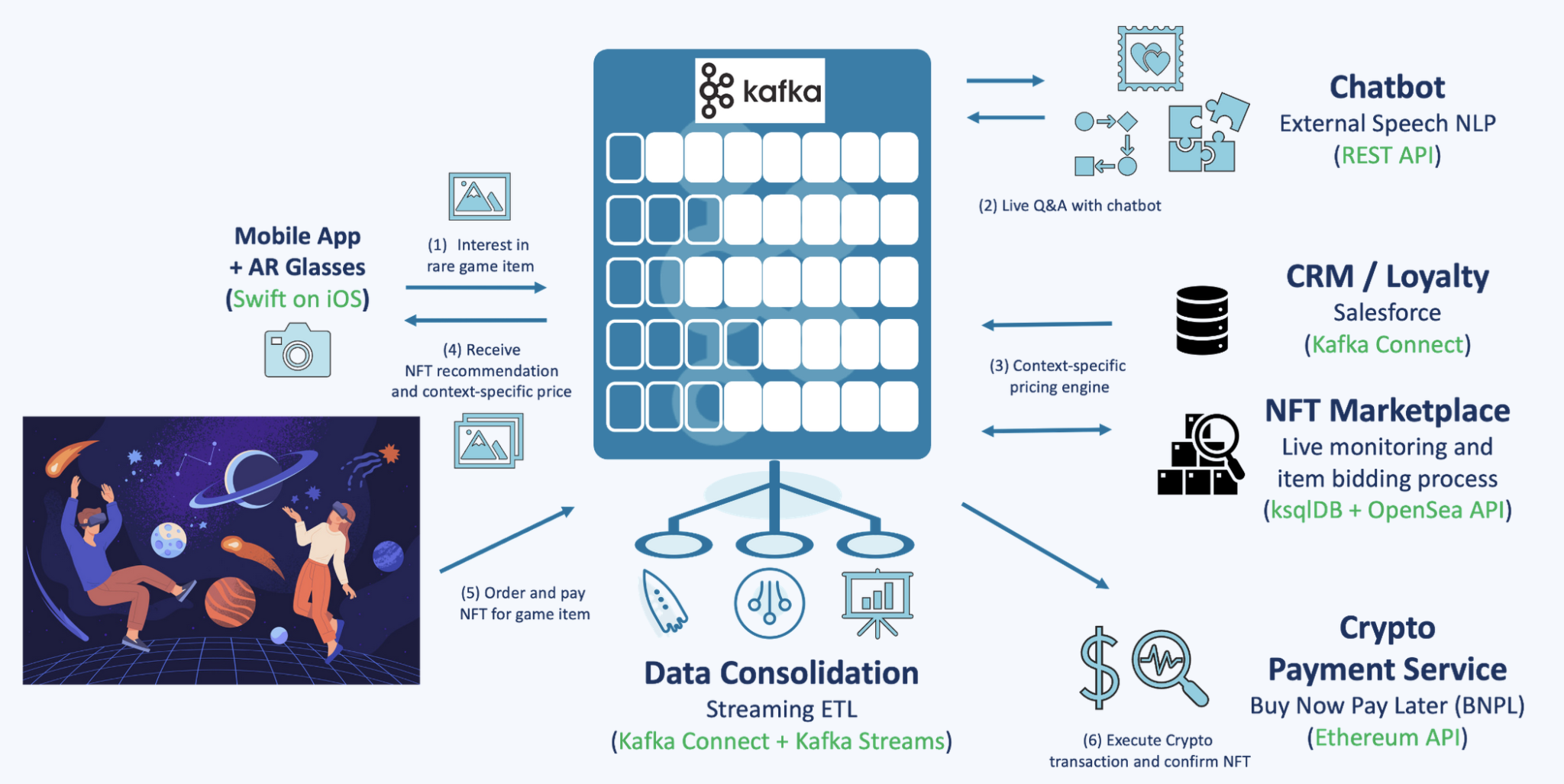

This blog post explores the state of data streaming in 2023 for digital natives born in the cloud. The evolution of digital services and new business models requires real-time end-to-end visibility, fancy mobile apps, and integration with pioneering technologies such as fully managed cloud services for fast time-to-market, 5G for low latency, and augmented reality for innovation. Data streaming with Apache Kafka integrates and correlates data in real time at any scale, which makes it a foundation for the most innovative applications.

I look at trends among digital natives and explore how data streaming acts as a business enabler, including customer stories from New Relic, Wix, Expedia, Apna, Grab, and more. A complete slide deck and on-demand video recording are included.

General Trends for Digital Natives

Digital natives are data-driven tech companies born in the cloud. Their SaaS solutions are built on cloud-native infrastructure that provides elastic and flexible operations and scale. AI and Machine Learning improve business processes while the data flows through the backend systems.

The Data-Driven Enterprise in 2023

McKinsey and Company published an excellent article on seven characteristics that will define the data-driven enterprise:

- Data embedded in every decision, interaction, and process

- Data is processed and delivered in real-time

- Flexible data stores enable integrated, ready-to-use data

- Data operating model treats data like a product

- The chief data officer’s role is expanded to generate value

- Data-ecosystem memberships are the norm

- Data management is prioritized and automated for privacy, security, and resiliency

These characteristics from McKinsey and Company map precisely to the value of data streaming: using data at the right time in the right context. The success stories below are all data-driven and leverage these characteristics.

Digital Natives Born in the Cloud

The term "digital native enterprise" can have many meanings. IDC has a great definition:

“IDC defines Digital native businesses (DNBs) as companies built based on modern, cloud-native technologies, leveraging data and AI across all aspects of their operations, from logistics to business models to customer engagement. All core value or revenue-generating processes are dependent on digital technology.”

Such companies are born in the cloud and leverage fully managed services; as a consequence, they innovate quickly with a fast time to market.

AI and Machine Learning (Beyond the Buzz)

“ChatGPT, while cool, is just the beginning; enterprise uses for generative AI are far more sophisticated,” says Gartner. I couldn't agree more. Even more interesting, Machine Learning (the part of AI that is enterprise-ready) is already used in many companies.

While everybody talks about Generative AI (GenAI) these days, I prefer talking about real-world success stories that have leveraged analytic models for many years already to detect fraud, upsell to customers, or predict machine failures. GenAI is "just" another more advanced model that you can embed into your IT infrastructure and business processes the same way.

Data Streaming at Digital Native Tech Companies

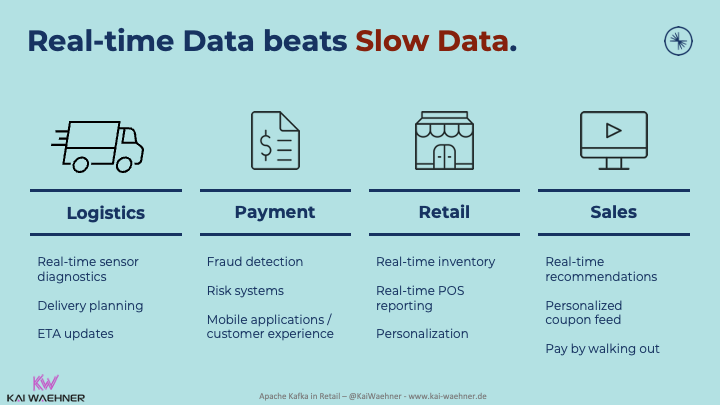

Adopting trends across industries is only possible if enterprises can provide and correlate information correctly in the proper context. Real-time, which means using the information in milliseconds, seconds, or minutes, is almost always better than processing data later (whatever later means):

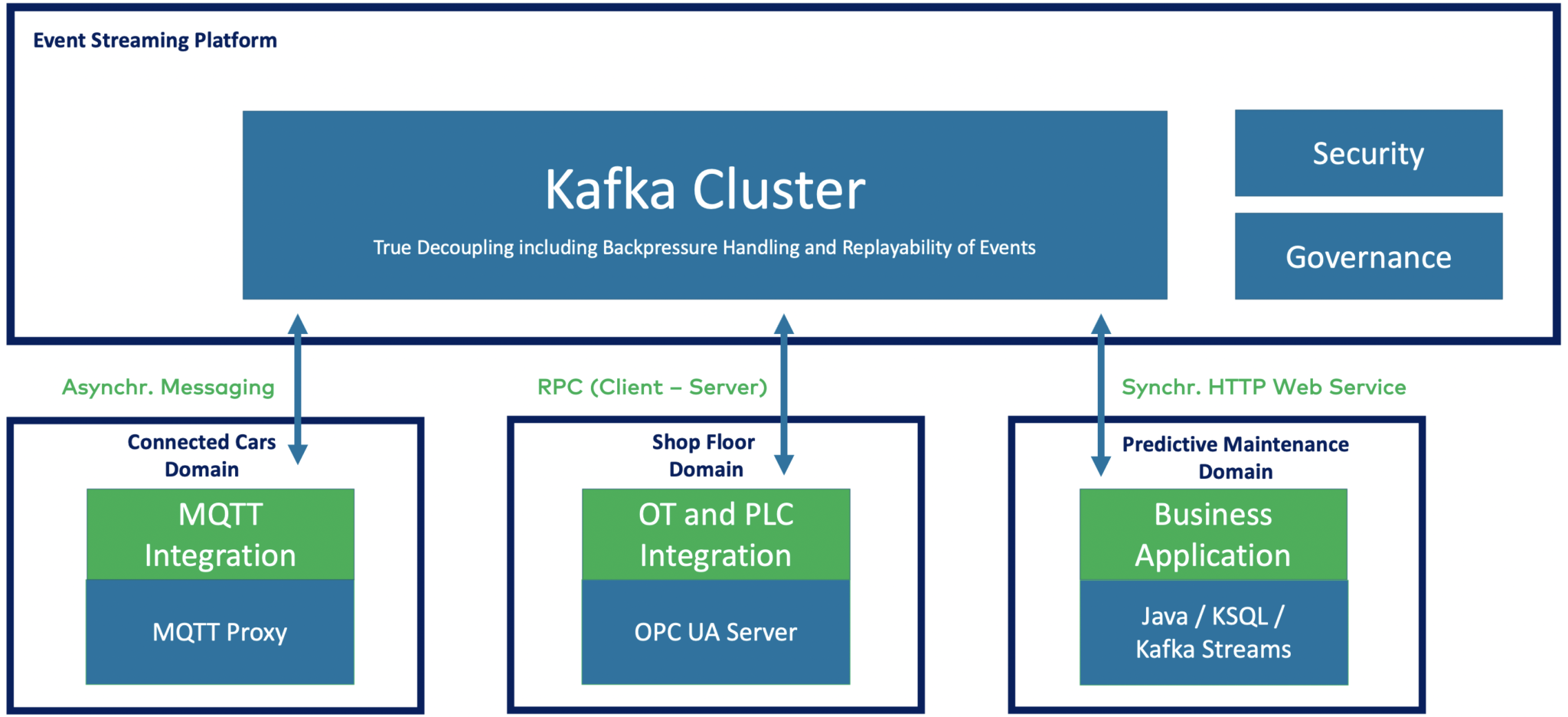

Digital natives use the full power of data streaming: real-time messaging at any scale, storage for true decoupling, data integration, and data correlation.
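
The decoupling comes from storage: a Kafka topic is an append-only log, and every consumer tracks its own position (offset) in it. The following minimal Python sketch illustrates that idea in memory; the `Log` and `Consumer` classes are hypothetical illustrations, not the Kafka client API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Log:
    """Toy append-only log: reading never removes records."""

    records: list[Any] = field(default_factory=list)

    def append(self, record: Any) -> int:
        """Append a record and return its offset."""
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 100) -> list[Any]:
        """Return up to max_records records starting at offset."""
        return self.records[offset:offset + max_records]


class Consumer:
    """Tracks its own offset, so consumers never block or affect each other."""

    def __init__(self, name: str, log: Log) -> None:
        self.name = name
        self.log = log
        self.offset = 0

    def poll(self) -> list[Any]:
        batch = self.log.read(self.offset)
        self.offset += len(batch)
        return batch


orders = Log()
orders.append({"id": 1, "amount": 40})
orders.append({"id": 2, "amount": 75})

realtime = Consumer("fraud-check", orders)
batch_job = Consumer("nightly-report", orders)

print(len(realtime.poll()))   # 2: reads what exists right now
orders.append({"id": 3, "amount": 12})
print(len(realtime.poll()))   # 1: only the new record
print(len(batch_job.poll()))  # 3: reads the full history later
```

Because reading does not remove data, a slow batch job and a real-time consumer can share the same log, and a new consumer can be added later without touching the producer.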

Data streaming with the Apache Kafka ecosystem and cloud services is used throughout the supply chain of any industry. Here are just a few examples:

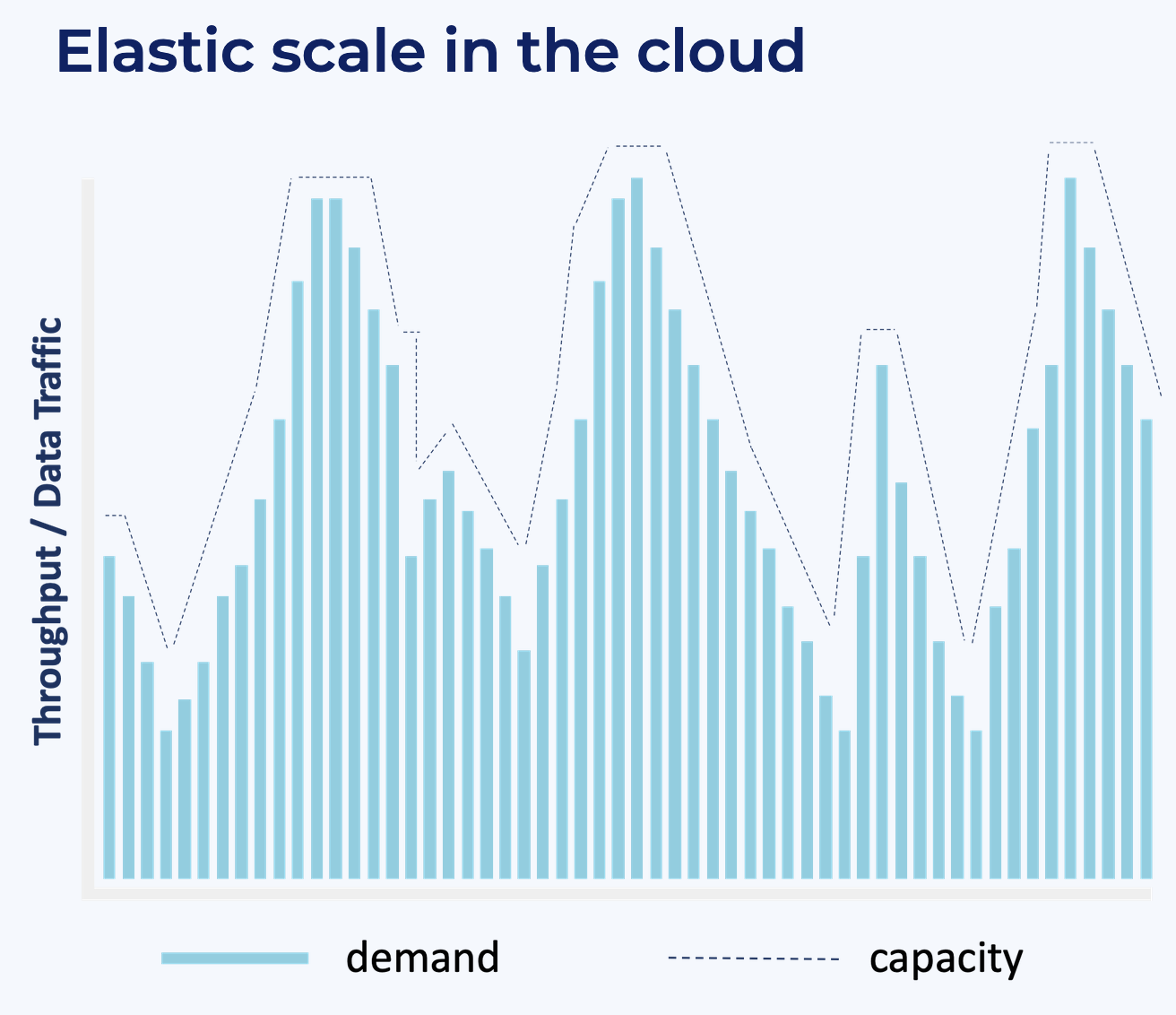

Elastic Scale With Cloud-Native Infrastructure

One of the most significant benefits of cloud-native SaaS offerings is elastic scalability out of the box. Tech companies can start new projects with a small footprint and pay-as-you-go. If the project is successful, or when industry peaks arrive (like Black Friday or the Christmas season in retail), the cloud-native infrastructure scales up, and it scales back down after the peak.

There is no need to change the architecture from a proof of concept to an extreme scale. Confluent's fully managed SaaS for Apache Kafka is an excellent example. Learn how to scale Apache Kafka to 10+ GB per second in Confluent Cloud without the need to re-architect your applications.

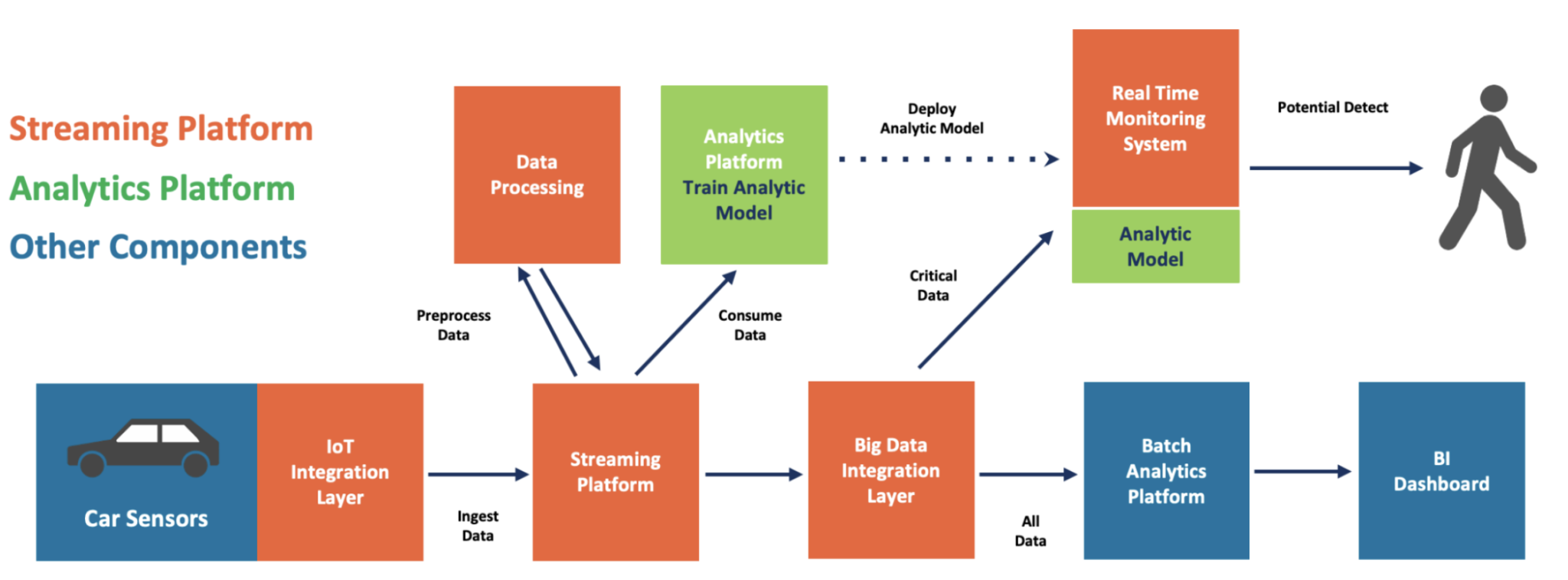
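
Elastic scale in Kafka rests on partitioning: records with the same key go to the same partition, and the partitions of a topic are spread across the consumers in a consumer group. Under simplifying assumptions, the Python sketch below shows both steps. It uses CRC32 as the hash (Kafka's default partitioner uses murmur2 for keyed records) and a plain round-robin assignment (Kafka ships several assignment strategies); `partition_for` and `assign` are hypothetical helpers.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash (CRC32 here, not Kafka's murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(num_partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    """Simplified round-robin assignment of partitions to the consumers in one group."""
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


NUM_PARTITIONS = 6

# The same key always maps to the same partition, which preserves per-key ordering.
p1 = partition_for("customer-42", NUM_PARTITIONS)
p2 = partition_for("customer-42", NUM_PARTITIONS)
print(p1 == p2)  # True

# Scaling out: the topic keeps its six partitions; only the assignment changes.
print(assign(NUM_PARTITIONS, ["c1"]))              # {'c1': [0, 1, 2, 3, 4, 5]}
print(assign(NUM_PARTITIONS, ["c1", "c2", "c3"]))  # {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
```

The partition count sets the upper bound on parallelism within one consumer group: with six partitions, a seventh consumer would receive no partitions.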

Data Streaming + AI/Machine Learning = Real-Time Intelligence

The combination of data streaming with Kafka and machine learning with TensorFlow or other ML frameworks is nothing new. I explored how to "Build and Deploy Scalable Machine Learning in Production with Apache Kafka" in 2017, i.e., six years ago.

Since then, I have written many further articles and supported various enterprises deploying data streaming and machine learning. Here is an example of such an architecture:

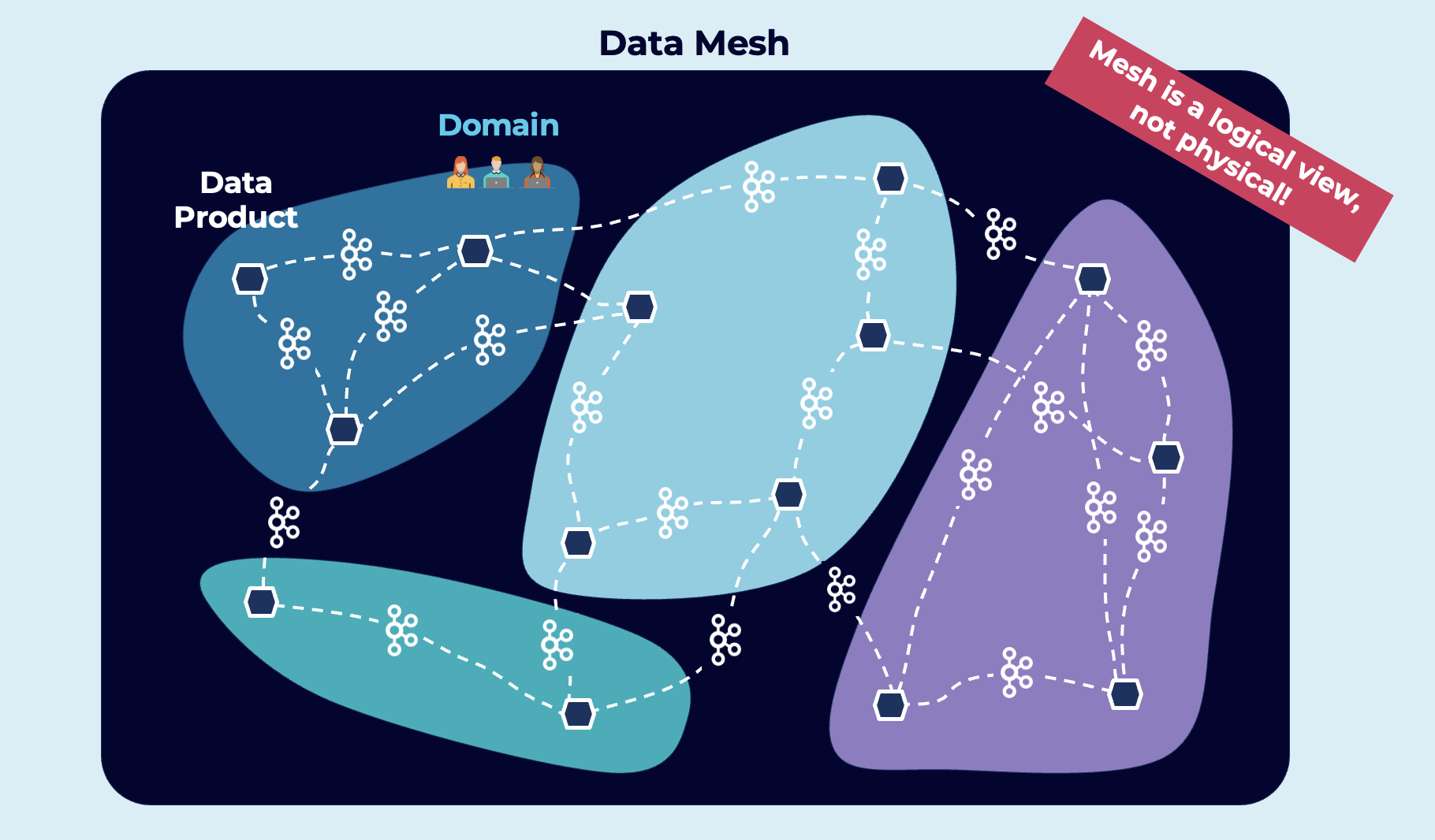
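
A common pattern behind such architectures is to embed a trained model into the stream processing application and score each event as it arrives. The Python sketch below assumes a tiny logistic-regression fraud model with hypothetical, hand-picked weights; a generator stands in for the Kafka consume-score-produce loop, and `fraud_probability` and `score_stream` are illustrative names only.

```python
import math
from collections.abc import Iterable, Iterator

# Hypothetical model parameters; in practice they come from a trained model
# (for example, one built with TensorFlow) and are loaded at startup.
WEIGHTS = {"amount": 0.004, "foreign_card": 1.5, "night": 0.8}
BIAS = -4.0
THRESHOLD = 0.5


def fraud_probability(event: dict) -> float:
    """Logistic-regression score for one payment event."""
    z = BIAS + sum(w * float(event.get(name, 0)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def score_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Enrich each event with a score as it arrives: one event in, one event out."""
    for event in events:
        p = fraud_probability(event)
        yield {**event, "fraud_score": round(p, 3), "flagged": p >= THRESHOLD}


payments = [
    {"id": "p1", "amount": 25, "foreign_card": 0, "night": 0},
    {"id": "p2", "amount": 900, "foreign_card": 1, "night": 1},
]
for result in score_stream(payments):
    print(result["id"], result["fraud_score"], result["flagged"])
```

Embedding the model in the processing application avoids a remote call per event; serving the model behind a separate endpoint is one alternative when models are large or change often.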

Data Mesh for Decoupling, Flexibility and Focus on Data Products

Digital natives don't (have to) rely on monolithic, proprietary, and inflexible legacy infrastructure. Instead, tech companies start from scratch with modern architecture. Domain-driven design and microservices are combined in a data mesh, where business units focus on solving business problems with data products.

Architecture Trends for Data Streaming Used by Digital Natives

Digital natives leverage trends for enterprise architectures to improve cost, flexibility, security, and latency. Four essential topics I see these days at tech companies are:

- Decentralization with a data mesh

- Kappa architecture replacing Lambda

- Global data streaming

- AI/Machine Learning with data streaming

Let's look deeper into some enterprise architectures that leverage data streaming.

Decentralization With a Data Mesh

There is no single technology or product for a data mesh! However, the heart of a decentralized data mesh infrastructure must be real-time, reliable, and scalable.

Data streaming with Apache Kafka is the perfect foundation for a data mesh: dumb pipes and smart endpoints truly decouple independent applications. This domain-driven design allows teams to focus on data products.

Unlike a data lake or data warehouse, the data streaming platform is real-time, scalable, and reliable: a unique advantage for building a decentralized data mesh.

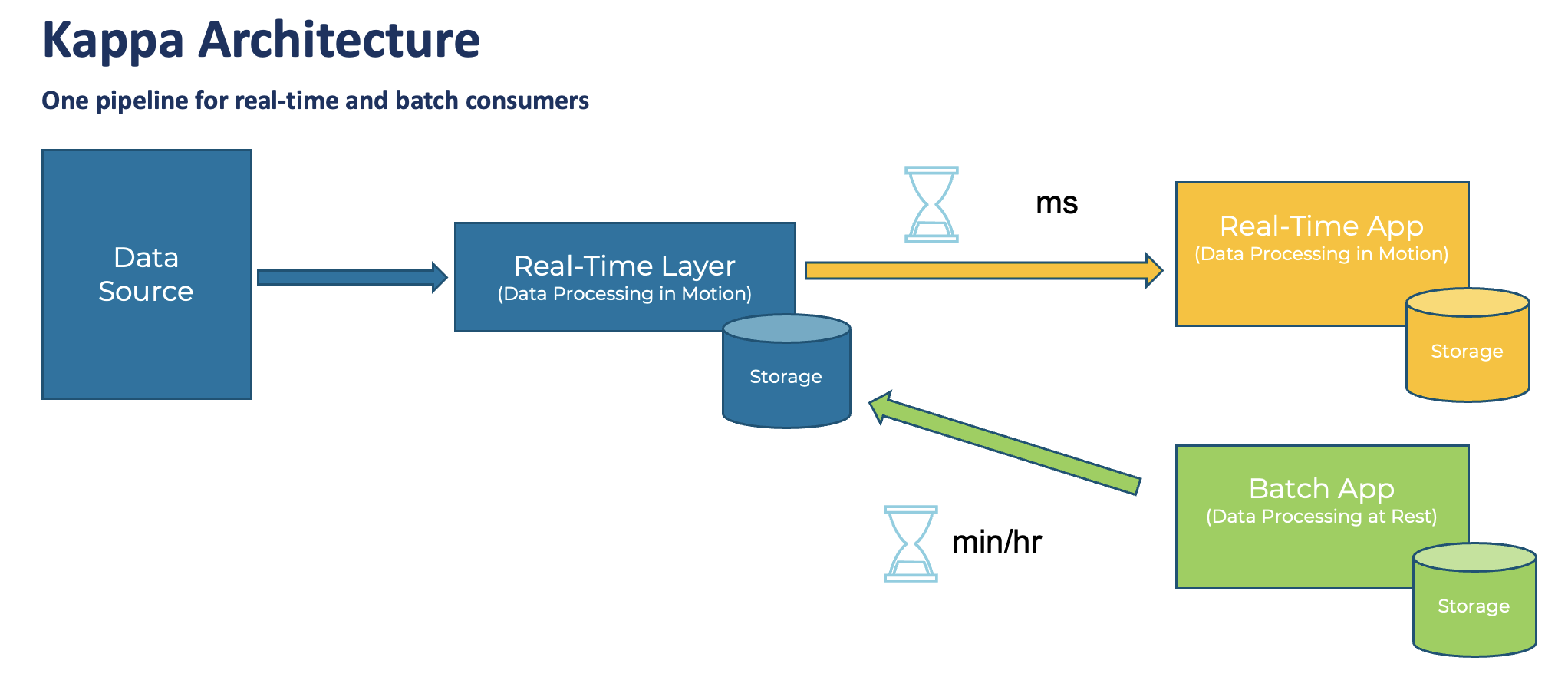
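
In a data mesh, each domain owns its data products and publishes them with a contract that consumers can rely on. With Kafka, this contract is usually enforced with schemas (for example, Avro, Protobuf, or JSON Schema in a schema registry). The Python sketch below only illustrates the idea of a contract check; the `DataContract` class, the topic name, and the fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract a domain team publishes alongside its data product."""

    topic: str
    owner: str
    required_fields: dict[str, type]

    def validate(self, record: dict) -> list[str]:
        """Return a list of violations; an empty list means the record conforms."""
        errors = []
        for name, expected in self.required_fields.items():
            if name not in record:
                errors.append(f"missing field '{name}'")
            elif not isinstance(record[name], expected):
                errors.append(f"field '{name}' should be {expected.__name__}")
        return errors


orders_product = DataContract(
    topic="checkout.orders.v1",
    owner="checkout-team",
    required_fields={"order_id": str, "amount": float, "currency": str},
)

good = {"order_id": "o-1", "amount": 19.99, "currency": "EUR"}
bad = {"order_id": 7, "amount": 19.99}

print(orders_product.validate(good))  # []
print(orders_product.validate(bad))
```

The owning team can evolve the producer freely as long as the contract holds, which is what lets other domains consume the data product without coordinating every change.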

Kappa Architecture Replacing Lambda

The Kappa architecture is an event-based software architecture that can handle all data at all scales in real time for transactional AND analytical workloads.

The central premise behind the Kappa architecture is that you can perform real-time and batch processing with a single technology stack. The heart of the infrastructure is the streaming architecture.

Unlike the Lambda architecture, which maintains separate batch and speed layers, this approach only reprocesses data when your processing code changes and you need to recompute your results.

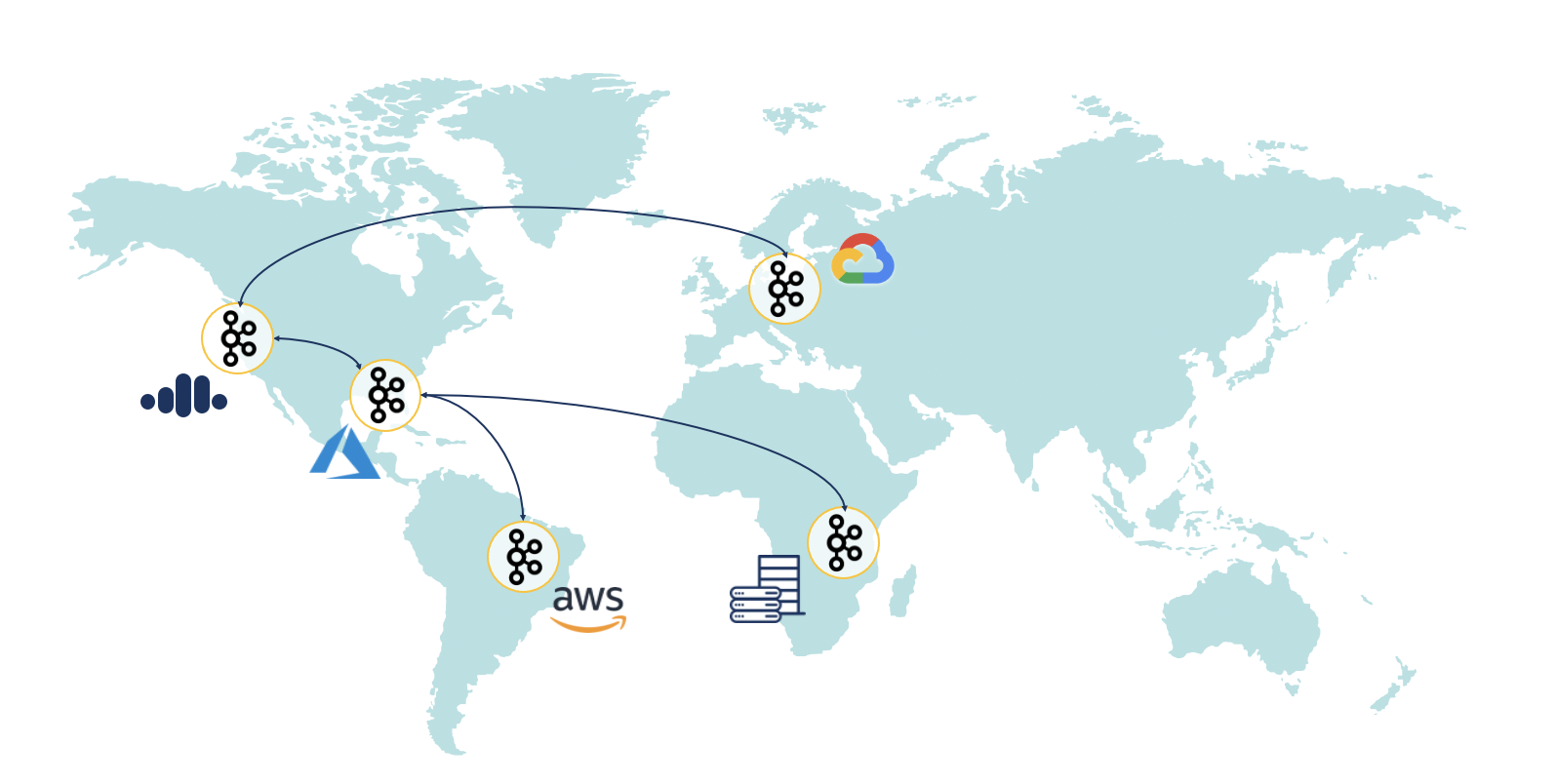
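
The following Python sketch illustrates the Kappa idea of a single processing path: the retained event log is the source of truth, and when the logic changes, the new version replays the log from the beginning to rebuild its view. Event data and function names are hypothetical; with Kafka, this assumes the topic's retention keeps enough history to replay (for example, long retention or tiered storage).

```python
from collections import defaultdict

# The immutable event log is the single source of truth (hypothetical sample data).
EVENT_LOG = [
    {"user": "a", "action": "view"},
    {"user": "a", "action": "purchase", "amount": 30},
    {"user": "b", "action": "purchase", "amount": 50},
    {"user": "a", "action": "purchase", "amount": 20},
]


def revenue_v1(events):
    """Original logic: total revenue per user."""
    totals = defaultdict(int)
    for e in events:
        if e["action"] == "purchase":
            totals[e["user"]] += e["amount"]
    return dict(totals)


def revenue_v2(events):
    """Changed logic: revenue and number of purchases per user."""
    stats = defaultdict(lambda: {"revenue": 0, "purchases": 0})
    for e in events:
        if e["action"] == "purchase":
            stats[e["user"]]["revenue"] += e["amount"]
            stats[e["user"]]["purchases"] += 1
    return dict(stats)


# v1 is the logic that has been producing the current view.
current_view = revenue_v1(EVENT_LOG)

# The code changed, so replay the log from the first offset through v2 and
# swap in the new view once it has caught up. No separate batch layer is needed.
new_view = revenue_v2(EVENT_LOG)

print(current_view)  # {'a': 50, 'b': 50}
print(new_view)      # {'a': {'revenue': 50, 'purchases': 2}, 'b': {'revenue': 50, 'purchases': 1}}
```

In a Lambda architecture, the same change would typically be implemented twice, once in the batch layer and once in the speed layer.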

Global Data Streaming

Multi-cluster and cross-data center deployments of Apache Kafka have become the norm rather than an exception.

Several scenarios require multi-cluster Kafka deployments with specific requirements and trade-offs, including disaster recovery, aggregation for analytics, cloud migration, mission-critical stretched deployments, and global Kafka.
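
For the aggregation-for-analytics scenario, a common pattern is to replicate topics from regional clusters into a central cluster and prefix them with the source cluster's alias, similar to the default topic naming in MirrorMaker 2 (for example, `us-east.orders`). The in-memory Python sketch below only illustrates that topology; the cluster aliases, data, and `replicate_to_central` helper are hypothetical, and real replication also has to handle offset translation, consumer group checkpoints, and failures.

```python
# Hypothetical regional clusters, each holding an "orders" topic.
REGIONS: dict[str, dict[str, list[dict]]] = {
    "us-east": {"orders": [{"id": 1, "amount": 10}, {"id": 2, "amount": 5}]},
    "eu-west": {"orders": [{"id": 1, "amount": 7}]},
}


def replicate_to_central(regions, topic, separator="."):
    """Copy one topic from each regional cluster into a central cluster.

    Remote topics are prefixed with the source cluster alias, so records
    from different regions never collide, even if they share IDs.
    """
    central: dict[str, list[dict]] = {}
    for alias, topics in regions.items():
        remote_topic = f"{alias}{separator}{topic}"
        central[remote_topic] = [dict(r, source_cluster=alias) for r in topics.get(topic, [])]
    return central


central = replicate_to_central(REGIONS, "orders")
print(sorted(central))  # ['eu-west.orders', 'us-east.orders']

# Global analytics runs on the central cluster across all replicated topics.
total = sum(r["amount"] for records in central.values() for r in records)
print(total)  # 22
```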

Natural Language Processing (NLP) With Data Streaming for Real-Time Generative AI (GenAI)

Natural Language Processing (NLP) helps many real-world projects with service desk automation, customer conversations with chatbots, content moderation in social networks, and many other use cases. Generative AI (GenAI) is "just" the latest generation of these analytic models. Many enterprises have combined NLP with data streaming for years to power real-time business processes.

Apache Kafka became the predominant orchestration layer in these machine learning platforms for integrating various data sources, processing at scale, and real-time model inference.

Teams can easily add Generative AI and other machine learning models (like large language models, LLMs) to their existing data streaming architecture.

Time to market is critical. AI does not require a completely new enterprise architecture. True decoupling allows the addition of new applications/technologies and embedding them into the existing business processes.

An excellent example is Expedia: The online travel company added a chatbot to the existing call center scenario to reduce costs, improve response times, and make customers happier.
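
The chatbot example follows a general pattern: a new AI consumer reads from an existing stream of customer messages and routes them, while the existing call center flow keeps working. In the Python sketch below, a keyword matcher stands in for an NLP model or LLM; the function names, topic names, and intents are hypothetical and do not describe Expedia's actual implementation.

```python
from collections.abc import Callable


def classify_intent(text: str) -> str:
    """Stand-in for an NLP model or LLM call; a real deployment would call a model here."""
    lowered = text.lower()
    if "refund" in lowered or "cancel" in lowered:
        return "cancellation"
    if "where" in lowered and "booking" in lowered:
        return "booking_status"
    return "unknown"


def route(messages: list[dict], classify: Callable[[str], str]) -> dict[str, list[dict]]:
    """Route each customer message from the existing topic to an output topic.

    Known intents go to a self-service (chatbot) topic; everything else goes
    to the human agents, so the existing call center flow keeps working.
    """
    out: dict[str, list[dict]] = {"chatbot-requests": [], "agent-queue": []}
    for msg in messages:
        intent = classify(msg["text"])
        target = "agent-queue" if intent == "unknown" else "chatbot-requests"
        out[target].append({**msg, "intent": intent})
    return out


customer_messages = [
    {"id": 1, "text": "Where is my booking confirmation?"},
    {"id": 2, "text": "I want to cancel my hotel"},
    {"id": 3, "text": "My room key does not work"},
]
routed = route(customer_messages, classify_intent)
print([m["id"] for m in routed["chatbot-requests"]])  # [1, 2]
print([m["id"] for m in routed["agent-queue"]])       # [3]
```

Because the classifier is passed in as a function, the keyword stand-in can be swapped for a real model without changing the routing logic.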

New Customer Stories of Digital Natives Using Data Streaming

So much innovation is happening with data streaming. Digital natives lead the race. Automation and digitalization change how tech companies create entirely new business models.

Most digital natives use a cloud-first approach to improve time-to-market, increase flexibility, and focus on business logic instead of operating IT infrastructure. Elastic scalability gets even more critical when you start small but think big and global from the beginning.

Here are a few customer stories from digital natives around the world:

- New Relic: Observability platform ingesting up to 7 billion data points per minute for real-time and historical analysis.

- Wix: Web development services with online drag-and-drop tools built with a global data mesh.

- Apna: India's largest hiring platform powered by AI to match client needs with applications.

- Expedia: Online travel platform leveraging data streaming for a conversational chatbot service incorporating complex technologies such as fulfillment, natural-language understanding, and real-time analytics.

- Alex Bank: A 100% digital and cloud-native bank using real-time data to enable a new digital banking experience.

- Grab: Asian mobility service that built a cybersecurity platform for monitoring 130M+ devices and generating 20M+ AI-driven risk verdicts daily.

Resources To Learn More

This blog post is just the starting point. Learn more about data streaming and digital natives in the following on-demand webinar recording, the related slide deck, and further resources, including pretty cool lightboard videos about use cases.

On-Demand Video Recording

The video recording explores trends and architectures for data streaming at digital natives. The primary focus is the data streaming case studies. Check out our on-demand recording.

Slides

If you prefer learning from slides, check out the deck used for the above recording:

Slides: The State of Apache Kafka for Digital Natives in 2023

Data Streaming Case Studies and Lightboard Videos of Digital Natives

The state of data streaming for digital natives in 2023 is fascinating. New use cases and case studies come up every month. These include better data governance across the entire organization, real-time collection and processing of data from network infrastructure and mobile apps, data sharing and B2B partnerships with new business models, and many more scenarios.

We recorded lightboard videos showing the value of data streaming simply and effectively. These five-minute videos explore the business value of data streaming, related architectures, and customer stories. Stay tuned; I will update the links in the next few weeks and publish a separate blog post for each story and lightboard video.

And this is just the beginning. Every month, we will talk about the status of data streaming in a different industry. Manufacturing was first, financial services second, then retail, telcos, digital natives, gaming, and so on.

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.
