The Mystery of Eureka Self-Preservation

Here is a quick overview of how Eureka's self-preservation mode works, along with a couple of traps to avoid, depending on your network.

Eureka is an AP system in terms of the CAP theorem, which means the information in the registry can become inconsistent between servers during a network partition. The self-preservation feature is an effort to minimize this inconsistency.

Defining Self-Preservation

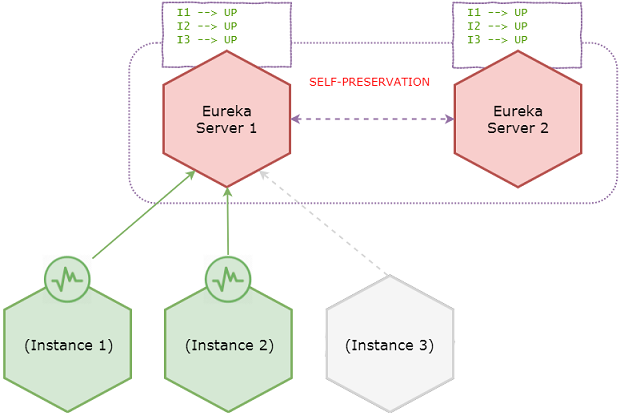

Self-preservation is a feature where Eureka servers stop expiring instances from the registry when the heartbeats they receive (from peers and client microservices) fall below a certain threshold.

Let’s try to understand this concept in detail.

Starting With a Healthy System

Consider the following healthy system.

Suppose that all the microservices are in a healthy state and registered with the Eureka server. If you are wondering why they all register with a single server, that is because Eureka instances register with and send heartbeats only to the very first server configured in the service-URL list. For example:

eureka.client.service-url.defaultZone=server1,server2

Eureka servers replicate the registry information with adjacent peers, and the registry indicates that all the microservice instances are in the UP state. Also, suppose that instance 2 invokes instance 4 after discovering it from the Eureka registry.

Encountering a Network Partition

Assume a network partition happens and the system transitions to the following state.

Due to the network partition, instances 4 and 5 have lost connectivity with the servers; however, instance 2 still has connectivity to instance 4. The Eureka server then evicts instances 4 and 5 from the registry since it is no longer receiving their heartbeats. It then observes that it has suddenly lost more than 15% of the heartbeats, so it enters self-preservation mode.

From now onward, the Eureka server stops expiring instances in the registry even if the remaining instances go down.

Suppose instance 3 then goes down: it remains active in the server registry. The servers do, however, still accept new registrations.

The Rationale Behind Self-Preservation

The self-preservation feature can be justified by the following two reasons.

- Servers not receiving heartbeats could be the result of a network partition (i.e., it does not necessarily mean the clients are down), which could be resolved soon.

- Even though connectivity is lost between the servers and some clients, the clients might still have connectivity with each other; e.g., Instance 2 has connectivity to Instance 4 during the network partition, as in the above diagram.

Configurations (With Defaults)

Listed below are the configurations that can directly or indirectly impact self-preservation behavior.

eureka.instance.lease-renewal-interval-in-seconds = 30

This indicates how frequently the client sends heartbeats to the server to signal that it is still alive. It’s not advisable to change this value since self-preservation assumes that heartbeats are always received at intervals of 30 seconds.

eureka.instance.lease-expiration-duration-in-seconds = 90

This indicates how long the server waits after receiving the last heartbeat before it can evict an instance from its registry. This value should be greater than lease-renewal-interval-in-seconds. Setting it too high reduces the precision of the actual heartbeats per minute calculation described in the next section, since the liveliness of the registry depends on this value. Setting it too low could make the system intolerant of temporary network glitches.
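The relationship between the two lease settings above can be sketched as follows. This is a minimal illustration in Python, not Eureka's actual code: the helper `is_lease_expired` and its parameters are hypothetical, and only the default values (30 and 90 seconds) come from the configuration listed here.

```python
LEASE_RENEWAL_INTERVAL_S = 30     # default lease-renewal-interval-in-seconds
LEASE_EXPIRATION_DURATION_S = 90  # default lease-expiration-duration-in-seconds


def is_lease_expired(last_heartbeat_s: float, now_s: float,
                     expiration_s: float = LEASE_EXPIRATION_DURATION_S) -> bool:
    """Hypothetical helper: True once no heartbeat has arrived for longer than expiration_s."""
    return now_s - last_heartbeat_s > expiration_s


# With the defaults, three heartbeat intervals fit into one lease duration.
intervals_per_lease = LEASE_EXPIRATION_DURATION_S // LEASE_RENEWAL_INTERVAL_S
```

With the defaults, a single late or lost heartbeat does not expire the lease on its own, which is the tolerance to temporary glitches mentioned above.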

eureka.server.eviction-interval-timer-in-ms = 60 * 1000

A scheduler runs at this frequency and evicts instances from the registry if their leases have expired, as configured by lease-expiration-duration-in-seconds. Setting this value too high will delay the system entering self-preservation mode.

eureka.server.renewal-percent-threshold = 0.85

This value is used to calculate the expected heartbeats per minute as described in the next section.

eureka.server.renewal-threshold-update-interval-ms = 15 * 60 * 1000

A scheduler runs at this frequency and calculates the expected heartbeats per minute as described in the next section.

eureka.server.enable-self-preservation = true

Last but not least, self-preservation can be disabled if required.

Making Sense of Configurations

The Eureka server enters self-preservation mode if the actual number of heartbeats in the last minute is less than the expected number of heartbeats per minute.

Expected Number of Heartbeats per Minute

Here is how the expected number of heartbeats per minute threshold is calculated. The Netflix code assumes that heartbeats are always received at intervals of 30 seconds for this calculation.

Suppose the number of registered application instances at some point in time is N and the configured renewal-percent-threshold is 0.85.

- Number of heartbeats expected from one instance/min = 2

- Number of heartbeats expected from N instances/min = 2 * N

- Expected minimum heartbeats/min = 2 * N * 0.85

Since N is a variable, 2 * N * 0.85 is recalculated every 15 minutes by default (or at the interval set by renewal-threshold-update-interval-ms).
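The arithmetic above can be written out as a short Python sketch. The function name and constant are hypothetical and chosen for illustration only; the formula itself (2 * N * renewal-percent-threshold) is the one given in this section, and the sketch keeps the fractional result rather than modeling any rounding.

```python
HEARTBEATS_PER_INSTANCE_PER_MIN = 2  # assumes one heartbeat every 30 seconds


def expected_min_heartbeats_per_min(registered_instances: int,
                                    renewal_percent_threshold: float = 0.85) -> float:
    """Expected minimum heartbeats/min = 2 * N * renewal-percent-threshold."""
    return (HEARTBEATS_PER_INSTANCE_PER_MIN
            * registered_instances
            * renewal_percent_threshold)


# Example: N = 10 registered instances with the default threshold of 0.85.
threshold = expected_min_heartbeats_per_min(10)  # 2 * 10 * 0.85
```

Because the factor of 2 is hard-wired to the 30-second assumption, changing the client heartbeat interval would make this expected value disagree with what the clients actually send.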

Actual Number of Heartbeats in Last Minute

This is calculated by a scheduler that runs once every minute.

As described above, two schedulers run independently to calculate the actual and expected number of heartbeats. However, it is a third scheduler, EvictionTask, that compares these two values and determines whether the system is in self-preservation mode. This scheduler runs at a frequency of eviction-interval-timer-in-ms and evicts expired instances; before evicting, however, it checks whether the system has reached self-preservation mode (by comparing actual and expected heartbeats).
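The check-then-evict behavior described here can be sketched roughly as below. This is a simplified Python illustration, not the real EvictionTask: the function `run_eviction`, the dictionary-based registry, and the sample instance names and numbers are all assumptions made for the example.

```python
def run_eviction(registry, now_s, actual_last_min, expected_per_min,
                 expiration_s=90, self_preservation_enabled=True):
    """Hypothetical eviction pass.

    registry maps instance id -> time of last heartbeat (seconds).
    Returns the list of instance ids evicted in this run.
    """
    # Self-preservation: too few heartbeats arrived, so expire nothing.
    if self_preservation_enabled and actual_last_min < expected_per_min:
        return []
    # Otherwise evict every instance whose lease has expired.
    expired = [instance_id for instance_id, last_heartbeat_s in registry.items()
               if now_s - last_heartbeat_s > expiration_s]
    for instance_id in expired:
        del registry[instance_id]
    return expired


# Heartbeats below the expected threshold: nothing is evicted.
preserved = {"instance-1": 100.0, "instance-3": 0.0}
evicted_in_preservation = run_eviction(preserved, now_s=120.0,
                                       actual_last_min=6, expected_per_min=8.5)

# Heartbeats at or above the threshold: the stale instance is evicted.
healthy = {"instance-1": 100.0, "instance-3": 0.0}
evicted_when_healthy = run_eviction(healthy, now_s=120.0,
                                    actual_last_min=20, expected_per_min=17.0)
```

In the first call the stale `instance-3` stays in the registry, which mirrors how a down instance remains listed while self-preservation is active.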

The Eureka dashboard also performs this comparison every time you launch it, in order to display the message ‘…INSTANCES ARE NOT BEING EXPIRED JUST TO BE SAFE’.

Conclusion

- My experience with self-preservation is that it is a false positive most of the time: it incorrectly assumes a few down microservice instances to be a network partition.

- Self-preservation mode never ends until the down microservices are brought back (or the network glitch is resolved).

- If self-preservation is enabled, we cannot fine-tune the instance heartbeat interval, since self-preservation assumes heartbeats are received at intervals of 30 seconds.

- Unless these kinds of network glitches are common in your environment, I would suggest turning it off (even though most people recommend keeping it on).

Published at DZone with permission of Fahim Farook. See the original article here.
