The Anatomy of a Microservice, One Service, Multiple Servers

It’s (finally) time to look at the API Server layer and expose the business service to the outside world.

The first article of this series, "Microservice Definition and Architecture", includes a high-level architecture diagram. Subsequent articles have covered the architecture’s first two layers. It’s (finally) time to look at the API Server layer and expose the business service to the outside world. As has been the case for this series, I’ll continue to demonstrate the solution through a sample project that can be found on GitHub at https://github.com/relenteny/microservice.

There are two API Servers: RESTful and gRPC. The source is located in the media-server module. Why two implementations? The transport mechanism does make a difference. By far, the most common protocol is REST. It is easy to produce and consume, and it leverages a very mature transport mechanism: HTTP/1.x. Its ubiquitous support across tech stacks makes it the de facto standard protocol for API Servers.

On the other hand, gRPC requires more intentional design, because an interface specification (protobuf) must be provided up front. It also requires HTTP/2 as its transport, and browsers currently can’t call gRPC services directly (grpc-web is needed as a bridge). However, gRPC offers significant advantages, including a compact binary message format, bi-directional streaming, and improved performance at scale. The question becomes: if gRPC doesn’t have wide browser support, what’s its value? To me, this gets to the core definition of a microservice.

When I read articles or discuss microservices, the conversation almost always focuses on HTTP-based topics including REST and OpenAPI. While HTTP-based technologies are key to a microservice architecture, they are not the defining component of a microservice architecture. REST, as well as gRPC API Servers, provide the communication and marshaling component that allows a business service to interact with external processes.

I’ve been involved in multiple projects where a microservice contract was designed starting with a JSON specification. This is backward. The JSON specification is the marshaling definition for the RESTful exposure of a business service. The distinction may be subtle but, in my experience, when the definition starts with the JSON specification, decisions made at that level leak into the server-side implementation and paint the service into a corner where other integration use cases are never considered.
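As a minimal sketch of starting from the contract instead of the JSON, consider the following. The names `Movie`, `MediaService`, and `MovieJson` are hypothetical and are not taken from the sample project, whose service returns a reactive stream rather than the plain `List` used here to keep the example dependency-free. Records require Java 16 or later.

```java
import java.util.List;

// Hypothetical domain type: owned by the business service, not by any wire format.
record Movie(String title, int releaseYear) {}

// The contract. Nothing here mentions JSON, HTTP, or protobuf.
interface MediaService {
    List<Movie> getMovies();
}

// Marshaling lives at the edge: one possible JSON rendering for a RESTful API Server.
final class MovieJson {
    private MovieJson() {}

    static String toJson(Movie movie) {
        return "{\"title\":\"" + escape(movie.title())
                + "\",\"releaseYear\":" + movie.releaseYear() + "}";
    }

    // Escapes backslashes first, then double quotes, so the output stays valid JSON.
    private static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}

class ContractFirstDemo {
    public static void main(String[] args) {
        // Any implementation of the contract will do; a lambda stands in for one here.
        MediaService service = () -> List.of(new Movie("Metropolis", 1927));
        service.getMovies().forEach(movie -> System.out.println(MovieJson.toJson(movie)));
    }
}
```

Because the JSON shape is derived from `Movie` rather than the other way around, a second marshaling format can be added later without touching the contract.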

Back to the question about the value of gRPC then. While not out of the question, today, it’s unlikely that external applications would use gRPC to access a microservice. By external application, I mean an application accessing the microservice from outside the firewall, or external to an organization hosting the microservice. The answer to the value of a gRPC API Server is found behind the firewall or within an organization’s infrastructure.

The case can be made that in a true microservices environment, the interaction between microservices is just as frequent as, if not more frequent than, external interaction, including browsers. Being smart about microservice deployment affects the overall cost of running an application. Note that I didn’t say performance. Performance is an aspect of scaling. Scaling is an aspect of cost.

Therefore, the deployment of efficient microservices impacts scaling, which impacts cost. There are many articles and blogs on the performance and efficiency of gRPC over REST. While gRPC is not, at this time, readily consumable by the typical external API Server client (the browser), taking the time to add a gRPC API Server implementation of a microservice can play a role in the deployment of efficient microservices. Keep in mind that deployment capabilities and efficiencies play a major role in business competitiveness. Leveraging infrastructure as a competitive advantage is a real thing.

There are many gRPC resources. Microsoft has a nice, concise page providing a very good overview. You can find it at "Compare gRPC services with HTTP APIs", and yes, a Linux/Java guy just referenced a Microsoft website.

The Microsoft page summarizes gRPC and HTTP APIs nicely:

| Feature | gRPC | HTTP APIs with JSON |
|---|---|---|
| Contract | Required (.proto) | Optional (OpenAPI) |
| Protocol | HTTP/2 | HTTP |
| Payload | Protobuf (small, binary) | JSON (large, human-readable) |
| Prescriptiveness | Strict specification | Loose. Any HTTP is valid |
| Streaming | Client, server, bi-directional | Client, server |
| Browser support | No (requires grpc-web) | Yes |
| Security | Transport (TLS) | Transport (TLS) |
| Client code-generation | Yes | OpenAPI + third-party tooling |
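To give a rough feel for the "Payload" row, here is a small sketch that encodes the same two fields as JSON text and as a simple length-prefixed binary record. This is only an illustration of why a binary format with an agreed schema tends to be smaller; it is not the protobuf wire format, and the field names and values are made up for the example.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

class PayloadSizeDemo {
    // Text encoding: the field names travel with every message.
    static byte[] asJson(String title, int releaseYear) {
        String json = "{\"title\":\"" + title + "\",\"releaseYear\":" + releaseYear + "}";
        return json.getBytes(StandardCharsets.UTF_8);
    }

    // Illustrative binary encoding (NOT protobuf): both sides agree on field
    // order ahead of time, so only the values are written.
    static byte[] asBinary(String title, int releaseYear) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeUTF(title);        // 2-byte length prefix plus the encoded characters
            out.writeInt(releaseYear);  // fixed 4 bytes
        }
        return bytes.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        System.out.println("JSON bytes:   " + asJson("Metropolis", 1927).length);
        System.out.println("Binary bytes: " + asBinary("Metropolis", 1927).length);
    }
}
```

The trade-off is the one the table describes: the binary form is smaller because the contract carries the structure, while the JSON form stays readable without one.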

Multiple API Servers and Overall Microservice Architecture

Beyond supporting multiple transport mechanisms that can improve performance and efficiency, providing more than one API Server has an architectural benefit: it helps enforce separation of concerns. While the high-level architecture diagram presented in Microservice Definition and Architecture depicts a clear separation of concerns, implementing this pattern, like any other development effort, does require diligence.

In a previous article in this series, I stated that I believe developers have the best intentions in mind. Of course, there are exceptions, but individuals do want to do a good job. The problem comes in when deadlines loom. Things start to get thrown off the back of the truck. Shortcuts are taken. Non-functional requirements such as metrics gathering and reporting are missed.

The more an architecture helps guide a team, the less likely these things will happen. Specifically, in the case of having two API Servers, business logic remains where it’s supposed to remain: in the business service. If a decent level of testing exists, tests should fail if business logic is introduced into one API Server and not the other. The quick fix may be to copy business logic from one API Server to the other, but, during code analysis, that would flag a duplicate code violation.

Plus, if you’re copying and pasting, why not just cut from the one API Server and paste into the business service?
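The following sketch shows the shape this takes when both API Servers stay thin. All names here (`InMemoryMediaService`, `RestMediaResource`, `GrpcMediaEndpoint`, `getMoviesReleasedAfter`) are hypothetical stand-ins, not the sample project's classes, and no real REST or gRPC framework is involved; the point is only that the filtering rule exists in one place.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical domain type and contract, simplified for illustration.
record Movie(String title, int releaseYear) {}

interface MediaService {
    List<Movie> getMoviesReleasedAfter(int year);
}

// The business rule (the year filter) lives only here.
final class InMemoryMediaService implements MediaService {
    private final List<Movie> movies = List.of(
            new Movie("Metropolis", 1927),
            new Movie("Alien", 1979));

    @Override
    public List<Movie> getMoviesReleasedAfter(int year) {
        return movies.stream()
                .filter(movie -> movie.releaseYear() > year)
                .collect(Collectors.toList());
    }
}

// Stand-in for a RESTful API Server: delegate, then marshal to JSON text.
final class RestMediaResource {
    private final MediaService service;

    RestMediaResource(MediaService service) {
        this.service = service;
    }

    String getMovies(int afterYear) {
        return service.getMoviesReleasedAfter(afterYear).stream()
                .map(movie -> "{\"title\":\"" + movie.title() + "\"}")
                .collect(Collectors.joining(",", "[", "]"));
    }
}

// Stand-in for a gRPC API Server: delegate, then marshal to a message type.
final class GrpcMediaEndpoint {
    // Hypothetical message; a real one would be generated from a .proto file.
    record MovieMessage(String title) {}

    private final MediaService service;

    GrpcMediaEndpoint(MediaService service) {
        this.service = service;
    }

    List<MovieMessage> getMovies(int afterYear) {
        return service.getMoviesReleasedAfter(afterYear).stream()
                .map(movie -> new MovieMessage(movie.title()))
                .collect(Collectors.toList());
    }
}

class TwoServersDemo {
    public static void main(String[] args) {
        MediaService service = new InMemoryMediaService();
        System.out.println(new RestMediaResource(service).getMovies(1950));
        System.out.println(new GrpcMediaEndpoint(service).getMovies(1950));
    }
}
```

If the year filter were reimplemented inside `RestMediaResource`, the gRPC path would silently diverge, which is exactly the kind of drift a shared test suite across both servers should catch.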

Revisiting Business Service Implementations

In my previous article in this series, The Anatomy of a Microservice, Satisfying the Interface, I demonstrated multiple implementations of the business service. Two of those implementations are intended for use in server-side applications. If you’ve followed this series, hopefully, it’s no surprise that there are client implementations for both RESTful as well as gRPC business service invocation.

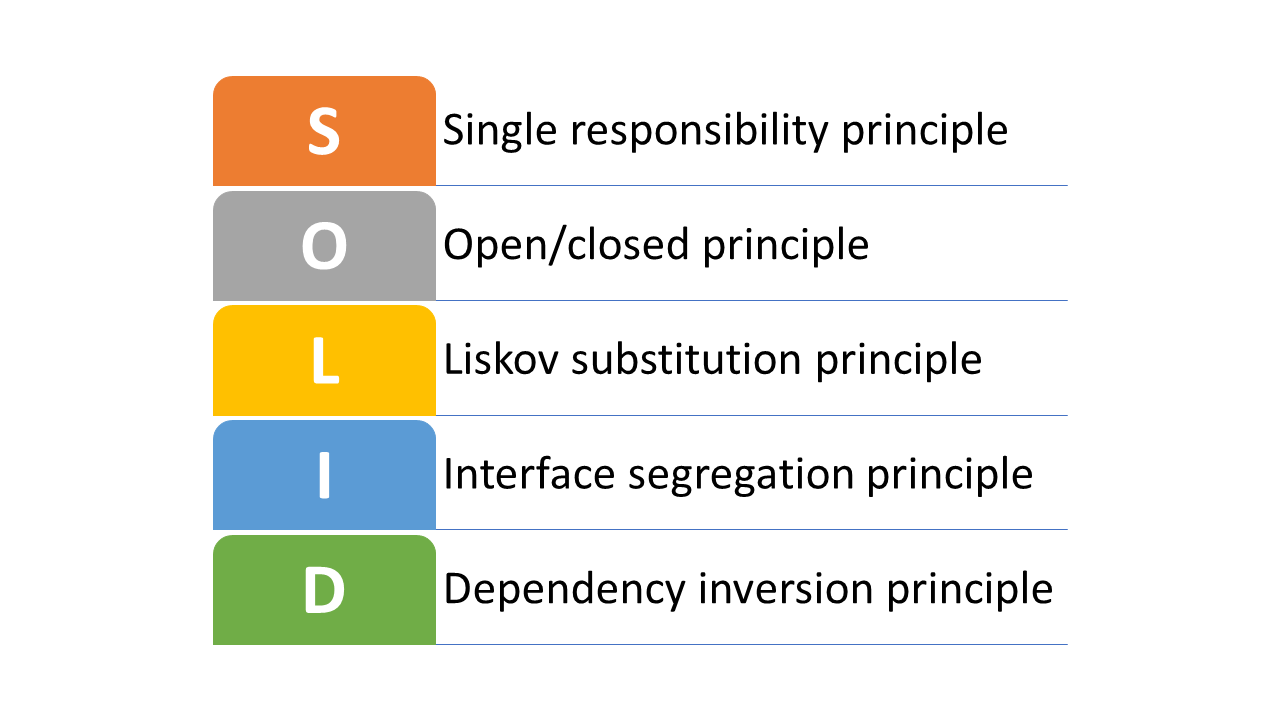

To me, this is where interface/contract design and inversion of control/dependency injection, both core tenets of SOLID, truly shine.

Why, as a consumer of an API, should I be concerned with the implementation specifics of accessing a service? As a consumer of an API, why should I develop, test, and instantiate a remote business service integration any differently than a service that would be in my own codebase? Once again referencing the high-level architecture diagram, you see the interaction between remote applications and the API Interface layer, but over to the right of the diagram, there’s a system interacting directly with the business service.

It may be that for security or performance reasons nothing can go over the wire, and an application must embed the service directly. Providing client implementations alongside the actual implementation of a business service gives the consumer integration choices. This is a contract-based design leveraging dependency injection at its very best. There is nothing new here, but strangely (to me at least), this pattern is seldom seen.

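```java
public class SampleClient {

    // Expected to be supplied by dependency injection; the concrete
    // implementation (native, REST, gRPC, or mock) is not visible here.
    MediaService mediaService;

    public static void main(String[] args) {
        SampleClient sampleClient = new SampleClient();
        sampleClient.getMovies();
    }

    public void getMovies() {
        // Print each movie title as it arrives, blocking until the stream completes.
        mediaService.getMovies().doOnNext(movie -> {
            System.out.println(movie.getTitle());
        }).blockingSubscribe();
    }
}
```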

What's the difference in the above code whether the implementation of the business service is native, REST, or gRPC, or a mock versus an actual implementation? I'd say it's a trick question, but since you've gotten this far in the article, you already know that there's no difference. The difference is in the deployment packaging, not in the compiled code.
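To make the point concrete without a container, here is a minimal sketch using plain constructor injection. The implementations `EmbeddedMediaService` and `RemoteMediaServiceStub` are hypothetical placeholders (the stub makes no network call), and the contract is simplified to return a `List` instead of the reactive type used in the sample project.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical, simplified contract and domain type.
record Movie(String title) {}

interface MediaService {
    List<Movie> getMovies();
}

// Consumer code: depends only on the contract.
final class SampleClient {
    private final MediaService mediaService;

    SampleClient(MediaService mediaService) {
        this.mediaService = mediaService;
    }

    List<String> movieTitles() {
        return mediaService.getMovies().stream()
                .map(Movie::title)
                .collect(Collectors.toList());
    }
}

// Stand-in for the embedded ("native") business service.
final class EmbeddedMediaService implements MediaService {
    @Override
    public List<Movie> getMovies() {
        return List.of(new Movie("Metropolis"));
    }
}

// Placeholder for a REST or gRPC client implementation; no network call is made.
final class RemoteMediaServiceStub implements MediaService {
    @Override
    public List<Movie> getMovies() {
        return List.of(new Movie("Alien"));
    }
}

class WiringDemo {
    public static void main(String[] args) {
        // Only the wiring differs; SampleClient is identical in both cases.
        List<MediaService> implementations =
                List.of(new EmbeddedMediaService(), new RemoteMediaServiceStub());
        for (MediaService implementation : implementations) {
            System.out.println(new SampleClient(implementation).movieTitles());
        }
    }
}
```

In a container-managed application the `WiringDemo` role is played by the dependency injection framework and the packaged dependencies, which is why the choice shows up in deployment rather than in the client's code.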

Up Next

When I started this article, my intent was to begin the discussion on building the microservices and looking at the Quarkus feature of using GraalVM to produce a native executable. As it obviously turned out, I spent a bit of time working through some of the architecture principles involved in developing the API Server implementations. Hopefully, you found it useful.

In my next article, we’ll explore building the application, looking at how Quarkus supports building both uber jars and native executables using GraalVM. Stay tuned for The Anatomy of a Microservice, Java at Warp Speed.
