Testing Your Monitoring Configurations

This article looks at how to test monitoring setups such as Fluentd and Fluentbit by replaying real logs or generating synthetic ones.

Monitoring is a small aspect of our operational needs, yet configuring tools such as Fluentd and Fluentbit, and then checking that configuration, can be a bit frustrating, particularly if we want to validate a more advanced configuration that does more than simply lift log files and dump the content into a solution such as OpenSearch. Fluentd and Fluentbit provide some very powerful features that can make a real difference operationally. One example is the ability to identify specific log messages and send them to a notification service rather than waiting for the next log analysis cycle to be run by a log store like Splunk. If we want to test the configuration, we need to play log events in as if the system were really running. That means realistic logs at the right speed, so we can make sure that our configuration prevents alert or mail storms.

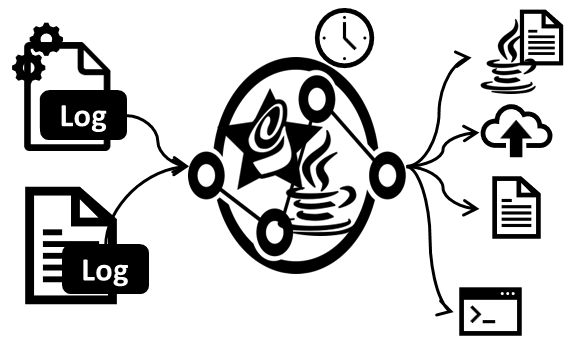

The easiest way to do this is to either take a real log and copy the events into a new log file at the speed they occurred or create synthetic events and play them in at a realistic pace. This is what the open-source LogGenerator (aka LogSimulator) does. I created the LogGenerator a couple of years ago, having addressed the same challenges before and wanting something that would help demo Fluentd configurations for a book (Logging in Action with Fluentd, Kubernetes, and more).
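
To make the idea of replaying a log "at the speed the events occurred" concrete, here is a minimal Python sketch. It is not how the LogGenerator itself is implemented; it assumes each log line starts with a timestamp in the form `2024-01-01 10:00:00`, and the names `replay_delays`, `replay`, and `speed_up` are made up for this illustration.

```python
from datetime import datetime
import time

# Assumption for this sketch: every line begins with a 19-character timestamp.
TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M:%S"


def parse_timestamp(line):
    """Read the leading timestamp from a log line."""
    return datetime.strptime(line[:19], TIMESTAMP_FORMAT)


def replay_delays(lines, speed_up=1.0):
    """Return how many seconds to wait before writing each line.

    The gap between consecutive timestamps is preserved, divided by
    speed_up so a long log can be replayed faster than real time.
    """
    delays = []
    previous = None
    for line in lines:
        current = parse_timestamp(line)
        gap = 0.0 if previous is None else (current - previous).total_seconds()
        # Out-of-order timestamps are written immediately rather than waited on.
        delays.append(max(gap, 0.0) / speed_up)
        previous = current
    return delays


def replay(lines, target_path, speed_up=1.0, sleep=time.sleep):
    """Append lines to target_path at the pace of the original events."""
    with open(target_path, "a", encoding="utf-8") as target:
        for line, delay in zip(lines, replay_delays(lines, speed_up)):
            sleep(delay)
            target.write(line.rstrip("\n") + "\n")
            # Flush so a tailing agent sees each event as it is "produced".
            target.flush()
```

Pointing `target_path` at a file that the log agent is tailing lets the agent see the events arrive one by one rather than as a single block. A `speed_up` greater than 1 shortens the gaps, which is handy for long logs but also changes how any time-based rules will behave.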

Why not simply copy the log file for the logging mechanism to read? There are several reasons. For example, if your logging framework can send the logs over the network without creating back pressure, then logs can be generated without being impacted by storage performance considerations, but that also means there is no tangible file to copy. If you want to feed log events from a database into your monitoring environment, this becomes even harder, as the DB will store the logs internally. The other reason is that if you have alerting controls based on thresholds over time, you need the logs to be consumed at the correct pace. Just allowing logs to be ingested whole is not going to correctly exercise such time-based controls.
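
The effect of pace on time-based controls can be shown with a small Python sketch. The rule here is hypothetical (alert when three or more matching events arrive within 60 seconds) and is not taken from any Fluentd or Fluentbit plugin; it also assumes the rule is evaluated on the time events arrive rather than on the timestamps inside them.

```python
from collections import deque


def count_alerts(arrival_times, threshold=3, window_seconds=60.0):
    """Count how often a 'threshold events within a window' rule fires.

    arrival_times are the moments (in seconds) at which the monitoring
    tool receives each matching event, in ascending order.
    """
    recent = deque()
    alerts = 0
    for arrival in arrival_times:
        recent.append(arrival)
        # Forget events that have fallen out of the time window.
        while recent and arrival - recent[0] > window_seconds:
            recent.popleft()
        if len(recent) >= threshold:
            alerts += 1
            recent.clear()  # start counting afresh after raising an alert
    return alerts


# Five errors that really occurred five minutes apart.
paced = [0.0, 300.0, 600.0, 900.0, 1200.0]

# The same five errors read in one go from a copied log file.
bulk = [0.0, 0.01, 0.02, 0.03, 0.04]

print(count_alerts(paced))  # no alert: the events never cluster
print(count_alerts(bulk))   # one alert the real system would not have raised
```

Ingesting the file whole makes unrelated events look like a burst, so the test would report an alert that production would never see. The reverse is also possible: a genuine burst can be hidden if the replay is slower than reality.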

Since then, I've seen similar needs to pump test events into other solutions, including OCI Queue and other Oracle Cloud services. The OCI service support has been implemented using a simple extensibility framework, so while I've focused on OCI, the same mechanism could be applied just as easily to AWS SQS, for example.

A good practice for log handling is to treat each log entry as an event and to think of log event handling as a specialized application of stream analytics. Given that the most common approach to streaming and stream analytics these days is based on Kafka, we're working on an adaptor for the LogSimulator that can send the events to a Kafka API endpoint.

We built the LogGenerator so it can be run as a script, which makes modifying it and extending its behavior quick and easy. We started out developing with Groovy on top of Java 8, and if you want to create a Jar file, it will compile as Java. More recently, particularly with the extensions we've been working on, we have been using Java 11 and its ability to run single-file classes directly from source.

We've got plans to enhance the LogGenerator so we can inject OpenTelemetry events into Fluentbit and other services. But we'd love to hear about other use cases you see for this.

For more on the utility:
