Testing Level Dynamics: Achieving Confidence From Testing

In this article, explore shared experiences to gain insight into how teams have tried to achieve confidence from testing.

All We Can Aim for Is Confidence

Releasing features is all about confidence: confidence that features work as expected; confidence that our work is based on quality code; confidence that our code is easily maintainable and extendable; and confidence that our releases will make customers happy. Development teams develop and test their features to the best of their abilities so that quality releases occur within the planned timeframe.

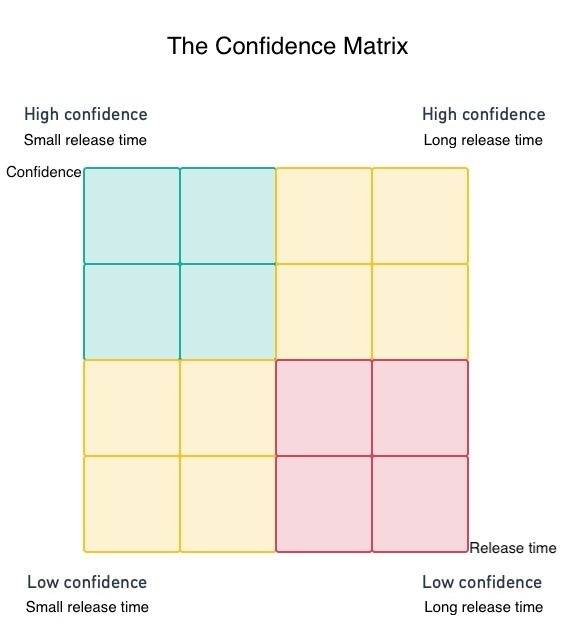

The confidence matrix shown above depicts four main areas:

- The high confidence and small release time area (an area that all development teams strive for)

- The low confidence and small release time area

- The high confidence and long release time area

- The low confidence and long release time area

The first is when we’ve made a quality release quickly. The second is when we quickly released features that may be buggy. The third is when it took us a while to do a quality release. The fourth is when it took us a while to make a buggy release. Think of the confidence matrix as a return on investment (ROI) matrix in its most basic form where our return is confidence and our investment is time.
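As a rough illustration of the four areas, the sketch below maps a release onto the matrix. The numeric confidence score, the deadline, and both threshold values are assumptions made for the example; the article does not define how confidence would be measured.

```python
from enum import Enum


class Area(Enum):
    HIGH_CONFIDENCE_SMALL_TIME = "quality release made quickly"
    LOW_CONFIDENCE_SMALL_TIME = "possibly buggy release made quickly"
    HIGH_CONFIDENCE_LONG_TIME = "quality release that took a while"
    LOW_CONFIDENCE_LONG_TIME = "possibly buggy release that took a while"


def classify_release(confidence: float, release_days: float,
                     confidence_threshold: float = 0.5,
                     deadline_days: float = 14.0) -> Area:
    """Place a release in one of the four areas of the confidence matrix.

    Both thresholds are hypothetical defaults chosen for illustration.
    """
    high = confidence >= confidence_threshold
    fast = release_days <= deadline_days
    if high and fast:
        return Area.HIGH_CONFIDENCE_SMALL_TIME
    if fast:
        return Area.LOW_CONFIDENCE_SMALL_TIME
    if high:
        return Area.HIGH_CONFIDENCE_LONG_TIME
    return Area.LOW_CONFIDENCE_LONG_TIME
```

For instance, `classify_release(0.9, 7)` lands in the area that all teams strive for, while `classify_release(0.2, 30)` lands in the one they want to avoid.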

When feature development starts, confidence could be high or low. We may be confident that we know what we must develop and how to do it. In my experience, however, most software projects start in the low-confidence zone, because new features can bring new unknowns. Most importantly, as our development and testing activities continue and the release deadline approaches, our confidence should increase. Unfortunately, this is not always the case.

To achieve confidence, most teams test and use development best practices. Despite their best efforts, I’ve seen teams releasing fast or slow with high or low confidence. Teams’ confidence may have started low but finished high or vice versa. This article shares experiences about how teams have tried to gain confidence from testing.

Confidence From Tests Requires Reliable Tests

Tests will either pass or fail. We execute them to get a true picture of the system under test. The system could be a unit or units of code or a complete application. The true picture could be that a new feature is ready to be released or that there are problems that need to be fixed before releasing. Once we’ve got the true picture we can make decisions based on testing results and not guesses.

How do we know that we’ve got the true picture? By trusting our testing results. Trusting our testing results means that no matter how many times we execute a test suite, all the tests will have no false positives and no false negatives. Tests should not pass accidentally. For example, if out of ten runs they pass five times and fail five times, they are not reliable. Such testing results are as good as guesses and will not give us a true picture of the system under test. A test may be failing for irrelevant reasons while the functionality that it exercises could be working as expected. We need to have reliable tests where we can trust our test results.
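One simple way to spot the "five passes out of ten" situation is to run the same test repeatedly and compare the verdicts. The sketch below is a minimal illustration of that idea; the helper names `pass_rate` and `is_consistent`, and the simulated flaky test, are hypothetical and not part of any testing framework.

```python
from typing import Callable


def pass_rate(test: Callable[[], None], runs: int = 10) -> float:
    """Run `test` repeatedly and return the fraction of runs that passed."""
    passed = 0
    for _ in range(runs):
        try:
            test()
            passed += 1
        except AssertionError:
            pass  # a failed assertion counts as a failing run
    return passed / runs


def is_consistent(test: Callable[[], None], runs: int = 10) -> bool:
    """True only if every run gave the same verdict (all pass or all fail)."""
    return pass_rate(test, runs) in (0.0, 1.0)


def stable_test() -> None:
    assert sorted([3, 1, 2]) == [1, 2, 3]


class AlternatingTest:
    """Simulates a flaky test: fails on odd calls, passes on even ones."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self) -> None:
        self.calls += 1
        assert self.calls % 2 == 0
```

Here `pass_rate(AlternatingTest())` is 0.5, so `is_consistent` rejects it. Consistent verdicts are only a necessary condition: a test that always fails for an irrelevant reason is consistent but still does not give a true picture.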

No matter how much code we cover with tests, no matter how fast or slow our tests run, we will get confidence from our testing efforts if and only if our tests are reliable.

Levels of Testing: Speed vs Scope

A simple way to understand scope is the following rule of thumb: large scope means that we cover many lines of code. Small scope means that we cover a few lines of code.

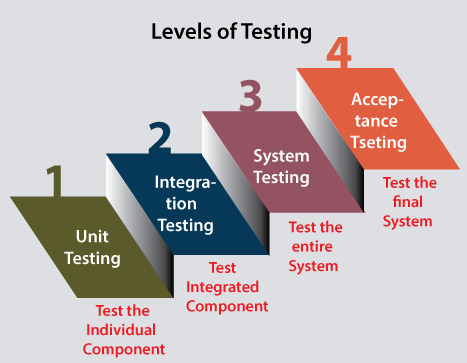

Traditionally, there are four testing levels. The lowest level is unit testing, followed by integration testing, system testing, and acceptance testing, which is the highest level.

Unit testing is about making educated decisions about what inputs should be used and what outputs are expected per input. Groups of inputs should be identified that have common characteristics and are expected to be processed in the same way by the unit of code under test. This is known as partitioning, and once such groups are identified, they should be covered by unit tests. Unit tests have a small scope. To cover our code thoroughly we need many unit tests. This is usually not a problem because we can run thousands of them in a few seconds. As we go from lower to higher testing levels the scope increases and test execution speed becomes an issue.
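Partitioning can be sketched with a small example. The `ticket_price` function and its age groups are hypothetical, invented only to show one representative input per group being covered by a unit test.

```python
import unittest


def ticket_price(age: int) -> int:
    """Hypothetical unit under test: the price depends on the age group."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 12:
        return 5   # child
    if age < 65:
        return 10  # adult
    return 7       # senior


class TicketPriceTest(unittest.TestCase):
    def test_one_representative_per_partition(self):
        # Each input stands in for a whole group expected to behave alike.
        cases = {6: 5, 30: 10, 80: 7}
        for age, expected in cases.items():
            with self.subTest(age=age):
                self.assertEqual(ticket_price(age), expected)

    def test_invalid_partition(self):
        # Negative ages form their own group: all are rejected the same way.
        with self.assertRaises(ValueError):
            ticket_price(-1)
```

Saved to a file, the tests can be run with `python -m unittest`. Three valid partitions and one invalid partition are covered with four inputs, rather than one test per possible age.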

Once a unit of code is defined, we may also define components of code by grouping code units together. Integration testing is about interactions and interfaces between different components. Compared with unit tests, integration tests have a larger scope, but their execution speed is roughly of the same order of magnitude.

At a system level, our product is tested at a large scope. A single system test could cover thousands of units and hundreds of components of code. Such tests take time to execute. If we could build confidence without needing thousands of them, then that would be good news. The bad news is that test execution speed is so low that it could prolong our feature releases considerably.

Similar to system tests, acceptance testing has a large scope. In some companies, it is performed by customers or company team members at the customer’s site. Other companies use acceptance testing as validation testing performed by the customers.

Speed Is Vital

To release a feature, we could test to gain confidence that it works as expected, functionally and non-functionally. It takes time to build confidence. We need time to perform development and testing, assess our testing results, and make a decision about releasing or not. Are we good to release or should we fix the bugs we’ve found, redeploy to test that all fixes are OK, and then release?

To minimize the feature-release time, we need to minimize at least:

- The time it takes to develop the feature: Using coding best practices during development is one way to introduce fewer bugs.

- The time it takes to test: We test to find bugs. Are they important? We should fix and redeploy. Are they not important? We could deploy with known issues.

There are teams that fix a bug, deploy the bug fix in a testing environment, test that the bug fix works as expected and that it does not introduce any new issues, and then deploy to production. Others deploy bug fixes directly to production (this is faster but could be riskier).

Release speed is vital. Depending on how much time we’ve got to release a feature, I’ve seen teams making various decisions in order to handle deadlines. These included:

- Features are released without testing while coding standards used for development are questionable. An example of this is a team that usually started and finished their development efforts in the low confidence area. The team has had a hard time understanding why a number of problems have arisen after their releases. Most importantly, the most critical problems remained under their radar for a long time.

- Features are released without testing while other coding standards are met and developers are confident with their code. There was a team of experienced developers that did not believe in testing. The closest they got to testing was debugging their code. They were usually between the high confidence/small release time and high confidence/long release time areas in the confidence matrix. Bugs occasionally slipped under their radar, and testers from other teams were brought in for QA testing when the team was about to release features with rich functionality.

- Features are released with just a few unit or integration-level tests but a large number of UI tests. This is a case that I’ve seen many times. Such teams would fall in any of the four areas in the confidence matrix. When showstopper bugs were found late by the testers and when fixing them required major rewrites from developers, the team's confidence was low and the release deadlines may have been prolonged. Even if no showstoppers were found, testing was a bottleneck. Developers were reluctant to change the code in a number of areas and each change called for extensive regression testing at the UI from QA testers. When releasing features with rich functionality, QA testing at the UI was a bottleneck because the test execution speed was low and the tests were many. UI test automation has helped to overcome this problem for some teams while for other teams it gave a smaller ROI than expected.

- Features are released with a large number of unit and integration tests and a minimal set of UI tests. Such teams would usually fall in the high confidence/small release time area of the confidence matrix. Bugs may occasionally have gone under the radar, especially for features with rich functionality, but they were usually fixed quickly without side effects. These teams had continuous integration and continuous deployment set up, and their continuous builds ran unit tests and integration tests. Frequently executing unit and integration tests was the main source of their confidence. A final confidence boost was given by a small number of manual exploratory tests in the UI.

- Features are released with a large number of integration tests, a number of unit tests, and a few UI tests. This was the case for teams that used microservices and teams that executed a large number of front-end tests. Some JavaScript frontend developers, for example, were strong believers in the “write tests, not too many, mostly integration” paradigm. Backend developers writing microservices believed that in a world of microservices, the biggest complexity is not within the microservice itself, but in how it interacts with others. As a result, they gave special attention to writing tests exercising interactions between microservices. Such teams usually avoided the low confidence and long release time area in the confidence matrix.

Following good coding standards and best coding practices does not mean that we should not test. In fact, testing is another best practice for coding. As this article focuses on testing and not other coding standards, it suffices to mention that testing is always a good idea. However, when developing and testing, our release speed will be affected by our testing speed, too. Testing dynamics per testing level need to be taken into account, in order to get the most value from our testing efforts for the allocated time.
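To make the link between testing speed and allocated time concrete, here is a minimal sketch that picks test suites fastest-first until a time budget is used up. The suite names and durations are hypothetical figures chosen for illustration, and `plan_test_run` is not a function from any real tool.

```python
from typing import List, Tuple


def plan_test_run(suites: List[Tuple[str, float]],
                  budget_minutes: float) -> List[str]:
    """Pick suites fastest-first, skipping any that no longer fit the budget."""
    plan = []
    remaining = budget_minutes
    for name, minutes in sorted(suites, key=lambda suite: suite[1]):
        if minutes <= remaining:
            plan.append(name)
            remaining -= minutes
    return plan


# Hypothetical suite durations in minutes.
SUITES = [("ui", 480.0), ("unit", 2.0), ("integration", 15.0), ("system", 120.0)]
```

With a 60-minute budget, `plan_test_run(SUITES, 60)` selects only the unit and integration suites. A real plan would also weigh which functional areas matter most, not just how long each suite takes.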

Test Execution Speed

Testing at any level is important and necessary. The lower the testing level the faster the test execution speed. I’ve witnessed at least three ways that test execution speed has affected how development teams work.

- To identify what compromises to make: If we must make compromises, make an educated decision about what to do and what to avoid. Depending on how much time we have for testing, I've seen teams choosing at what testing level they should test. Ideally, if time and costs were not a constraint, we should test at all levels possible. This is because 100% test coverage at a unit level does not mean that we will catch no bugs with integration testing and/or with system testing. The same is true for each testing level. However, a test suite of 1000 unit tests may take an hour to complete while a UI automation suite with 200 tests may take one day to complete. Although choosing not to test at any level may involve risks, if we have little time to dedicate to testing we may make educated decisions according to what tests we want to run and at what level.

- To identify how fast we will get feedback from our tests: The test result is our feedback. Did the test pass? Our feedback is a green light. Did the test fail? Our feedback is a red light. One development team first tested the most important functional and non-functional areas of their release, and did so at the testing level where test execution was fastest. As a result, showstopper bugs could be found early during testing and hence they were also fixed early without jeopardizing release time. The main factor that had lowered their confidence was showstoppers that were found late and fixed late, resulting in missed release deadlines. They found that the best way to allocate their testing efforts was to start with quick-feedback testing (unit and integration testing) and, if no showstoppers were found, to spend the remaining time on higher-level testing.

- To help identify our testing levels: People often go back and forth about whether particular tests are unit tests or integration tests. Large unit tests could also be considered small integration tests and vice versa. But what are they really and at what level do they belong? There was a team that shared a definition like, "If a test talks to the database or if it communicates across the network, if it involves accessing file systems like editing configuration files, then it’s not a unit test." The reasoning behind this was simple: test execution speed. If a test talked to the database, for example, then it would take longer to execute. Since unit tests are the fastest across all testing levels, the team decided to call low-level tests that performed such time-consuming actions integration tests. Another team was using fault detection time as a guide. When a test failed, they looked at how long it took to detect the fault in the code that caused the failure. If it took seconds, then the failing test was a unit test. If it took minutes, then the failing test was an integration test.
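The two team rules from the last bullet can be written down as small predicates. This is only a sketch: the `LowLevelTestProfile` type is hypothetical, and the 60-second cutoff between "seconds" and "minutes" is an assumption, since the team's exact boundary is not stated.

```python
from dataclasses import dataclass


@dataclass
class LowLevelTestProfile:
    """Hypothetical description of what a low-level test touches."""
    name: str
    uses_database: bool = False
    uses_network: bool = False
    uses_filesystem: bool = False


def level_by_dependencies(test: LowLevelTestProfile) -> str:
    """First team's rule: slow external access means it is not a unit test."""
    if test.uses_database or test.uses_network or test.uses_filesystem:
        return "integration"
    return "unit"


def level_by_fault_detection(seconds_to_locate_fault: float) -> str:
    """Second team's rule: seconds means unit, minutes means integration.

    The 60-second cutoff is an assumed boundary for illustration.
    """
    return "unit" if seconds_to_locate_fault < 60 else "integration"
```

For example, a test profile created with `uses_database=True` is labelled an integration test under the first rule, and a failure whose fault takes three minutes to locate is labelled an integration test under the second.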

There was a group that relied on architects and tech leads to write a few integration tests. Their main goal was to ensure that the choreography and orchestration of the architectural components were working. Such tests usually covered at most 10 to 20% of the code and, having a large scope, were usually slow.

In another group, QA and business analysts wrote acceptance tests to achieve a maximum of 50% of code coverage. They also wrote a few system tests as final tests of choreography and orchestration. The system tests covered very little of the actual business rules and were the slowest.

Wrapping Up

There is a popular debate about what percentage of tests to write at what testing level. I’ve tried to shift the focus a little toward confidence over time. It’s all about confidence, and a great deal of it can be achieved by running tests quickly and reliably. Testing closer to the unit/integration level is quicker and necessary, but not sufficient. Higher testing levels also need to be covered, which will probably cost more in execution time, maintenance, and reliability. Let’s not forget one of our basic prerequisites for tests to be valuable: tests must pass for the right reasons and fail for useful reasons. I’ve shared a number of experiences about how different development teams managed their testing efforts, resulting in different levels of confidence over time.

