Testing in CI

Automated testing is a must in continuous integration, and there are four characteristics that should take priority.


This article is featured in the new DZone Guide to DevOps: Implementing Cultural Change. Get your free copy for insightful articles, industry stats, and more!

Automated tests are a key component of continuous integration (CI) pipelines. They provide confidence that with newly added check-ins, the build will still work as expected. In some cases, the automated tests have the additional role of gating deployments upon failure.

With such a critical responsibility, automated tests must be developed to meet the needs of continuous integration. Four main factors should be considered when developing automated tests for CI: speed, reliability, quantity, and maintenance.

Speed

A major benefit of CI is that it provides the team with fast feedback. However, having a suite of automated tests that take hours to run negates that benefit. For this reason, it's important to design automated tests to run as quickly as possible.

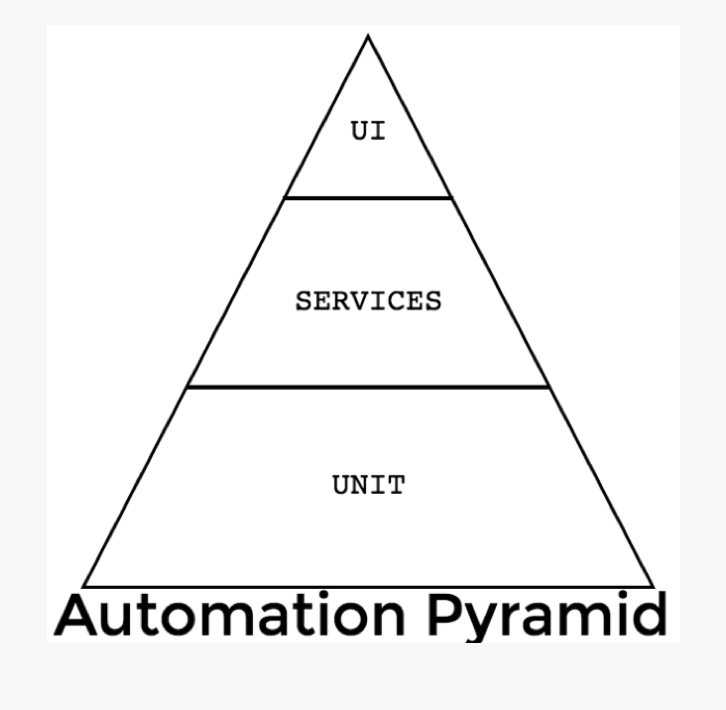

One way to accomplish this is to automate the tests as close to the production code as possible. The test automation pyramid, a model introduced by Mike Cohn in Succeeding With Agile: Software Development Using Scrum, describes three levels at which tests can be automated: unit, services, and UI.

Automation Pyramid

Unit Level

The unit level is closest to the production code. Automated tests at this level are quick to write and quick to run. More importantly, they are able to pinpoint the exact function in which a bug exists. Therefore, the bulk of the tests that are run as part of CI should be written at this level. This aligns with the goal of fast feedback.

Services Level

The services level is a bit further from the code itself and focuses on the functionality that the code provides, but without a user interface. Tests automated at this level can make calls to the product's APIs and/or business logic to verify the integration of various individual functions. This level should contain the second largest number of automated tests after the unit level.
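As a rough illustration of a services-level test, the sketch below calls a small piece of business logic directly, with no UI involved, and checks that two individual functions work together. All names here (`PRICES_CENTS`, `unit_price`, `apply_discount`, `order_total`) are hypothetical and only stand in for a product's real API or business layer.

```python
# Hypothetical business logic; none of these names come from a real product.
PRICES_CENTS = {"book": 1200, "pen": 150}


def unit_price(sku: str) -> int:
    """Look up the price of one product, in cents."""
    return PRICES_CENTS[sku]


def apply_discount(total_cents: int, percent: int) -> int:
    """Reduce a total by a whole-number percentage, rounding down to a cent."""
    return total_cents * (100 - percent) // 100


def order_total(items: dict[str, int], discount_percent: int = 0) -> int:
    """Service-level entry point: combines price lookup and discounting."""
    subtotal = sum(unit_price(sku) * qty for sku, qty in items.items())
    return apply_discount(subtotal, discount_percent)


def test_order_total_combines_prices_and_discount() -> None:
    # 2 * 1200 + 4 * 150 = 3000 cents, minus 10% = 2700 cents.
    assert order_total({"book": 2, "pen": 4}, discount_percent=10) == 2700
```

The test is written as a plain function with an `assert`, so it can be collected by a runner such as pytest or simply called directly.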

UI Level

The furthest level from the production code is the UI level. Tests written at this level face unique challenges in that they take longer to write, take longer to execute, and are dependent upon the consistency of the UI. Because of this expense, this level should contain the fewest automated tests.

When considering a test for inclusion in CI builds, determine what information the test needs to verify and write it against the lowest level of the pyramid that can provide that information. The lower the level, the faster the test, which upholds the goal of fast feedback.

Reliability

Automated tests that are run as part of CI need to be highly reliable. The state of the build is dependent upon the state of the tests, and manual intervention is impractical. Yet, reliability is often something teams struggle to achieve within their automated tests.

Flaky tests (tests that sometimes pass and sometimes fail when run against the same code base) are a detriment to CI. False negatives, meaning tests that fail even though the code is correct, force developers to investigate the failures to confirm that their check-in is not at fault, which takes time away from other development activities. If there are too many false negatives, the team loses trust in the tests and begins to disregard them altogether. False positives, meaning tests that pass even though the code is broken, can have just as much of an impact, because they tell the team that the integration is fine when it is not. These tests can allow bugs to creep into production.

There are techniques that can be employed to minimize flakiness within automated tests.

Improve Test Automatability

One reason so many tests are written at the UI level is a lack of code seams that would allow automating at lower levels. Code seams are exposed shortcuts that your tests can take advantage of to bypass the ever-changing UI.

For example, if a test scenario is to verify that the quantity of a product can be increased within an online shopping cart, the steps to search for the product and add the product to the cart are prerequisites but immaterial to the verification of this specific scenario. Yet, these steps would often be automated via the UI as part of the test. There are a lot of things that can go wrong here before the test ever gets to what it is supposed to be verifying: increasing the quantity in the cart. Code seams, such as a web service to add the product to the cart, can help remove these unnecessary UI actions and reduce flakiness.
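The shopping cart scenario could be sketched roughly as follows. `CartService` is a hypothetical in-memory stand-in for the cart web service (the seam), and `CartPage` is a hypothetical page object that, in a real suite, would drive a browser. Neither comes from a real library. The point is only the shape of the test: the prerequisite goes through the seam, and the UI is used solely for the step being verified.

```python
class CartService:
    """Hypothetical in-memory stand-in for the shop's cart web service."""

    def __init__(self) -> None:
        self._carts: dict[str, dict[str, int]] = {}

    def add_item(self, user: str, sku: str, quantity: int = 1) -> None:
        cart = self._carts.setdefault(user, {})
        cart[sku] = cart.get(sku, 0) + quantity

    def quantity(self, user: str, sku: str) -> int:
        return self._carts.get(user, {}).get(sku, 0)


class CartPage:
    """Hypothetical page object; a real one would drive a browser."""

    def __init__(self, service: CartService, user: str) -> None:
        self._service = service
        self._user = user

    def click_increase(self, sku: str) -> None:
        # Simulates clicking the "+" control next to the product.
        self._service.add_item(self._user, sku, 1)

    def displayed_quantity(self, sku: str) -> int:
        return self._service.quantity(self._user, sku)


def test_quantity_can_be_increased() -> None:
    service = CartService()
    # Prerequisite via the seam: no UI search, no "add to cart" click.
    service.add_item("test-user", "sku-123", quantity=1)

    # Only the behavior under test goes through the UI.
    page = CartPage(service, "test-user")
    page.click_increase("sku-123")

    assert page.displayed_quantity("sku-123") == 2
```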

Another way to improve test automatability is to ensure that your application's UI elements contain reliable identifiers. These identifiers are used as locators within test automation to interact with the UI elements. Without a dedicated identifier, the test is forced to locate the element with XPath or CSS selectors that depend on the page structure. Such locators are fragile: they stop matching when the layout or appearance of your application changes, which breaks your UI automation.
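The difference can be demonstrated without a browser. The sketch below uses Python's standard `xml.etree.ElementTree` on two made-up versions of a page. `PAGE_V1`, `PAGE_V2`, and the `checkout` identifier are all assumptions for illustration. ElementTree has no dedicated by-ID lookup, so the identifier-based locator is written as an attribute predicate; the contrast to notice is with the positional path.

```python
import xml.etree.ElementTree as ET

# Hypothetical page markup, before a redesign.
PAGE_V1 = """
<body>
  <div><button id="checkout">Checkout</button></div>
</body>
""".strip()

# The same page after a redesign: a banner is added and the button is nested.
PAGE_V2 = """
<body>
  <div><p>Free shipping this week</p></div>
  <div><span><button id="checkout">Checkout</button></span></div>
</body>
""".strip()

POSITIONAL = "./div[1]/button"        # depends on the page layout
BY_ID = ".//button[@id='checkout']"   # depends only on the identifier


def find(page: str, locator: str):
    """Return the first element matching the locator, or None if it misses."""
    return ET.fromstring(page).find(locator)


# The positional locator works on the old layout and misses on the new one.
print(find(PAGE_V1, POSITIONAL) is not None)
print(find(PAGE_V2, POSITIONAL) is not None)

# The identifier-based locator finds the button in both versions.
print(find(PAGE_V1, BY_ID) is not None)
print(find(PAGE_V2, BY_ID) is not None)
```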

Manage the Test Data

Tests should not make assumptions about the data that they rely upon. Changes to test data are a common cause of failed tests. This gets particularly flaky when other tests running in parallel are modifying that data.

Avoid hardcoding expected values in assertions. Either create whatever data is needed as part of the test itself using code seams, or consult a source of truth (e.g., an API or database) for the expected result.
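A minimal sketch of the first option, creating the data inside the test, might look like this. `ProductStore` is a hypothetical in-memory stand-in for the API or database, and `render_price_label` is a hypothetical function under test. The unique name is there so that tests running in parallel do not collide on shared records.

```python
import uuid


class ProductStore:
    """Hypothetical in-memory stand-in for the product API or database."""

    def __init__(self) -> None:
        self._products: dict[str, dict] = {}

    def create(self, name: str, price: float) -> str:
        product_id = str(uuid.uuid4())
        self._products[product_id] = {"name": name, "price": price}
        return product_id

    def get(self, product_id: str) -> dict:
        return dict(self._products[product_id])


def render_price_label(store: ProductStore, product_id: str) -> str:
    """Hypothetical code under test: formats a product's price for display."""
    product = store.get(product_id)
    return f"{product['name']}: ${product['price']:.2f}"


def test_price_label_uses_data_created_by_the_test() -> None:
    store = ProductStore()
    # The test creates its own record instead of assuming one already exists.
    name = f"widget-{uuid.uuid4().hex[:8]}"
    product_id = store.create(name, 19.5)

    # The expected value is derived from data this test controls.
    assert render_price_label(store, product_id) == f"{name}: $19.50"
```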

Account for Asynchronous Actions

Tests automated at both the services and UI levels need to account for asynchronous actions. Scripts execute scenarios much faster than humans do, so at the point of verification the system may not yet be in its expected state, which leads to flaky tests. To avoid this, have the automated test wait conditionally until the application reaches its desired state before taking the next action. Avoid hardcoded waits: a fixed delay is either longer than needed, which defeats the purpose of fast feedback within CI, or too short on a slow run, which brings the flakiness back.
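A conditional wait can be as small as a polling helper. The sketch below is one possible shape, not a reference implementation: `wait_until` and its `timeout` and `interval` parameters are names chosen here for illustration, and the asynchronous backend is simulated with a timer thread.

```python
import threading
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True as soon as the condition holds, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def test_order_status_becomes_ready() -> None:
    status = {"ready": False}
    # Simulate an asynchronous backend that finishes after about 100 ms.
    threading.Timer(0.1, lambda: status.update(ready=True)).start()

    # The test proceeds as soon as the state is reached, not after a fixed sleep.
    assert wait_until(lambda: status["ready"], timeout=2.0)
```

Because the helper returns as soon as the condition holds, a fast run costs only a few polling intervals, while the timeout bounds how long a genuinely broken run can stall the build.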

Quantity

To keep the CI process as fast as possible, be mindful of which tests you're adding to it. Every test should serve a distinct purpose. Many times, teams will aim to automate an entire regression backlog or every single test in a sprint. This leads to longer execution times, low-value tests blocking integration, and increased maintenance efforts.

Evaluate your scenarios and determine which are the best candidates for automation based on risk and value. Which ones would cause you to stop an integration or deployment? Which ones exercise critical core functionality? Which ones cover areas of the application that have historically been known to fail? Which ones are providing information that is not already covered by other tests in the pipeline?

Even when being very intentional about the scenarios that will be a part of your CI pipeline, the suite of automated tests will naturally grow. However, you do not want your overall build time to also increase. This can be remedied by running tests in parallel, which means none of your tests should be dependent on each other. For extremely large suites, the tests can also be grouped by key functional areas. Grouping the tests this way allows the flexibility of having CI only trigger the execution of specific groups that may be affected by the development change made, and running the other groups once or twice a day as part of a separate job.
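One way to picture the grouping idea is a small selection step that maps changed files to test groups. The sketch below assumes a hypothetical repository layout and group names (`cart`, `search`, `accounts`). A real pipeline would supply its own mapping and feed the result to its test runner.

```python
from fnmatch import fnmatch

# Hypothetical mapping from test group to the source paths it covers.
GROUPS = {
    "cart": ["src/cart/*", "src/pricing/*"],
    "search": ["src/search/*"],
    "accounts": ["src/accounts/*"],
}


def groups_for_change(changed_files, groups=GROUPS):
    """Return the sorted names of test groups affected by the changed files."""
    return sorted(
        name
        for name, patterns in groups.items()
        if any(fnmatch(path, pattern)
               for path in changed_files
               for pattern in patterns)
    )


# A change to pricing code triggers only the cart group on check-in.
print(groups_for_change(["src/pricing/tax.py", "README.md"]))
```

Groups that are not selected here would still run in the separate scheduled job, so nothing is permanently skipped. With pytest, for instance, such groups are commonly expressed as markers and selected with the `-m` option.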

Also, don't be afraid to delete tests that are no longer of high value or high priority. As your application evolves and certain areas prove to be very stable or even underutilized, the need for certain tests may vanish.

Maintenance

Your test code requires maintenance. There is no getting around this. The test code was written against your application at a given state, and as the state of your application changes, so must your test code.

The CI test results must be consistently monitored, failures must be triaged, and test code must be updated to reflect the new state of the application.

Avoiding this step leads to untrustworthy builds where the tests are no longer useful to the overall CI process.


