Testcontainers + Liquibase: Make Integration Testing Easier

You can use Liquibase and the Testcontainers library to make integration testing much less headache-inducing. I’ll show you how to do it, step by step.

Join the DZone community and get the full member experience.

Join For FreeThe sheer number of tools, libraries, and frameworks can give many programmers a headache. Moreover, complex designs often require many of these components to work together or at least not interfere with each other.

Database versioning — and integration tests during which we conduct it — are great examples of such problematic cooperation. There is also the aspect of the persistence layer in our code, which will be the subject of the above-mentioned tests.

In this article, I’ll show you how to simplify this process using Liquibase and the Testcontainers library for Docker.

Prerequisites

Before we move on to the example, it is worth familiarizing yourself with the following concepts, as they will be the subject of our consideration.

Database Versioning

Since versioning all code changes is standard practice, why not transfer this practice to the database layer? It already happens in many projects, but I think it’s still worth remembering this process so that it becomes as natural as using Git.

DB versioning allows us to keep control of any changes to the database. It may apply to updating the schema, adding or removing tables, columns, etc. In addition to the auditability of changes, the available tools also allow you to freely manage them in different environments and easily roll them back if something goes wrong.

At Pretius, we use Liquibase for this, and we have a detailed Liquibase tutorial on our blog, so be sure to check it out. There are other options, however, such as Flyway.

Integration Tests

I hope I don’t have to explain why you should always test your code – it’s always a necessary step of the software development process.

Integration testing is a very broad topic, so we will limit ourselves to a specific, smaller area. Namely, we’ll focus on tests related to the persistence layer, i.e., whether our code correctly integrates with the database.

In the case of frameworks such as Hibernate, tests of automatically generated SQL may make less sense – after all, we don’t necessarily need to test a library that has already been tested extensively by its creators. However, in the case of, e.g., MyBatis, where we write SQL code ourselves, such tests become a necessity.

@Insert("INSERT INTO OFFERS (offer_name) VALUES (#{offer.offerName});")

@Options(useGeneratedKeys = true, keyProperty = "offer.id")

void save(@Param("offer") Offer offer);The Problem We Want To Solve

We will consider a combination of these two aspects: DB integration tests and DB versioning.

If you have already decided that in your project, all database changes are made using a dedicated tool (like Liquibase), it would be a sin not to go a step further and not use it in integration tests.

The sample code on GitHub contains an application based on Spring Boot and MyBatis. As I’ve mentioned, handwritten SQL (which is what MyBatis forces us to do) opens up many possibilities to make a mistake. Whether you edit existing queries or write new ones, it’s easy to make a typo or cut off the ending when pasting the query from the DB console to the application code. Despite all the great things about it, Spring Boot will catch such an error only when you try to execute the query in runtime. If you’re unlucky, you can find out that you made a mistake after a few days, which definitely isn’t a desirable situation.

For testing purposes, I’ve used the Testcontainers library, which can run the required infrastructure (PostgreSQL database, in my case) during a unit test conducted using Docker.

In addition to the database, Testcontainers can provide us with a number of other elements of our infrastructure: queues, cache, and document databases. I recommend reading the documentation. You may find that tests that once seemed impossible to write become prosaically simple.

Why Combine These Two Elements?

Someone could ask right now: why don’t you use a local database for such tests or a database from some test environment? One of the reasons for that is repeatability. It is crucial that you run your tests in the same conditions each and every time, and ongoing work, whether in the local or test environment, should not affect their course.

Thanks to Liquibase, you can be 100% sure that your database will be consistent with what you currently have in the repository. Moreover, by using Testcontainers, you can create an independent test environment in just a few seconds.

To sum up. The elements that make up my example are:

- The application is based on the Spring-boot 3+ framework

- Liquibase — DB versioning

- MyBatis — as a data persistence layer

- Testcontainers — provisioning testing infrastructure

- Junit 5 — tests

- PostgreSQL

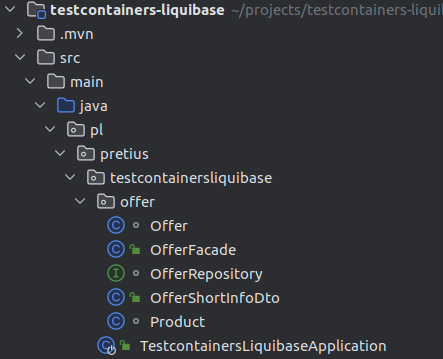

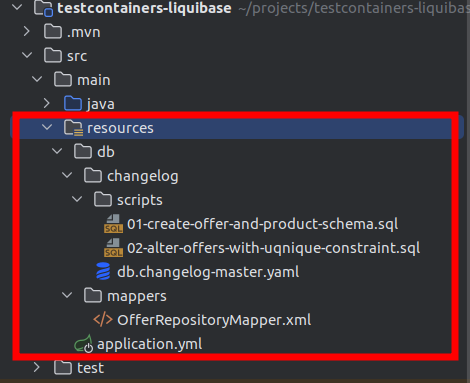

The structure of our application looks like this:

In short, it’s really nothing special. Two model classes, one repository class, and a public facade with a DTO that provides functionalities “to the world.” The Resources area will be more interesting. This is where the Liquibase SQL scripts and changelog reside.

To run the application using the spring-boot:run command, you will need a local database, which you can quickly create using Docker and the docker-compose.yml file attached to the repository.

docker-compose up -d

./mvn spring-boot:runAfter executing the above commands, the application will start, but the process will be finished in a brief moment. Nevertheless, you will be able to observe several changes that have taken place since its launch:

- You should see two entries in the logs:

SQL in file changelog/scripts/01-create-offer-and-product-schema.sql executed

SQL in file changelog/scripts/02-alter-offers-with-uqnique-constraint.sql executed- There should be four new tables in your local database:

The first two tables are needed for Liquibase to run, and audit data is stored there. Offers and products, however, are tables that you intentionally created with your SQL scripts.

To make this possible, you only need one entry in pom.xml and the appropriate directory structure in resources. Spring Boot takes care of the rest.

<dependency>

<groupId>org.liquibase</groupId>

<artifactId>liquibase-core</artifactId>

</dependency>Testing

As some of you have probably already guessed, you can use a lot of what I’m writing about here in tests without too much effort.

If you try to run a test that raises the spring context, the same thing will happen as when you started the application.

Liquibase will try to create tables — if they don’t already exist — in the local database. But you want something different.

It goes without saying that using a local database in unit tests is a bad idea. The Testcontainers library comes to your aid here. You can use it to successfully run a fresh database in the form of a container, both locally and within our CI/CD.

What Do We Need To Change?

Add the following dependency to your pom.xml file.

<dependency>

<groupId>org.testcontainers</groupId>

<artifactId>postgresql</artifactId>

<version>1.17.6</version>

<scope>test</scope>

</dependency>Plus a bit of configuration:

spring:

datasource:

username: test

password: test

url: jdbc:tc:postgresql:15.1:///test

driver-class-name: org.testcontainers.jdbc.ContainerDatabaseDriver

mybatis:

mapper-locations: classpath:db/mappers/*.xmlThe omniscient Spring Boot already knows that it needs to download and run the 15.1 PostgreSQL container.

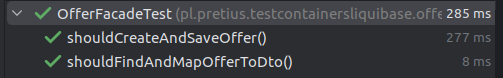

To prove that everything works, you can run two tests. The first will write to the DB, and the second will read the previously saved data. No mocks. All of the testing is done on the production code.

@Test

@Order(1)

void shouldCreateAndSaveOffer() {

//when

OfferShortInfoDto offer

= offerFacade.createOffer("TestOfferName", "TestProductName", BigDecimal.valueOf(123.12));

//then

Assertions.assertEquals("TestOfferName", offer.getOfferName());

Assertions.assertEquals(BigDecimal.valueOf(123.12), offer.getTotalPrice());

}@Test

@Order(2)

void shouldFindAndMapOfferToDto() {

//when

OfferShortInfoDto offer = offerFacade.findOfferByOfferName("TestOfferName");

//then

Assertions.assertEquals("TestOfferName", offer.getOfferName());

Assertions.assertEquals(BigDecimal.valueOf(123.12), offer.getTotalPrice());

}Where Do Errors in Handwritten SQL Come From?

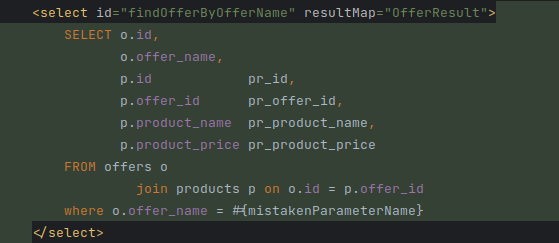

As I’ve mentioned earlier, handwritten SQL leaves plenty of room for “silly” mistakes. MyBatis gives you full control over how your queries look (which is its greatest asset), but writing them in XML files or in the form of annotations in Java classes isn’t the most pleasant thing out there.

Therefore, the most common practice is to write queries in the DB console and then paste them into XML. Unfortunately, MyBatis syntax is not 100% pure SQL. There are various types of tags, conditional instructions, or even parameters that need to be completed in XML. I think you can infer what this means yourself…

Liquibase and Testcontainers in an Existing Project?

Any time is good if you want to implement DB versioning in your project. There shouldn’t be any technical problems here. It’s just a decision the team needs to make — that from now on, everyone will use the selected tool, e.g., Liquibase.

What About Docker?

The problem is that Liquibase won’t be able to create a database from scratch using scripts. So it will need some help.

If we use the example of the PostgreSQL database, one of the possibilities is to create a backup copy and prepare your own Docker image based on it. This can be useful when your database is already large, and restoring it from scratch each time would be time-consuming. It’s worth noting that Testcontainers allows you to run images, and not only from its repository. In the example, I used DockerHub to run my custom Docker image.

Using the Testcontainers SDK, you can create the appropriate configuration that shows you the image that we built earlier.

public static PostgreSQLContainer postgresqlContainer = new PostgreSQLContainer(

DockerImageName.parse("arkadiuszrosloniec/postgres-preloaded-testcontainers:1.0.0")

.asCompatibleSubstituteFor("postgres")

)

.withDatabaseName("db")

.withUsername("test_user")

.withPassword("test_password");Now, using Junit 5 annotations, you need to start the container and set the appropriate data source configuration so that Spring Boot knows where your database has started.

@BeforeAll

public static void setUp() {

postgresqlContainer.setWaitStrategy(

new LogMessageWaitStrategy()

.withRegEx(".*database system is ready to accept connections.*\\s")

.withTimes(1)

.withStartupTimeout(Duration.of(60, ChronoUnit.SECONDS))

);

postgresqlContainer.start();

}

@DynamicPropertySource

public static void overrideProperties(DynamicPropertyRegistry dynamicPropertyRegistry) {

dynamicPropertyRegistry.add("spring.datasource.url", postgresqlContainer::getJdbcUrl);

dynamicPropertyRegistry.add("spring.datasource.username", postgresqlContainer::getUsername);

dynamicPropertyRegistry.add("spring.datasource.password", postgresqlContainer::getPassword);

dynamicPropertyRegistry.add("spring.datasource.driver-class-name", postgresqlContainer::getDriverClassName);The entire solution — along with its own DB image — is available on this GitHub branch.

Conclusion

I believe that Testcontainers and Liquibase should never be used separately. As my example shows, having a DB versioning tool at a low cost allows you to create tests that will fully reflect how the code actually works. Thanks to Docker, you can also be sure that the tests are repeatable and that no external factors (e.g., the work of other team members) will affect them. These tools complement each other perfectly. If you have any further questions, you can email me directly at.

Published at DZone with permission of Arkadiusz Rosloniec. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments