Test Automation Tools for Desktop Applications: Boxed vs. Open Source

Buckle up, this article takes a deep dive into the benefits, uses, and examples of using test automation for desktop apps.

Software development practices change over time, and so does the test automation landscape. Today most applications are either web- or mobile-based. The test automation of such systems is well-described. There are some best practices, information-loaded conferences, good tools, and so on. However, we faced a lack of information regarding desktop testing automation, particularly for Windows 7+ apps.

Our task was to test such an application, given that the manual QA process would take more than a month. Here we share the pros and cons of discovered solutions, along with our experience and some best practices of the test automation process.

If you’ve ever dealt with QA automation, we bet it involved automated tests for a web page, a blog, or a web interface. Your team probably uses Appium for functional testing of a mobile app, or instrumented tests for Android (Espresso).

Still, in 2019, we keep developing desktop apps and maintaining legacy projects. Institutions such as banks, financial departments, laboratories, and HoReCa keep utilizing Windows Desktop. Businesses of all forms use them to automate, organize, and control their business processes.

The web will never meet every user’s needs. Desktop applications remain the natural choice for working with local files, processing large amounts of data, plugging in specialized devices, and accessing local services. There are several reasons to keep using desktop apps:

- User identification. Fingerprint scanners, passport scanners, etc.

- Corporate security reasons. Internet access can be restricted or limited at some manufacturing plants or banks.

- Other reasons. For example, a company’s existing equipment fleet that already consists of Windows 7 PCs.

These are real customer needs, and no web-based system can meet them.

Does Testing Take That Long? The "Do Pilot" Managing Pattern

Our customer uses a .NET desktop application for document control and business process automation, which has been developed and maintained for years.

This means tens of thousands of lines of code (LOC), which call for hundreds of tests and about 1.5 months of manual testing:

- Two weeks for primary manual regression testing.

- Two weeks for secondary testing.

- Time to verify bugfixes or correct errors and possible misconfigurations.

While errors are being corrected, the estimated time grows, and planning around and tracking those mistakes takes even more time. So regression testing needs about six weeks of full-time work by two engineers. When a new feature or module is needed ASAP, six weeks of testing is a huge cost for a client.

Every customer prefers fast release iterations: from an inquiry through speedy testing to deployment. The key is keeping the quality level, or even increasing it, by reliable quantitative criteria. This is how we figured that our testing had to be automated.

Let’s admit that not every task in the QA process can be automated. Some things still have to be done manually. When our team needs to find out whether automation is feasible, we use the Do Pilot approach for automated testing.

The Do Pilot strategy is about automating one part of your task. Automate that part and evaluate the result: time spent, scalability of the tests, and maintenance cost. Just do the pilot with your automated testing solution, and then decide if it is worth expanding to the whole project.

It is always better to spend 1-2 weeks exploring the strengths of the product and making sure that it is worth investing time and effort in the automation.

Do Pilot Requirements and Possibilities

So we decided to develop a pilot version: to build a suite of tests for our client’s .NET Windows Desktop application. Our team took a stable version of the app and chose six basic testing scenarios tagged Smoke or Regression. Passing them would confirm that the application functions as described in the requirements specification.

The resulting solution must meet three requirements:

- Continuous integration. We need to read reports from the CI server after the nightly test cycle (or to get a notification that tests failed).

- Transparency. It should be clear to the QA team what is being checked at every step, while the project manager needs a clear report (for example: "add a third field to an X form").

- Cost. We are looking for a tool that won’t increase the project’s costs.

The first thing to decide is which automation tool would meet each of these expectations.

Solutions and Frameworks for Automated Testing

As mentioned before, automated QA is dominated by web and mobile technologies. Let’s change the game and find something suitable for Windows Desktop.

Commercial Tools

TestComplete

TestComplete by SmartBear is one of the leaders among test automation studios. It has often been named a well-designed and highly functional commercial automated testing tool, it offers a trial period, and its users often describe it positively.

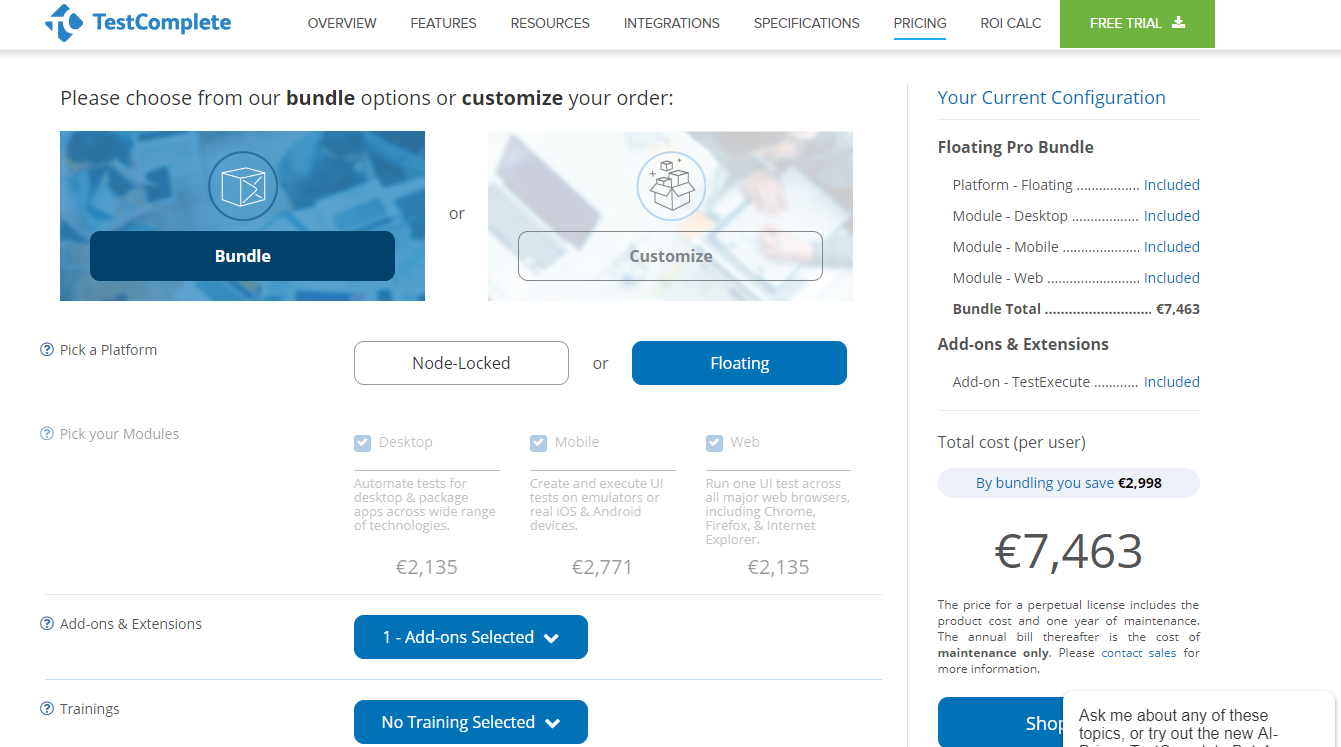

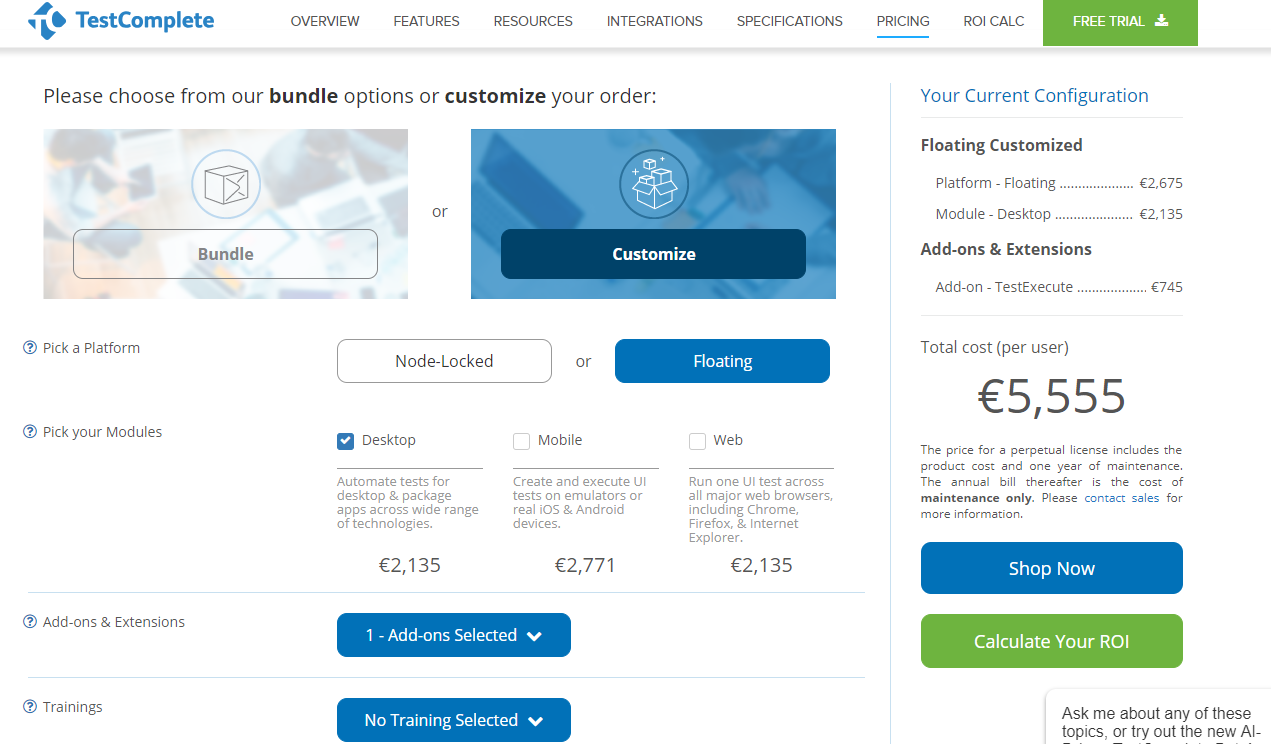

Let’s choose a "boxed" solution for a single user:

Let’s customize our solution by excluding web and mobile.

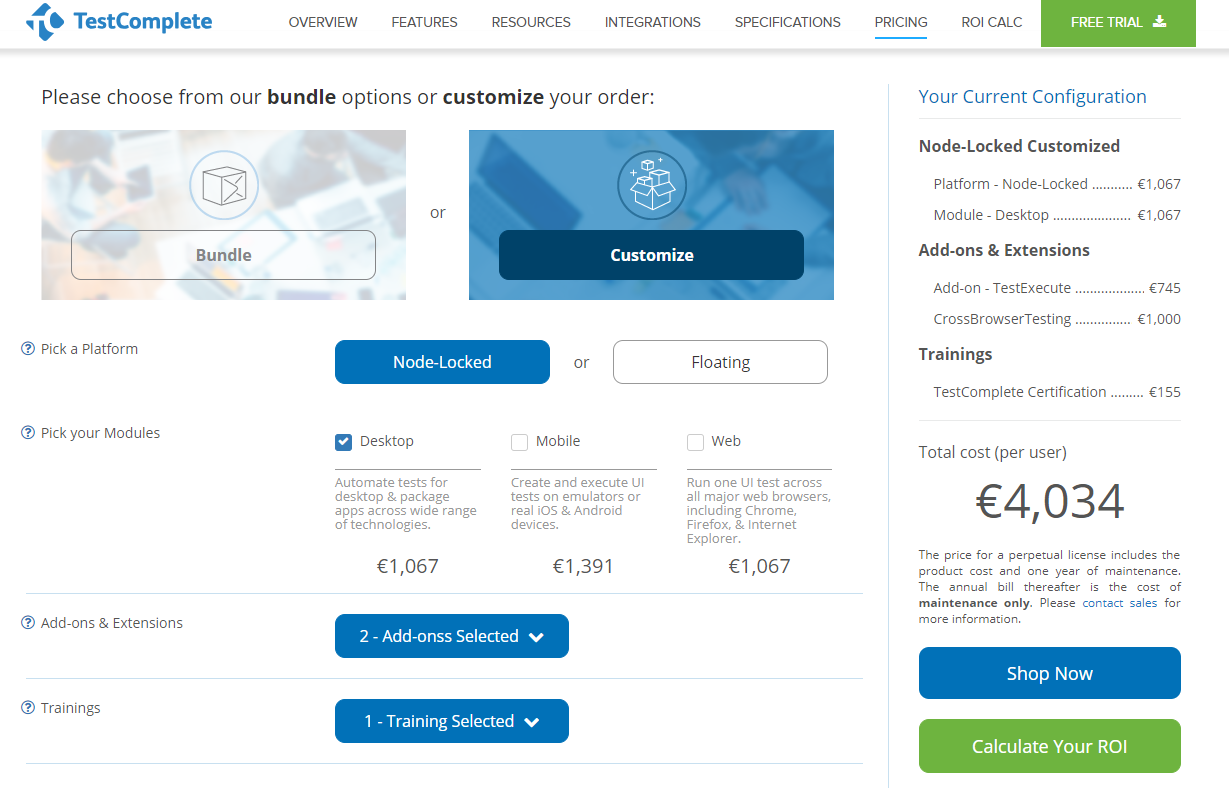

Let’s reduce costs as much as possible. Access to real and virtual machines, desktop, video replays of your tests, unlimited screenshots, unlimited live testing, and enterprise-grade security costs another $1700 for a single license.

You can get certificates from $150-700 to ensure that your team knows TestComplete inside and out. Let’s choose one basic TestComplete Certification.

The bottom-line price resulted in:

For TestComplete we’d pay $4000 for the studio and one year of support for a single user (our team consists of two specialists). However, our aim was to offer our customer a solution that would not force them to pay either us or a vendor for the license, while keeping the quality level.

Telerik

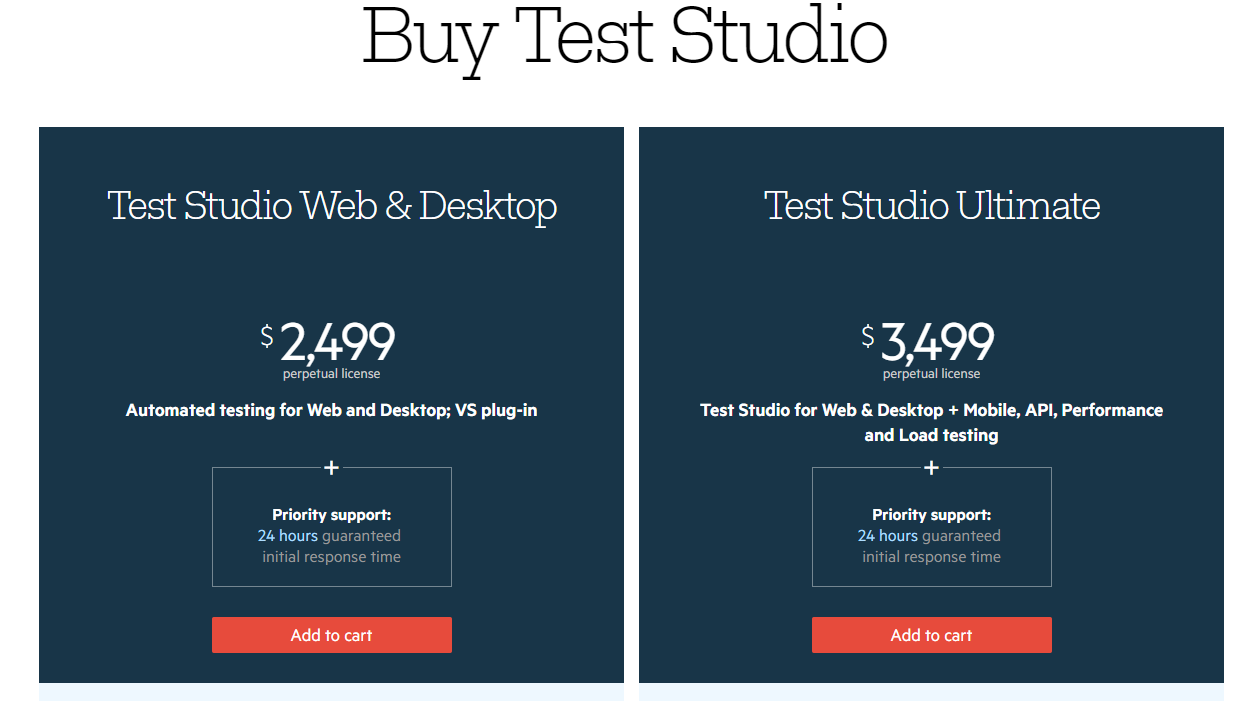

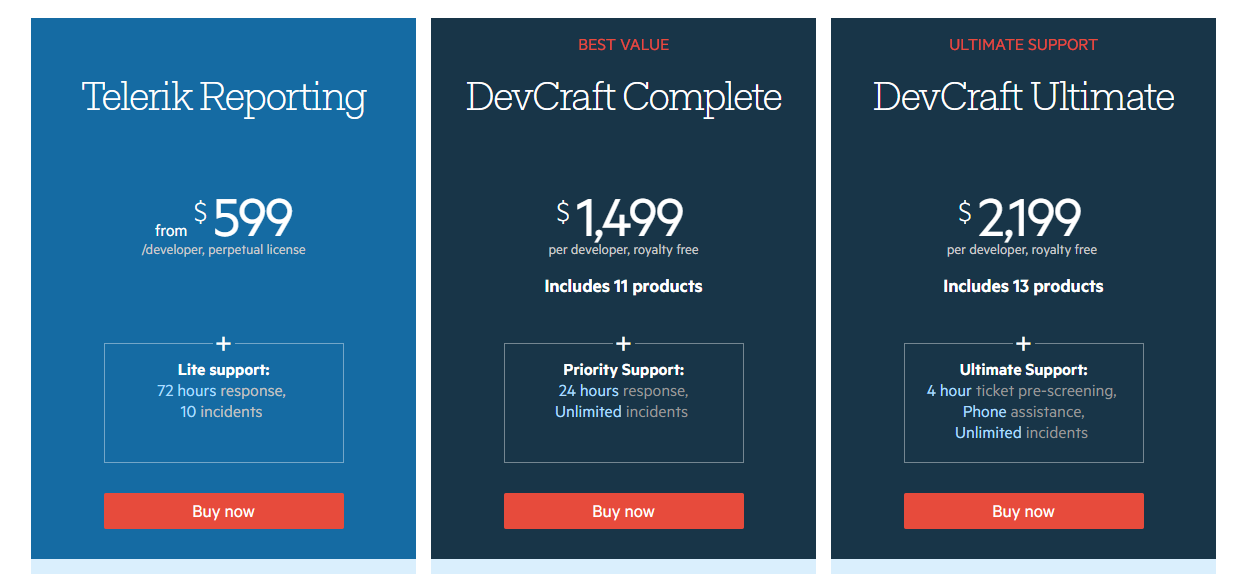

The next commercial tool is Telerik Test Studio. Here’s the pricing:

But every additional feature costs extra:

This amount of $4000 covers no more than a single user and a year of support. After multiplying it by two (the project team consists of two specialists), we get $8000 per year. Other commercial frameworks cost about the same.
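The licensing arithmetic above can be sketched in a few lines of Python. The price per seat ($4000) and the team size (two specialists) come from this article; the `years` parameter and the idea that the license renews every year at the same price are our own assumptions for illustration and are not stated by either vendor.

```python
def license_cost(price_per_seat: int, seats: int, years: int = 1) -> int:
    """Total license cost, assuming a flat yearly renewal price (hypothetical)."""
    return price_per_seat * seats * years


# One year for a team of two specialists, as in the article.
print(license_cost(4000, seats=2))
# Three years under the flat-price assumption.
print(license_cost(4000, seats=2, years=3))
```

Even under this simplified model, the recurring cost grows linearly with both team size and project lifetime, which is what made the commercial options hard to justify for a long-lived legacy project.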

The WaveAccess team decided to keep searching for a solution that would give our customer a good level of quality at a reasonable price. But the main reason was the need for transparency: our team wants to know precisely what we offer our customer and how we are going to use it. That’s why we turned to open source solutions.

Comparison of Free Frameworks

Visual Studio Coded UI

The first solution tested for our challenge was Visual Studio Coded UI.

Pros:

- Allows for using the same technologies that the test object is based on (in our case, .NET and C#)

- Has a test recorder.

- Supports WinForms.

- Supports DevExpress UI controls.

Cons:

- Scarce documentation.

- Visual Studio Ultimate is available for a fee, and the free version has no access to Coded UI Test.

- The code generated by the test recorder is hard to maintain: recorded tests fail to run (this might not be the case for less complex interfaces, for which the tests may run properly).

- BDD test case writing (for example, using one of the most popular C# frameworks, SpecFlow) is not compatible with Coded UI in the “out of the box” version.

- Searching for and using UI elements is limited by UIA API v.1 (MSAA).

CUITe Framework

The CUITe framework can be used in combination with Coded UI for different VS versions. This tool can be considered, but it does not fix the essential drawbacks of Coded UI.

TestStack.White

TestStack.White is the second free solution that we considered.

Pros:

- The tool is fully functional for writing tests in C#.

- Compatible with BDD-style test writing.

- MSAA, UIA2, and UIA3 are available for selection.

Cons:

- The legacy codebase is clearly in need of refactoring.

- The NuGet package was last updated in 2014, although the repository has changed since then.

WhiteSharp

Further research suggested that we should try WhiteSharp, so we did.

Pros:

- The tool is fully functional for writing tests in C#.

- BDD-compatible.

Cons:

- No way to identify all UI elements correctly. Not compatible with DevExpress elements.

- The NuGet package was last updated in 2016.

- Scarce documentation and a small community to ask for help.

- Even elementary test cases (“open the app”, “check the status bar content”) run very slowly.

Winium.Desktop

Winium.Desktop has a significant disadvantage of not supporting .NET 4.0.

FlaUI

FlaUI is another fully functional tool for writing tests in C#. Its author emphasizes that the goal was to remake TestStack.White from scratch, removing the unnecessary and outdated features.

Pros:

1. BDD-compatible.

2. Allows for using any version of UIA: MSAA, UIA2, or UIA3.

3. Supports Windows 7, 8, 10 (likely XP too, but we have not checked).

4. Good community: helps solve problems not only in issues, but also in chats.

WinAppDriver + Appium

WinAppDriver + Appium is a fully functional platform for writing tests in C#, Java, and Python.

Pros:

- Developed and supported by Microsoft.

- BDD-compatible.

- Low entry barrier, assuming some experience in web and mobile testing.

Cons:

- Supports only Windows 10 and later.

Having checked all benefits and risks, we opted for WinAppDriver and FlaUI.

Examples of Using the Chosen Solutions

Before writing tests, pick a UI element inspector. We prefer Inspect.exe and UISpy.exe.

WinAppDriver

To use WinAppDriver, you need Windows 10, as it does not support earlier versions. First, download, install, and run WinAppDriver.exe. Then install Appium and run the Appium server (a “daemon”). If you don’t use C#, here are samples in Python with Robot Framework.

Creating an app session and connecting to it:

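    # Appium Python client (older API that still accepts desired_capabilities);
    # inferred from the find_element_by_accessibility_id call used further on
    from appium import webdriver


    class App:
        def __init__(self):
            # Application User Model ID of the Windows Calculator
            desired_caps = {"app": "Microsoft.WindowsCalculator_8wekyb3d8bbwe!App"}
            self.__driver = webdriver.Remote(
                command_executor='http://127.0.0.1:4723',
                desired_capabilities=desired_caps)

        def setup(self):
            # Hand the started session over to the keyword library
            return self.__driver

Opening the app: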

    import unittest

    from robot.api.deco import keyword


    class CalculatorKeywordsLibrary(unittest.TestCase):

        @keyword("Open App")
        def open_app(self):
            self.driver = App().setup()
            return self.driver

Finding buttons and clicking them:

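        # Method of CalculatorKeywordsLibrary
        @keyword("Click Calculator Button")
        def click_calculator_button(self, name):
            # "name" is the Name property of the button, for example "Four"
            self.driver.find_element_by_name(name).click()

Getting the result: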

        # Method of CalculatorKeywordsLibrary
        @keyword("Get Result")
        def getresult(self):
            display_text = self.driver.find_element_by_accessibility_id("CalculatorResults").text
            # Drop the "Display is" prefix; str.strip("Display is ") would treat
            # the argument as a set of characters rather than a prefix
            display_text = display_text.replace("Display is", "")
            return display_text.strip()

Quitting the app:

        # Method of CalculatorKeywordsLibrary
        @keyword("Quit App")
        def quit_app(self):
            self.driver.quit()

As a result, the Robot test that calculates a square root looks like this:

    Test Square
        [Tags]    Calculator test
        [Documentation]    *Purpose: test Square root*
        ...
        ...    *Actions*:
        ...    - Check that the calculator is started
        ...    - Press button Four
        ...    - Press button Square root
        ...
        ...    *Result*:
        ...    - Result is 2
        ...
        Assert Calculator Is Open
        Click Calculator Button    Four
        Click Calculator Button    Square root
        ${result} =    Get Result
        AssertEquals    ${result}    2
        [Teardown]    Quit App

You can run this test like a regular Robot test from the command line: robot test.robot.
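One detail of the Get Result keyword deserves care: `str.strip` treats its argument as a set of characters to remove from both ends, not as a prefix. Below is a minimal sketch of prefix-based parsing, assuming the display text always has the form "Display is <value>" as in the calculator example. The helper name `parse_display` is hypothetical and not part of any library.

```python
def parse_display(text: str, prefix: str = "Display is") -> str:
    """Return the value shown by the calculator without the leading prefix."""
    text = text.strip()
    if text.startswith(prefix):
        text = text[len(prefix):]  # cut the prefix as a whole, not char by char
    return text.strip()


print(parse_display("Display is 2"))
print(parse_display("  Display is 1,024 "))
```

Cutting the prefix explicitly keeps the keyword correct even if a future result happens to start or end with one of the letters in "Display is".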

Detailed documentation is available for BDD with Robot Framework. Windows Store apps can be tested as well as regular apps.

Supported Locator types:

- FindElementByClassName

- FindElementByAccessibilityId

- FindElementById

- FindElementByName

- FindElementByTagName

- FindElementByXPath

This suggests that it is a fully Selenium-like test. Any testing patterns can be applied: Page Object and others, and a BDD framework can be added (for Python, for example, it is Robot Framework or Behave).
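To illustrate the Page Object idea mentioned above, here is a minimal Python sketch. It assumes a driver that exposes `find_element(by, value)` in the style of recent Selenium and Appium Python clients. The `CalculatorPage`, `FakeDriver`, and `FakeElement` classes are hypothetical names introduced only for this example, and the fake driver stands in for a real WinAppDriver session so the snippet runs without Windows.

```python
class CalculatorPage:
    """Page Object: hides locators behind intention-revealing methods."""

    RESULT_ID = "CalculatorResults"  # accessibility id used in the keywords above

    def __init__(self, driver):
        self._driver = driver

    def press(self, *button_names):
        for name in button_names:
            self._driver.find_element("name", name).click()
        return self  # allow chaining of actions

    def result(self):
        element = self._driver.find_element("accessibility id", self.RESULT_ID)
        return element.text.replace("Display is", "").strip()


class FakeElement:
    """Hypothetical stand-in for a UI element."""

    def __init__(self, on_click=None, text=""):
        self._on_click = on_click
        self.text = text

    def click(self):
        if self._on_click:
            self._on_click()


class FakeDriver:
    """Hypothetical stand-in for a real session; records clicked buttons."""

    def __init__(self):
        self.clicked = []

    def find_element(self, by, value):
        if by == "accessibility id":
            return FakeElement(text="Display is 2")
        return FakeElement(on_click=lambda: self.clicked.append(value))


driver = FakeDriver()
page = CalculatorPage(driver).press("Four", "Square root")
print(driver.clicked, page.result())
```

With this structure, tests talk to `CalculatorPage` only, so a renamed button or a changed accessibility id is fixed in one place instead of in every test.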

For further reading, here's detailed documentation with test examples in different languages.

FlaUI

FlaUI is basically a wrapper around the UI Automation libraries. The commercial tools described earlier are also wrappers around the Microsoft Automation API.

Our setup was a Windows 7 PC with Visual Studio. Running the app and attaching to the process:

    // Namespaces used in this example
    using FlaUI.Core;
    using FlaUI.UIA3;
    using NUnit.Framework;

    [Test]
    public void NotepadAttachByNameTest()
    {
        using (var app = Application.Launch("notepad.exe"))
        {
            using (var automation = new UIA3Automation())
            {
                var window = app.GetMainWindow(automation);
                Assert.That(window, Is.Not.Null);
                Assert.That(window.Title, Is.Not.Null);
            }
            app.Close();
        }
    }

Finding the text area with an Inspector and sending a string to it:

    // Additional namespaces used in this example
    using FlaUI.Core.Input;
    using FlaUI.Core.WindowsAPI;

    [Test]
    public void SendKeys()
    {
        using (var app = Application.Launch("notepad.exe"))
        {
            using (var automation = new UIA3Automation())
            {
                var mainWindow = app.GetMainWindow(automation);
                var textArea = mainWindow.FindFirstDescendant("_text");
                textArea.Click();
                // Type a string
                Keyboard.Type("Test string");
                // Type a single key
                Keyboard.Type(VirtualKeyShort.KEY_Z);
                Assert.That(mainWindow.FindFirstDescendant("_text").Patterns.Value.Pattern.Value, Is.EqualTo("Test stringz"));
            }
            app.Close();
        }
    }

Basic element search types:

- FindAllChildren

- FindFirstChild

- FindFirstDescendant

- FindAllDescendants

Also, you can search by:

- AutomationId

- Name

- ClassName

- HelpText

You can also search by any pattern that the element supports, for example by Value or by LegacyIAccessible. Searching by condition is available, too:

    public AutomationElement Element(string legacyIAccessibleValue) =>
        LoginWindow.FindFirstDescendant(new PropertyCondition(
            Automation.PropertyLibrary.LegacyIAccessible.Value, legacyIAccessibleValue));

Our team appreciated the static Retry class, which helps with retrying:

Retry.While(foundMethod, Timeout, RetryInterval);

This class retries a method in a loop at a given interval until a given timeout (custom or default) expires, so there is no more Thread.Sleep in the tests or page logic. Example:

    private static LoginWindow LoginWindow
    {
        get
        {
            Retry.WhileException(() => MainWindow.LoginWindow.IsEnabled);
            return new LoginWindow(MainWindow.LoginWindow);
        }
    }

LoginWindow is created only once the login window is actually shown (using the default interval and timeout). This approach takes UI test stability to a new level.
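The idea behind such a retry helper can be sketched in Python. This is an illustration of the polling technique only, not FlaUI's actual implementation. The function name `retry_while_exception`, its parameters, and the `flaky_lookup` demo are hypothetical.

```python
import time


def retry_while_exception(action, timeout=1.0, interval=0.1):
    """Call `action` until it stops raising or `timeout` seconds have elapsed."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            return action()
        except Exception:
            if time.monotonic() >= deadline:
                raise  # give up and surface the last error
            time.sleep(interval)


# Demo: an action that fails twice before it succeeds,
# like a window that appears only after a short delay.
attempts = {"count": 0}


def flaky_lookup():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise LookupError("window not shown yet")
    return "login window"


print(retry_while_exception(flaky_lookup, timeout=2.0, interval=0.01))
```

Compared with a fixed sleep, the loop returns as soon as the condition holds and fails with the original error once the timeout is exhausted, which keeps tests both faster and easier to diagnose.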

For further reading, you can refer to this detailed guide on GitHub.

Test Development Time: UI Test Stability and Support

The plan was to complete six large-scale regression scenarios in three months of development from scratch, including project studies, research into available frameworks (described above), development of the automated testing (AT) skeleton, CI setup, management, communication, and documentation. The project was delivered on time.

If we continue test coverage improvement, these setup processes will take less time, as they were basically implemented at the pilot stage.

Tests in Continuous Integration

Both frameworks support test runs in continuous integration systems. Our team uses TeamCity (TC) and TFS.

When using frameworks such as FlaUI and SpecFlow with tests written in C#, setting up a build is trivial, provided that the build agent meets a few conditions:

- Run the build agent as a command-line utility instead of a service (both systems allow that), and set it up to start automatically.

- Set up automatic logon (use Sysinternals Autologon for Windows, as it encrypts your password in the registry).

- Turn off the screensaver.

- Turn off Windows Error Reporting. See Help on Gist: DisableWER.reg

- Keep an active desktop session on the machine where the tests are built and run. A standard Windows tool such as RDC is enough for that, but VNC is often recommended, because when you connect using Remote Desktop and then disconnect, the desktop is locked and UI Automation fails.

The SpecFlow BDD framework can be integrated on the fly with TC and TFS, and the testing report is generated right in the test run.

The ROI of Automated Testing

It is hard to calculate ROI before implementing the automated testing solution, because there are a lot of variables and unknown factors.

But after the pilot has been implemented, some figures can be drawn. Let’s stick to the simplest ROI definition:

ROI = (Gain of Investment - Cost of Investment) / Cost of Investment

First year automation cost (without support):

Three months to get complete regression test coverage via automated tests by a single developer-in-test: 500 hours * Dev hourly rate

We assume that the solution cost (Cost of Investment) is paid in full by the client during the first year, not divided, and no loan capital is used.

In the first part we have calculated that manual regression testing takes one month: two weeks for a primary run, plus two weeks for a secondary run (without checking new tests and associated expenses).

After development and the introduction of automated regression testing (3 months), manual regression testing is not performed for the remaining 9 months. Let’s assume that the released resources (i.e. the testing team) are employed in other projects — so we saved 1,500 hours * QA hourly rate * 2 engineers.

ROI = (1500 * QA * 2 - 500 * Dev) / (500 * Dev) = (6 * QA - Dev) / Dev

Assuming the mid-market average of QA hourly rate = n, Dev hourly rate = 1.5n:

ROI = (6n - 1.5n) / 1.5n = 3, that is, 300%.
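The same calculation can be checked with a short Python sketch. The hour figures (1,500 saved hours per engineer, two engineers, 500 development hours) and the 1.5 rate ratio come from the text above. The function name and parameter names are our own, and the value chosen for n is arbitrary, since it cancels out.

```python
def first_year_roi(qa_rate, dev_rate, qa_hours_saved=1500, engineers=2, dev_hours=500):
    """ROI = (gain - cost) / cost for the first year of test automation."""
    gain = qa_hours_saved * engineers * qa_rate  # manual QA hours no longer spent
    cost = dev_hours * dev_rate                  # automation development effort
    return (gain - cost) / cost


n = 20.0  # arbitrary QA hourly rate; only the QA-to-Dev ratio matters
print(first_year_roi(qa_rate=n, dev_rate=1.5 * n))
```

Because both gain and cost scale with n, the result depends only on the ratio between the two hourly rates, which is why the ROI comes out as a plain number rather than a multiple of n.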

Obviously, the first year provides less payback, and ROI in the following years is going to be much better due to the absence of Cost of Investment.

Of course, this calculation is very rough and it does not include support, depreciation, reporting enhancement, and the quality of automatic tests themselves. We suggest you take these factors into consideration in the next ROI calculation after the first year of AT introduction.

The Result

Introduction of automated testing saves the QA labor costs and frees up a team of two QA engineers for other tasks, thus optimizing the project resources.

Of course, manual testing is still an essential part of the development job. But in a large-scale project with tens of thousands of lines of code, where a feature release takes several weeks or more, test automation is crucial. To figure out whether it is possible and efficient to automate all testing jobs, our team uses the Do Pilot approach, which means automating a small scope of work using a given framework.

Nevertheless, if we use all necessary functionality of paid packaged solutions and expand the team to at least two people, it comes at a price. To spare the customers yearly support costs after having paid for the solution first, we have considered several open source AT frameworks. Two of those met our needs and are being used currently.

Therefore, although test automation takes research to find a suitable framework and make automatic tests themselves, it still yields much more advantage than manual test runs.

Thank you for your time. Contact us, we will gladly answer your questions about test automation of any application — even a complex Windows Desktop one.

Published at DZone with permission of Alex Azarov. See the original article here.
