TensorFlow for Real-World Applications

TensorFlow and deep learning are things that corporations must now embrace. The coming flood of audio, video, and image data and their applications are key to success.

Join the DZone community and get the full member experience.

Join For FreeI have spoken to thought leaders at a number of large corporations that span across multiple industries such as medical, utilities, communications, transportation, retail, and entertainment. They were all thinking about what they can and should do with deep learning and artificial intelligence. They are all driven by what they’ve seen in well-publicized projects from well-regarded software leaders like Facebook, Alphabet, Amazon, IBM, Apple, and Microsoft. They are starting to build out GPU-based environments to run at scale. I have been recommending that they all add these GPU-rich servers to their existing Hadoop clusters so that they can take advantage of the existing production-level infrastructure in place. Though TensorFlow is certainly not the only option, it’s the first that is mentioned by everyone I speak to. The question they always ask is, “How do I use GPUs and TensorFlow against my existing Hadoop data lake and leverage the data and processing power already in my data centers and cloud environments?” They want to know how to train, how to classify at scale, and how to set up deep learning pipelines while utilizing their existing data lakes and big data infrastructure.

So why TensorFlow? TensorFlow is a well-known open-source library for deep learning developed by Google. It is now in version 1.3 and runs on a large number of platforms used by business, from mobile, to desktop, to embedded devices, to cars, to specialized workstations, to distributed clusters of corporate servers in the cloud and on-premise. This ubiquity, openness, and large community have pushed TensorFlow into the enterprise for solving real-world applications such as analyzing images, generating data, natural language processing, intelligent chatbots, robotics, and more. For corporations of all types and sizes, the use cases that fit well with TensorFlow include:

- Speech recognition

- Image recognition

- Object tagging videos

- Self-driving cars

- Sentiment analysis

- Detection of flaws

- Text summarization

- Mobile image and video processing

- Air, land, and sea drones

For corporate developers, TensorFlow allows for development in familiar languages like Java, Python, C, and Go. TensorFlow is also running on Android phones, allowing for deep learning models to be utilized in mobile contexts, marrying it with the myriad of sensors of modern smartphones.

Corporations that have already adopted big data have the use cases, available languages, data, team members, and projects to learn and start from.

The first step is to identify one of the use cases that fit your company. For a company that has a large number of physical assets that require maintenance, a good use case is to detect potential issues and flaws before they become a problem. This is an easy-to-understand use case, potentially saving large sums of money and improving efficiency and safety.

The second step is to develop a plan for a basic pilot project. You will need to acquire a few pieces of hardware and a team with a data engineer and someone familiar with Linux and basic device experience.

This pilot team can easily start with an affordable Raspberry Pi camera and a Raspberry Pi board, assuming the camera meets their resolution requirements. They will need to acquire the hardware, build a Raspberry Pi OS image, and install a number of open-source libraries. This process is well-documented here.

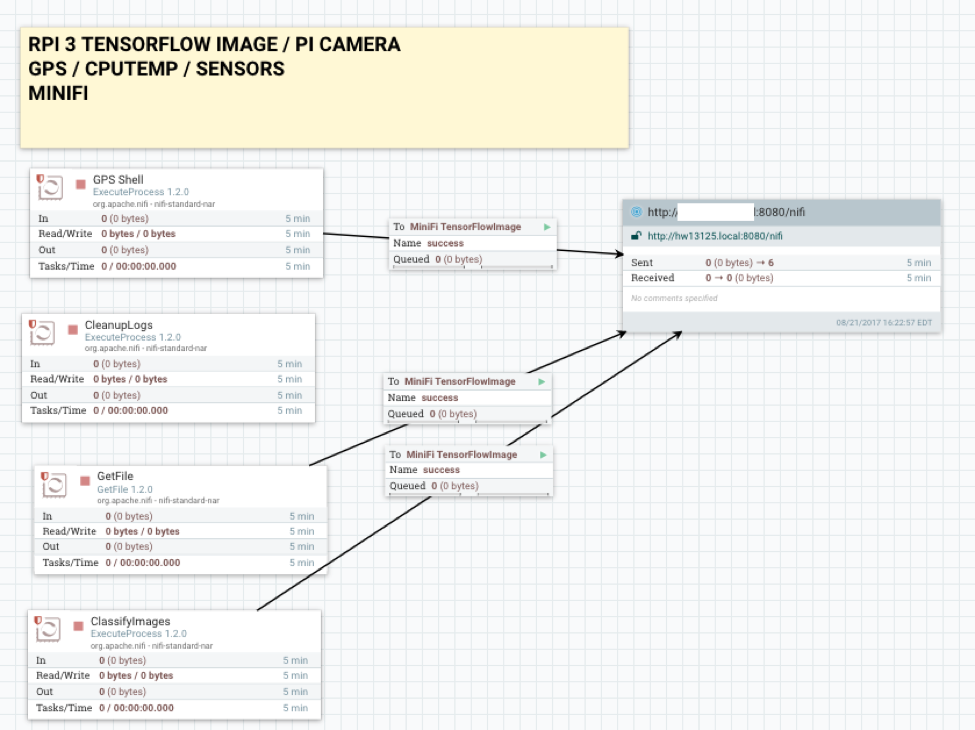

The first test of this project would be to send images from the camera at regular intervals, analyzed with image recognition, and send the resulting data and images via Apache MiNiFi to cloud servers for additional predictive analytics and learning. The combination of MiNiFi and TensorFlow is flexible enough that the classification of images via an existing model can be done directly on the device. This example is documented here at Hortonworks and utilizes OpenCV, TensorFlow, Python, MiNiFi, and NiFi.

After obtaining the images and Tensorflow results, you can now move onto the next step, which is to train your models to understand your dataset. The team will need to capture good state images in different conditions for each piece of equipment utilized in the pilot. I recommend capturing these images at different times of year and at different angles. I also recommend using Apache NiFi to ingest these training images, shrink them to a standard size, and convert them to black and white, unless color has special meaning for your devices. This can be accomplished utilizing the built-in NiFi processors: ListFiles, ResizeImage, and a Python script utilizing OpenCVor scikit-image.

The team will also need to obtain images of known damaged, faulty, flawed, or anomalous equipment. Once you have these, you can build and train your custom models. You should test these on a large YARN cluster equipped with GPUs. For TensorFlow to utilize GPUs, you will need to install the tensorflow-gpu version as well as libraries needed by your GPU. For NVidia, this means you will need to install and configure CUDA. You may need to invest in a number of decent GPUs for initial training. Training can be run on in-house infrastructure or by utilizing one of the available clouds that offer GPUs. This is the step that is most intensive, and depending on the size of the images, the number of data elements, and the precision needed, this step could take hours, days, or weeks; so schedule time for this. This may also need to run a few times due to mistakes or to tweak parameters or data.

Once you have these updated models, they can be deployed to your remote devices to run against. The remote devices do not need the processing power of the servers that are doing the training. There are certainly cases where new multicore GPU devices available could be utilized to handle faster processing and more cameras. This would require analyzing the environment, cost of equipment, requirements for timing, and other factors related to your specific use case. If this is for a vehicle, drone, or a robot, investing in better equipment will be worth it. Don’t put starter hardware in an expensive vehicle and assume it will work great. You may also need to invest in industrial versions of these devices to work in environments that have higher temperature ranges, longer running times, vibrations, or other more difficult conditions.

One of the reasons I recommend this use case is that the majority of the work is already complete. There are well-documented examples of this available at DZone for you to start with. The tools necessary to ingest, process, transform, train, and store are the same you will start with.

TensorFlow and Apache NiFi are clustered and can scale to a huge number of real-time concurrent streams. This gives you a production-ready supported environment to run these millions of streaming deep learning operations. Also, by running TensorFlow directly at the edge points, you can scale easily as you add new devices and points to your network. You can also easily shift single devices, groups of devices, or all your devices to processing remotely without changing your system, flows, or patterns. A mixed environment where TensorFlow lives at the edges, at various collection hubs, and in data centers make sense. For certain use cases, such as training, you may want to invest in temporary cloud resources that are GPU-heavy to decrease training times. Google, Amazon, and Microsoft offer good GPU resources on-demand for these transient use cases. Google, being the initial creator of TensorFlow, has some really good experience in running TensorFlow and some interesting hardware to run it on.

I highly recommend utilizing Apache NiFi, Apache MiNiFi, TensorFlow, OpenCV, Python, and Spark as part of your artificial intelligence knowledge stream. You will be utilizing powerful, well-regarded, open-source tools with healthy communities that will continuously improve. These projects gain features, performance, and examples at a staggering pace. It’s time for your organization to join the community by first utilizing these tools and then contributing back.

Opinions expressed by DZone contributors are their own.

Comments