taichi.js: Painless WebGPU Programming

This article explains how to write WebGPU compute and render pipelines in simple JavaScript syntax using taichi.js.

As a computer graphics and programming languages geek, I am delighted to have found myself working on several GPU compilers in the past two years. This began in 2021 when I started to contribute to taichi, a Python library that compiles Python functions into GPU kernels in CUDA, Metal, or Vulkan. Later on, I joined Meta and started working on SparkSL, which is the shader language that powers cross-platform GPU programming for AR effects on Instagram and Facebook. Aside from personal pleasure, I have always believed, or at least hoped, that these frameworks are actually quite useful; they make GPU programming more accessible to non-experts, empowering people to create fascinating graphics content without having to master complex GPU concepts.

In my latest installment of compilers, I turned my eyes to WebGPU -- the next-generation graphics API for the web. WebGPU promises to bring high-performance graphics via low CPU overhead and explicit GPU control, aligning with the trend started by Vulkan and D3D12 some seven years ago. Just like Vulkan, the performance benefits of WebGPU come at the cost of a steep learning curve. Although I'm confident that this won't stop talented programmers around the world from building amazing content with WebGPU, I wanted to provide people with a way to play with WebGPU without having to confront its complexity. This is how taichi.js came to be.

Under the taichi.js programming model, programmers don't have to reason about WebGPU concepts such as devices, command queues, bind groups, etc. Instead, they write plain JavaScript functions, and the compiler translates those functions into WebGPU compute or render pipelines. This means that anyone can write WebGPU code via taichi.js, as long as they are familiar with basic JavaScript syntax.

The remainder of this article will demonstrate the programming model of taichi.js via a "Game of Life" program. As you will see, with less than 100 lines of code, we will create a fully parallel WebGPU program containing 3 GPU compute pipelines plus a render pipeline. The full source code of the demo can be found here, and if you want to play with the code without having to set up a local environment, go to this page.

The Game

The Game of Life is a classic example of a cellular automaton, a system of cells that evolve over time according to simple rules. It was invented by the mathematician John Conway in 1970 and has since become a favorite of computer scientists and mathematicians alike. The game is played on a two-dimensional grid, where each cell can be either alive or dead. The rules for the game are simple:

- If a living cell has fewer than two or more than three living neighbors, it dies.

- If a dead cell has exactly three living neighbors, it becomes alive.

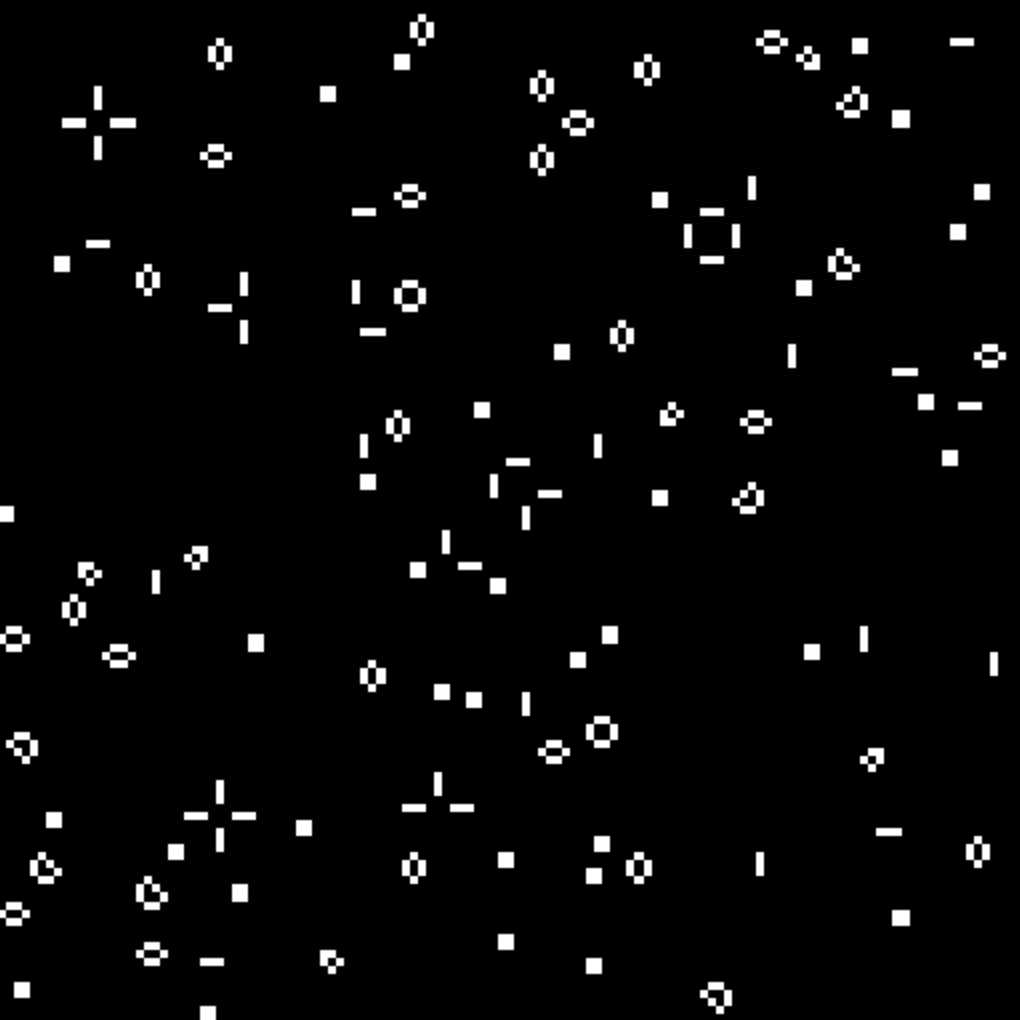
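The two rules can be written down as a tiny plain-JavaScript function. This is only an illustrative sketch that runs on the CPU, not taichi.js code, and the name `nextState` is made up for this example.

```javascript
// Plain-JavaScript sketch of the two rules (not taichi.js code).
// `alive` is a boolean; `liveNeighbors` is an integer between 0 and 8.
function nextState(alive, liveNeighbors) {
  if (alive) {
    // A living cell survives only with two or three living neighbors.
    return liveNeighbors === 2 || liveNeighbors === 3;
  }
  // A dead cell comes alive only with exactly three living neighbors.
  return liveNeighbors === 3;
}
```

Every other combination leaves the cell in its current state, which is why a live cell with two or three neighbors simply stays alive.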

Despite its simplicity, the Game of Life can exhibit surprising behavior. Starting from any random initial state, the game often converges to a state where a few patterns are dominant as if these are "species" which survived through evolution.

Simulation

Let's dive into the Game of Life implementation using taichi.js. To begin with, we import the taichi.js library under the shorthand ti and define an async main() function, which will contain all of our logic. Within main(), we begin by calling ti.init(), which initializes the library and its WebGPU contexts.

    import * as ti from "path/to/taichi.js"

    let main = async () => {
        await ti.init();
        ...
    };

    main()

Following ti.init(), let's define the data structures needed by the "Game of Life" simulation:

    let N = 128;
    let liveness = ti.field(ti.i32, [N, N])
    let numNeighbors = ti.field(ti.i32, [N, N])
    ti.addToKernelScope({ N, liveness, numNeighbors });

Here, we defined two variables, liveness and numNeighbors, both of which are ti.fields. In taichi.js, a "field" is essentially an n-dimensional array whose dimensions are given by the second argument to ti.field(). The element type of the array is defined by the first argument. In this case, we have ti.i32, indicating 32-bit integers. However, field elements may also be other, more complex types, including vectors, matrices, and even structures.
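As a mental model only, a 2D ti.i32 field can be pictured on the CPU as a flat `Int32Array` with row-major indexing. The sketch below is plain JavaScript; `makeField2D` is a hypothetical helper invented for this illustration, and it says nothing about how taichi.js actually lays out field storage on the GPU.

```javascript
// CPU-side mental model of a 2D i32 field.
// makeField2D is a hypothetical helper, not part of taichi.js.
function makeField2D(rows, cols) {
  const data = new Int32Array(rows * cols); // zero-initialized 32-bit integers
  return {
    rows,
    cols,
    get: (i, j) => data[i * cols + j],
    set: (i, j, value) => { data[i * cols + j] = value; },
  };
}

const N = 128;
const liveness = makeField2D(N, N);
liveness.set(3, 5, 1); // mark cell [3, 5] as alive
```

Because the backing store is an `Int32Array`, every element starts at 0 and any value written is stored as a 32-bit integer.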

The next line of code, ti.addToKernelScope({...}), ensures that the variables N, liveness, and numNeighbors are visible in taichi.js "kernels," which are GPU compute and/or render pipelines defined in the form of JavaScript functions. As an example, the following init kernel is used to populate our grid cells with initial liveness values, where each cell has a 20% chance of being alive initially:

    let init = ti.kernel(() => {
        for (let I of ti.ndrange(N, N)) {
            liveness[I] = 0
            let f = ti.random()
            if (f < 0.2) {
                liveness[I] = 1
            }
        }
    })

    init()

The init() kernel is created by calling ti.kernel() with a JavaScript lambda as the argument. Under the hood, taichi.js will look at the JavaScript string representation of this lambda and compile its logic into WebGPU code. Here, the lambda contains a for-loop, whose loop index I iterates through ti.ndrange(N, N). This means that I will take NxN different values, ranging from [0, 0] to [N-1, N-1].
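The "string representation" mentioned here is something plain JavaScript already exposes through `Function.prototype.toString()`. The sketch below shows only that retrieval step; how taichi.js parses the string and generates WebGPU code from it is not shown, and `kernelBody` is a name made up for this example.

```javascript
// A stand-in lambda. It is never called, so the undefined names
// ti, N, and liveness are never evaluated.
const kernelBody = () => {
  for (let I of ti.ndrange(N, N)) {
    liveness[I] = 0;
  }
};

// Function.prototype.toString() returns the source text of the function.
const source = kernelBody.toString();
console.log(source.includes("ti.ndrange(N, N)")); // true
```

This also hints at why variables have to be registered with ti.addToKernelScope(): a compiler that works from source text sees only the names, not the values they are bound to.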

Here comes the magical part -- in taichi.js, all the top-level for-loops in the kernel will be parallelized. More specifically, for each possible value of the loop index, taichi.js will allocate one WebGPU compute shader thread to execute it. In this case, we dedicate one GPU thread to each cell in our "Game of Life" simulation, initializing it to a random liveness state. The randomness comes from the ti.random() function, which is one of the many functions provided in the taichi.js library for kernel use. A full list of these built-in utilities is available here in the taichi.js documentation.
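To see which index values such a loop covers, here is a CPU stand-in written as a generator. It is a hypothetical re-creation for illustration, not the taichi.js implementation of ti.ndrange. On the GPU each index gets its own thread with no guaranteed ordering, so the sequential order below is purely an artifact of running on the CPU.

```javascript
// Hypothetical CPU stand-in for ti.ndrange(rows, cols): yields every [i, j] pair.
function* ndrange(rows, cols) {
  for (let i = 0; i < rows; i++) {
    for (let j = 0; j < cols; j++) {
      yield [i, j];
    }
  }
}

const indices = [...ndrange(3, 3)];
console.log(indices.length); // 9
// The first index is [0, 0] and the last is [2, 2].
```

Since each loop body may run at the same time as all the others, a parallelized loop body should only write to the element that belongs to its own index.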

Having created the initial state of the game, let's move on to define how the game evolves. These are the two taichi.js kernels defining this evolution:

    let countNeighbors = ti.kernel(() => {
        for (let I of ti.ndrange(N, N)) {
            let neighbors = 0
            for (let delta of ti.ndrange(3, 3)) {
                // Adding N keeps the operand non-negative before the remainder
                // is taken, so cells on the lower edges wrap to the opposite
                // side regardless of how % treats negative values.
                let J = (I + delta - 1 + N) % N
                if ((J.x != I.x || J.y != I.y) && liveness[J] == 1) {
                    neighbors = neighbors + 1;
                }
            }
            numNeighbors[I] = neighbors
        }
    });

    let updateLiveness = ti.kernel(() => {
        for (let I of ti.ndrange(N, N)) {
            let neighbors = numNeighbors[I]
            if (liveness[I] == 1) {
                if (neighbors < 2 || neighbors > 3) {
                    liveness[I] = 0;
                }
            }
            else {
                if (neighbors == 3) {
                    liveness[I] = 1;
                }
            }
        }
    })

Like the init() kernel we saw before, these two kernels also have top-level for-loops iterating over every grid cell, which are parallelized by the compiler. In countNeighbors(), for each cell, we look at the eight neighboring cells and count how many of them are "alive." The number of live neighbors is stored in the numNeighbors field. Notice that when iterating through neighbors, the loop for (let delta of ti.ndrange(3, 3)) {...} is not parallelized, because it is not a top-level loop. The loop index delta ranges from [0, 0] to [2, 2] and is used to offset the original cell index I. We avoid out-of-bounds accesses by adding N and then taking the result modulo N. (For the topologically inclined reader, this essentially means the game has toroidal boundary conditions.)
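The wrap-around indexing is easy to check on the CPU. The sketch below is plain JavaScript with made-up helper names (`wrap`, `countLiveNeighbors`). Note that in plain JavaScript the remainder of a negative number is negative, so the helper adds n before taking the final remainder.

```javascript
// Wrap an index onto [0, n) even when it is negative.
// In JavaScript, -1 % 128 evaluates to -1, so the extra "+ n" is needed.
function wrap(index, n) {
  return ((index % n) + n) % n;
}

// Count the live neighbors of cell (i, j) on an n-by-n toroidal grid.
// `grid` is an array of n rows, each an array of n values that are 0 or 1.
function countLiveNeighbors(grid, i, j) {
  const n = grid.length;
  let count = 0;
  for (let di = -1; di <= 1; di++) {
    for (let dj = -1; dj <= 1; dj++) {
      if (di === 0 && dj === 0) continue; // skip the cell itself
      count += grid[wrap(i + di, n)][wrap(j + dj, n)];
    }
  }
  return count;
}

// Live cells sit in the four corners, which are all adjacent on a torus.
const grid = [
  [1, 0, 0, 1],
  [0, 0, 0, 0],
  [0, 0, 0, 0],
  [1, 0, 0, 1],
];
console.log(countLiveNeighbors(grid, 0, 0)); // 3
```

The corner cell [0, 0] sees the other three corners as neighbors, which is exactly what toroidal boundary conditions mean.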

Having counted the number of neighbors for each cell, we move on to update their liveness states in the updateLiveness() kernel. This is a simple matter of reading the liveness state of each cell and its current number of live neighbors and writing back a new liveness value according to the rules of the game. As usual, this process applies to all cells in parallel.
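For comparison, here is a sequential CPU reference for one generation that mirrors the same count-then-update split. It is a plain-JavaScript sketch for checking small grids by hand, not a translation of what the taichi.js compiler emits, and the function name `step` is invented for this example.

```javascript
// CPU reference for one generation, mirroring the count-then-update split.
// `grid` is an array of n rows of 0/1 values; a new grid is returned.
function step(grid) {
  const n = grid.length;
  const wrap = (k) => ((k % n) + n) % n;

  // Pass 1: count live neighbors for every cell (the role of countNeighbors()).
  const counts = grid.map((row, i) =>
    row.map((_, j) => {
      let c = 0;
      for (let di = -1; di <= 1; di++) {
        for (let dj = -1; dj <= 1; dj++) {
          if (di !== 0 || dj !== 0) c += grid[wrap(i + di)][wrap(j + dj)];
        }
      }
      return c;
    })
  );

  // Pass 2: apply the rules using the stored counts (the role of updateLiveness()).
  return grid.map((row, i) =>
    row.map((alive, j) => {
      const c = counts[i][j];
      if (alive === 1) return c === 2 || c === 3 ? 1 : 0;
      return c === 3 ? 1 : 0;
    })
  );
}

// A "blinker": three cells in a row flip between horizontal and vertical.
const horizontal = [
  [0, 0, 0, 0, 0],
  [0, 0, 0, 0, 0],
  [0, 1, 1, 1, 0],
  [0, 0, 0, 0, 0],
  [0, 0, 0, 0, 0],
];
const vertical = step(horizontal);
```

Applying `step` to `vertical` gives back `horizontal`, which makes the blinker a convenient sanity check for any implementation of the rules.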

This essentially concludes the implementation of the game's simulation logic. Next, we will see how to define a WebGPU render pipeline to draw the game's evolution onto a webpage.

Rendering

Writing rendering code in taichi.js is slightly more involved than writing general-purpose compute kernels, and it does require some understanding of vertex shaders, fragment shaders, and rasterization pipelines in general. However, you will find that the simple programming model of taichi.js makes these concepts extremely easy to work with and reason about.

Before drawing anything, we need access to a piece of canvas that we are drawing onto. Assuming that a canvas named result_canvas exists in the HTML, the following lines of code create a ti.CanvasTexture object, which represents a piece of texture that can be rendered onto by a taichi.js render pipeline.

    let htmlCanvas = document.getElementById('result_canvas');
    htmlCanvas.width = 512;
    htmlCanvas.height = 512;
    let renderTarget = ti.canvasTexture(htmlCanvas);

On our canvas, we will render a square, and we will draw the game's 2D grid onto this square. On GPUs, geometries to be rendered are represented in the form of triangles. In this case, the square that we are trying to render will be represented as two triangles. These two triangles are defined in a ti.field, which stores the coordinates of each of the six vertices of the two triangles:

    let vertices = ti.field(ti.types.vector(ti.f32, 2), [6]);
    await vertices.fromArray([
        [-1, -1],
        [1, -1],
        [-1, 1],
        [1, -1],
        [1, 1],
        [-1, 1],
    ]);

As we did with the liveness and numNeighbors fields, we need to explicitly declare the renderTarget and vertices variables to be visible in GPU kernels in taichi.js:

    ti.addToKernelScope({ vertices, renderTarget });

Now, we have all the data we need to implement our render pipeline. Here's the implementation of the pipeline itself:

    let render = ti.kernel(() => {
        ti.clearColor(renderTarget, [0.0, 0.0, 0.0, 1.0]);
        for (let v of ti.inputVertices(vertices)) {
            ti.outputPosition([v.x, v.y, 0.0, 1.0]);
            ti.outputVertex(v);
        }
        for (let f of ti.inputFragments()) {
            let coord = (f + 1) / 2.0;
            let cellIndex = ti.i32(coord * (liveness.dimensions - 1));
            let live = ti.f32(liveness[cellIndex]);
            ti.outputColor(renderTarget, [live, live, live, 1.0]);
        }
    });

Inside the render() kernel, we begin by clearing the renderTarget with an all-black color, represented in RGBA as [0.0, 0.0, 0.0, 1.0].

Next, we define two top-level for-loops, which, as you already know, are loops that are parallelized in WebGPU. However, unlike the previous loops where we iterate over ti.ndrange objects, these loops iterate over ti.inputVertices(vertices) and ti.inputFragments(), respectively. This indicates that these loops will be compiled into WebGPU "vertex shaders" and "fragment shaders," which work together as a render pipeline.

The vertex shader has two responsibilities:

- For each triangle vertex, compute its final location on the screen (or, more accurately, its "Clip Space" coordinates). In a 3D rendering pipeline, this normally involves a series of matrix multiplications that transform the vertex's model coordinates into world space, then into camera space, and finally into "Clip Space." However, for our simple 2D square, the input coordinates of the vertices are already at their correct values in clip space, so we can skip all of that. All we have to do is append a fixed z value of 0.0 and a fixed w value of 1.0 (don't worry if you don't know what those are -- not important here!):

      ti.outputPosition([v.x, v.y, 0.0, 1.0]);

- For each vertex, generate data to be interpolated and then passed into the fragment shader. In a render pipeline, after the vertex shader is executed, a built-in process known as "Rasterization" is executed on all the triangles. This is a hardware-accelerated process which computes, for each triangle, which pixels are covered by this triangle. These pixels are also known as "fragments." For each triangle, the programmer is allowed to generate additional data at each of the three vertices, which will be interpolated during the rasterization stage. For each fragment in the triangle, its corresponding fragment shader thread will receive the interpolated values according to its location within the triangle.

  In our case, the fragment shader only needs to know the location of the fragment within the 2D square so it can fetch the corresponding liveness values of the game. For this purpose, it suffices to pass the 2D vertex coordinate into the rasterizer, which means the fragment shader will receive the interpolated 2D location of the pixel itself:

      ti.outputVertex(v);
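The interpolation performed by the rasterizer can be imitated on the CPU with barycentric weights. This is a minimal sketch assuming plain linear interpolation, which is sufficient here because every vertex has w = 1. The helper names `edge` and `interpolate` are invented for this illustration, and real GPUs do this work in fixed-function hardware.

```javascript
// Signed area term used for barycentric weights (twice the triangle's signed area).
function edge(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);
}

// Interpolate per-vertex attributes (arrays of numbers) at point p
// inside the triangle (a, b, c).
function interpolate(a, b, c, attrA, attrB, attrC, p) {
  const area = edge(a, b, c);
  const wA = edge(b, c, p) / area; // weight of vertex a
  const wB = edge(c, a, p) / area; // weight of vertex b
  const wC = edge(a, b, p) / area; // weight of vertex c
  return attrA.map((_, k) => wA * attrA[k] + wB * attrB[k] + wC * attrC[k]);
}

// First triangle of the square; the attribute at each vertex is its own position.
const a = [-1, -1], b = [1, -1], c = [-1, 1];
const f = interpolate(a, b, c, a, b, c, [-0.5, -0.25]);
// f is [-0.5, -0.25]: interpolating the positions gives back the fragment's location.
```

Because the attribute we pass along is the vertex position itself, the interpolated value at any fragment is simply that fragment's own coordinate within the square.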

Moving on to the fragment shader:

    for (let f of ti.inputFragments()) {
        let coord = (f + 1) / 2.0;
        let cellIndex = ti.i32(coord * (liveness.dimensions - 1));
        let live = ti.f32(liveness[cellIndex]);
        ti.outputColor(renderTarget, [live, live, live, 1.0]);
    }

The value f is the interpolated pixel location passed on from the vertex shader. Using this value, the fragment shader looks up the liveness state of the cell that covers this pixel. This is done by first converting the pixel coordinates f into the [0, 0] ~ [1, 1] range and storing the result in the coord variable. This is then multiplied by the dimensions of the liveness field minus one, which produces the index of the covering cell. Next, we fetch the live value of this cell, which is 0 if it is dead and 1 if it is alive. Finally, we output the RGBA value of this pixel onto the renderTarget, where the R, G, and B components are all equal to live, and the A component is equal to 1, for full opacity.
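The coordinate-to-cell mapping can be tried out in plain JavaScript. In this sketch `Math.trunc` stands in for the ti.i32() conversion. That ti.i32() truncates toward zero is an assumption made for this example, and the function name `cellIndex` simply mirrors the variable in the shader.

```javascript
// Map an interpolated clip-space coordinate in [-1, 1] to a cell index in [0, n - 1].
// Math.trunc stands in for ti.i32(); truncation toward zero is an assumption here.
function cellIndex(f, n) {
  const coord = f.map((x) => (x + 1) / 2); // [-1, 1] -> [0, 1]
  return coord.map((x) => Math.trunc(x * (n - 1)));
}

const bottomLeft = cellIndex([-1, -1], 128); // [0, 0]
const topRight = cellIndex([1, 1], 128);     // [127, 127]
const center = cellIndex([0, 0], 128);       // [63, 63]
```

Multiplying by n - 1 rather than n keeps the index within bounds even for a fragment whose coordinate is exactly 1.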

With the render pipeline defined, all that's left is to put everything together by calling the simulation kernels and the render pipeline every frame:

    async function frame() {
        countNeighbors()
        updateLiveness()
        await render();
        requestAnimationFrame(frame);
    }

    await frame();

And that's it! We have completed a WebGPU-based "Game of Life" implementation in taichi.js. If you run the program, you should see an animation where 128x128 cells evolve for around 1400 generations before converging to a few species of stabilized organisms.

Exercises

I hope you found this demo interesting! If you did, then I have a few extra exercises and questions that I invite you to experiment with and think about. (By the way, for quickly experimenting with the code, go to this page.)

- [Easy] Add an FPS counter to the demo! What FPS value can you obtain with the current setting where N = 128? Try increasing the value of N and see how the framerate changes. Would you be able to write a vanilla JavaScript program that obtains this framerate without taichi.js or WebGPU?

- [Medium] What would happen if we merge countNeighbors() and updateLiveness() into a single kernel and keep the neighbors counter as a local variable? Would the program still always work correctly?

- [Hard] In taichi.js, a ti.kernel(..) always produces an async function, regardless of whether it contains compute pipelines or render pipelines. If you have to guess, what is the meaning of this async-ness? And what is the meaning of calling await on these async calls? Finally, in the frame function defined above, why did we put await only on the render() call, but not on the other two?

The last two questions are especially interesting, as they touch on the inner workings of the compiler and runtime of the taichi.js framework, as well as the principles of GPU programming. Let me know your answer!

Resources

Of course, this Game of Life example only scratches the surface of what you can do with taichi.js. From real-time fluid simulations to physically based renderers, there are many other taichi.js programs for you to play with, and even more for you to write yourself. For additional examples and learning resources, check out:

Happy coding!

Published at DZone with permission of Dunfan Lu. See the original article here.
