Synchronizing Paginated Dataset by MuleSoft: An Event-Driven Approach

This article explains a pattern that can be used to synchronize a paginated dataset from a source to a target.

Introduction

Data synchronization is a common operation in enterprise computing. It keeps data consistent across distributed systems through a steady flow of information, either at scheduled intervals or in near real time. Synchronization patterns should also be optimized to process data as quickly as possible. MuleSoft is an integration platform that supports most of the widely used data integration patterns. This article explains a pattern for synchronizing a paginated dataset from a source to a target. The task may look routine, but it hides a problem, which we discuss along with the solution we propose.

Problem

Often, business use cases involve the frequent exchange of large volumes of data, and building an integration between such systems is cumbersome. Even with a paginated source, a conditional loop cannot be built in a flow using the out-of-the-box components that MuleSoft offers, although some components are meant for looping. The For-Each scope and the Splitter act only on payloads that are collections; they cannot decide, based on a specific condition, whether to run the enclosed logic again. Simulating a loop by having private flows call themselves (recursion) is unsafe and not a good solution: it tends to lock up resources such as threads, which can cause instability, and it demands a complex exception-handling strategy. It usually does not scale when running on multiple workers either. Recursion through private flows has also shown many issues in Mule 4 runtimes. A Batch process is not ideal either: the source paginates its data precisely to avoid exposing the entire load at once, whereas Batch works well only when data is ingested in high volume. So, let’s dive into a pattern that overcomes these challenges by decoupling the pieces of logic while still simulating the looping construct efficiently.

Solution

Typically, when the source application starts responding to a request, the client does not know how much data it will have to handle. By the time it has processed a few of the available records, it may exhaust its internal resources (such as memory or connection pools) and shut down abnormally. Pagination is a way to avoid this. Let’s take a scenario where the source exposes its data with pagination, using an offset parameter that lets the client request the dataset in suitably sized portions. Note that there may be other parameters that size the window of the dataset or filter the returned data, such as a date range.

For a given request, the response contains a dataset along with a token (offset) field, which the client should persist and pass as a reference in subsequent calls. When an iterative request returns no data, the client should treat that as the end of the dataset and stop initiating further calls.
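The contract described above can be sketched in plain Python. This is only an illustration of the request and response shape, not MuleSoft code: the `fetch_page` function, the `data` and `offset` field names, and the in-memory `RECORDS` list are all assumptions made for the example.

```python
from typing import Any

# Hypothetical in-memory stand-in for the paginated source system.
RECORDS = [f"record-{i}" for i in range(7)]
PAGE_SIZE = 3


def fetch_page(offset: int) -> dict[str, Any]:
    """Return one page of records plus the offset token for the next call."""
    page = RECORDS[offset:offset + PAGE_SIZE]
    return {"data": page, "offset": offset + len(page)}


def drain(start_offset: int = 0) -> list[str]:
    """Call the source repeatedly until it returns an empty dataset."""
    received: list[str] = []
    offset = start_offset
    while True:
        response = fetch_page(offset)
        if not response["data"]:
            # An empty dataset signals that there is nothing left to fetch.
            break
        received.extend(response["data"])
        # The returned token becomes the reference for the next call.
        offset = response["offset"]
    return received
```

The `while` loop here is exactly the construct that is hard to express inside a Mule flow, which is why the rest of the article replaces it with events.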

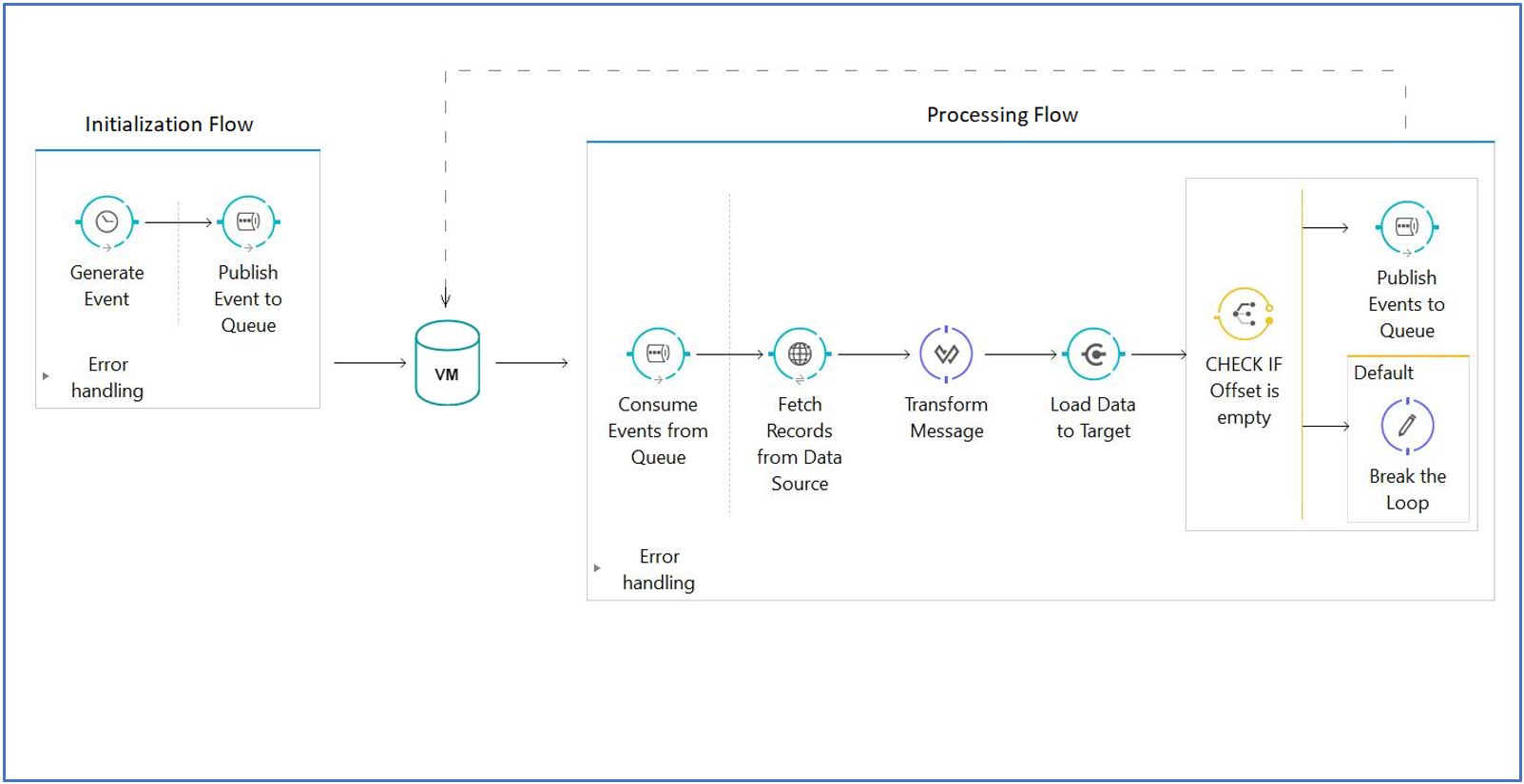

After it finishes processing each dataset, the flow must re-trigger itself (this is where the need for looping comes in). We can simulate this looping by wiring the different parts of the logic together through an Event Queue and triggering the flow from the published events. The flow design breaks down as follows:

Initiation Flow

This flow creates the first event according to the interface’s schedule. When the scheduler triggers the flow, the flow creates the event and drops the message onto the VM Queue.
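As a rough illustration, the Initiation Flow amounts to a single publish per scheduler tick. The sketch below uses Python's `queue.Queue` as a stand-in for the VM Queue; the event fields (`type`, `triggeredAt`) are hypothetical and not taken from any Mule API.

```python
import queue
from datetime import datetime, timezone

# Stand-in for the VM Queue (an assumption for this sketch).
vm_queue: queue.Queue = queue.Queue()


def initiation_flow(event_queue: queue.Queue) -> dict:
    """Run on each scheduler tick: create the first event and publish it."""
    event = {
        "type": "SYNC_REQUESTED",  # hypothetical event name
        "triggeredAt": datetime.now(timezone.utc).isoformat(),
    }
    event_queue.put(event)
    return event
```

The flow does no backend work itself; it only hands an event to the queue so that the processing logic runs separately.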

Note that event sequencing requirements are not discussed here; they would need a more complex flow construct. That is possible but out of scope for this article.

Processing Flow

This flow houses the logic that invokes the backend, which returns the paginated response. Remember that the backend returns a subset of the records along with a token (the offset identifier). This token needs to be persisted, and the ObjectStore can be leveraged for that. It is important to persist the token (or update the existing one) after every iteration so that, if the worker breaks down, the flow can resume where it last stopped.
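The recovery behaviour can be illustrated with a minimal sketch in which a plain dictionary stands in for the ObjectStore. The key name `sync.offset`, the `fetch_page` helper, and the sample data are assumptions for the example only.

```python
OFFSET_KEY = "sync.offset"  # hypothetical key name in the store
PAGE_SIZE = 2
SOURCE = ["r1", "r2", "r3", "r4", "r5"]  # stand-in for the backend data


def fetch_page(offset: int) -> dict:
    """Stand-in for the backend call: one page plus the next offset token."""
    page = SOURCE[offset:offset + PAGE_SIZE]
    return {"data": page, "offset": offset + len(page)}


def process_next_page(store: dict, target: list) -> list:
    """Process one page, starting from the last persisted offset."""
    offset = store.get(OFFSET_KEY, 0)  # default to 0 on the very first run
    response = fetch_page(offset)
    target.extend(response["data"])  # load the page into the target
    if response["data"]:
        # Persist the token only after the page has been handled.
        store[OFFSET_KEY] = response["offset"]
    return response["data"]
```

Because the store outlives the worker, a restarted worker that calls `process_next_page` with the same store picks up at the next unread page. Persisting the token only after the page is written means a crash in between would replay that page, so this sketch assumes the target tolerates duplicates.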

The second part of this flow determines whether further calls to the backend are needed. This can be based on whether the current call to the backend (triggered by the current event) returned a non-empty response. If so, the flow generates the event again and drops it onto the same VM Queue. This is the key part of the solution: it retriggers the Processing flow, but this time with the required decoupling.
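Put together, the self-retriggering behaviour can be simulated as follows. This is a sketch under stated assumptions, not Mule configuration: `queue.Queue` stands in for the VM Queue, a dictionary for the ObjectStore, and `run_listener` for the queue listener that the Mule runtime would provide. All names are hypothetical.

```python
import queue

PAGE_SIZE = 2
SOURCE = ["a", "b", "c", "d", "e"]  # stand-in for the backend data


def fetch_page(offset: int) -> dict:
    """Stand-in for the backend call: one page plus the next offset token."""
    page = SOURCE[offset:offset + PAGE_SIZE]
    return {"data": page, "offset": offset + len(page)}


def processing_flow(event_queue: queue.Queue, store: dict, target: list) -> bool:
    """Handle one event; republish an event if the page was non-empty."""
    event_queue.get()  # consume the triggering event
    response = fetch_page(store.get("offset", 0))
    if response["data"]:
        target.extend(response["data"])
        store["offset"] = response["offset"]  # persist the token
        event_queue.put({"type": "SYNC_PAGE"})  # retrigger via the queue
        return True
    return False  # empty page: no new event, so the chain ends


def run_listener(event_queue: queue.Queue, store: dict, target: list) -> int:
    """Stand-in for the queue listener: one flow execution per event."""
    handled = 0
    while not event_queue.empty():
        processing_flow(event_queue, store, target)
        handled += 1
    return handled
```

Seeding the queue with a single event (the job of the Initiation Flow) is enough to walk through every page. Each execution handles exactly one page and returns, so no flow ever calls itself, and the chain stops on its own when the backend returns an empty page.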

Limitations

The solution discussed above works well for most scenarios that require consuming a paginated data source. But no solution is ever perfect, and neither is this approach. Assume the flow is initiated by a scheduler component and the interval between two consecutive triggers is very short. In this case, the new event generated by the scheduler may interfere with the looping if the earlier data load is still in progress. Externalizing the queue to Anypoint MQ (or another MQ implementation) that supports FIFO semantics may help overcome this, but it requires a more complex design. The best option is to choose the scheduling interval carefully, based on the following aspects:

1. Total datasets that are estimated from the back-end system

2. Time that the flow takes to complete the data load for each scheduled event

Note that this analysis is important anyway for evaluating the performance the interface is expected to deliver.
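The two aspects above can be combined into a rough lower bound for the scheduler interval. The formula, the safety factor, and the numbers below are illustrative assumptions, not measured values.

```python
import math


def min_schedule_interval(total_records: int, page_size: int,
                          seconds_per_page: float,
                          safety_factor: float = 1.5) -> float:
    """Estimate the shortest safe interval between scheduler triggers."""
    pages = math.ceil(total_records / page_size)
    # One extra call is needed to receive the final empty response.
    calls = pages + 1
    return calls * seconds_per_page * safety_factor


# Hypothetical load: 10,000 records, 500 per page, 2 seconds per page.
interval_seconds = min_schedule_interval(10_000, 500, 2.0)
```

With these assumed figures, 20 pages plus the final empty call give 21 calls, or 42 seconds of processing, and the safety factor raises the suggested interval to 63 seconds.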

Conclusion

Sending data in manageable subsets helps clients process records without exhausting their resources. Handing control of the iteration over to the Mule application as the client is still challenging when the overall number of datasets grows. In tests with large numbers of datasets across different infrastructures, this pattern has performed well.

Note: This solution has been tested in Mule 4 as well.
