Structured Logging

This post introduces Structured Logging and the rationale behind its use. Some simple examples are provided to reinforce understanding.


When I was learning about writing serverless functions with AWS Lambda and Java, I came across the concept of structured logging. It made me curious, so I decided to explore it further.

What Is Structured Logging?

Typically, any logs generated by an application would be plain text that is formatted in some way.

For example, here is a typical log format from a Java application:

[Sun Apr 02 09:29:16 GMT] book.api.WeatherEventLambda INFO: [locationName: London, UK temperature: 22 action: record timestamp: 1564428928]

While this log is formatted, it is not structured.

We can see that it is formatted with the following components:

- Timestamp (when it occurred)

- Fully Qualified Class Name (from where it occurred)

- Logging Level (the type of event)

- Message (this is the part that is typically non-standardized and therefore benefits the most from having some structure, as we will see)

A structured log does not use a plain-text format but instead uses a more formal structure such as XML or, more commonly, JSON.

The log shown previously would look like this if it were structured:

Note that the message part of the log is what you would typically be interested in. However, there is a whole bunch of meta-data surrounding the message, which may or may not be useful in the context of what you are trying to do.
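To make the contrast with the plain-text line concrete, here is a minimal Java sketch that renders the same four message fields as a single JSON object. The class and its methods are hypothetical and hand-rolled for illustration; the output covers only the message part and is not Log4J2's exact output, which also carries the surrounding metadata.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration only; not part of Log4J2.
class StructuredLineSketch {

    // Renders a flat map as a single-line JSON object.
    // Numbers are written as-is; everything else is quoted and escaped.
    static String toJson(Map<String, Object> fields) {
        StringBuilder json = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, Object> field : fields.entrySet()) {
            if (!first) {
                json.append(',');
            }
            first = false;
            json.append('"').append(escape(field.getKey())).append("\":");
            Object value = field.getValue();
            if (value instanceof Number) {
                json.append(value);
            } else {
                json.append('"').append(escape(String.valueOf(value))).append('"');
            }
        }
        return json.append('}').toString();
    }

    // Escapes backslashes and double quotes only; enough for this sketch.
    static String escape(String text) {
        return text.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // The message fields from the plain-text log line shown earlier.
    static Map<String, Object> sampleMessage() {
        Map<String, Object> message = new LinkedHashMap<>();
        message.put("locationName", "London, UK");
        message.put("temperature", 22);
        message.put("action", "record");
        message.put("timestamp", 1564428928L);
        return message;
    }

    public static void main(String[] args) {
        System.out.println(toJson(sampleMessage()));
    }
}
```

Each value now sits under a named key, so a tool can pick out temperature or locationName without parsing free text.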

Depending on the logging framework that you are using, you can customize the meta-data that is shown. The example shown above was generated from an AWS Lambda function (written in Java) via the Log4J2 logging framework.

The configuration looks like this:

<?xml version="1.0" encoding="UTF-8"?>
<Configuration packages="com.amazonaws.services.lambda.runtime.log4j2">
  <Appenders>
    <Lambda name="Lambda">
      <JsonLayout compact="true" eventEol="true" objectMessageAsJsonObject="true" properties="true"/>
    </Lambda>
  </Appenders>
  <Loggers>
    <Root level="info">
      <AppenderRef ref="Lambda"/>
    </Root>
  </Loggers>
</Configuration>

The JsonLayout tag is what tells the logger to use a structured format, i.e., JSON in this case.

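Note that the Root logger is set to the info level, so every event at INFO or above (WARN, ERROR, FATAL) goes through this appender and is written as JSON, while DEBUG and TRACE events are dropped. If you only want some of your logs structured, Log4J2 lets you attach appenders with different layouts to different loggers. This sort of flexibility, in my opinion, is beneficial as you may not want to structure all of your logs but only the parts that you think need to be involved in monitoring or analytics.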

Here is a snippet from the AWS Lambda function that generates the log. It reads a weather event, populates a Map with the values to be logged, and passes that Map into the logger.

// Deserialize the request body into a WeatherEvent.
final WeatherEvent weatherEvent = objectMapper.readValue(request.getBody(), WeatherEvent.class);

// Collect the fields to log under explicit keys.
Map<String, Object> message = new HashMap<>();
message.put("action", "record");
message.put("locationName", weatherEvent.locationName);
message.put("temperature", weatherEvent.temperature);
message.put("timestamp", weatherEvent.timestamp);

// ObjectMessage wraps the Map; with objectMessageAsJsonObject="true" the layout writes it as a JSON object.
logger.info(new ObjectMessage(message));

There are different ways of achieving this. For example, you could write your own class that implements an interface from Log4J2, populate the fields of an instance of this class, and pass that instance to the logger.

So, What Is the Point of All This?

Why would you want to structure your logs?

To answer this question, consider you had a pile of logs (as in, actual logs of wood):

If I were to say to you, "Inspect the log on top of the bottom left one," you would have to take a guess as to which one I am referring to.

Now consider that these logs were structured into a cabin.

Now, if I were to say to you, "Inspect the logs that make up the front door," then you know exactly where to look.

This is why structure is good. It makes it easier to find things.

Querying Your Logs

Structured Logs can be indexed efficiently by monitoring tools such as AWS CloudWatch, Kibana, and Splunk. What this means is that it becomes much easier to find the logs that you want. These tools offer sophisticated ways of querying your logs, making it easier to do troubleshooting or perform analytics.

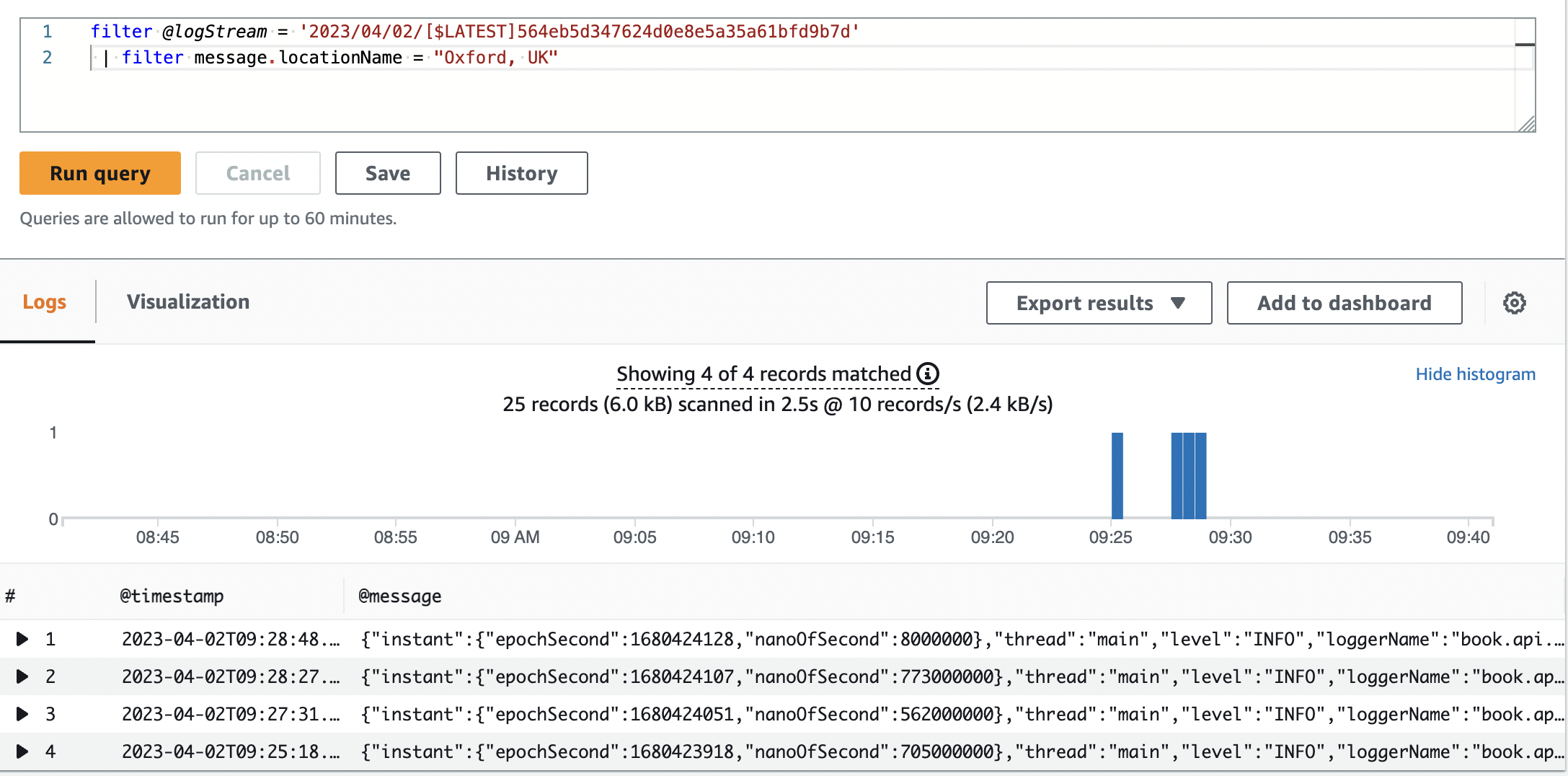

For example, this screenshot shows how, in CloudWatch Logs Insights, you would search for logs of weather events from Oxford. The query refers to the locationName property under the message component of the log.

You can do much more sophisticated queries with filtering and sorting. For example, you could say, "Show me the top 10 weather events where the temperature was greater than 20 degrees" (a rare occurrence in the UK).
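The same kind of query can be sketched in plain Java to show why named fields matter. This is only an in-memory stand-in, not how CloudWatch or any other tool evaluates queries; the class, its methods, and the sample events are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical in-memory stand-in for a log query tool.
class LogQuerySketch {

    // Builds one structured event using the field names from the article.
    static Map<String, Object> event(String locationName, double temperature, long timestamp) {
        Map<String, Object> fields = new LinkedHashMap<>();
        fields.put("action", "record");
        fields.put("locationName", locationName);
        fields.put("temperature", temperature);
        fields.put("timestamp", timestamp);
        return fields;
    }

    static double temperature(Map<String, Object> event) {
        return ((Number) event.get("temperature")).doubleValue();
    }

    // "Find the events from a given location."
    static List<Map<String, Object>> fromLocation(List<Map<String, Object>> events, String location) {
        return events.stream()
                .filter(e -> location.equals(e.get("locationName")))
                .collect(Collectors.toList());
    }

    // "Show me the top N events where the temperature was above a threshold", hottest first.
    static List<Map<String, Object>> hottest(List<Map<String, Object>> events, double threshold, int limit) {
        Comparator<Map<String, Object>> byTemperature =
                Comparator.comparingDouble(LogQuerySketch::temperature);
        return events.stream()
                .filter(e -> temperature(e) > threshold)
                .sorted(byTemperature.reversed())
                .limit(limit)
                .collect(Collectors.toList());
    }

    // Made-up sample data for the sketch.
    static List<Map<String, Object>> sampleEvents() {
        List<Map<String, Object>> events = new ArrayList<>();
        events.add(event("London", 22.0, 1564428928L));
        events.add(event("Oxford", 19.0, 1564429000L));
        events.add(event("Oxford", 25.0, 1564429100L));
        events.add(event("Leeds", 20.0, 1564429200L));
        return events;
    }

    public static void main(String[] args) {
        System.out.println(fromLocation(sampleEvents(), "Oxford"));
        System.out.println(hottest(sampleEvents(), 20.0, 10));
    }
}
```

With plain-text logs, each of these filters would first need a parser for the message format; with structured logs, the field names are already there to filter and sort on.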

Triggering Events From Logs

Another benefit of being able to query your logs is that you can start measuring things. These measurements (called metrics in AWS CloudWatch parlance) can then be used to trigger events such as sending out a notification.

In AWS, this would be achieved by creating a metric that represents what you want to measure and then setting up a CloudWatch Alarm based on a condition on that metric and using the alarm to trigger a notification to, for instance, SNS.

For example, if you wanted to send out an email whenever the temperature went over 20 degrees in London, you could create a metric for the average temperature reading from London over a period of, say, 5 hours and then create an alarm that activates when this metric goes above 20 degrees. This alarm can then be used to trigger a notification to an SNS topic. Subscribers to the SNS topic would then be notified so that they know not to wear warm clothes.
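The condition behind such an alarm is simple arithmetic, and a small Java sketch makes it explicit. This is not the AWS SDK or the way CloudWatch is configured; the class and method names are hypothetical, and the readings are assumed to have already been collected for the chosen period.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for a metric plus an alarm condition.
class TemperatureAlarmSketch {

    // The "metric": the average of the readings in the period.
    // Returns NaN when there are no readings.
    static double average(List<Double> readings) {
        return readings.stream()
                .mapToDouble(Double::doubleValue)
                .average()
                .orElse(Double.NaN);
    }

    // The "alarm": active when the average is strictly above the threshold.
    // An empty period never raises the alarm.
    static boolean inAlarm(List<Double> readings, double threshold) {
        return !readings.isEmpty() && average(readings) > threshold;
    }

    public static void main(String[] args) {
        List<Double> londonReadings = Arrays.asList(19.0, 21.0, 23.0);
        if (inAlarm(londonReadings, 20.0)) {
            // In AWS, this is the point where the alarm would notify the SNS topic.
            System.out.println("Average above 20 degrees: notify subscribers");
        }
    }
}
```

The readings themselves only become available as numbers because the temperature is logged as its own field.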

Is There a Downside To Structured Logs?

The decision as to whether to use Structured Logs should be driven by the overall monitoring and analytics strategy that you envision for the system. If you have, for example, a serverless application that is part of a wider system that ties into other services, it makes sense to centralize the logs from these various services so that you have a unified view of the system. In this scenario, having your logs structured will greatly aid monitoring and analytics.

If, on the other hand, you have a very simple application that just serves data from a single data source and doesn't link to other services, you may not need to structure your logs. Let's not forget the old adage: Keep it Simple, Stupid.

So, to answer the question "Is there a Downside to Structured Logs?" - only if you use it where you don't need to. You don't want to spend time on additional configuration and having to think about structure when having simple logs would work just fine.

Conclusion

Structured Logging not only aids you in analyzing your logs more efficiently but it also aids you in building better monitoring capabilities in your system. In addition to this, business analytics can be enhanced through relevant queries and setting up metrics and notifications that can signal trends in the system.

In short, Structured Logging is not just about logging. It is a tool that drives architectural patterns that enhance both monitoring and analytics.

Opinions expressed by DZone contributors are their own.
