GenAI: Spring Boot Integration With LocalAI for Code Conversion

Learn how GenAI can be run locally or in a private data center using LocalAI, Spring Boot, and LangChain4j for code conversion tasks.

Building applications with GenAI has become very popular. One of the main concerns with cloud-based AI services such as ChatGPT and Gemini is that you share a lot of data with the cloud provider. These privacy concerns can be addressed by running LLMs in a private data center or on a local machine. Popular open-source LLMs and GenAI tools such as LM Studio, Ollama, AnythingLLM, and LocalAI are supported on Windows, Linux, and Mac, and they are easy to set up.

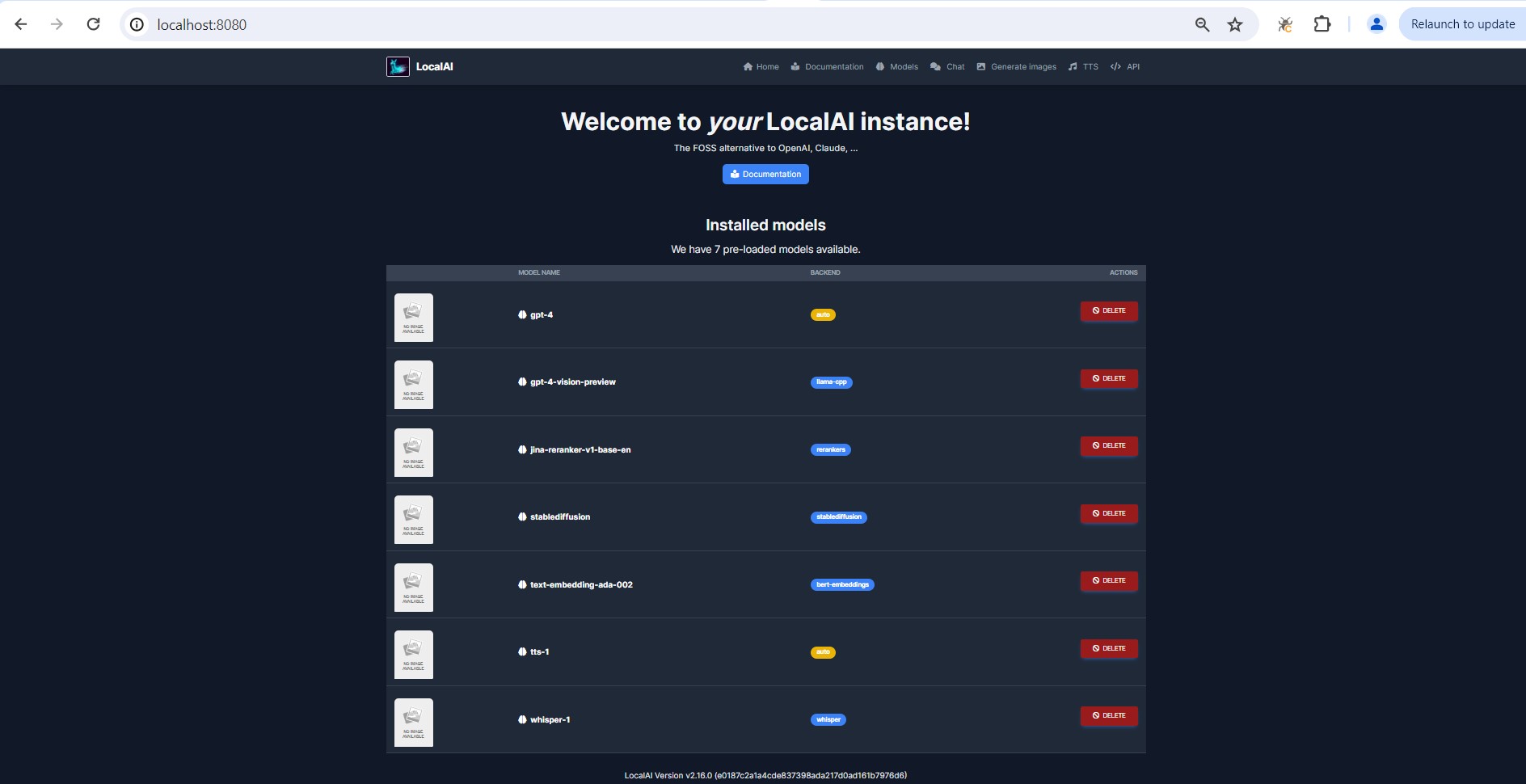

Today, we are going to discuss LocalAI, an open-source project that aims to provide a local, privacy-focused alternative to cloud-based AI services. Running models locally can offer several benefits, including enhanced data privacy, reduced latency, and potentially lower costs than cloud services. LocalAI supports different models for different use cases, such as text generation, embeddings, audio-to-text, text-to-audio, and image analysis. For this demo, we will use text generation with the model that LocalAI exposes under the name “gpt-4”.

Demonstration

This demo uses GenAI to convert source code from one programming language to another. We will convert Java source code to C# and Python. We will set up a Spring Boot project that exposes a REST API endpoint, accepts an uploaded Java source file, and converts the Java source code to the target language. Spring AI does not provide integration for LocalAI, so we will use LangChain4j, a Java implementation of the LangChain framework designed to facilitate the development of applications that use language models.

First, we will set up LocalAI on Windows using Docker and run the LLM server on the local machine. Conveniently, LocalAI can be set up on a laptop with no GPU. We execute the Docker command below to install LocalAI:

docker run -p 8080:8080 --name local-ai -ti localai/localai:latest-aio-cpu

This starts the LocalAI container, which downloads and prepares the models. The container image used here is v2.16.0. The machine is an 11th Gen Intel(R) Core(TM) i5-1135G7 @ 2.40GHz with 16GB RAM and no GPU. Once the installation is done, you will see the logs below:

12:52PM INF core/startup process completed!

12:52PM INF LocalAI API is listening! Please connect to the endpoint for API documentation. endpoint=http://0.0.0.0:8080

Go to the browser, type http://localhost:8080, and navigate the UI:

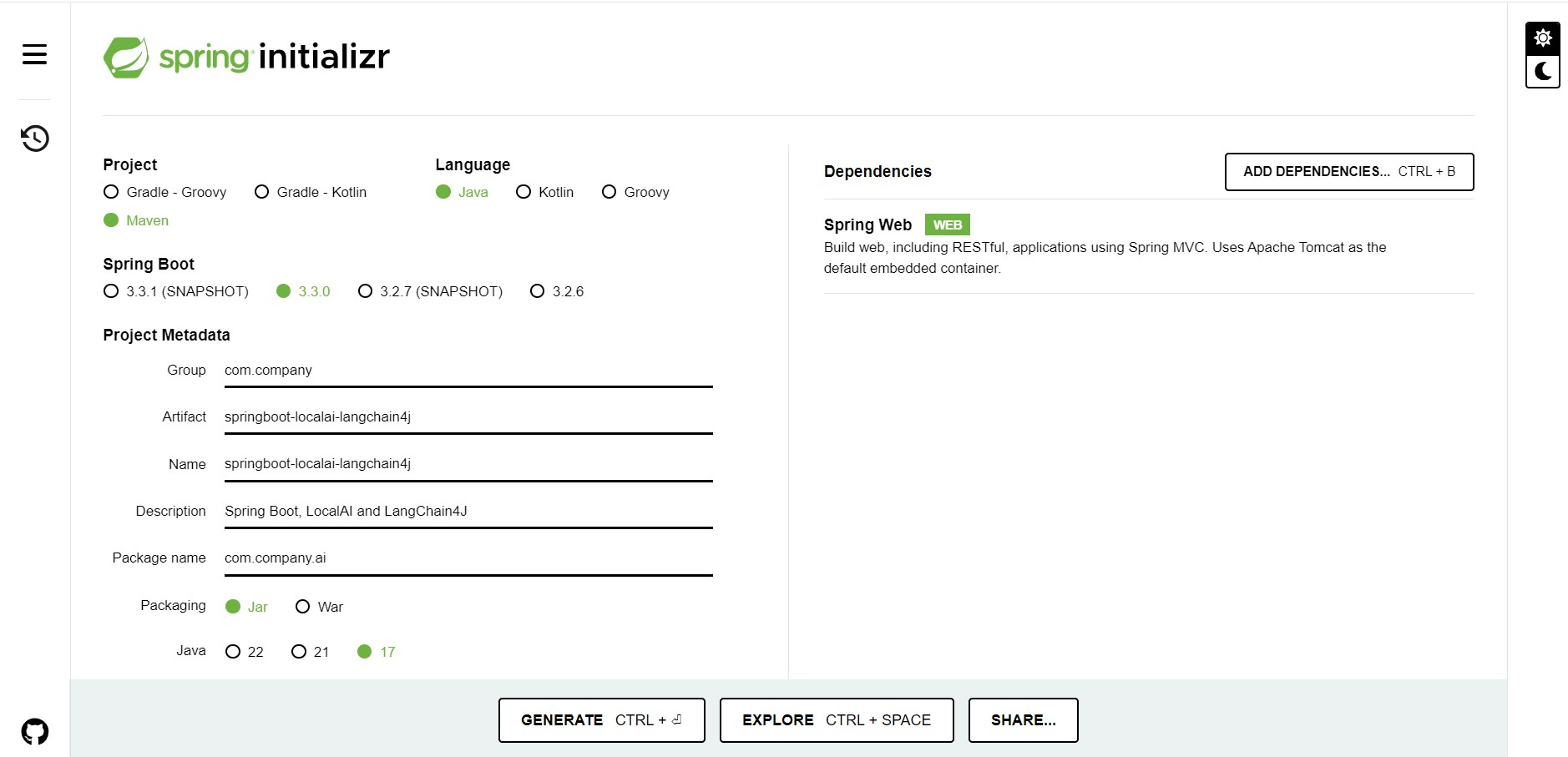
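Besides opening the UI, one way to confirm that the server is reachable is to ask it for its model list from Java. The sketch below assumes LocalAI's OpenAI-compatible /v1/models endpoint on the port mapped above. The class and method names are made up for illustration and are not part of the demo project.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class LocalAiModelsCheck {

    // Builds a GET request for the OpenAI-style model listing endpoint.
    // The /v1/models path is an assumption based on LocalAI's OpenAI compatibility.
    static HttpRequest modelsRequest(String baseUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/v1/models"))
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response = client.send(
                modelsRequest("http://localhost:8080"),
                HttpResponse.BodyHandlers.ofString());
        // Print the HTTP status and the raw JSON list of models.
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

If the container is still downloading models, the call may fail or return an incomplete list, so it is worth waiting for the startup log lines first.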

Now we will develop a Spring Boot app that provides a REST API to chat with LocalAI. Let’s start by creating a Spring Boot app with Spring Initializr, adding the Spring Web dependency, and downloading the project.

Add the LangChain4j dependency to the Maven build file:

<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-local-ai</artifactId>
    <version>0.31.0</version>
</dependency>

Now create LocalAIChatController.java, which exposes the REST API endpoint “/api/files/translate” for uploading a Java source file. Below is the key code from LocalAILLService.java, a Spring bean class that uses the LangChain4j API to connect to the LocalAI chat service using the gpt-4 model. We set the prompt timeout to 5 minutes. On average, it takes about 2 minutes on my machine to receive the response, but this may vary with machine load and hardware configuration. Since LocalAI is running on port 8080, we change the Spring Boot port to 8081.

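import java.time.Duration;
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.localai.LocalAiChatModel;

// Connect to the local LocalAI server and allow up to 5 minutes per prompt
ChatLanguageModel model = LocalAiChatModel.builder()
        .baseUrl("http://localhost:8080")
        .modelName("gpt-4")
        .temperature(0.9)
        .timeout(Duration.ofMinutes(5))
        .build();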

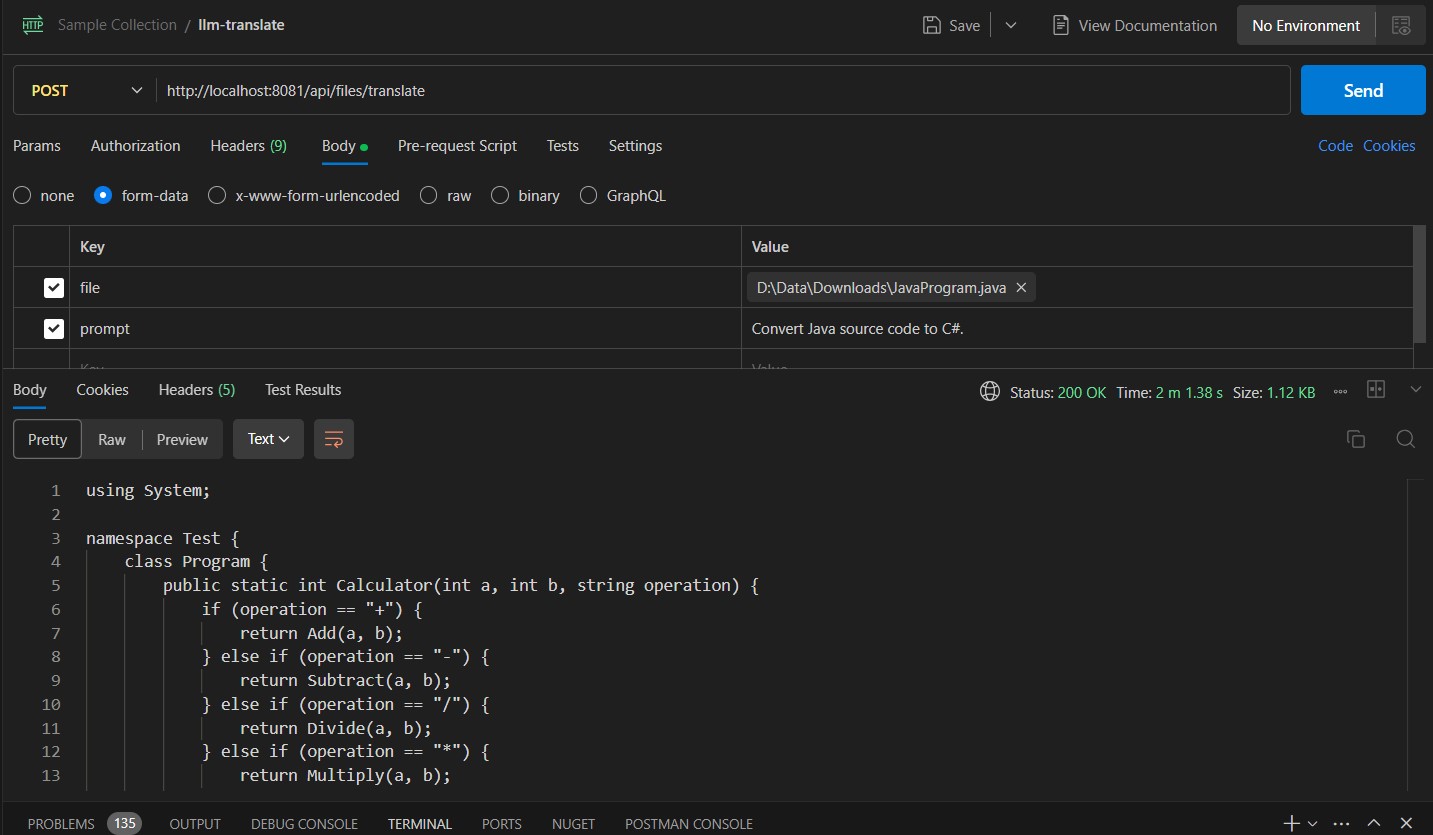
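To make the exchange with LocalAI more concrete, here is a rough sketch of the kind of OpenAI-style chat completion request that such a call corresponds to, written with only the JDK. It is not the code of LocalAILLService.java: the /v1/chat/completions path, the way the instruction and the source are joined into one message, and all class and method names are assumptions made for illustration.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

class ChatCompletionRequestSketch {

    // Escapes a string so it can be embedded in a JSON string literal.
    static String jsonEscape(String text) {
        StringBuilder out = new StringBuilder();
        for (char c : text.toCharArray()) {
            switch (c) {
                case '"': out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n"); break;
                case '\r': out.append("\\r"); break;
                case '\t': out.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
            }
        }
        return out.toString();
    }

    // Joins the instruction and the uploaded source into one user message (assumed layout).
    static String buildPrompt(String instruction, String sourceCode) {
        return instruction + "\n\n" + sourceCode;
    }

    // Builds the JSON body with the same model name and temperature as the builder above.
    static String requestBody(String model, double temperature, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
                + "\"temperature\":" + temperature + ","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + jsonEscape(prompt) + "\"}]}";
    }

    // Builds the POST request; the 5-minute timeout mirrors the prompt timeout in the text.
    static HttpRequest chatRequest(String baseUrl, String body) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/v1/chat/completions"))
                .timeout(Duration.ofMinutes(5))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) {
        String prompt = buildPrompt("Convert Java source code to C#.", "class A { String s = \"x\"; }");
        String body = requestBody("gpt-4", 0.9, prompt);
        System.out.println(body);
        System.out.println(chatRequest("http://localhost:8080", body).uri());
    }
}
```

In the demo itself, LangChain4j takes care of building and sending the request, so hand-written JSON like this is only useful for understanding or debugging what goes over the wire.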

Go to Postman and create a prompt “Convert Java source code to C#.” Upload the Java source code below to the “/api/files/translate” endpoint, and make sure the file encoding is UTF-8:

package test;

public class JavaProgram {

    public static int calculator(int a, int b, String operation) {
        if (operation.equals("+")) {
            return add(a, b);
        } else if (operation.equals("-")) {
            return subtract(a, b);
        } else if (operation.equals("/")) {
            return divide(a, b);
        } else if (operation.equals("*")) {
            return multiply(a, b);
        }
        throw new RuntimeException("Invalid Operation!");
    }

    public static int add(int a, int b) {
        return a + b;
    }

    public static int multiply(int a, int b) {
        return a * b;
    }

    public static int divide(int a, int b) {
        return a / b;
    }

    public static int subtract(int a, int b) {
        return a - b;
    }

    public static void main(String[] args) {
        System.out.println(calculator(2, 2, "+"));
    }
}

The conversion from Java to C# took 2 minutes and 1 second on this CPU-only machine. It should be considerably faster with a GPU.
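The Postman upload described above could also be scripted. The sketch below builds a multipart/form-data request with the JDK's HttpClient. The form field names "prompt" and "file" are hypothetical, since the controller's parameter names are not shown in the text, and the class and method names are illustrative only.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;

class TranslateUploadClient {

    // Builds a multipart/form-data body with a text part and a file part.
    // The part names "prompt" and "file" are assumptions, not taken from the controller.
    static String multipartBody(String boundary, String prompt, String fileName, String fileContent) {
        String crlf = "\r\n";
        return "--" + boundary + crlf
                + "Content-Disposition: form-data; name=\"prompt\"" + crlf + crlf
                + prompt + crlf
                + "--" + boundary + crlf
                + "Content-Disposition: form-data; name=\"file\"; filename=\"" + fileName + "\"" + crlf
                + "Content-Type: text/plain; charset=UTF-8" + crlf + crlf
                + fileContent + crlf
                + "--" + boundary + "--" + crlf;
    }

    // Builds the POST request; the generous timeout allows for multi-minute conversions.
    static HttpRequest uploadRequest(String url, String boundary, String body) {
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .timeout(Duration.ofMinutes(5))
                .header("Content-Type", "multipart/form-data; boundary=" + boundary)
                .POST(HttpRequest.BodyPublishers.ofString(body, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Read the source file as UTF-8, matching the encoding requirement in the text.
        Path source = Path.of("JavaProgram.java");
        String code = Files.readString(source, StandardCharsets.UTF_8);
        String boundary = "----translate-" + System.nanoTime();
        String body = multipartBody(boundary, "Convert Java source code to C#.",
                source.getFileName().toString(), code);
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                uploadRequest("http://localhost:8081/api/files/translate", boundary, body),
                HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body());
    }
}
```

The client timeout is set to 5 minutes here so that it does not give up before the model has answered.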

Generated C# source code:

using System;

namespace test
{
    public class CSharpProgram
    {
        public static int Calculator(int a, int b, string operation)
        {
            if (operation == "+")
            {
                return Add(a, b);
            }
            else if (operation == "-")
            {
                return Subtract(a, b);
            }
            else if (operation == "/")
            {
                return Divide(a, b);
            }
            else if (operation == "*")
            {
                return Multiply(a, b);
            }
            throw new Exception("Invalid Operation!");
        }

        public static int Add(int a, int b)
        {
            return a + b;
        }

        public static int Multiply(int a, int b)
        {
            return a * b;
        }

        public static int Divide(int a, int b)
        {
            return a / b;
        }

        public static int Subtract(int a, int b)
        {
            return a - b;
        }

        public static void Main(string[] args)
        {
            Console.WriteLine(Calculator(2, 2, "+"));
        }
    }
}

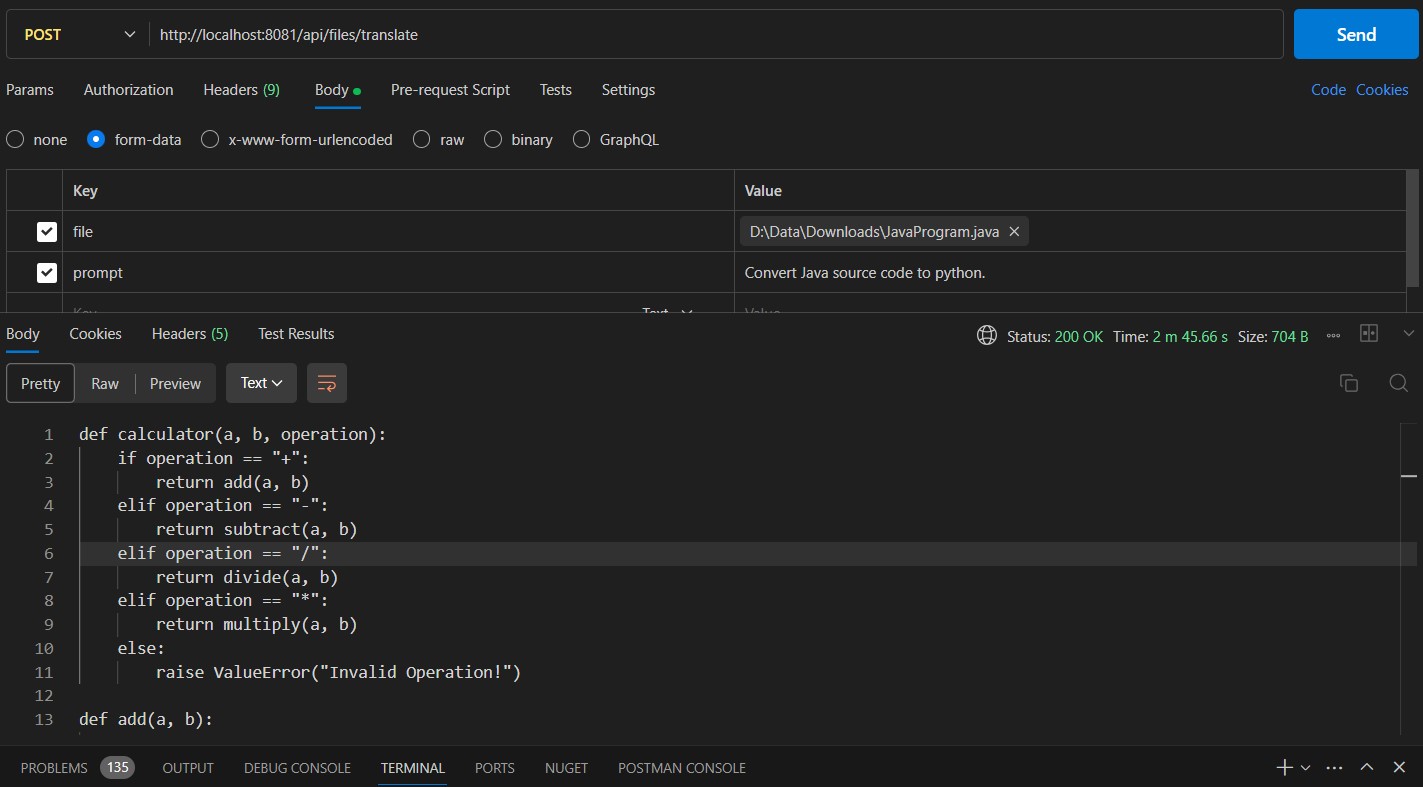

Now, upload the Java source code again using Postman. This time, change the prompt to “Convert Java source to Python.” This conversion took 2 minutes and 45 seconds.

Generated Python code:

def calculator(a, b, operation):
    if operation == '+':
        return add(a, b)
    elif operation == '-':
        return subtract(a, b)
    elif operation == '/':
        return divide(a, b)
    elif operation == '*':
        return multiply(a, b)
    else:
        raise RuntimeError("Invalid Operation!")

def add(a, b):
    return a + b

def multiply(a, b):
    return a * b

def divide(a, b):
    return a // b

def subtract(a, b):
    return a - b

def main():
    print(calculator(2, 2, "+"))

if __name__ == "__main__":
    main()

When the C# and Python programs are executed, both print the same output:

4

** Process exited - Return Code: 0 **

Press Enter to exit terminal
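Matching output for one input does not guarantee that a conversion is equivalent everywhere. For example, the generated Python divide uses //, which rounds toward negative infinity, while Java's int division truncates toward zero. The two agree for the demo input but can differ when an operand is negative. The small Java check below illustrates this, using Math.floorDiv to stand in for Python's behavior. The class and method names are illustrative.

```java
class DivisionSemanticsCheck {

    // Java's int division truncates toward zero, as in the original divide().
    static int truncatingDivide(int a, int b) {
        return a / b;
    }

    // Math.floorDiv rounds toward negative infinity, like Python's // operator.
    static int flooringDivide(int a, int b) {
        return Math.floorDiv(a, b);
    }

    public static void main(String[] args) {
        System.out.println(truncatingDivide(7, 2));   // 3
        System.out.println(flooringDivide(7, 2));     // 3
        System.out.println(truncatingDivide(-7, 2));  // -3
        System.out.println(flooringDivide(-7, 2));    // -4
    }
}
```

A few test inputs like these, run against both the original and the converted program, are a cheap way to review generated code before relying on it.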

Conclusion

We explored how Spring Boot can be used with LangChain4j to integrate with LocalAI. LocalAI is a promising approach for applications where privacy, latency, and cost are critical considerations. By running suitably sized models on local hardware, organizations can build AI deployments tailored to their specific needs.
