Split the Monolith: What, When, How

In this post, follow along with some thoughts and step-by-step instructions on how to prepare and perform monolith splitting.

Overview

Splitting a monolith has to pursue concrete goals: the point is not the split itself but the benefits it brings. Once the cost of splitting is weighed against the possible benefits, other options, such as scaling the application or upgrading the database hardware, may be preferable.

Another benefit to aim for may simply be application modernization. What follows is a more or less formal approach to monolith splitting that tries to take the reasons and goals of the split into account. It is not dogma, and other approaches exist. This migration roadmap is designed to move a monolithic application to microservices and gain the microservice benefits with no (or minimal) application unavailability.

Baseline

After years of support and maintenance, a monolith may look very messy, and that is to be expected. At best, expect a large, tightly coupled, layered application with tons of adjustments. In any case, the first step is to gather all available information about the current application: requirements, use cases, architecturally significant requirements (ASRs), components, deployment diagrams, etc.

Know Your Application (Monolith)

In most cases, the application lacks documentation that describes the baseline. You need to collect at least the following information:

- Functional modules of the application and their relations to each other and to the external world

- API and service interface descriptions

- A component diagram with module descriptions. This is enough because in most cases the split is performed by functionality or, in other words, by application capabilities.

- A deployment diagram that shows the physical hardware, the software running on it, and its configuration. It is needed because some non-functional requirements (NFRs), for example scalability or fault isolation, may be affected by deployment.

Know Your Data

Why are data diagrams and potential data mapping important? In most cases, a microservice is extracted together with its underlying data and database. Disorganized data may affect non-functional requirements such as performance. In any case, the data model helps to find potential issues such as missing or redundant data and data disorganization, and to define the boundaries of the data extracted with each microservice.
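One way to make the data boundaries visible is to list which modules touch which tables and flag every table used by more than one module. The following is a minimal sketch, assuming a hand-collected inventory; the module and table names are hypothetical and not taken from any real application.

```python
from collections import defaultdict

# Hypothetical inventory: module name -> tables it reads or writes.
# In practice this could come from code search or ORM mappings.
MODULE_TABLES = {
    "orders": {"orders", "order_items", "addresses"},
    "promotions": {"promotions", "addresses"},
    "fraud": {"fraud_checks", "addresses", "orders"},
}


def shared_tables(module_tables):
    """Return {table: sorted list of modules} for tables used by 2+ modules."""
    users = defaultdict(set)
    for module, tables in module_tables.items():
        for table in tables:
            users[table].add(module)
    return {t: sorted(m) for t, m in users.items() if len(m) > 1}


if __name__ == "__main__":
    for table, modules in sorted(shared_tables(MODULE_TABLES).items()):
        print(f"{table}: shared by {', '.join(modules)}")
```

Every table in the result is a place where extracting one module with its data will force changes in the others.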

Know Your Context

In some cases, the functionality to be extracted may already exist as a separate external service. Why does this matter? Instead of extracting a business capability, you can simply reuse the existing external service, and microservice benefits such as shorter time to market (TTM) are achieved with less effort.

Know Your Users

This overlaps with context, but it shows how users and external systems actually use the application, so you can understand which functionality is used and how. Some parts of the application may turn out to be obsolete and unused.
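A rough way to spot unused functionality is to compare the list of known endpoints with what actually shows up in the access logs. The sketch below assumes a simplified log format of `METHOD PATH STATUS`; the endpoint list and log lines are made up for illustration.

```python
from collections import Counter

# Hypothetical list of endpoints exposed by the monolith.
KNOWN_ENDPOINTS = ["/orders", "/invoices", "/reports/legacy", "/promotions"]

# Hypothetical access log lines in a simplified "METHOD PATH STATUS" format.
ACCESS_LOG = [
    "GET /orders 200",
    "POST /orders 201",
    "GET /promotions 200",
    "GET /orders 200",
]


def endpoint_usage(endpoints, log_lines):
    """Count how many log lines hit each known endpoint."""
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) >= 2:  # skip malformed lines
            hits[parts[1]] += 1
    return {endpoint: hits.get(endpoint, 0) for endpoint in endpoints}


def unused_endpoints(endpoints, log_lines):
    """Return known endpoints that never appear in the log."""
    usage = endpoint_usage(endpoints, log_lines)
    return [endpoint for endpoint, count in usage.items() if count == 0]
```

An endpoint with zero hits over a long enough period is a candidate for removal rather than extraction.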

All of this information is then used to decide whether a split is necessary, how to split, and what to extract.

Why Split Monoliths?

It may be hard to answer why a monolith needs to be split into microservices. The answers can be more business-oriented or more technology-oriented. In most cases, the business sees no benefit in refactoring or in splitting a monolith into microservices as such.

Business Drivers

At first glance, the following drivers can be identified, and the list may be expanded. In any case, business drivers come down to increasing income and reducing losses, so any new item will be derived from those two goals.

- Reduce time to market

- Reduce total cost of ownership (TCO): scale only the parts that need it

- Reduce losses: a resilient, highly available (HA), and fault-tolerant solution; test coverage

- Provide a better user experience

Some odd drivers may also be present but unspoken: it's fashionable, and everyone else is breaking up monoliths, too.

Tech Drivers

Tech drivers expand the list of business drivers and may look like the following:

- Get rid of obsolete technologies and smoothly migrate to modern/supported ones

- Reduce complexity

- Improve supportability

- Raise test coverage

- Improve reusability

- Make changes transparent and predictable

- Reuse teams

Microservice Benefits

The microservice benefits, and how they cover business and tech needs, are listed below. The table can be extended with additional microservice benefits, but they mostly derive from or overlap with the ones shown.

Please don't forget about the disadvantages.

| # | Microservice benefit | What needs are covered |
|---|---|---|
| Development | | |
| 1 | Service observability: relatively small microservices are observable, so it is easier to understand their functionality and to modify and enhance them. | |
| 2 | Service reusability: a service is organized around a business capability, so it is easy to reuse, and the same functionality does not need to be built multiple times across the organization. | |
| 3 | Standardized service contract and technology-agnostic implementation: teams with different skills can be used. | |
| Runtime | | |
| 4 | Service scaling: individual microservices are easy to add, remove, update, and scale to avoid degradation of the solution's performance. | |
| 5 | Resiliency: fault isolation of a single service via orchestration and redundancy. | |

Steps to Split

Preparation

Here are the more or less formal steps to split a monolith. They may be adjusted to account for business needs, the state of the monolith, CI/CD procedures, etc.

The list of modules/services obtained earlier, when the baseline was established, should be organized around business capabilities and may go one level deeper. For example, addresses may be required both by the fraud detection system and by promotion services.

The desired goals may be achieved in the middle of the splitting process. Stopping there may be good from a business point of view but terrible from a tech point of view, because you are left with a monolith plus several extracted services.

Refactoring First

It is unlikely that an application that needs to be split is already in good shape for it. Therefore, several rounds of service refactoring inside the monolith may be needed. Some services may be under refactoring while others are being extracted.

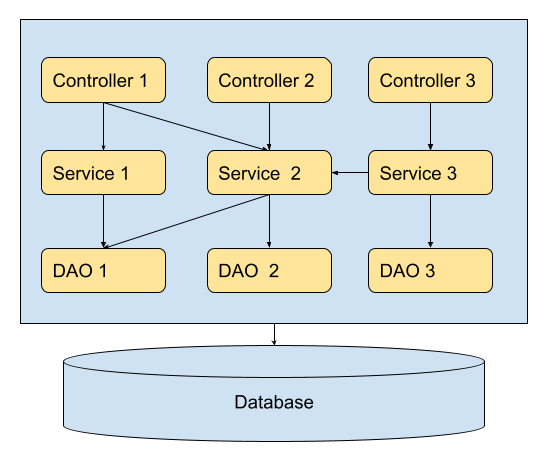

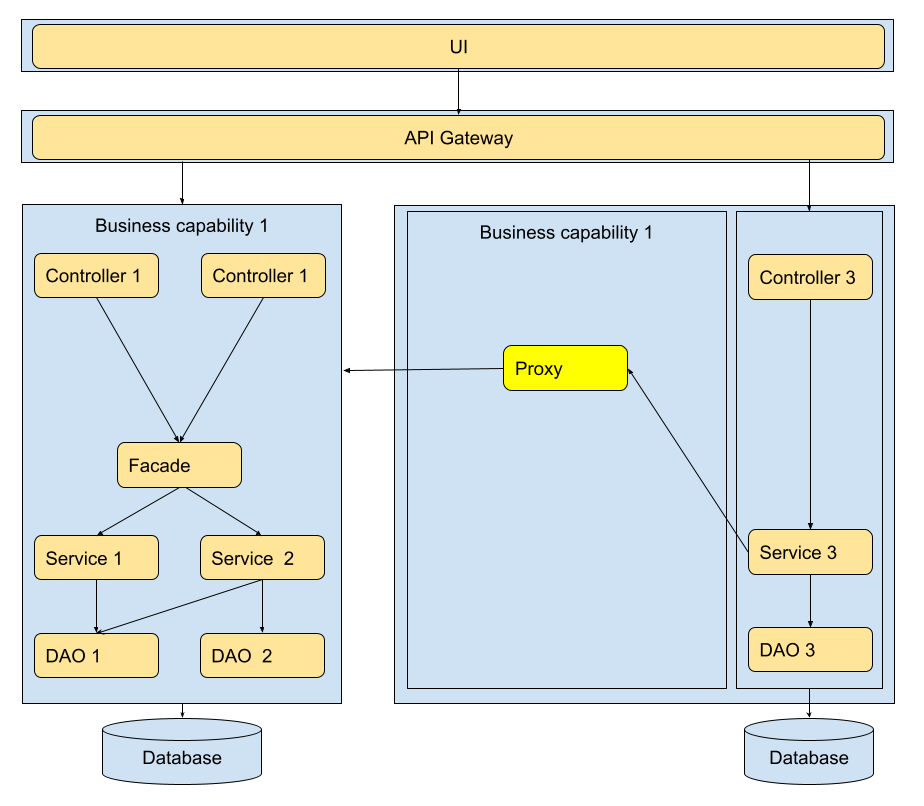

Typical Layered Application

This is the starting point for service extraction: the main layers of the application, such as controllers, application services, DAOs, etc., are observable and understandable.

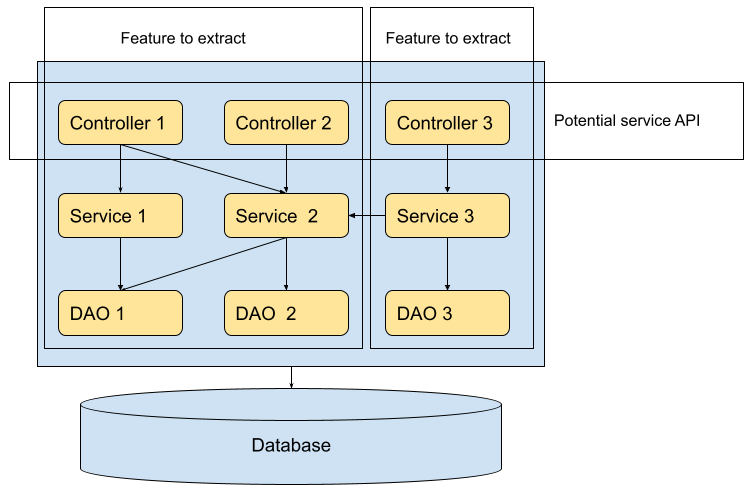

Service/Feature Boundaries and API

Domain-driven design (DDD) may help to define service boundaries, but instead of modeling the business domain from scratch, we need to extract business functionality from the existing application. Start by mapping business functionality to the existing application services and domain model to identify service boundaries.

In a layered application, the controllers may outline the API to extract. The API may be adjusted or extended, but the main point is that the rest of the application must work with the service with minimal changes. The changes concern how the rest of the application reaches the functionality being extracted (proxies and facades).

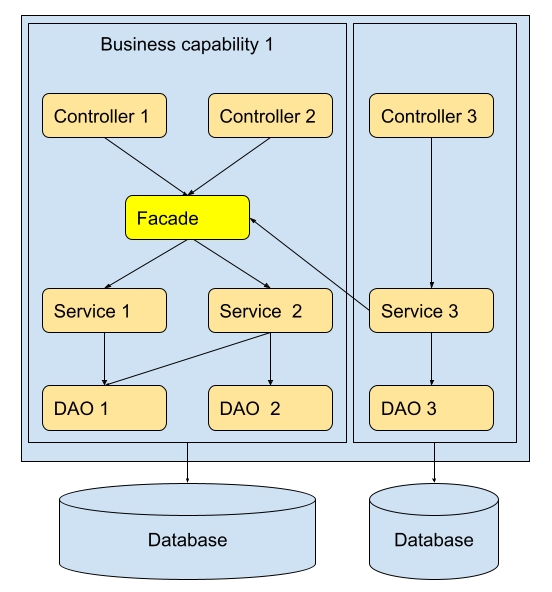

Create Service Facade

Once the service boundaries are defined, we need to change the way the monolith interacts with the functionality: instead of dealing with a set of application services that represent a business functionality, we create a facade and work through that facade inside the monolith.

In other words, we need to get a loosely coupled monolith before extracting services.
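The facade step can be sketched as follows. This is an illustration only: `PricingService`, `DiscountService`, and `PricingFacade` are hypothetical names, and the prices and discount rates are invented. The point is that callers in the monolith depend on one entry point per business capability instead of on several application services.

```python
class PricingService:
    """Existing application service inside the monolith (hypothetical)."""

    def price(self, sku: str, quantity: int) -> float:
        unit_prices = {"book": 12.5, "pen": 1.2}
        return unit_prices[sku] * quantity


class DiscountService:
    """Another existing application service (hypothetical)."""

    def discount(self, customer_tier: str) -> float:
        return {"gold": 0.10, "silver": 0.05}.get(customer_tier, 0.0)


class PricingFacade:
    """Single entry point for the 'pricing' capability.

    The rest of the monolith calls only this class, so the services
    behind it can later be moved out without touching the callers.
    """

    def __init__(self, pricing: PricingService, discounts: DiscountService):
        self._pricing = pricing
        self._discounts = discounts

    def quote(self, sku: str, quantity: int, customer_tier: str) -> float:
        gross = self._pricing.price(sku, quantity)
        rate = self._discounts.discount(customer_tier)
        return round(gross * (1 - rate), 2)
```

The public methods of the facade then become the first draft of the extracted service's API.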

Refactor Data

Extracting one service together with its database may affect several other services of the monolith. The service's data must be accessed through its API only, not directly via the database. So even a single service extraction may require significant effort because of existing direct access to the data.
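The sketch below shows the idea of replacing direct table access with an API call, using an in-memory SQLite database to stay runnable. All names (`CustomerApi`, `SqlCustomerApi`, `OrderNotifier`, the `customers` table) are assumptions made for the example.

```python
import sqlite3
from typing import Optional, Protocol


class CustomerApi(Protocol):
    """The only way other modules may reach customer data (hypothetical)."""

    def get_email(self, customer_id: int) -> Optional[str]: ...


class SqlCustomerApi:
    """Owner of the customers table; no other module queries it directly."""

    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn

    def get_email(self, customer_id: int) -> Optional[str]:
        row = self._conn.execute(
            "SELECT email FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class OrderNotifier:
    """A consumer that previously ran its own SELECT against customers."""

    def __init__(self, customers: CustomerApi):
        self._customers = customers

    def notification_target(self, customer_id: int) -> str:
        return self._customers.get_email(customer_id) or "no-contact"


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory database for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.execute("INSERT INTO customers VALUES (1, 'ann@example.com')")
    return conn
```

Once every consumer depends on `CustomerApi`, the SQL-backed implementation can be swapped for a remote client without changing `OrderNotifier`.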

Stop Writing New Features Into Monoliths

It is common for two initiatives, splitting into microservices and adding a new feature to the solution, to run in parallel, so it makes sense to build the new feature as a separate microservice from the beginning.

Splitting

Almost all preparation steps should be done before the actual splitting. By then the application should be a loosely coupled monolith with fine-grained services, a well-described API and boundaries, and isolated data per service (no data shared between services through the database).

Prioritize Services To Extract

It is more accurate to speak of business feature extraction rather than service extraction. Try to reorganize, refactor, and group the existing application by business capabilities or domains; each of them may include multiple application services.

Potential prioritizing criteria:

- The most frequently changed services: minimize the impact on deployment.

- A service that may be replaced by a third-party service: make the codebase lighter.

- A service that needs to be scaled: optimize performance.

- Coupling with the rest of the monolith: make the codebase lighter and more understandable.

- The complexity of the service: start with a small one to gather experience and establish the CI/CD process, the way of communication, etc.

The list may differ; criteria such as changing the underlying database model from relational to NoSQL, technology changes (programming language), team utilization, etc. may be added.
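The criteria above can be turned into a simple weighted score to make the discussion concrete. This is only a sketch: the weights, the candidate names, and the 0 to 5 scores are invented, and each score is assumed to mean "how strongly this criterion argues for extracting the service now", which the team has to judge for itself.

```python
from dataclasses import dataclass, field

# Hypothetical weights per criterion; tune them to your own goals.
WEIGHTS = {
    "change_frequency": 3,
    "scaling_need": 2,
    "third_party_replaceable": 1,
    "coupling": 2,
    "complexity": 1,
}


@dataclass
class Candidate:
    name: str
    # criterion -> score from 0 (no argument) to 5 (strong argument)
    scores: dict = field(default_factory=dict)


def priority(candidate: Candidate) -> int:
    """Weighted sum of the candidate's scores; missing criteria count as 0."""
    return sum(w * candidate.scores.get(c, 0) for c, w in WEIGHTS.items())


def rank(candidates):
    """Return candidates ordered from highest to lowest priority."""
    return sorted(candidates, key=priority, reverse=True)


CANDIDATES = [
    Candidate("notifications", {"change_frequency": 4, "scaling_need": 2,
                                "third_party_replaceable": 5, "coupling": 4,
                                "complexity": 5}),
    Candidate("billing", {"change_frequency": 5, "scaling_need": 3,
                          "third_party_replaceable": 0, "coupling": 1,
                          "complexity": 1}),
    Candidate("search", {"change_frequency": 2, "scaling_need": 5,
                         "third_party_replaceable": 3, "coupling": 3,
                         "complexity": 2}),
]
```

The numbers matter less than the conversation they force: the ranking is a starting point for the decision, not a replacement for it.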

As a result, at least one service can be chosen for extraction.

Select the Way of Communications Among Microservices

This is not a very complex step. However, we need not only to select the protocol and serialization format but also to take into account restrictions imposed by cloud providers (e.g., broadcasting support). In most cases, REST or gRPC is the preferred choice for synchronous request-response communication because of relative simplicity, team experience, tool support, etc. It is very hard to imagine raw TCP being used for new or extracted microservices.

Message-based communication cannot be considered an alternative to request-response because of its asynchronous nature and single direction (it is possible to use a “reply to” mechanism, but that leads to service coupling). Asynchronous messaging suits event sources (fire and forget) and consumers that build their own picture of the world. For example, a notification about a successful payment is picked up by the order fulfillment system to assemble the delivery and by the finance systems. SQS, Kafka, or another messaging service may be used.
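The payment example can be sketched with an in-memory stand-in for the broker. `InMemoryBus` is a hypothetical class that delivers synchronously so the example stays runnable; a real broker such as SQS or Kafka delivers asynchronously and needs its own client library. The topic name and event fields are also assumptions.

```python
from collections import defaultdict


class InMemoryBus:
    """Toy stand-in for a message broker (not a real SQS/Kafka client)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Fire and forget: the publisher gets nothing back from consumers.
        for handler in self._subscribers[topic]:
            handler(dict(event))  # each consumer receives its own copy


class Fulfillment:
    """Builds its own view: which orders are ready to ship."""

    def __init__(self):
        self.to_ship = []

    def on_payment(self, event):
        self.to_ship.append(event["order_id"])


class Finance:
    """Builds its own view: total revenue received."""

    def __init__(self):
        self.revenue_cents = 0

    def on_payment(self, event):
        self.revenue_cents += event["amount_cents"]
```

The payment service publishes one event and knows nothing about who consumes it; adding a third consumer requires no change on the publishing side.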

Implement Service

Implement the microservice with the selected communication approach, its own database, etc. It makes sense to create a service mock so that the monolith and the other related microservices can be tested against it.
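A service mock can be as small as a class with the same interface as the real client, canned behavior, and a record of the calls it received. The names below (`InventoryClient`, `MockInventoryClient`, `place_order`) are hypothetical and only illustrate the shape.

```python
class InventoryClient:
    """Interface the monolith uses to talk to the extracted service."""

    def reserve(self, sku: str, quantity: int) -> bool:
        raise NotImplementedError


class MockInventoryClient(InventoryClient):
    """In-memory mock: no network, predictable answers, recorded calls."""

    def __init__(self, stock):
        self._stock = dict(stock)
        self.calls = []

    def reserve(self, sku: str, quantity: int) -> bool:
        self.calls.append((sku, quantity))
        if self._stock.get(sku, 0) >= quantity:
            self._stock[sku] -= quantity
            return True
        return False


def place_order(inventory: InventoryClient, sku: str, quantity: int) -> str:
    """Monolith code under test; it only knows the client interface."""
    return "confirmed" if inventory.reserve(sku, quantity) else "rejected"
```

Because `place_order` depends only on the interface, the same test can later run against the real client in an integration environment.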

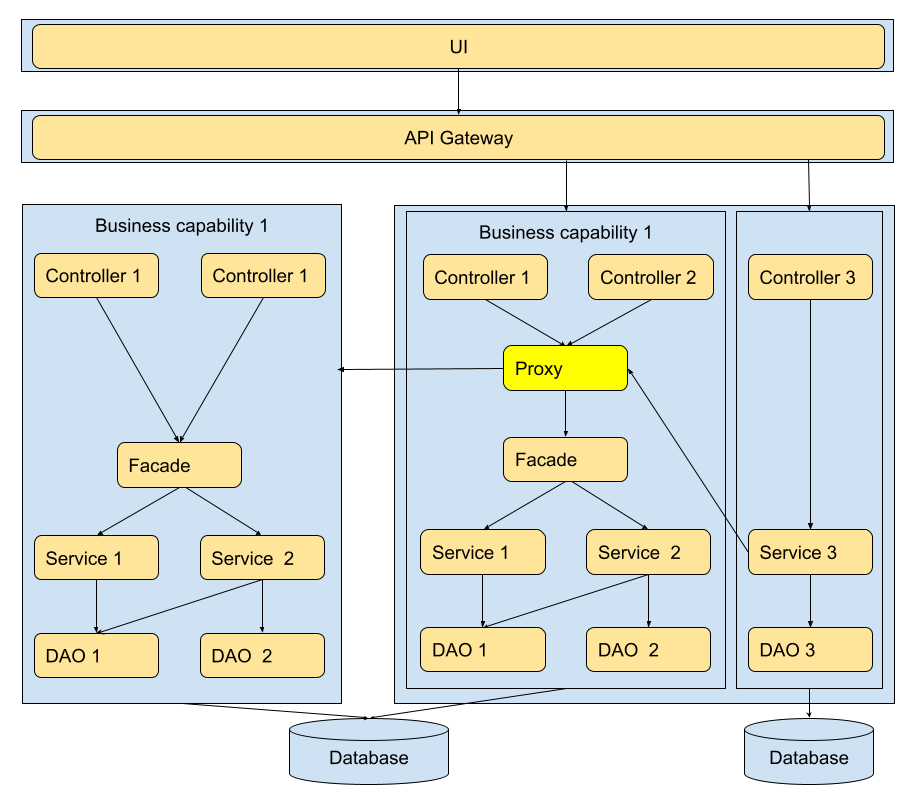

Create Service Proxy

Create a service proxy with one additional responsibility: deciding whether to use the service implementation inside the monolith or the extracted service. This implies that the proxy is able to communicate with the remote (extracted) microservice.

This proxy allows easy switching between the existing service implementation in the monolith and the extracted service. A canary release approach can be used.
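A proxy with percentage-based canary routing might look like the sketch below. Everything here is an assumption for illustration: the class names, the `canary_percent` setting, hashing a routing key with CRC32 so that the same caller always lands on the same side, and the fallback to the monolith when the remote call raises `ConnectionError`.

```python
import zlib


class LocalPricing:
    """Existing implementation inside the monolith (hypothetical)."""

    def quote(self, sku: str) -> dict:
        return {"source": "monolith", "sku": sku}


class RemotePricing:
    """Placeholder for an HTTP/gRPC client of the extracted service."""

    def quote(self, sku: str) -> dict:
        return {"source": "microservice", "sku": sku}


class PricingProxy:
    """Routes a share of calls to the extracted service."""

    def __init__(self, local, remote, canary_percent: int):
        if not 0 <= canary_percent <= 100:
            raise ValueError("canary_percent must be between 0 and 100")
        self._local = local
        self._remote = remote
        self._canary_percent = canary_percent

    def _use_remote(self, routing_key: str) -> bool:
        # Stable bucket 0..99 per key, so a given user is routed consistently.
        bucket = zlib.crc32(routing_key.encode("utf-8")) % 100
        return bucket < self._canary_percent

    def quote(self, sku: str, routing_key: str) -> dict:
        if self._use_remote(routing_key):
            try:
                return self._remote.quote(sku)
            except ConnectionError:
                # Assumed policy: fall back to the monolith on remote failure.
                pass
        return self._local.quote(sku)
```

Raising `canary_percent` step by step from 0 to 100 moves traffic to the microservice gradually, and setting it back to 0 is the rollback.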

Switch to the Microservice Usage

After testing, including a canary release with testing in production, it makes sense to switch to the microservice only and clean the old code out of the monolith.

Check whether the initial goals are achieved and decide whether to stop or continue the splitting process. If you continue, select the next feature and perform the extraction.

Small Additional Note

Yes, I am Ukrainian and I am writing this article from shelter. Glory to Ukraine! Glory to the Heroes!
