Software Testing as a Debugging Tool

Explore how software testing functions as a critical debugging tool, significantly enhancing code reliability and streamlining the development process.

Join the DZone community and get the full member experience.

Join For FreeDebugging is not just about identifying errors — it's about instituting a reliable process for ensuring software health and longevity. In this post, we discuss the role of software testing in debugging, including foundational concepts and how they converge to improve software quality.

As a side note, if you like the content of this and the other posts in this series check out my Debugging book that covers this subject. If you have friends that are learning to code I'd appreciate a reference to my Java Basics book. If you want to get back to Java after a while check out my Java 8 to 21 book.

The Intersection of Debugging and Testing

Debugging and testing play distinct roles in software development. Debugging is the targeted process of identifying and fixing known bugs. Testing, on the other hand, encompasses an adjacent scope, identifying unknown issues by validating expected software behavior across a variety of scenarios.

Both are a part of the debug fix cycle which is a core concept in debugging. Before we cover the cycle we should first make sure we're aligned on the basic terminology.

Unit Tests

Unit tests are tightly linked to debugging efforts, focusing on isolated parts of the application—typically individual functions or methods. Their purpose is to validate that each unit operates correctly in isolation, making them a swift and efficient tool in the debugging arsenal. These tests are characterized by their speed and consistency, enabling developers to run them frequently, sometimes even automatically as code is written within the IDE.

Since software is so tightly bound it is nearly impossible to compose unit tests without extensive mocking. Mocking involves substituting a genuine component with a stand-in that returns predefined results, thus a test method can simulate scenarios without relying on the actual object. This is a powerful yet controversial tool. By using mocking we're in effect creating a synthetic environment that might misrepresent the real world. We're reducing the scope of the test which might perpetuate some bugs.

Integration Tests

Opposite to unit tests, integration tests examine the interactions between multiple units, providing a more comprehensive picture of the system's health. While they cover broader scenarios, their setup can be more complex due to the interactions involved. However, they are crucial in catching bugs that arise from the interplay between different software components.

In general, mocking can be used in integration tests but it is discouraged. They take longer to run and are sometimes harder to set up. However, many developers (myself included) would argue that they are the only benchmark for quality. Most bugs express themselves in the seams between the modules and integration tests are better at detecting that.

Since they are far more important some developers would argue that unit tests are unnecessary. This isn't true, unit test failures are much easier to read and understand. Since they are faster we can run them during development, even while typing. In that sense, the balance between the two approaches is the important part.

Coverage

Coverage is a metric that helps quantify the effectiveness of testing by indicating the proportion of code exercised by tests. It helps identify potential areas of the code that have not been tested, which could harbor undetected bugs. However, striving for 100% coverage can be a case of diminishing returns; the focus should remain on the quality and relevance of the tests rather than the metric itself. In my experience, chasing high coverage numbers often results in bad test practices that persist in problems.

It is my opinion that unit tests should be excluded from coverage metrics due to the importance of integration tests to overall quality. To get a sense of quality coverage should focus on integration and end-to-end tests.

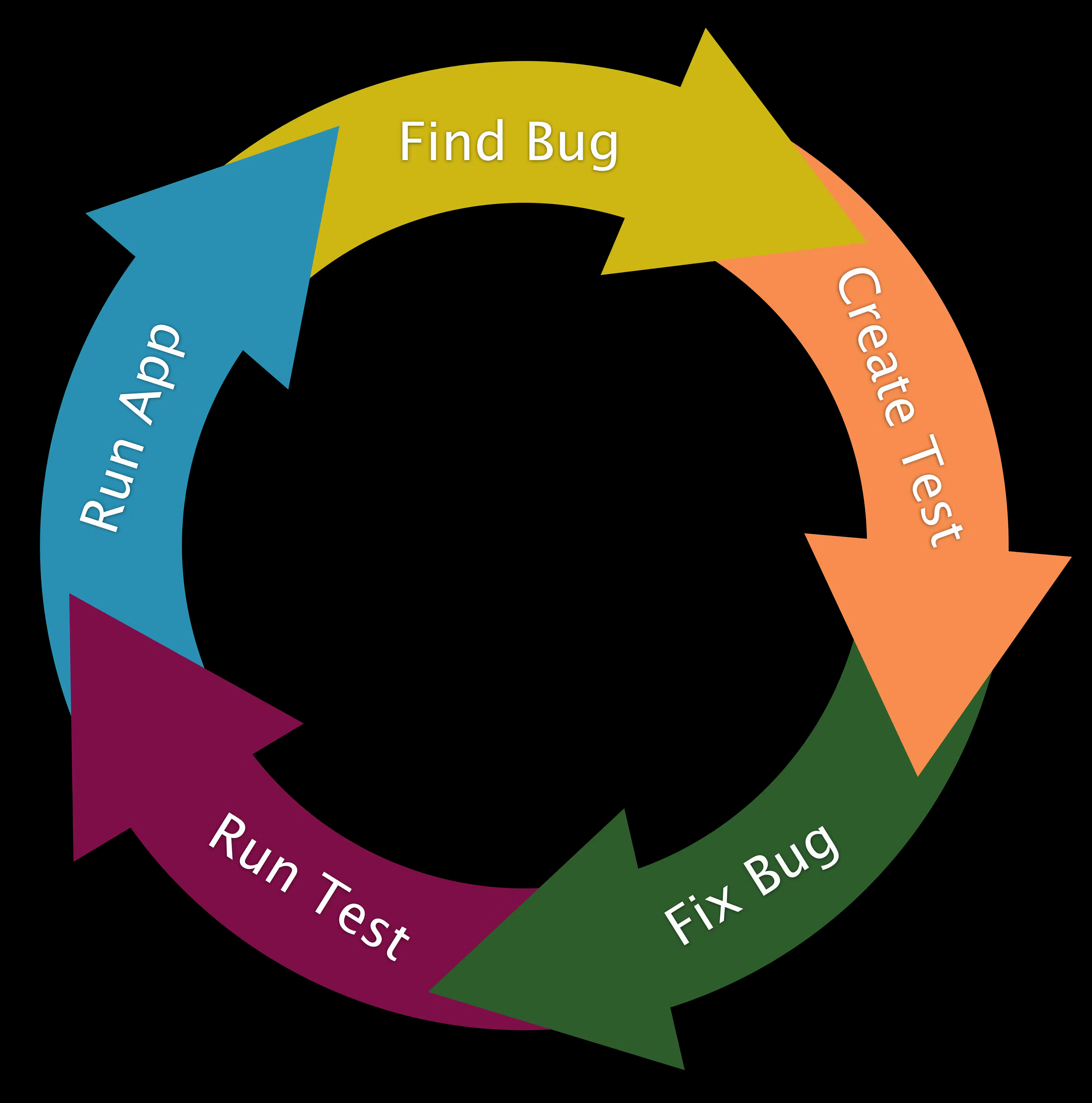

The Debug-Fix Cycle

The debug-fix cycle is a structured approach that integrates testing into the debugging process. The stages include identifying the bug, creating a test that reproduces the bug, fixing the bug, verifying the fix with the test, and finally, running the application to ensure the fix works in the live environment. This cycle emphasizes the importance of testing in not only identifying but also in preventing the recurrence of bugs.

Notice that this is a simplified version of the cycle with a focus on the testing aspect only. The full cycle includes a discussion of the issue tracking and versioning as part of the whole process. I discuss this more in-depth in other posts in the series and my book.

Composing Tests With Debuggers

A powerful feature of using debuggers in test composition is their ability to "jump to line" or "set value." Developers can effectively reset the execution to a point before the test and rerun it with different conditions, without recompiling or rerunning the entire suite. This iterative process is invaluable for achieving desired test constraints and improves the quality of unit tests by refining the input parameters and expected outcomes.

Increasing test coverage is about more than hitting a percentage; it's about ensuring that tests are meaningful and that they contribute to software quality. A debugger can significantly assist in this by identifying untested paths. When a test coverage tool highlights lines or conditions not reached by current tests, the debugger can be used to force execution down those paths. This helps in crafting additional tests that cover missed scenarios, ensuring that the coverage metric is not just a number but a true reflection of the software's tested state.

In this case, you will notice that the next line in the body is a rejectValue call which will throw an exception. I don’t want an exception thrown as I still want to test all the permutations of the method. I can drag the execution pointer (arrow on the left) and place it back at the start of the method.

Test-Driven Development

How does all of this fit with disciplines like Test-Driven Development (TDD)?

It doesn't fit well. Before we get into that let's revisit the basics of TDD. Weak TDD typically means just writing tests before writing the code. Strong TDD involves a red-green-refactor cycle:

- Red: Write a test that fails because the feature it tests isn't implemented yet.

- Green: Write the minimum amount of code necessary to make the test pass.

- Refactor: Clean up the code while ensuring that tests continue to pass.

This rigorous cycle guarantees that new code is continually tested and refactored, reducing the likelihood of complex bugs. It also means that when bugs do appear, they are often easier to isolate and fix due to the modular and well-tested nature of the codebase. At least, that's the theory.

TDD can be especially advantageous for scripting and loosely typed languages. In environments lacking the rigid structure of compilers and linters, TDD steps in to provide the necessary checks that would otherwise be performed during compilation in statically typed languages. It becomes a crucial substitute for compiler/linter checks, ensuring that type and logic errors are caught early.

In real-world application development, TDD's utility is nuanced. While it encourages thorough testing and upfront design, it can sometimes hinder the natural flow of development, especially in complex systems that evolve through numerous iterations. The requirement for 100% test coverage can lead to an unnecessary focus on fulfilling metrics rather than writing meaningful tests.

The biggest problem in TDD is its focus on unit testing. TDD is impractical with integration tests as the process would take too long. But as we determined at the start of this post, integration tests are the true benchmark for quality. In that test TDD is a methodology that provides great quality for arbitrary tests, but not necessarily great quality for the final product. You might have the best cog in the world, but if doesn't fit well into the machine then it isn't great.

Final Word

Debugging is a tool that not only fixes bugs but also actively aids in crafting tests that bolster software quality. By utilizing debuggers in test composition and increasing coverage, developers can create a suite of tests that not only identifies existing issues but also guards against future ones, thus ensuring the delivery of reliable, high-quality software.

Debugging lets us increase coverage and verify edge cases effectively. It's part of a standardized process for issue resolution that's critical for reliability and prevents regressions.

Published at DZone with permission of Shai Almog, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments