Snowflake and Salesforce Integration With AWS AppFlow

This tutorial shows how to integrate a Salesforce data source with the Snowflake cloud data warehouse using AWS AppFlow.


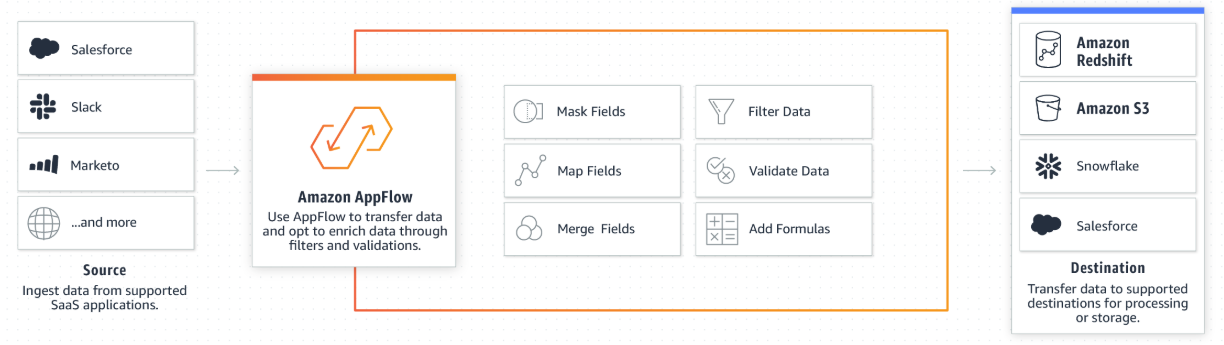

Amazon Web Services has recently announced a new service called AWS AppFlow. It is a fully managed, serverless integration service that allows secure data transfer between Software-as-a-Service (SaaS) applications and services such as Salesforce, ServiceNow, Snowflake, and Amazon Redshift. It supports no-code integration, with field mapping, validation, and merging on the fly.

This article covers integrating Salesforce CRM with Snowflake, one of the most popular cloud data warehouses, using AppFlow.

AWS AppFlow Integration Architecture

The generic AWS AppFlow integration architecture looks like this:

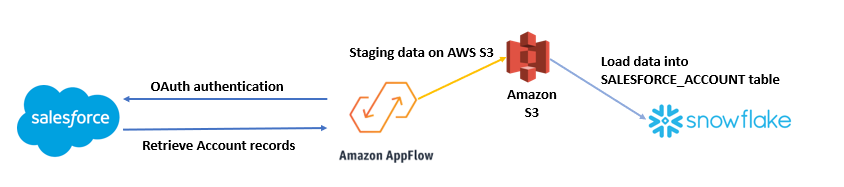

For our use case of integrating Salesforce and Snowflake, we will dive into the following architecture:

Snowflake Configuration

First, we need to set up the Snowflake side with an external Amazon S3 stage and a warehouse.
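If a suitable warehouse does not exist yet, one can be created from a Snowflake worksheet. The following is a minimal sketch; the name `appflow_wh` and the size and suspend settings are illustrative assumptions, not values used in this setup:

```sql
-- Hypothetical warehouse for the AppFlow loads (name and settings are assumptions)
CREATE WAREHOUSE IF NOT EXISTS appflow_wh
  WITH WAREHOUSE_SIZE = 'XSMALL'  -- smallest size; enough for a small Account table
       AUTO_SUSPEND = 60          -- suspend after 60 seconds of inactivity
       AUTO_RESUME = TRUE;        -- resume automatically when a query arrives
```

Auto-suspend and auto-resume keep an on-demand flow from paying for an idle warehouse between runs.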

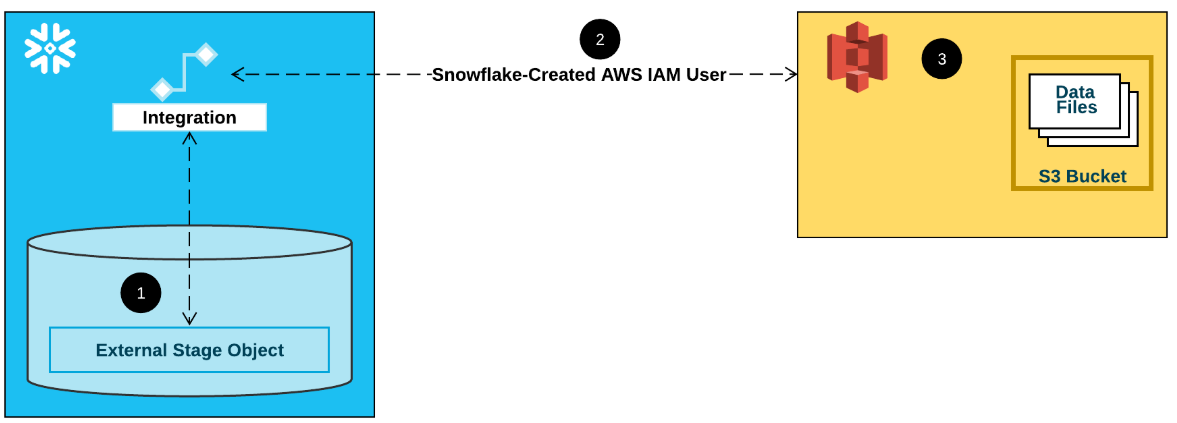

The Snowflake integration with Amazon S3 relies on an AWS IAM user that Snowflake provisions in its own AWS account; this user assumes an IAM role in our account to access the bucket.

The process starts with creating an AWS IAM policy for our S3 bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:GetObjectVersion",
        "s3:DeleteObject",
        "s3:DeleteObjectVersion"
      ],
      "Resource": "arn:aws:s3:::<s3-bucket>/*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::<s3-bucket>",
      "Condition": {
        "StringLike": {
          "s3:prefix": [
            "*"
          ]
        }
      }
    }
  ]
}
```

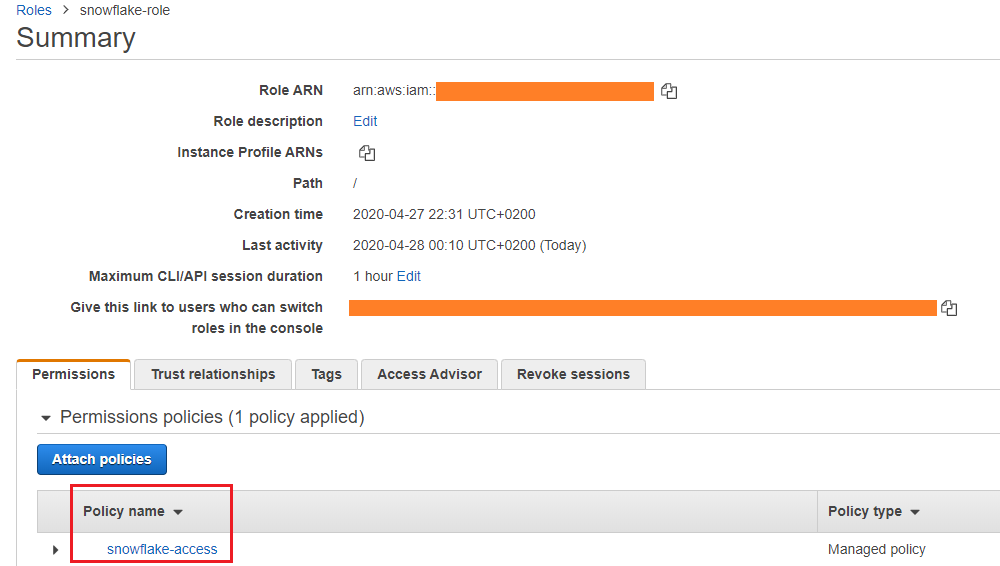

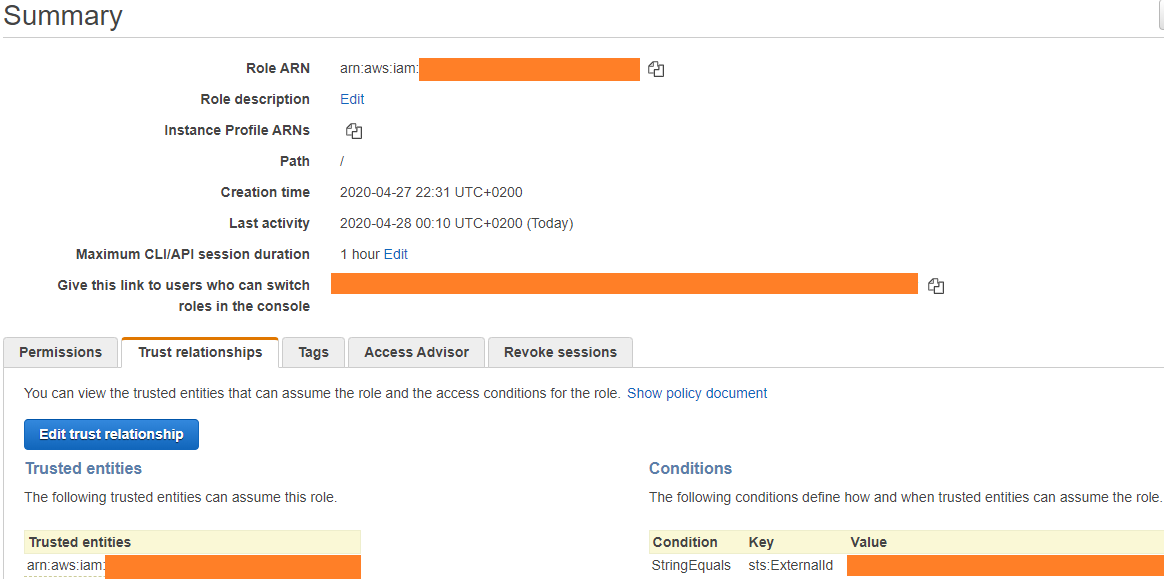

Then we need to create an AWS IAM role and attach this policy to it.

The next step is to create a storage integration in a Snowflake web UI worksheet:

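```sql
-- Snowflake storage integration
CREATE STORAGE INTEGRATION snowflake_s3_integration
  TYPE = EXTERNAL_STAGE
  STORAGE_PROVIDER = 'S3'
  ENABLED = TRUE
  -- ARN of the IAM role created above, in the form arn:aws:iam::<aws-account-id>:role/<role-name>
  STORAGE_AWS_ROLE_ARN = 'arn:aws:iam::<aws-account-id>:role/<snowflake-iam-role>'
  STORAGE_ALLOWED_LOCATIONS = ('s3://<s3-bucket>/');
```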
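If the stage will later be created by a role other than the one that owns the integration, that role needs usage rights on the integration and the right to create stages in the target schema. A sketch, where `appflow_role` and `mydb.public` are hypothetical placeholders:

```sql
-- Let a (hypothetical) role use the integration when creating the stage
GRANT USAGE ON INTEGRATION snowflake_s3_integration TO ROLE appflow_role;

-- Let the same role create stages in the (hypothetical) target schema
GRANT CREATE STAGE ON SCHEMA mydb.public TO ROLE appflow_role;
```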

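We can then retrieve the STORAGE_AWS_IAM_USER_ARN and STORAGE_AWS_EXTERNAL_ID parameters needed to set up the trust relationship between the AWS IAM role and Snowflake. In the role's trust policy, STORAGE_AWS_IAM_USER_ARN is the trusted AWS principal, and STORAGE_AWS_EXTERNAL_ID is the value of the sts:ExternalId condition:

```sql
-- Retrieve IAM parameters for the AWS S3 trust relationship
DESC INTEGRATION snowflake_s3_integration;
```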
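To pick out just the two values needed for the trust relationship, the DESC output can be filtered with RESULT_SCAN. A sketch, assuming the DESC output exposes lowercase `property` and `property_value` columns, as in current Snowflake versions:

```sql
-- Describe the integration first; its output becomes the "last query"
DESC INTEGRATION snowflake_s3_integration;

-- Keep only the two properties the IAM role's trust policy needs
SELECT "property", "property_value"
FROM TABLE(RESULT_SCAN(LAST_QUERY_ID()))
WHERE "property" IN ('STORAGE_AWS_IAM_USER_ARN', 'STORAGE_AWS_EXTERNAL_ID');
```

The two statements must run in the same session, one after the other, because LAST_QUERY_ID() refers to the most recent query.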

As the final step, we need to create a stage in a Snowflake web UI worksheet:

```sql
CREATE STAGE s3stage
  STORAGE_INTEGRATION = snowflake_s3_integration
  URL = 's3://<s3-bucket>/';
```
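Before moving on to AppFlow, the stage can be sanity-checked. DESC STAGE shows the stage definition, and LIST reads the bucket through the integration, so a permissions error at this point would likely point to the IAM role or its trust policy. A sketch:

```sql
-- Show the stage definition (URL, storage integration, file format)
DESC STAGE s3stage;

-- List the files currently under the stage URL via the storage integration
LIST @s3stage;
```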

All the details about setting up an S3 stage for Snowflake can be found in the Snowflake documentation.

AWS AppFlow Configuration

Once we are done on the destination side, we can configure AWS AppFlow.

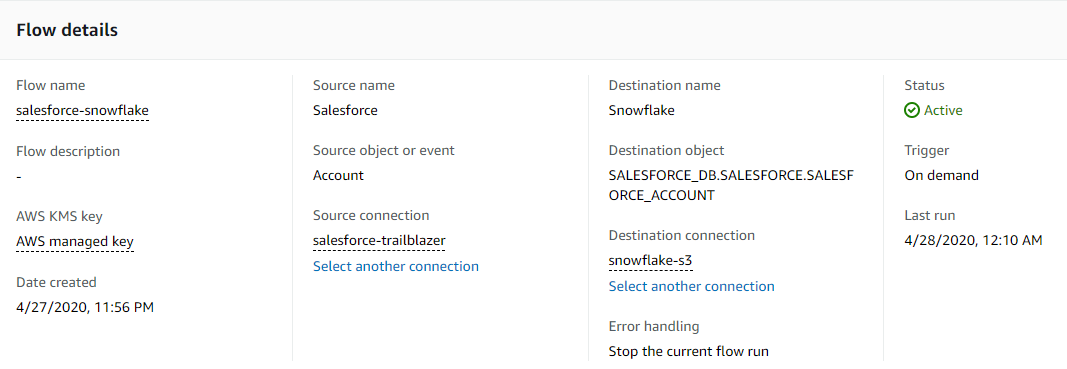

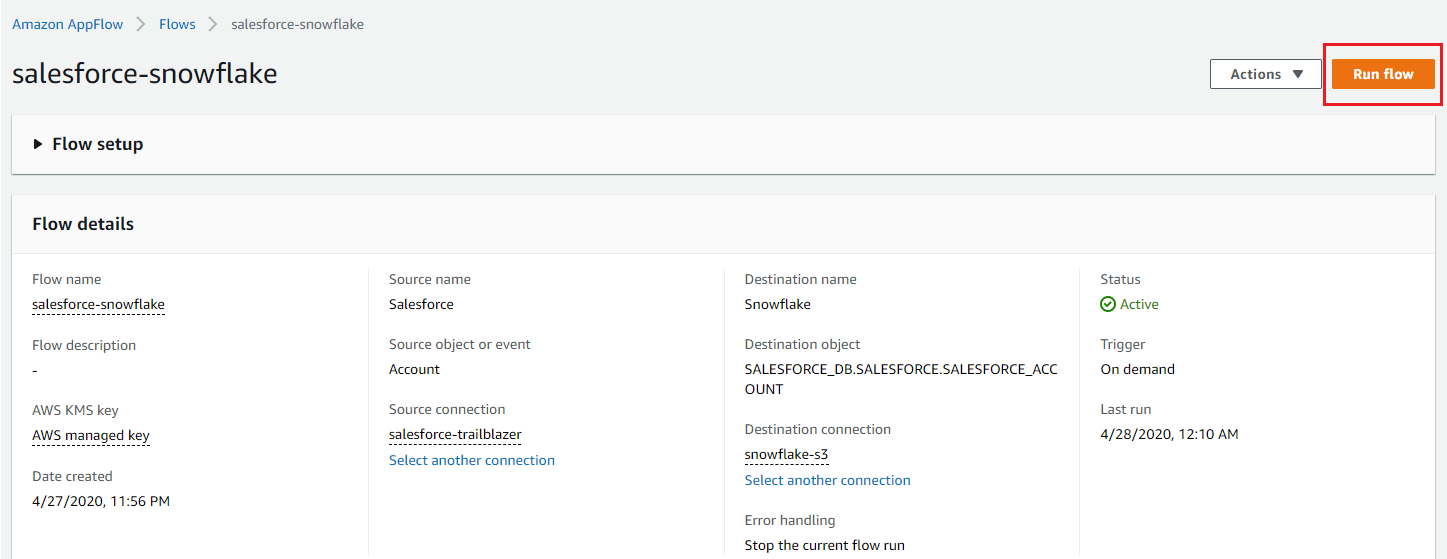

This involves setting up both the source and the destination; the end result looks something like this:

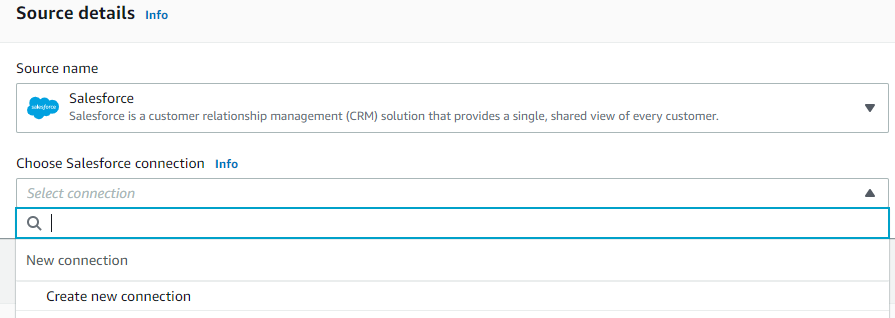

As a source, Salesforce requires nothing more than a connection set up with Salesforce credentials.

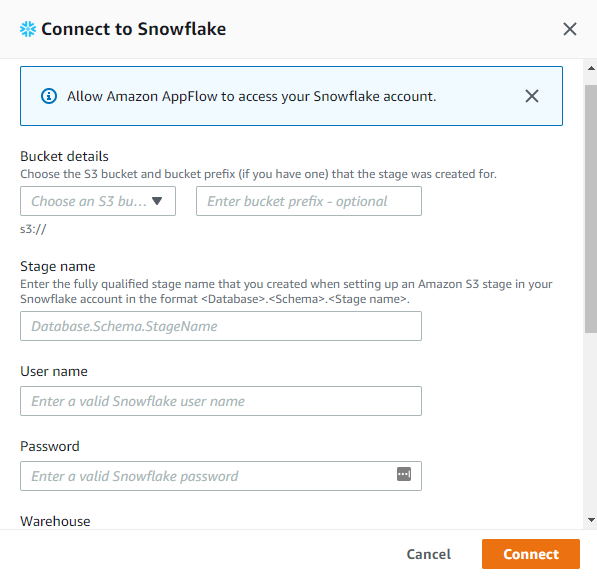

The Snowflake connection requires a few more details, such as the S3 bucket, the stage, the DWH username and password, the warehouse, and the account name.

Once the connection is established, we can define whether the AppFlow flow runs on demand (i.e., manually) or on a schedule. In our case, we chose on demand.
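Several of these values can be looked up with Snowflake context functions. A sketch; the exact account identifier format AppFlow expects (for example, whether a region suffix must accompany the account locator) is an assumption to verify against the AppFlow connection form:

```sql
-- Context values that may help when filling in the AppFlow connection form
SELECT CURRENT_ACCOUNT()   AS account_locator,
       CURRENT_REGION()    AS region,
       CURRENT_USER()      AS user_name,
       CURRENT_WAREHOUSE() AS warehouse,
       CURRENT_DATABASE()  AS database_name,
       CURRENT_SCHEMA()    AS schema_name;
```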

Field Mapping Between Snowflake and Salesforce

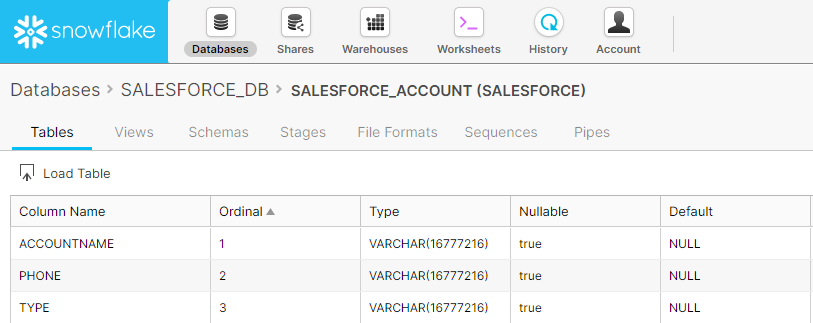

For the sake of simplicity, we created a very basic SALESFORCE_ACCOUNT table in Snowflake that contains nothing more than the account name, phone, and type.

The required field mapping can then be defined in the AWS AppFlow configuration, and we are ready.

Now, when we click the Run flow button, the flow starts and the records are copied from the Salesforce Account object into the Snowflake SALESFORCE_ACCOUNT table:
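The table could be created along these lines. This is a sketch; the column names `ACCOUNT_NAME`, `PHONE`, and `ACCOUNT_TYPE` and the VARCHAR types are assumptions, since the original table definition is not shown:

```sql
-- Minimal target table for the AppFlow load (column names and types are assumptions)
CREATE TABLE IF NOT EXISTS SALESFORCE_ACCOUNT (
  ACCOUNT_NAME VARCHAR,  -- mapped from Salesforce Account.Name
  PHONE        VARCHAR,  -- mapped from Salesforce Account.Phone
  ACCOUNT_TYPE VARCHAR   -- mapped from Salesforce Account.Type
);
```

Plain VARCHAR columns keep the mapping forgiving; stricter types or constraints can be added once the data is understood.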

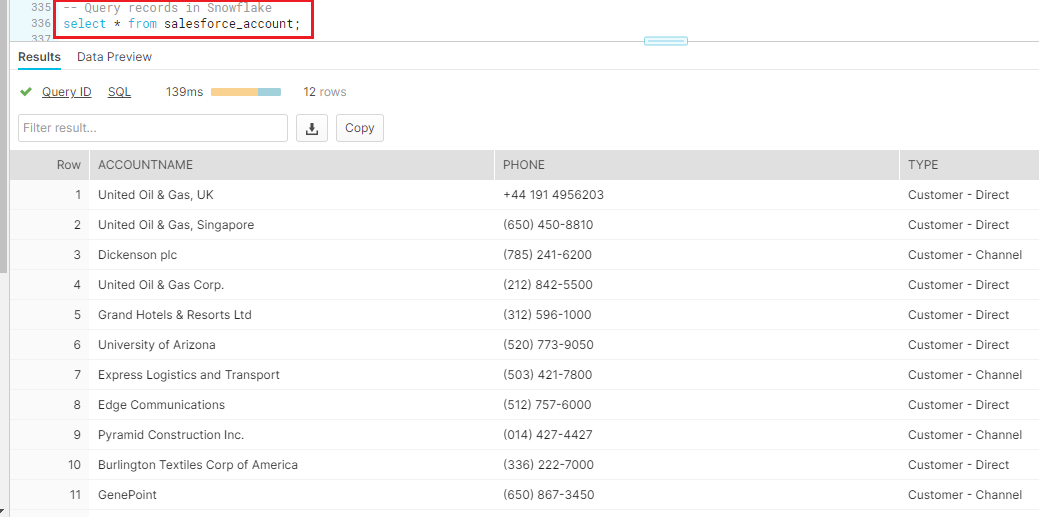

We can verify the records loaded into the Snowflake table with SELECT statements.
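For example, assuming the hypothetical column names `ACCOUNT_NAME`, `PHONE`, and `ACCOUNT_TYPE` (adjust them to the actual table definition), a few checks might look like this:

```sql
-- How many accounts arrived
SELECT COUNT(*) AS account_count
FROM SALESFORCE_ACCOUNT;

-- Inspect a sample of the loaded rows
SELECT ACCOUNT_NAME, PHONE, ACCOUNT_TYPE
FROM SALESFORCE_ACCOUNT
ORDER BY ACCOUNT_NAME
LIMIT 10;

-- Number of accounts per account type
SELECT ACCOUNT_TYPE, COUNT(*) AS accounts
FROM SALESFORCE_ACCOUNT
GROUP BY ACCOUNT_TYPE
ORDER BY accounts DESC;
```

Comparing the count with the number of records AppFlow reports for the run is a quick completeness check.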

Conclusion

With the recently launched AWS AppFlow data integration service, cloud data integration keeps getting easier. No code development is needed: with fairly straightforward configuration, we can securely transfer data from Salesforce CRM, the system of record for our accounts, to the Snowflake data warehouse for analytics and business intelligence. I am looking forward to seeing what other features, sources, and destinations will come.
