Setting up Request Rate Limiting With NGINX Ingress

Master request rate limits in Kubernetes with NGINX Ingress. Set up, test, and secure web apps using NGINX and Locust for peak performance.

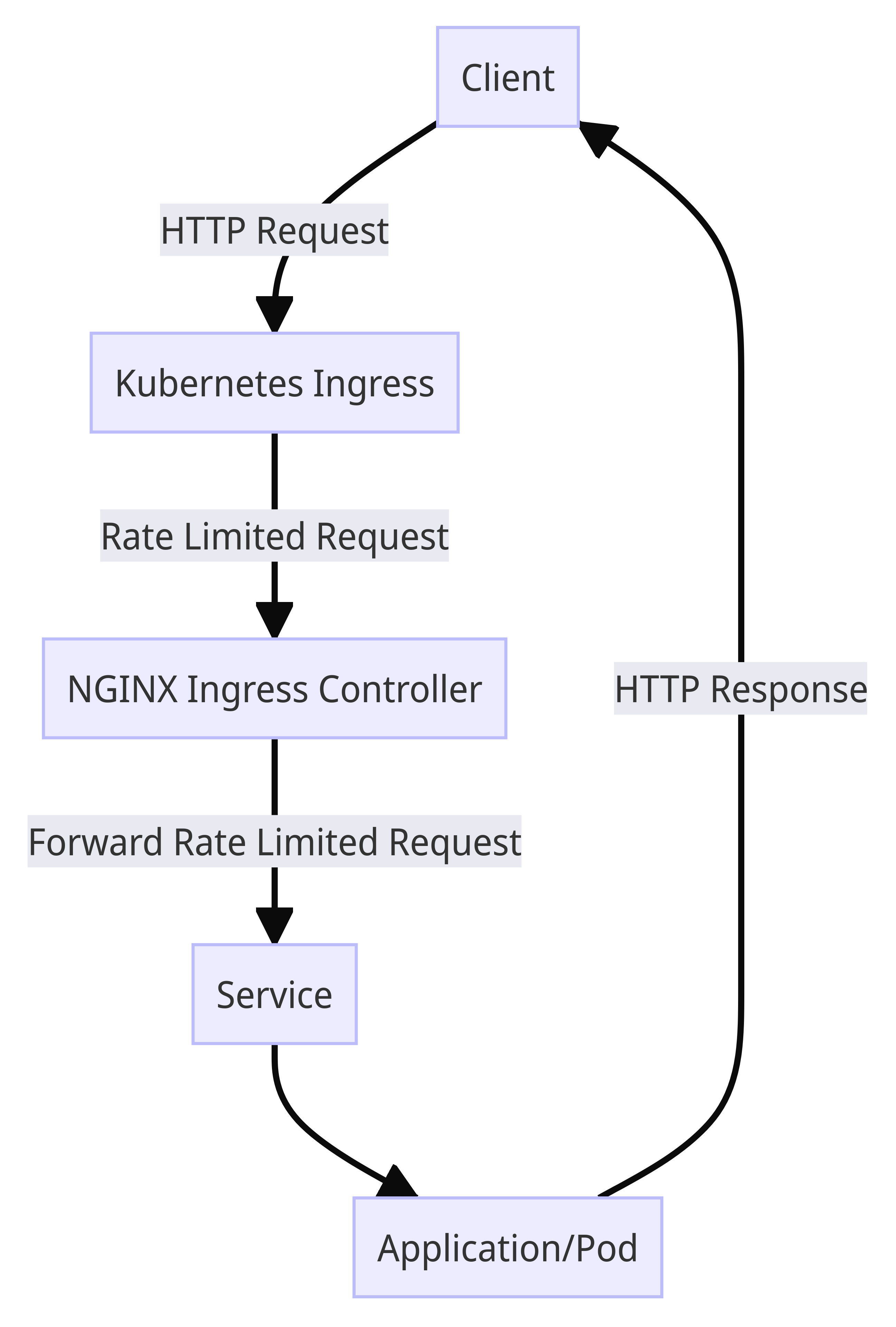

In today's highly interconnected digital landscape, web applications face the constant challenge of handling a high volume of incoming requests. However, not all requests are equal, and excessive traffic can put a strain on resources, leading to service disruptions or even potential security risks. To address this, implementing request rate limiting is crucial to preserve the stability and security of your environment.

Request rate limiting allows you to control the number of requests per unit of time that a server or application can handle. By setting limits, you can prevent abuse, manage resource allocation, and mitigate the risk of malicious attacks such as DDoS or brute-force attempts. In this article, we will explore how to set up request rate limiting using NGINX Ingress, a popular Kubernetes Ingress Controller. We will also demonstrate how to test the rate-limiting configuration using Locust, a load-testing tool.
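To make the idea concrete, here is a minimal Python sketch of a leaky-bucket limiter, the method that NGINX's limit_req module is documented to use. It is a simplified model for illustration only: the class name LeakyBucket and its fields are hypothetical and do not mirror NGINX's actual implementation. A client may run ahead of the allowed rate by at most burst requests, and that excess drains at rate requests per second.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeakyBucket:
    """Simplified leaky-bucket limiter (illustrative, not NGINX's code)."""
    rate: float                    # allowed requests per second
    burst: int                     # extra requests tolerated above the rate
    excess: float = 0.0            # how far the client is ahead of the rate
    last: Optional[float] = None   # time of the last accepted request

    def allow(self, now: float) -> bool:
        if self.last is None:
            # The first request from a client is always accepted.
            self.last = now
            return True
        # Drain the bucket for the elapsed time, then count this request.
        excess = max(self.excess - self.rate * (now - self.last), 0.0) + 1.0
        if excess > self.burst:
            return False  # over the limit: reject and leave state unchanged
        self.excess = excess
        self.last = now
        return True


bucket = LeakyBucket(rate=10, burst=50)
accepted = sum(bucket.allow(0.0) for _ in range(100))
print(accepted)  # 51: the first request plus a burst of 50
```

With rate=10 and burst=50, 100 requests arriving at the same instant yield 51 accepted requests. The remaining ones are rejected until enough time has passed for the bucket to drain.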

Creating a Kubernetes Deployment

To create the NGINX deployment, save the following YAML content to a file named nginx-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 80

Apply the deployment with the following kubectl command:

    kubectl apply -f nginx-deployment.yaml

Creating a Kubernetes Service

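To expose the deployment inside the cluster, save the following YAML content to a file named nginx-service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
      type: ClusterIP

Apply the service with the following kubectl command:

    kubectl apply -f nginx-service.yaml

Installing NGINX Ingress Controller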

Next, we need to install the NGINX Ingress Controller. NGINX is a popular choice for managing ingress traffic in Kubernetes due to its efficiency and flexibility. The Ingress Controller extends NGINX to act as an entry point for external traffic into the cluster.

To install the NGINX Ingress Controller, we will use Helm, a package manager for Kubernetes applications. Helm simplifies the deployment process by providing a standardized way to package, deploy, and manage applications in Kubernetes.

Before proceeding, make sure you have Helm installed on your system. You can follow the official Helm documentation to install it.

Once Helm is installed, run the following command to add the NGINX Ingress Helm repository:

    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

Then install the NGINX Ingress Controller from that repository:

    helm install my-nginx ingress-nginx/ingress-nginx

Creating a Kubernetes Ingress Resource

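Create a file named rate-limit-ingress.yaml and add the following YAML content:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: rate-limit-ingress
      annotations:
        nginx.ingress.kubernetes.io/limit-rps: "10"
        nginx.ingress.kubernetes.io/limit-rpm: "100"
        nginx.ingress.kubernetes.io/limit-rph: "1000"
        nginx.ingress.kubernetes.io/limit-connections: "100"
    spec:
      # Assumption: "nginx" is the IngressClass created by the Helm chart's
      # defaults; without a class, the controller may ignore this Ingress.
      ingressClassName: nginx
      rules:
        - http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: nginx-service
                    port:
                      number: 80

The annotations set the following limits, which ingress-nginx applies per client IP address:

- 10 requests per second (limit-rps)

- 100 requests per minute (limit-rpm)

- 1000 requests per hour (limit-rph)

- 100 concurrent connections (limit-connections)

The ingress-nginx annotation reference documents limit-rps, limit-rpm, and limit-connections; confirm that limit-rph is supported by your controller version before relying on it.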
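These rates are not hard per-second cutoffs. According to the ingress-nginx annotation documentation, short bursts are tolerated up to the limit multiplied by a burst multiplier, which defaults to 5 and can be changed with the nginx.ingress.kubernetes.io/limit-burst-multiplier annotation. The Python sketch below only performs that arithmetic for the values used here; the function name burst_size is hypothetical, and the default should be checked against your controller version.

```python
# Documented ingress-nginx default; verify it for your controller version.
DEFAULT_BURST_MULTIPLIER = 5


def burst_size(limit: int, multiplier: int = DEFAULT_BURST_MULTIPLIER) -> int:
    """Burst allowance derived from a limit-rps or limit-rpm value."""
    return limit * multiplier


limits = {"limit-rps": 10, "limit-rpm": 100}
for name, value in limits.items():
    print(f"{name}={value} -> burst {burst_size(value)}")
```

With the defaults, limit-rps of 10 allows a burst of 50 requests and limit-rpm of 100 allows a burst of 500, so a load test needs to exceed those bursts before rejections appear.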

Apply the Ingress resource with the following kubectl command:

    kubectl apply -f rate-limit-ingress.yaml

Introduction to Locust UI

Before we test the rate-limiting configuration, let's briefly introduce Locust, a popular open-source load-testing tool. Locust is designed to simulate a large number of concurrent users accessing a system and measure its performance under different loads.

Locust offers a user-friendly web UI that allows you to define test scenarios using Python code and monitor the test results in real time. It supports distributed testing, making it ideal for running load tests from Kubernetes clusters.

To try Locust locally, install it with pip:

    pip install locust

Then start it by running the locust command in a directory that contains your locustfile:

    locust

Running Locust from Kubernetes

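To run Locust inside the cluster, save the following YAML content to a file named locust-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: locust-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: locust
      template:
        metadata:
          labels:
            app: locust
        spec:
          containers:
            - name: locust
              image: locustio/locust:latest
              command:
                - locust
              args:
                - -f
                - /locust-tasks/tasks.py
                - --host
                - http://nginx-service.default.svc.cluster.local
              ports:
                - containerPort: 8089

The --host argument sets the default target to the NGINX service's in-cluster address, http://nginx-service.default.svc.cluster.local. The locustfile referenced by -f (/locust-tasks/tasks.py) must exist inside the container, for example mounted from a ConfigMap; this deployment does not create it.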

Apply the deployment with the following kubectl command:

    kubectl apply -f locust-deployment.yaml

Then forward the Locust web port to your machine:

    kubectl port-forward deployment/locust-deployment 8089:8089

The Locust UI is now accessible at http://localhost:8089. Open that address in a web browser.

Now, let's set up a simple test scenario in Locust to verify the rate limiting.

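- In the Locust UI, enter the desired number of users to simulate and the spawn rate.

- Set the target host to the hostname or public IP associated with the NGINX Ingress Controller, so that requests pass through the Ingress and its rate limits instead of going straight to the service.

- Make sure the locustfile (/locust-tasks/tasks.py in the deployment above) defines a task that sends a GET request to /index.html.

- Start the test and monitor the results.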

Locust will simulate the specified number of users, each sending requests through the NGINX Ingress Controller to the NGINX service. The controller enforces the configured limits: requests that exceed them are rejected and show up as failures in the Locust statistics.
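For a quick sanity check outside Locust, a short standard-library Python script can send a batch of requests and tally the response codes. This is a sketch under assumptions: it treats HTTP 503 as the rejection status (the documented ingress-nginx default, which can be changed in the controller configuration), the helper names fetch_status, summarize, and probe are hypothetical, and the address in the usage comment is a placeholder.

```python
from collections import Counter
from urllib import error, request

REJECT_STATUS = 503  # assumed ingress-nginx default for rate-limited requests


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Send one GET request and return its HTTP status code."""
    try:
        with request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except error.HTTPError as exc:
        # urllib raises on 4xx/5xx; the status code is on the exception.
        return exc.code


def summarize(statuses):
    """Return per-status counts and the fraction of rejected requests."""
    counts = Counter(statuses)
    total = sum(counts.values())
    rejected = counts[REJECT_STATUS]
    return dict(counts), (rejected / total if total else 0.0)


def probe(url: str, n: int = 200):
    """Fire n sequential requests at url and summarize the responses."""
    return summarize(fetch_status(url) for _ in range(n))


# Example (placeholder address; replace with your Ingress hostname or IP):
# counts, rejected_share = probe("http://203.0.113.10/index.html")
# print(counts, f"{rejected_share:.0%} rejected")
```

If rate limiting is active, the counts should contain a mix of 200 and 503 responses once the batch outpaces the configured rate and burst. A result with only 200 responses suggests the requests are too slow to hit the limit or are not passing through the Ingress.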

Conclusion

Implementing request rate limiting is essential for preserving the stability and security of your web applications. In this article, we explored how to set up request rate limiting using NGINX Ingress, a popular Kubernetes Ingress Controller. We also demonstrated how to test the rate-limiting configuration using Locust, a powerful load-testing tool.

By following the steps outlined in this article, entry-level DevOps engineers can gain hands-on experience in setting up request rate limiting and verifying its effectiveness. Remember to adjust the rate limits and test scenarios according to your specific requirements and application characteristics.

Rate limiting is a powerful tool, but it's important to use it judiciously. While it helps prevent abuse and protect your resources, overly strict rate limits can hinder legitimate traffic and user experience. Consider the nature of your application and the expected traffic patterns, and consult with stakeholders to determine appropriate rate limits.

With the knowledge gained from this article, you are well-equipped to implement request rate limiting in your Kubernetes environment and ensure the stability and security of your web applications.
