Send Slack Notification When Pod Is in "CrashLoopBackOff" State

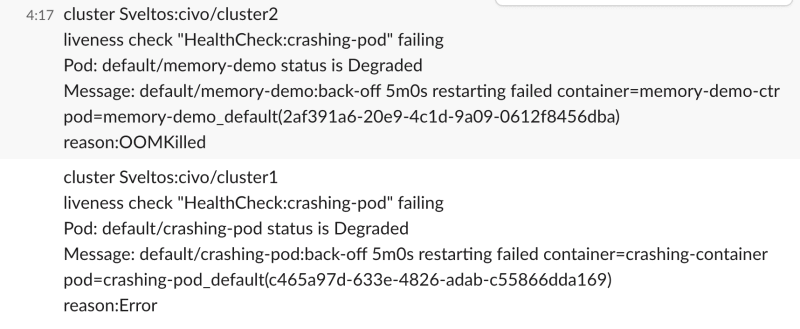

Sveltos can be configured to detect pods in a "CrashLoopBackOff" state within any of the managed clusters and send immediate Slack notifications.

Join the DZone community and get the full member experience.

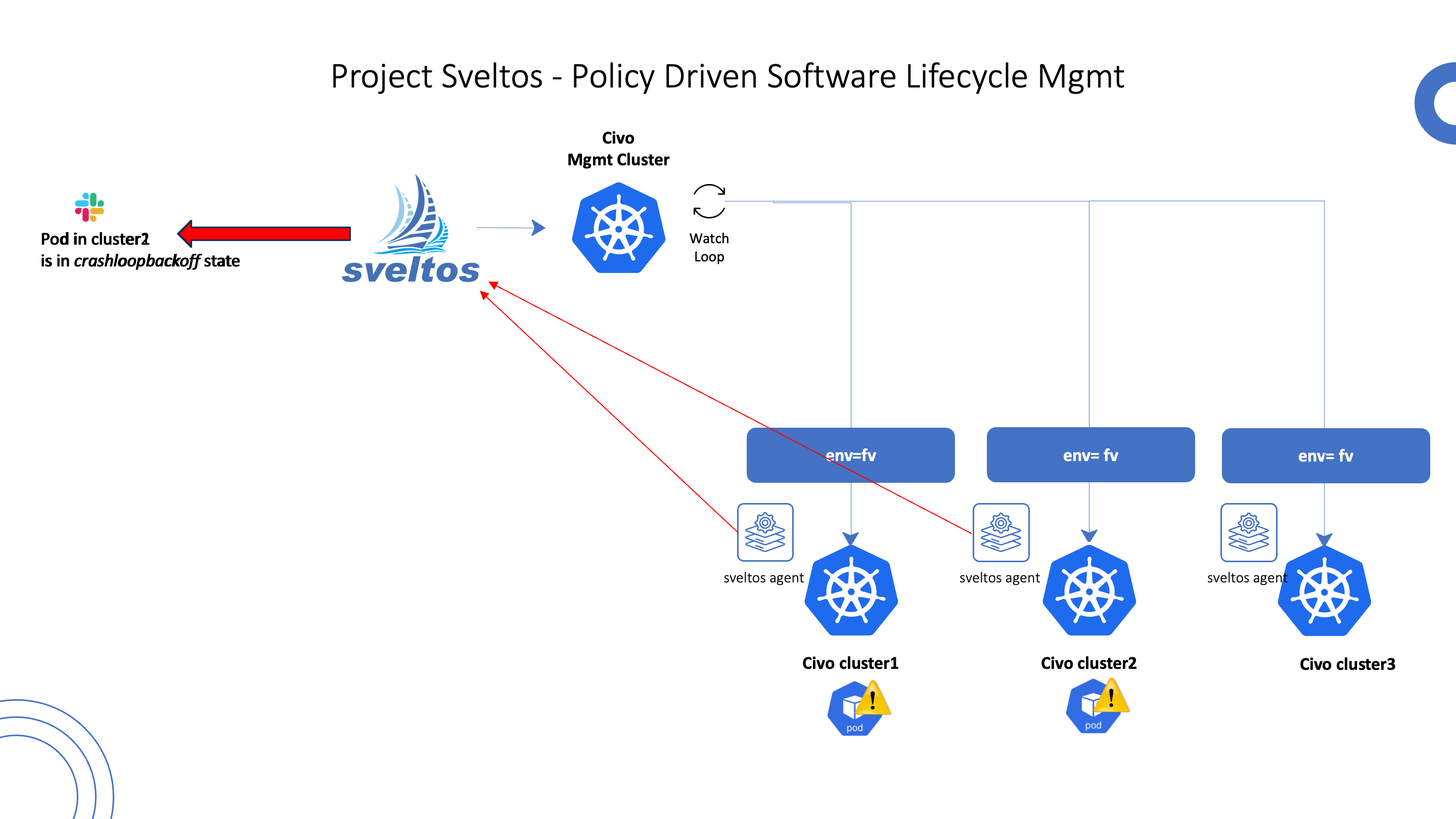

Projectsveltos is a Kubernetes add-on controller that simplifies the deployment and management of add-ons and applications across multiple clusters. It runs in the management cluster and can programmatically deploy and manage add-ons and applications on any cluster in the fleet, including the management cluster itself. Sveltos supports a variety of add-on formats, including Helm charts, raw YAML, Kustomize, Carvel ytt, and Jsonnet.

Projectsveltos, though, goes beyond managing add-ons and applications across a fleet of Kubernetes clusters. It can also proactively monitor cluster health and send real-time notifications.

For example, Sveltos can be configured to detect pods in a "CrashLoopBackOff" state within any of the managed clusters and send immediate Slack notifications, alerting administrators to potential issues.

Detect a Pod in "CrashLoopBackOff" State

Projectsveltos has two custom resource definitions to achieve this goal:

1. HealthCheck defines what to monitor. It accepts a Lua script. Sveltos's monitoring capabilities extend to all Kubernetes resources, including custom resources, ensuring comprehensive oversight of your infrastructure.

2. ClusterHealthCheck defines which clusters to monitor and where to send notifications.
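To make the detection logic concrete, here is a trimmed sketch of the status Kubernetes reports for a pod whose container is crash-looping. The field names (`containerStatuses`, `state.waiting.reason`, `lastState.terminated.reason`) come from the core Pod API and are the ones the Lua script in the HealthCheck reads through `obj`. The container name, restart count, and message text are made up for illustration.

```yaml
# Illustrative fragment of a Pod's status (values are hypothetical)
status:
  containerStatuses:
  - name: app                      # hypothetical container name
    restartCount: 5
    state:
      waiting:
        reason: CrashLoopBackOff   # the value the script compares against
        message: back-off restarting failed container   # shortened, illustrative text
    lastState:
      terminated:
        reason: Error              # appended to the notification as "reason:"
        exitCode: 1
```

A pod whose containers are all running has no `state.waiting` entry, so the script leaves it marked as healthy and ignored.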

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: HealthCheck
    metadata:
      name: crashing-pod
    spec:
      group: ""
      version: v1
      kind: Pod
      script: |
        function evaluate()
          hs = {}
          -- Default: the pod is healthy and is left out of the report
          hs.status = "Healthy"
          hs.ignore = true
          if obj.status.containerStatuses then
            local containerStatuses = obj.status.containerStatuses
            for _, containerStatus in ipairs(containerStatuses) do
              -- A container waiting with reason CrashLoopBackOff marks the pod as degraded
              if containerStatus.state.waiting and containerStatus.state.waiting.reason == "CrashLoopBackOff" then
                hs.status = "Degraded"
                hs.ignore = false
                hs.message = obj.metadata.namespace .. "/" .. obj.metadata.name .. ":" .. containerStatus.state.waiting.message
                -- Add the reason for the last termination, when available
                if containerStatus.lastState.terminated and containerStatus.lastState.terminated.reason then
                  hs.message = hs.message .. "\nreason:" .. containerStatus.lastState.terminated.reason
                end
              end
            end
          end
          return hs
        end
    ---
    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: ClusterHealthCheck
    metadata:
      name: crashing-pod
    spec:
      clusterSelector: env=fv
      livenessChecks:
      - name: crashing-pod
        type: HealthCheck
        livenessSourceRef:
          kind: HealthCheck
          apiVersion: lib.projectsveltos.io/v1alpha1
          name: crashing-pod
      notifications:
      - name: slack
        type: Slack
        notificationRef:
          apiVersion: v1
          kind: Secret
          name: slack
          namespace: default
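The `notificationRef` above points at a Secret named `slack` in the `default` namespace, which the article does not show. A minimal sketch of such a Secret follows. The key names `SLACK_TOKEN` and `SLACK_CHANNEL_ID` are an assumption based on how Sveltos documents Slack notifications, and some releases may also expect a specific Secret type, so check the documentation for your version before relying on it.

```yaml
# Sketch only: key names are assumed, values are placeholders
apiVersion: v1
kind: Secret
metadata:
  name: slack          # must match notificationRef.name
  namespace: default   # must match notificationRef.namespace
stringData:
  SLACK_TOKEN: "<your Slack token>"
  SLACK_CHANNEL_ID: "<your Slack channel ID>"
```

Using `stringData` keeps the manifest readable; Kubernetes base64-encodes the values into `data` when the Secret is stored.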

All YAMLs used in this example can be found here.

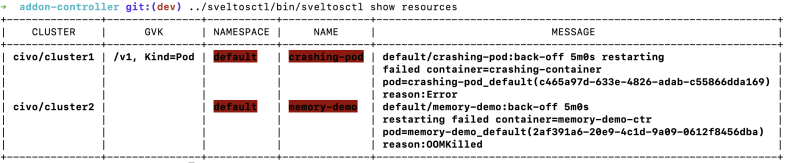

Centralized View

Projectsveltos also offers the ability to display the health of the clusters it manages. This information can then be accessed and displayed using Sveltos' CLI in the management cluster.

Deploy Add-Ons and Applications When an Event Happens

When detecting an event, Sveltos is not limited to just sending notifications. It can also respond to the event by deploying a new set of add-ons and applications.

Projectsveltos has two custom resource definitions to achieve this goal:

1. EventSource defines what an event is. It accepts a Lua script. Sveltos's monitoring capabilities extend to all Kubernetes resources, including custom resources, ensuring comprehensive oversight of your infrastructure.

2. EventBasedAddOn defines in which clusters events need to be detected and which add-ons and applications to deploy in response.

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: EventSource
    metadata:
      name: crashing-pod
    spec:
      group: ""
      version: "v1"
      kind: "Pod"
      collectResources: true
      script: |
        function evaluate()
          hs = {}
          hs.matching = false
          hs.message = ""
          if obj.status.containerStatuses then
            local containerStatuses = obj.status.containerStatuses
            for _, containerStatus in ipairs(containerStatuses) do
              if containerStatus.state.waiting and containerStatus.state.waiting.reason == "CrashLoopBackOff" then
                hs.matching = true
                hs.message = obj.metadata.namespace .. "/" .. obj.metadata.name .. ":" .. containerStatus.state.waiting.message
                if containerStatus.lastState.terminated and containerStatus.lastState.terminated.reason then
                  hs.message = hs.message .. "\nreason:" .. containerStatus.lastState.terminated.reason
                end
              end
            end
          end
          return hs
        end
    ---
    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: EventBasedAddOn
    metadata:
      name: hc
    spec:
      sourceClusterSelector: env=fv
      eventSourceName: crashing-pod
      oneForEvent: true
      stopMatchingBehavior: LeavePolicies
      policyRefs:
      - name: k8s-collector
        namespace: default
        kind: ConfigMap

The ConfigMap referenced by the EventBasedAddOn instance contains all the resources that will be deployed in each cluster where a Pod in a crashing state is found.

In this case:

- A PersistentVolumeClaim.

- A Job containing a Kubernetes collector instance. This Job collects logs and Kubernetes resources and saves them in the corresponding volume.

- A ConfigMap containing the Kubernetes collector configuration (which logs and resources to collect).

It is important to note that the job is defined as a template. This template allows Sveltos to dynamically instantiate the Job at deployment time, utilizing the metadata information from the crashing pod.
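As a rough illustration of what "defined as a template" can look like, the sketch below embeds a Job in the `k8s-collector` ConfigMap and fills in its name and namespace from the crashing pod. It is not the manifest used in the article. The `projectsveltos.io/instantiate` annotation and the `.Resource` template variable are assumptions based on how Sveltos documents event-driven templates, and the Job name prefix and container image are placeholders.

```yaml
# Sketch only: not the article's actual ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: k8s-collector        # referenced by policyRefs in the EventBasedAddOn
  namespace: default
  annotations:
    projectsveltos.io/instantiate: ok   # assumption: marks the content as a template
data:
  job.yaml: |
    apiVersion: batch/v1
    kind: Job
    metadata:
      # .Resource is assumed to be the crashing Pod collected by the EventSource
      name: collect-{{ .Resource.metadata.name }}
      namespace: {{ .Resource.metadata.namespace }}
    spec:
      backoffLimit: 0
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: collector
            image: <collector-image>   # placeholder, not a real image reference
```

Because `oneForEvent` is set to `true` in the EventBasedAddOn, one such Job would be instantiated per crashing pod rather than one per cluster.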

All YAMLs used in this example can be found here.

The Kubernetes collector used in this example can be found here.

Support This Project

If you enjoyed this article, please check out the Projectsveltos GitHub repo.

You can also star the project if you found it helpful.

The GitHub repo is a great resource for getting started with the project. It contains the code, documentation, and examples, along with the latest news and updates on the project.

Thank you for reading!

Published at DZone with permission of Gianluca Mardente. See the original article here.
