Searchable Pod Logs on Kubernetes in Minutes

Containers generate huge volumes of log data. In a production environment, SREs and DevOps engineers need a central location to query and analyse these logs. In this post we look at Parseable, a simple log observability platform that lets you store logs efficiently and query them easily to fix real-world problems.

Log data, as it grows in importance and sheer volume, increasingly looks like analytical data. Both arrive as small, semi-structured records in high volumes, and both hold critical insights waiting to be captured, so the boundary between a log and an analytical event is as thin as ever.

Organisations are adapting by switching to OLAP stores like ClickHouse for their log storage. But OLAP platforms are essentially databases, built for BI-type use cases. This leaves a huge gap in the overall experience: many important logging features, such as alerts, correlation, and deep dives into an incident, are not available.

This gap led to Parseable, a modern, scalable, cloud-native log storage system that works and scales like an OLAP system, but behaves like a log storage and observability platform.

Logging is a routine activity for any software-enabled business. Logs help keep the business running and customers happy. Given the patterns discussed above, there is a clear need for a developer-friendly platform that is easy to deploy and maintain, and that paves the way for developers instead of getting in their way. Parseable is a simple, efficient log storage and observability platform. We're aiming at:

- Ownership of data and content

- Simplicity in deployment and usage; deploy anywhere

- Kubernetes-native

- OLAP DB-like architecture, logging platform-like behaviour

Head over to Parseable community Slack with any questions, comments, or feedback!

Getting Started on Kubernetes

With the introductions out of the way, let's take a look at how to set up and run Parseable. We'll see how easy it is to get to searchable logs with Parseable.

Prerequisites

- S3 or compatible buckets as the storage layer.

- kubectl and Helm installed on your machine.

Install on Kubernetes with Helm

We'll use a Parseable Helm chart to deploy the Parseable image. To get started in demo mode, use the commands:

helm repo add parseable https://charts.parseable.io/

kubectl create namespace parseable

helm install parseable parseable/parseable --namespace parseable --set parseable.demo=true

Note that we're using Parseable in demo mode here (--set parseable.demo=true), which means Parseable will use a publicly hosted bucket. This is enough to get an idea of how Parseable works, but for anything beyond a trial, make sure to configure Parseable to use your own S3 bucket. Refer to the Configuration Options section for details on how to set up your own bucket.

Access the GUI

Let's first expose the Parseable service (created by the Helm chart) to localhost, so it is accessible on localhost:8000.

kubectl port-forward svc/parseable -n parseable 8000:80

You should now be able to point your browser to http://localhost:8000 and see the Parseable login page. In demo mode, the username and password are both set to parseable. Log in with these credentials. Right now, you may see an empty page, because we've not sent any data. Let's send some data to the demo instance now.

Sending and Querying Logs

Before sending logs, we need to create a log stream. Use curl to create a log stream as shown below, replacing <stream-name> with the name you intend to use for the log stream.

curl --location --request PUT 'http://localhost:8000/api/v1/logstream/<stream-name>' \
--header 'Authorization: Basic cGFyc2VhYmxlOnBhcnNlYWJsZQ=='

Next, let's send log data to this log stream.

curl --location --request POST 'http://localhost:8000/api/v1/logstream/<stream-name>' \

--header 'X-P-META-meta1: value1' \

--header 'X-P-TAG-tag1: value1' \

--header 'Authorization: Basic cGFyc2VhYmxlOnBhcnNlYWJsZQ==' \

--header 'Content-Type: application/json' \

--data-raw '[

{

"id": "434a5f5e-2f5f-11ed-a261-0242ac120002",

"datetime": "24/Jun/2022:14:12:15 +0000",

"host": "153.10.110.81",

"user-identifier": "Mozilla/5.0 Gecko/20100101 Firefox/64.0",

"method": "PUT",

"status": 500,

"referrer": "http://www.google.com/"

}

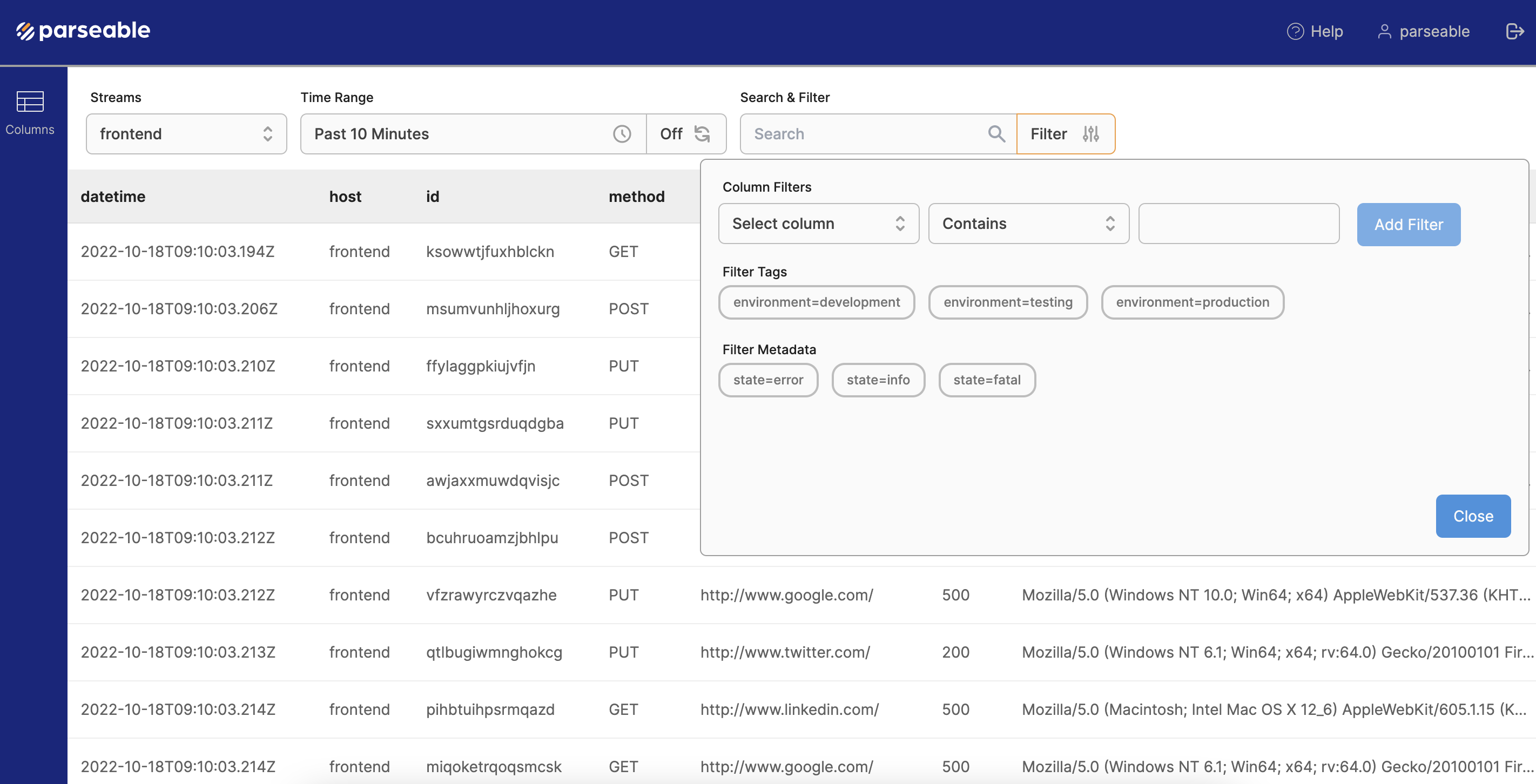

]'Now that we've sent data to the Parseable demo instance, switch to the Parseable dashboard on your browser and select the log stream you just created. You should see the log data we just sent.
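The Authorization header in the curl commands above is standard HTTP Basic authentication: the string username:password, base64-encoded. Once you move off the demo credentials you will need to compute your own value. A minimal sketch, assuming the base64 utility is available on your machine (basic_auth_header is a hypothetical helper name, not part of Parseable):

```shell
# Build an HTTP Basic Authorization header value from a username and password.
# basic_auth_header is a hypothetical helper, not part of Parseable.
basic_auth_header() {
  # printf (not echo) keeps a trailing newline out of the encoded input;
  # tr strips the line wrapping some base64 builds add to long output.
  printf 'Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

# The demo credentials reproduce the header value used in this post.
basic_auth_header parseable parseable
# prints: Basic cGFyc2VhYmxlOnBhcnNlYWJsZQ==
```

You can then pass the result to curl with --header "Authorization: $(basic_auth_header <username> <password>)".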

You can also use the Query API to run SQL queries on the log data directly. Refer to the Parseable documentation for details.
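As a rough sketch of what a Query API call could look like from the command line: the endpoint path (/api/v1/query) and the JSON field names (query, startTime, endTime) below are assumptions based on my understanding of the API, so check them against the Parseable documentation before relying on them. The helper names query_payload and run_query are hypothetical.

```shell
# Build the JSON body for a SQL query over a time range.
# Assumption: the field names query, startTime and endTime match the Query API.
query_payload() {
  printf '{"query": "select * from %s", "startTime": "%s", "endTime": "%s"}\n' \
    "$1" "$2" "$3"
}

# Send the query to the port-forwarded demo instance.
# Assumption: the Query API is served at /api/v1/query.
run_query() {
  curl --silent --location --request POST 'http://localhost:8000/api/v1/query' \
    --header 'Authorization: Basic cGFyc2VhYmxlOnBhcnNlYWJsZQ==' \
    --header 'Content-Type: application/json' \
    --data-raw "$(query_payload "$1" "$2" "$3")"
}
```

For example, run_query <stream-name> <start-time> <end-time> would post a select * over that stream for the given time range.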

Configuration Options

To configure Parseable to use your own S3 or S3-compatible bucket, you can set variables via the Helm command line (e.g. --set parseable.env.P_S3_URL=https://s3.us-east-1.amazonaws.com) or set them in the values.yaml file.

These are the relevant variables for configuring the S3 endpoint and the Parseable login credentials.

P_S3_URL=https://s3.us-east-1.amazonaws.com

P_S3_ACCESS_KEY=<access-key>

P_S3_SECRET_KEY=<secret-key>

P_S3_REGION=<region>

P_S3_BUCKET=<bucket-name>

P_USERNAME=<username-for-parseable-login>

P_PASSWORD=<password-for-parseable-login>
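If you prefer a values file over a long list of --set flags, the variables above can be written out from your shell environment. This is a minimal sketch: it assumes the chart reads these settings from parseable.env, as the --set parseable.env.P_S3_URL=... example implies, and parseable_values is a hypothetical helper name.

```shell
# Print a Helm values override holding the Parseable settings listed above.
# Assumption: the chart reads them from parseable.env.*, mirroring the
# --set parseable.env.P_S3_URL=... form shown earlier.
# Values are taken from same-named shell variables; unset ones come out empty.
parseable_values() {
  cat <<EOF
parseable:
  env:
    P_S3_URL: "${P_S3_URL}"
    P_S3_ACCESS_KEY: "${P_S3_ACCESS_KEY}"
    P_S3_SECRET_KEY: "${P_S3_SECRET_KEY}"
    P_S3_REGION: "${P_S3_REGION}"
    P_S3_BUCKET: "${P_S3_BUCKET}"
    P_USERNAME: "${P_USERNAME}"
    P_PASSWORD: "${P_PASSWORD}"
EOF
}
```

With the variables set in your shell, parseable_values > my-values.yaml writes the file (my-values.yaml is just an example name), and helm upgrade --install parseable parseable/parseable --namespace parseable -f my-values.yaml applies it. Values containing double quotes would need escaping, and since the file holds credentials it should stay out of version control.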

For further details, please refer to the Parseable documentation.

I hope this article helped you gain a new perspective on the log storage space. If you find Parseable interesting, please consider adding a star to the repo here: https://github.com/parseablehq/parseable

Opinions expressed by DZone contributors are their own.
