Scaling Up With Kubernetes: Cloud-Native Architecture for Modern Applications

Explore the world of containers and microservices in K8s-based systems and how they enable the building, deployment, and management of cloud-native applications at scale.

This is an article from DZone's 2023 Kubernetes in the Enterprise Trend Report.

Cloud-native architecture is a transformative approach to designing and managing applications. It embraces modularity, scalability, and rapid deployment, which makes it well suited to modern software development. Though the cloud-native ecosystem is vast, Kubernetes stands out as its beating heart. It serves as a container orchestration platform that automates the deployment, scaling, and management of microservices, and these capabilities are crucial for building true cloud-native applications.

In this article, we explore the world of containers and microservices in Kubernetes-based systems and how these technologies come together to enable developers to build, deploy, and manage cloud-native applications at scale.

The Role of Containers and Microservices in Cloud-Native Environments

Containers and microservices play pivotal roles in making the principles of cloud-native architecture a reality.

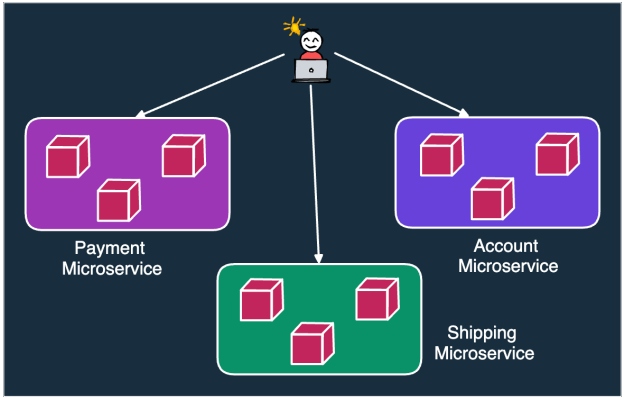

Figure 1: A typical relationship between containers and microservices

Here are a few ways in which containers and microservices turn cloud-native architectures into a reality:

- Containers encapsulate applications and their dependencies. This encourages the principle of modularity and results in rapid development, testing, and deployment of application components.

- Containers also share the host OS, resulting in reduced overhead and a more efficient use of resources.

- Since containers provide isolation for applications, they are ideal for deploying microservices. Microservices help in breaking down large monolithic applications into smaller, manageable services.

- With microservices and containers, we can scale individual components separately. This improves the overall fault tolerance and resilience of the application as a whole.

Despite their usefulness, containers and microservices also come with their own set of challenges:

- Managing many containers and microservices can become overly complex and create a strain on operational resources.

- Monitoring and debugging numerous microservices can be daunting in the absence of a proper monitoring solution.

- Networking and communication between multiple services running on containers is challenging. It is imperative to ensure a secure and reliable network between the various containers.

How Does Kubernetes Make Cloud Native Possible?

According to a CNCF survey, a growing number of organizations use Kubernetes as the core technology for building cloud-native solutions. Kubernetes provides several key features that support the core principles of cloud-native architecture: automatic scaling, self-healing, service discovery, and security.

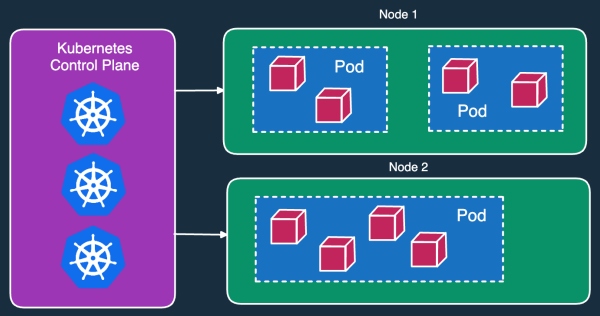

Figure 2: Kubernetes managing multiple containers within the cluster

Automatic Scaling

A standout feature of Kubernetes is its ability to automatically scale applications based on demand. This fits well with the cloud-native goals of elasticity and scalability. As users, we define scaling policies for our applications in Kubernetes. Kubernetes then adjusts the number of Pods, and therefore containers, to match workload fluctuations over time, which supports effective resource utilization and cost savings.
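
To make the idea of a scaling policy concrete, here is a minimal sketch of the replica calculation described in the Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the replica bounds, and the sample numbers are illustrative assumptions, and the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Toy replica calculation (hypothetical helper, not a Kubernetes API)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale in proportion to how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the bounds configured in the scaling policy.
    return max(min_replicas, min(max_replicas, raw))


# 4 Pods at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, 90.0, 60.0))
# 4 Pods at 30% average CPU with a 60% target: scale in.
print(desired_replicas(4, 30.0, 60.0))
```

The clamp at the end is what keeps a sudden spike from requesting more Pods than the policy allows.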

Self-Healing

Resilience and fault tolerance are key properties of a cloud-native setup. Kubernetes excels in this area by continuously monitoring the health of containers and Pods. When a Pod fails, Kubernetes takes remedial action to restore the desired state: it can automatically restart containers and replace failed Pods on healthy nodes. Replacing a failed node itself typically relies on the underlying platform, such as a cloud provider's node auto-repair or a cluster autoscaler.
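
The desired-state idea can be sketched as a small reconciliation loop. This is a toy model rather than Kubernetes code: the `Pod` class, the `reconcile` function, and the `web-N` naming are all hypothetical, and a real controller reacts to API server events instead of scanning a list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pod:
    name: str
    healthy: bool = True


def reconcile(pods: List[Pod], desired: int) -> List[Pod]:
    """One pass of a toy control loop: drop unhealthy Pods, then top up."""
    running = [p for p in pods if p.healthy]
    next_id = len(pods)  # simple way to avoid reusing an existing name
    while len(running) < desired:
        running.append(Pod(name=f"web-{next_id}"))
        next_id += 1
    # Trim any surplus so the result matches the desired count exactly.
    return running[:desired]


pods = [Pod("web-0"), Pod("web-1", healthy=False), Pod("web-2")]
pods = reconcile(pods, desired=3)
print([p.name for p in pods])  # ['web-0', 'web-2', 'web-3']
```

Running the same function again on a healthy set changes nothing, which is the property that makes a control loop safe to repeat.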

Service Discovery

Service discovery is an essential feature of a microservices-based cloud-native environment. Kubernetes offers a built-in service discovery mechanism. Using this mechanism, we can create Services that select Pods by their labels and expose them under a stable name, making it easier for other components to locate and communicate with them. This simplifies the complex task of managing communication between microservices running on containers.
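
A Kubernetes Service usually finds its backing Pods through a label selector: a Pod matches when it carries every key and value the selector lists. The sketch below models only that equality-based matching rule. The `select_endpoints` function, the Pod dictionaries, and the IP addresses are made up for illustration.

```python
from typing import Dict, List


def select_endpoints(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return the IPs of Pods whose labels contain every selector pair."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


pods = [
    {"ip": "10.0.0.4", "labels": {"app": "cart", "tier": "backend"}},
    {"ip": "10.0.0.5", "labels": {"app": "cart", "tier": "backend"}},
    {"ip": "10.0.0.9", "labels": {"app": "search", "tier": "backend"}},
]

print(select_endpoints({"app": "cart"}, pods))  # ['10.0.0.4', '10.0.0.5']
```

Because callers address the Service rather than individual Pods, the set of matching IPs can change as Pods come and go without clients noticing.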

Security

Security is paramount in cloud-native systems, and Kubernetes provides robust mechanisms to support it. Kubernetes allows for fine-grained access control through role-based access control (RBAC), which ensures that users can perform only the actions they have been granted on the cluster. Kubernetes also supports the integration of security scanning and monitoring tools to detect vulnerabilities at an early stage.
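
RBAC rules are purely additive: a request is allowed only if some rule grants the verb on the resource, and there are no deny rules. The sketch below illustrates that evaluation model with plain dictionaries. The `is_allowed` function and the rule layout are simplifications assumed for this example, and real rules also account for API groups, namespaces, and resource names.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]


def is_allowed(rules: List[Rule], verb: str, resource: str) -> bool:
    """Allow the request if any rule grants the verb on the resource."""
    return any(
        (verb in rule["verbs"] or "*" in rule["verbs"])
        and (resource in rule["resources"] or "*" in rule["resources"])
        for rule in rules
    )


# A read-only role for Pods, similar in spirit to a "pod-reader" Role.
pod_reader = [{"verbs": ["get", "list", "watch"], "resources": ["pods"]}]

print(is_allowed(pod_reader, "list", "pods"))    # True
print(is_allowed(pod_reader, "delete", "pods"))  # False
```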

Advantages of Cloud-Native Architecture

Cloud-native architecture matters to modern organizations because it addresses the key requirements of today's software development. The first major advantage is high availability. Today's world operates 24/7, so cloud-native systems need to be highly available, distributing components across multiple servers or regions to minimize downtime and ensure uninterrupted service delivery.

The second advantage is scalability to support workloads that fluctuate with user demand. Cloud-native applications deployed on Kubernetes are designed to be elastic, allowing organizations to scale resources up or down dynamically. Lastly, low latency is a must-have for delivering responsive user experiences, since slow responses can translate into lost revenue. Cloud-native design principles using microservices and containers deployed on Kubernetes enable the efficient use of resources to reduce latency.

Architecture Trends in Cloud Native and Kubernetes

Cloud-native architecture with Kubernetes is an ever-evolving area, and several key trends are shaping the way we build and deploy software. Let's review a few important trends to watch out for.

The use of Kubernetes operators is gaining prominence for stateful applications. Operators extend the capabilities of Kubernetes by automating complex application-specific tasks, effectively turning Kubernetes into an application platform. These operators are great for codifying operational knowledge, creating the path to automated deployment, scaling, and management of stateful applications such as databases. In other words, Kubernetes operators simplify the process of running applications on Kubernetes to a great extent.

Another significant trend is the rise of serverless computing on Kubernetes due to the growth of platforms like Knative. Over the years, Knative has become one of the most popular ways to build serverless applications on Kubernetes. With this approach, organizations can run event-driven and serverless workloads alongside containerized applications. This is great for optimizing resource utilization and cost efficiency. Knative's auto-scaling capabilities make it a powerful addition to Kubernetes.

Lastly, GitOps and Infrastructure as Code (IaC) have emerged as foundational practices for provisioning and managing cloud-native systems on Kubernetes. GitOps leverages version control and declarative configurations to automate infrastructure deployment and updates. IaC complements this approach by defining infrastructure in versioned, machine-readable files rather than configuring it by hand.
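
At the core of GitOps is a comparison between the declared state in version control and the live state of the cluster. The sketch below shows that comparison in its simplest form. The `plan_sync` function and the resource keys are hypothetical, and GitOps tools perform a far richer, field-aware diff than this dictionary comparison.

```python
def plan_sync(desired: dict, live: dict) -> dict:
    """Compare declared state with live state and list the changes needed."""
    return {
        "create": sorted(k for k in desired if k not in live),
        "update": sorted(k for k in desired if k in live and desired[k] != live[k]),
        "delete": sorted(k for k in live if k not in desired),
    }


# Declared in the repository (assumed example data).
desired = {"deploy/cart": {"replicas": 3}, "svc/cart": {"port": 80}}
# Currently running in the cluster (assumed example data).
live = {"deploy/cart": {"replicas": 2}, "deploy/old": {"replicas": 1}}

print(plan_sync(desired, live))
# {'create': ['svc/cart'], 'update': ['deploy/cart'], 'delete': ['deploy/old']}
```

When the plan is empty, the cluster already matches the repository and there is nothing to apply.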

Best Practices for Building Kubernetes Cloud-Native Architecture

When building a Kubernetes-based cloud-native system, it helps to follow some best practices:

- Observability is a key practice that must be followed. Implementing comprehensive monitoring, logging, and tracing solutions gives us real-time visibility into our cluster's performance and the applications running on it. This data is essential for troubleshooting, optimizing resource utilization, and ensuring high availability.

- Resource management is another critical practice. Setting resource requests and limits for containers helps prevent resource contention and keeps performance stable for all the applications deployed on a Kubernetes cluster. Failure to manage resources properly can lead to downtime and cascading issues.

- Configuring proper security policies is equally vital. Kubernetes offers robust security features like role-based access control (RBAC) and Pod Security Admission that should be tailored to your organization's needs. Implementing these policies helps protect against unauthorized access and potential vulnerabilities.

- Integrating a CI/CD pipeline into your Kubernetes cluster streamlines the development and deployment process. This promotes automation and consistency in deployments along with the ability to support rapid application updates.
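
As an illustration of the resource-management practice in the list above, the sketch below checks whether a set of container CPU requests fits within a node's allocatable CPU. It relies on the Kubernetes CPU quantity notation, where `500m` means 500 millicores and `1` means one full core. The helper names and the sample values are assumptions, and the real scheduler weighs memory and many other constraints as well.

```python
from typing import List


def parse_cpu(quantity: str) -> int:
    """Convert a CPU quantity such as '500m' or '2' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def fits_on_node(requests: List[str], allocatable: str) -> bool:
    """True if the summed CPU requests do not exceed the node's allocatable CPU."""
    return sum(parse_cpu(r) for r in requests) <= parse_cpu(allocatable)


# Three containers requesting 1750 millicores in total on a 2-core node.
print(fits_on_node(["500m", "250m", "1"], "2"))          # True
# A fourth container pushes the total to 2250 millicores.
print(fits_on_node(["500m", "250m", "1", "500m"], "2"))  # False
```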

Conclusion

This article has highlighted the significant role of Kubernetes in shaping modern cloud-native architecture. We've explored key elements such as observability, resource management, security policies, and CI/CD integration as essential building blocks for success in building a cloud-native system. With its vast array of features, Kubernetes acts as the catalyst, providing the orchestration and automation needed to meet the demands of dynamic, scalable, and resilient cloud-native applications.

It's crucial for readers to recognize Kubernetes as the linchpin in achieving these objectives and to remain curious about emerging trends within this space. The cloud-native landscape continues to evolve rapidly, and staying informed and adaptable will be key to harnessing the full potential of Kubernetes.

Additional Reading:

- CNCF Annual Survey 2021

- CNCF Blog

- "Why Google Donated Knative to the CNCF" by Scott Carey

- Getting Started With Kubernetes Refcard by Alan Hohn

- "The Beginner's Guide to the CNCF Landscape" by Ayrat Khayretdinov
