Scaling Entity Framework Core Apps With Distributed Caching

Learn how to increase the speed of your high-traffic .NET applications by optimizing Entity Framework Core, providing faster access to relational databases.


High-traffic .NET applications that need to access relational databases like SQL Server are increasingly using Entity Framework (EF) Core. EF Core is the latest version of the Entity Framework Object Relational (O/R) Mapping framework from Microsoft. EF Core runs on both standard .NET Framework and the new .NET Core. EF Core simplifies database programming and speeds up .NET application development.

However, high-traffic EF Core applications face performance bottlenecks under heavy user load. Although the application tier scales nicely to handle increased traffic, the database becomes the bottleneck. EF Core itself doesn’t resolve this problem unless you incorporate distributed caching into your EF Core application. NCache is a very popular distributed cache for .NET that I will use in this article.

This article goes over the techniques you can use from within an EF Core application for caching various types of data. NCache has implemented extension methods for EF Core that allow you to make caching calls very easily from the appropriate places in your application. This article explains what you can do with those calls in order to benefit from caching.

Finally, this article covers what a typical distributed cache architecture looks like.

Good Response Time Saves the Day

We have often heard that “speed kills”, and it is true if, say, you are driving way beyond the speed limit and get into an accident. However, in the world of high-traffic applications, especially customer-facing web applications, speed is the real savior for the business. An interesting survey conducted in 2009 by Forrester Consulting on behalf of Akamai Technologies, Inc. examined e-commerce web application performance and its correlation with online shoppers’ behavior. The most compelling results were that two seconds is the threshold of an average online shopper’s expectation for a web page to load, and that 40 percent of shoppers will wait no more than three seconds before abandoning a retail or travel site.

Some of the key findings of the survey are:

- 47 percent of consumers expect a web page to load in 2 seconds or less and 40 percent of them will wait no more than 3 seconds for a web page to render before abandoning the site.

- For online shoppers, especially high-spending ones, loyalty is contingent upon quick page loading; an astounding 52 percent stated that quick loading of pages is important to their site loyalty.

- Retail and travel sites that have slow response times lead to lost sales. 79 percent of online shoppers who experience a dissatisfying visit are less likely to buy from that site again.

Keep in mind that the survey took place in 2009, when smartphone and tablet usage had yet to explode; that growth has since added even more traffic to already overburdened applications.

Distributed Cache Scales

As we saw in the last section, for a business to succeed, the technological solution it employs must not only meet a certain performance benchmark but also offer the same user experience under enormous load. In other words, it should scale well and offer the same level of application performance for, say, 1,000 concurrent users as for 100,000 concurrent users, without any noticeable degradation.

The way we handle huge traffic to our application is by resorting to a distributed caching solution. In distributed caching, we pool together the memory, CPU, and other resources of a cluster of servers as if they were one logical unit, delegate all tasks related to caching of application data to it, and have it sit in front of the backend database server. A distributed cache enables the application to store any volume of reference data (information that does not change frequently) as well as transactional data (information that is in constant flux), sparing the application layer the complexity of managing a caching solution by itself.

Some of the important advantages of using a distributed cache are as follows:

- Scalability: Distributed cache can be scaled rapidly to handle high transactional workloads as well as large data volumes by simply adding more nodes to the existing cluster of nodes.

- Cost Effectiveness: Scaling the backend database itself is sometimes prohibitively costly, as it often requires high-end servers and storage. Caching with a distributed cache is a rapid and clean way of reducing the need to scale the backend database. A distributed cache can also be scaled using low-priced nodes, thereby considerably reducing the TCO (total cost of ownership) of a high-performing and highly scalable enterprise solution.

- High Availability: Distributed cache not only boosts performance while affording us a highly scalable solution; it also takes care of the needs of a highly available environment with built-in fault-tolerance and automatic failover.
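To make the scalability point a little more concrete, here is a minimal sketch of how cache keys might be spread across the nodes of a cluster. It is an illustration only: the `CachePartitioner` class and its methods are hypothetical names invented for this example, and simple hash-modulo placement is an assumption, not a description of how NCache or any other product distributes data.

```csharp
using System;
using System.Linq;
using System.Text;

// Hypothetical helper for illustration; not part of any caching product's API.
public static class CachePartitioner
{
    // FNV-1a hash: stable across processes, unlike string.GetHashCode() on .NET Core.
    public static uint StableHash(string key)
    {
        const uint offsetBasis = 2166136261;
        const uint prime = 16777619;
        uint hash = offsetBasis;
        foreach (byte b in Encoding.UTF8.GetBytes(key))
        {
            unchecked
            {
                hash ^= b;
                hash *= prime;
            }
        }
        return hash;
    }

    // Maps a cache key to one of nodeCount servers (index 0 .. nodeCount - 1).
    public static int NodeFor(string key, int nodeCount)
    {
        if (nodeCount <= 0) throw new ArgumentOutOfRangeException(nameof(nodeCount));
        return (int)(StableHash(key) % (uint)nodeCount);
    }
}

public static class Program
{
    public static void Main()
    {
        var keys = Enumerable.Range(1, 10000).Select(i => "Customer:" + i).ToList();

        // As nodes are added, each node ends up holding a smaller share of the keys.
        foreach (int nodes in new[] { 2, 4, 8 })
        {
            int busiest = keys.GroupBy(k => CachePartitioner.NodeFor(k, nodes))
                              .Max(g => g.Count());
            Console.WriteLine($"{nodes} nodes -> busiest node holds {busiest} keys");
        }
    }
}
```

Note that with plain modulo placement most keys move to a different node whenever the node count changes, which is one reason real distributed caches tend to use more elaborate distribution schemes.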

Entity Framework Core

Entity Framework Core (EF Core) is a lightweight, extensible, and cross-platform object-relational mapper (ORM) that enables .NET developers to work with an underlying database using .NET objects, thereby eliminating the need for most separate data access code. The latest version released by Microsoft is EF Core 2.0.

At the time of writing this article, EF Core supports only a first-level cache; that is, when an entity is materialized as the result of executing a query, it is stored in an in-memory cache associated with the Entity Framework context (the Microsoft.EntityFrameworkCore.DbContext class). Let us go over some basic examples of how EF Core handles caching of data and the associated problems.

Data Retrieval/First Level Cache Storage

In the following example, we attempt to load all “Customers” in the sample Northwind database that are from the country of “Italy”:

    using (var context = new NorthwindContext())
    {
        // ToList() executes the query so that the returned entities are tracked
        var Italians = context.Customers.Where(c => c.Country == "Italy").ToList();
    }

Code 1: EF Core data retrieval from the database using the Entity Framework Context

Once the Entity Framework Context finishes retrieving the data, the returned entities are added to the context’s local (first-level, in-memory) cache and tracked for changes. When a later query on the same context returns a row whose key is already tracked, EF Core hands back the tracked instance instead of instantiating and hydrating a new entity, and a lookup by key through Find() can be answered from the local cache without querying the database at all.

Notice in the following example how the ChangeTracker instance in the NorthwindContext (derived from the DbContext class) keeps track of all entities known to the context. In the following code snippet, we read the entities back from the context’s local cache.

    using (var context = new NorthwindContext())
    {
        var Italians = context.Customers.Where(c => c.Country == "Italy").ToList();

        // accessing cached copies of entities
        var cachedItalians = context.ChangeTracker
            .Entries<Customers>()
            .Select(e => e.Entity);
    }

Code 2: EF Core data retrieval from the local cache of Entity Framework Context

Inherent Problems With First-Level Storage Cache

As seen earlier, EF Core’s first-level caching mechanism provides some performance boost. However, consider a scenario where an entity’s record changes at the database level while we are still working with the locally cached copies held by the Entity Framework context. In the following code snippet, the second query still hands back the tracked, now outdated, entities.

    using (var context = new NorthwindContext())
    {
        // retrieving entities from the database
        var Italians = context.Customers.Where(c => c.Country == "Italy").ToList();

        // the entities’ records change in the database, say, another process
        // updates the contact details of every customer from Italy

        // trying to retrieve the same entities again:
        // rows whose keys are already tracked come back as the tracked instances,
        // so their property values are unchanged and stale
        Italians = context.Customers.Where(c => c.Country == "Italy").ToList();
    }

Code 3: EF Core data retrieval from the local cache leading to inconsistent result

A workaround for this problem is to detach those entities from the context, which effectively removes them from the first-level cache (also termed eviction of the cached item). Once they are detached, the entities may be fetched again, which ensures that fresh data is read from the database.

    …
    foreach (var entry in context.ChangeTracker.Entries<T>().ToList())
    {
        context.Entry(entry.Entity).State = EntityState.Detached;
    }
    …

Code 4: Detaching entities from local cache so that they may be re-hydrated in subsequent retrievals

So, we see that EF Core’s out-of-the-box (OOB) first-level caching mechanism is far from perfect. It leads to clunky, error-prone code, and there is a fair chance of the code base becoming convoluted and unmaintainable in the long run. A few of the other drawbacks of the EF Core OOB first-level caching mechanism are:

- As more objects and their references are loaded into memory, the memory consumption of the context may grow sharply, ironically leading to the very performance issues that the first-level cache was meant to avoid.

- Possible memory leaks when a context that is no longer required is not disposed of properly.

- The probability of running into concurrency related issues increases as the gap between the time when the data is queried and updated grows.
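The stale-read behavior and the detach workaround described above can be mimicked without a database. The following is a minimal sketch, assuming a hypothetical `TinyContext` class that keeps an identity map the way a context’s first-level cache does; it is not EF Core and only imitates the rule "return the tracked instance if the key is already known".

```csharp
using System;
using System.Collections.Generic;

public class Customer
{
    public string Id { get; set; }
    public string Country { get; set; }
}

// Hypothetical stand-in for a context with a first-level cache (identity map).
public class TinyContext
{
    private readonly Dictionary<string, Customer> _database;   // simulated table
    private readonly Dictionary<string, Customer> _tracked = new Dictionary<string, Customer>();

    public TinyContext(Dictionary<string, Customer> database) { _database = database; }

    // Grows with every distinct entity loaded, which is where the memory pressure comes from.
    public int TrackedCount => _tracked.Count;

    // Returns the tracked copy when present; otherwise copies the row and tracks it.
    public Customer Load(string id)
    {
        if (_tracked.TryGetValue(id, out var tracked)) return tracked;
        if (!_database.TryGetValue(id, out var row)) return null;
        var copy = new Customer { Id = row.Id, Country = row.Country };
        _tracked[id] = copy;
        return copy;
    }

    // Similar in spirit to setting EntityState.Detached on every tracked entry.
    public void DetachAll() => _tracked.Clear();
}

public static class Program
{
    public static void Main()
    {
        var table = new Dictionary<string, Customer>
        {
            ["TOMSP"] = new Customer { Id = "TOMSP", Country = "Germany" }
        };
        var context = new TinyContext(table);

        context.Load("TOMSP");                      // first read tracks the entity
        table["TOMSP"].Country = "Italy";           // another process updates the row

        Console.WriteLine(context.Load("TOMSP").Country);   // Germany (stale tracked copy)

        context.DetachAll();                        // evict from the first-level cache
        Console.WriteLine(context.Load("TOMSP").Country);   // Italy (fresh read)
    }
}
```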

To the relief of developers and corporations alike, NCache, a market leader in the distributed cache niche (we will see why later in the article), provides distributed cache solutions that are both sophisticated and elegant. Through the NCache extension methods, developers can write less code that is more maintainable and leverage the full capability of the underlying distributed cache, without worrying about the inherent flaws of EF Core’s first-level caching or jumping through hoops to come up with workarounds. Most importantly, the NCache distributed cache gives developers full control over both reference data and transactional data.

NCache – the Distributed Cache

Microsoft is making progress with each iteration of its release of .NET Framework, including that of the latest .NET Core and accompanying latest ORM technology Entity Framework Core, to make an out of the box caching solution available; however, it falls short of providing a caching solution that has robust scalability, good performance, and is reliable at the same time. Hence there remains a need for a third-party caching solution that caters to enterprise caching needs and is able to do extreme transaction processing. Some of the vendors in this niche area are NCache, Azure Redis Cache, Red Hat JBoss Data Grid, Memcached, AppFabric, and others.

NCache by Alachisoft stands out among its competitors for several reasons. It is 100% native to .NET and is open source. It provides an extremely fast and linearly scalable distributed cache that caches application data, enabling the application to support extreme transaction processing. Its competitors provide similar solutions; however, they still need to do a lot of catching up with NCache in many respects. For example, Azure Redis Cache (as of version 3.2.7) does not support Entity Framework Core Cache (extension methods), Red Hat JBoss Data Grid (as of version 7.1.0) has no support for Entity Framework Core Cache, and neither Memcached (as of version 1.4.21) nor AppFabric (as of version 1.1) supports any Entity Framework second-level caching.

NCache, on the other hand, is, as stated earlier, 100% native to .NET and is compatible with the tools and techniques that Microsoft provides with each release of .NET Framework and .NET Core, including full support for the latest Entity Framework Core through extension methods. We will see later in full detail how NCache provides a robust caching mechanism for EF Core through these extension methods.

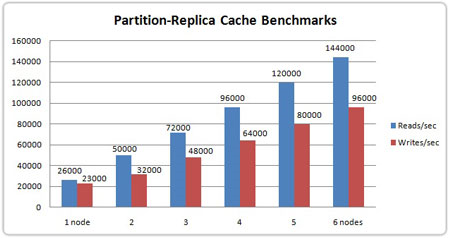

Before we dive into the details of EF Core and NCache, it is worth mentioning the NCache performance and scalability benchmarks, which are quite impressive (as shown in the following graph).

Figure 2: NCache Performance Benchmarks – Partition Replica Caching Topology.

While going through the NCache website, I found that it has a long list of organizations that have successfully integrated it into their enterprise solutions. Some of the organizations listed are Bank of America, Citigroup, Ryanair, AIG, and others. In a way, this stands as a testament to the maturity of the product and should inspire confidence among developers to explore NCache further.

NCache for EF Core

Please keep in mind that installing and configuring .NET Core, Visual Studio, or Entity Framework Core is beyond the scope of this article; it is assumed that you already have these components working on your machine.

Installing NCache itself is a fairly straightforward procedure. Like any other standard Windows application or service installer, the NCache installer provides a step-by-step installation wizard that is quite self-explanatory.

Now let us dive deeper into installing and configuring NCache Entity Framework Core Provider which acts as a pluggable in-memory second-level cache for EF Core.

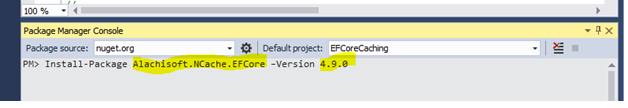

Installing Entity Framework Core Caching Provider

In order to install NCache EF Core provider please note that you must have .NET Framework 4.6.1/Visual Studio 2015 and up, or .NET Core App 2.0/Visual Studio 2017 and Entity Framework Core 1.1.0 installed on your machine.

NCache EF Core Cache provider is available as a NuGet package (package name “Alachisoft.NCache.EFCore”, latest version being 4.9.0) and can be installed from the Package Manager Console, typically with a command of the form Install-Package Alachisoft.NCache.EFCore:

Figure 3: Visual Studio Package Manager – Installing NCache EF Core provider.

Once the package gets installed successfully, you will need to reference the following 2 namespaces in your application:

    using Alachisoft.NCache.EntityFrameworkCore;
    using Alachisoft.NCache.Runtime.Caching;

Code 5: Required namespaces from NCache EF Core provider API

The Alachisoft team provides a number of sample applications that demonstrate how to leverage NCache as a distributed caching system in .NET applications. The sample applications for the EF Core Caching Provider may be found under the following folders:

.NET Core: [Install_Dir]/samples/dotnetcore/EntityFramework/EFCore

.NET Framework: [Install_Dir]/samples/dotnet/EntityFramework/EFCore

Configuring NCache Entity Framework Core Provider

Before NCache EF Core Provider can be used in our applications, we need to make sure that certain configuration requirements are met.

First and foremost among these requirements, all entities of the database model must be marked as serializable so that they can be stored by NCache.

    namespace Alachisoft.NCache.Samples.NorthwindModels
    {
        [Serializable]
        public partial class Customers
        {
            public Customers()
            { ...

Code 6: Making Customers entity of database model serializable for storing in NCache

Secondly, NCache provides a number of configurable cache properties that are set in the DbContext through the properties of the “NCacheConfiguration” class. Alachisoft recommends configuring NCache in either the database context or the entry point of the application; otherwise, an exception is thrown.

    namespace Alachisoft.NCache.Samples.NorthwindModels
    {
        public partial class NorthwindContext : DbContext
        {
            protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
            {
                // Read the cache name and connection string from the application settings
                string cacheId = System.Configuration.ConfigurationManager.AppSettings["CacheId"];
                string connString = System.Configuration.ConfigurationManager.AppSettings["NorthwindDbConnString"];

                NCacheConfiguration.Configure(cacheId, DependencyType.SqlServer);
                optionsBuilder.UseSqlServer(connString);
                ...

Code 7: Setting different configuration options using NCacheConfiguration class in DbContext

Next comes configuring a default schema in case SQL Dependency is being used with NCache. With the default schema set to “dbo”, table names are prefixed with “dbo”. This is achieved by adding the following code to the “OnModelCreating” method of the DbContext class:

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        modelBuilder.HasDefaultSchema("dbo");
        ...

Code 8: Setting default schema as “dbo” in OnModelCreating method of the DbContext class

Using NCache Entity Framework Core Caching Provider

This is the section where the rubber meets the road; that is, we will dig deeper into how the NCache EF Core Caching Provider is actually used in applications that utilize Entity Framework Core as the ORM. In this section, we will see how to utilize the LINQ APIs and the cache-only APIs, along with the flexibility of specifying different caching options.

(a) Caching Options for EF Core Caching Provider

NCache EF Core Caching Provider provides a set of configuration options and methods encapsulated in the class “CachingOptions” (not to be confused with the options of the NCacheConfiguration class), which are to be populated with appropriate values for the cache item before it is inserted into the cache. Some of the notable properties are “AbsoluteExpirationTime”, “SlidingExpirationTime”, “StoreAs”, and “Priority”.

For a list of the methods and properties of the “CachingOptions” class, along with their default values, please refer to the detailed NCache documentation.
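Since absolute and sliding expiration are easy to mix up, here is a small sketch of how the two policies usually differ. It assumes the common meaning of the terms (absolute counts from insertion, sliding counts from the last access); the `CachedEntry` class and its members are hypothetical and do not mirror the actual “CachingOptions” API.

```csharp
using System;

// Hypothetical model of one cached item, for illustration only.
public class CachedEntry
{
    public DateTime CreatedAt { get; }
    public DateTime LastAccessedAt { get; private set; }
    public TimeSpan? AbsoluteLifetime { get; }
    public TimeSpan? SlidingWindow { get; }

    public CachedEntry(DateTime now, TimeSpan? absoluteLifetime, TimeSpan? slidingWindow)
    {
        CreatedAt = now;
        LastAccessedAt = now;
        AbsoluteLifetime = absoluteLifetime;
        SlidingWindow = slidingWindow;
    }

    public bool IsExpired(DateTime now)
    {
        // Absolute: measured from insertion, regardless of how often the item is read.
        if (AbsoluteLifetime.HasValue && now - CreatedAt >= AbsoluteLifetime.Value) return true;
        // Sliding: measured from the most recent successful read.
        if (SlidingWindow.HasValue && now - LastAccessedAt >= SlidingWindow.Value) return true;
        return false;
    }

    // A successful read moves the sliding window forward; it never extends the absolute lifetime.
    public bool TryTouch(DateTime now)
    {
        if (IsExpired(now)) return false;
        LastAccessedAt = now;
        return true;
    }
}

public static class Program
{
    public static void Main()
    {
        var start = new DateTime(2018, 1, 1, 12, 0, 0);
        var absolute = new CachedEntry(start, TimeSpan.FromMinutes(10), null);
        var sliding = new CachedEntry(start, null, TimeSpan.FromMinutes(10));

        // Both entries are read every 6 minutes.
        for (int minute = 6; minute <= 18; minute += 6)
        {
            DateTime now = start.AddMinutes(minute);
            bool absoluteAlive = absolute.TryTouch(now);
            bool slidingAlive = sliding.TryTouch(now);
            Console.WriteLine($"t+{minute} min: absolute alive = {absoluteAlive}, sliding alive = {slidingAlive}");
        }
    }
}
```

In this run the entry with the absolute lifetime stops being served after the 10-minute mark, while the entry with the sliding window stays alive because it keeps being read within each 10-minute window.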

The following code snippet shows how the configuration options are set on a cache item before it is stored in the cache:

    private static void GetCustomerFromDatabase(NorthwindContext database)
    {
        CachingOptions options = new CachingOptions
        {
            QueryIdentifier = null,
            CreateDbDependency = false,
            StoreAs = StoreAs.Collection,
            Priority = Runtime.CacheItemPriority.Default
        };

        var customerQuery = (from customerDetail in database.Customers
                             where customerDetail.CustomerId == "TOMSP"
                             select customerDetail)
                            .FromCache(out string cacheKey, options).AsQueryable();
        …

Code 9: Setting of different options utilizing CachingOptions class before an item is stored in Cache

(b) LINQ APIs for EF Core Caching Provider

NCache EF Core Caching Provider comes with both sync and async API calls for caching queries. The sync API calls are extension methods provided on the IQueryable interface (LINQ), while each async API call invokes its sync counterpart and returns a task instance for that invocation.

NCache EF Core Caching Provider gives us 3 APIs for caching queries, viz. “FromCache”, “LoadIntoCache”, and “FromCacheOnly”. The corresponding async APIs carry the same names with the suffix “Async”, for example, “FromCacheAsync”.
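Before looking at each method in turn, the difference between the three can be sketched with an in-memory stand-in. The `QueryCacheSketch` class below is hypothetical: its method names echo the provider’s, but the signatures and the dictionary-backed storage are assumptions made purely for illustration and are not the NCache API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in; not the NCache extension methods.
public class QueryCacheSketch<T>
{
    private readonly Dictionary<string, T> _cache = new Dictionary<string, T>();

    // Counts how many times the (simulated) data source was actually queried.
    public int SourceCalls { get; private set; }

    private T RunAgainstSource(Func<T> query)
    {
        SourceCalls++;
        return query();
    }

    // Cache first; on a miss, run the query against the source and store the result.
    public T FromCache(string key, Func<T> query)
    {
        if (_cache.TryGetValue(key, out var cached)) return cached;
        T result = RunAgainstSource(query);
        _cache[key] = result;
        return result;
    }

    // Always run the query against the source and overwrite whatever was cached.
    public T LoadIntoCache(string key, Func<T> query)
    {
        T result = RunAgainstSource(query);
        _cache[key] = result;
        return result;
    }

    // Never touch the source; report a miss instead.
    public bool FromCacheOnly(string key, out T value) => _cache.TryGetValue(key, out value);
}

public static class Program
{
    public static void Main()
    {
        int price = 10;                                   // simulated database value
        var cache = new QueryCacheSketch<int>();

        cache.FromCache("price", () => price);            // miss: source call #1
        price = 12;                                       // the database changes
        int stale = cache.FromCache("price", () => price);        // hit: still 10
        int fresh = cache.LoadIntoCache("price", () => price);    // source call #2: 12
        bool found = cache.FromCacheOnly("missing", out _);       // false, no source call

        Console.WriteLine($"{stale} {fresh} {found} sourceCalls={cache.SourceCalls}");
        // prints: 10 12 False sourceCalls=2
    }
}
```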

(1) FromCache

This method caches the result set generated by the LINQ query and returns it. In case the data was absent in cache, it will fetch the data from data source and insert the item into the cache. When the same query is executed subsequently, the result is fetched from the cache, provided that the data is still in the cache and is not evicted, expired or invalidated due to some other reasons.

However, it should be noted that if you need the current state of the data in the data source, “FromCache” should be avoided: whenever the result is already in the cache, it is returned from there without going back to the data source, so it may not reflect recent changes.

This method, generally speaking, is suitable for result sets that do not change frequently, that is, lookup data.

For example, notice how different caching options are set in the following code snippet before a call is made to “FromCache”, which stores the result set of the query in the form of a collection in the cache.

    private static void GetCustomerFromDatabase(NorthwindContext database)
    {
        CachingOptions options = new CachingOptions
        {
            QueryIdentifier = null,
            CreateDbDependency = false,
            StoreAs = StoreAs.Collection,
            Priority = Runtime.CacheItemPriority.Default
        };

        var customerQuery = (from customerDetail in database.Customers
                             where customerDetail.CustomerId == "TOMSP"
                             select customerDetail)
                            .FromCache(out string cacheKey, options).AsQueryable();
        …

Code 10: Using “FromCache”

(2) LoadIntoCache

There are scenarios where we need fresh data from the data source each time the query is executed and would also like to store the result in the cache. For this purpose, “LoadIntoCache” comes in handy: each time it is called, it fetches the result set from the source and caches it, overwriting any prior version of the cached data, before returning it to the application. This is appropriate in cases where data changes frequently and we cannot work with stale data. So, in a way, an invocation of LoadIntoCache can be considered a query result set refresher.

For example, notice how customer orders are brought back in the following code snippet using “LoadIntoCache”, which ensures that on every execution the query runs against the data source, the result set is returned to the application, and the cached collection is refreshed/overwritten.

    private static void GetCustomerOrders(NorthwindContext database)
    {
        CachingOptions options = new CachingOptions
        {
            QueryIdentifier = null,
            CreateDbDependency = false,
            StoreAs = StoreAs.Collection,
            Priority = Runtime.CacheItemPriority.Default
        };

        var orderQuery = (from customerOrder in database.Orders
                          where customerOrder.Customer.CustomerId == "TOMSP"
                          select customerOrder)
                         .LoadIntoCache(out string cacheKey, options);
        …

Code 11: Using “LoadIntoCache”

(3) FromCacheOnly

There are scenarios where we are working with data that changes rarely or infrequently, termed referential data. In such scenarios, it is better to fetch data from the cache instead of making costly trips to the back-end database. For this purpose, NCache provides “FromCacheOnly”, which fetches data from the cache only; if the data is not present in the cache, it may be loaded into the cache separately by “LoadIntoCache”. So, the objective of “FromCacheOnly” is to query referential data from the cache, and that of “LoadIntoCache” is to load it. As a result, users are able to query the cache instead of the backend database. However, we should load all of the referential data into the cache, as opposed to just a portion of it based on some criteria, because a call to “FromCacheOnly” fetches from the cache only and does not attempt to run a query against the backend database.

In the following code snippet, we first load all “Products” into the cache at the time of application startup using “LoadIntoCache” as the list of products is by nature referential data because it does not change frequently. Afterwards, we make a call to “FromCacheOnly” to query the cache for any products that are discontinued.

    private static void PrintDetailsOfDiscontinuedProducts(NorthwindContext database)
    {
        CachingOptions options = new CachingOptions
        {
            QueryIdentifier = null,
            CreateDbDependency = false,
            StoreAs = StoreAs.SeperateEntities,
            Priority = Runtime.CacheItemPriority.Default
        };

        // Load all products into cache as individual objects
        (from products in database.Products select products).LoadIntoCache(options).ToList();

        // Query cache for any products that are discontinued
        var discontinuedProducts = (from product in database.Products
                                    where product.Discontinued == true
                                    select product)
                                   .FromCacheOnly().ToList();
        …

Code 12: Using “FromCacheOnly”

(4) Asynchronous LINQ APIs

Asynchronous API calls work in the same manner as their synchronous counterparts discussed earlier, except that the call is handled asynchronously.
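As a rough picture of what "the sync call wrapped in a task" means, consider the following sketch. It is an assumption-laden illustration: `AsyncSketch`, `GetCustomerIds`, and `GetCustomerIdsAsync` are hypothetical names, and wrapping the work with Task.Run is only one plausible way such a counterpart could be built, not a statement about how the provider implements it.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class AsyncSketch
{
    // Hypothetical synchronous lookup standing in for a cached query.
    public static List<string> GetCustomerIds()
    {
        return new List<string> { "TOMSP", "ALFKI" };
    }

    // Assumed shape of an async counterpart: the same work, wrapped in a Task.
    public static Task<List<string>> GetCustomerIdsAsync()
    {
        return Task.Run(() => GetCustomerIds());
    }

    public static async Task<int> CountCustomersAsync()
    {
        Task<List<string>> pending = GetCustomerIdsAsync();   // starts the lookup
        // ... other work could run here while the lookup is in flight ...
        List<string> ids = await pending;                      // resumes once it completes
        return ids.Count;
    }
}

public static class Program
{
    public static void Main()
    {
        // Blocking here only because Main is synchronous in this sketch.
        int count = AsyncSketch.CountCustomersAsync().GetAwaiter().GetResult();
        Console.WriteLine(count);   // 2
    }
}
```

Inside an async method, awaiting the task is generally preferable to reading its Result property, since the latter blocks the calling thread until the task finishes.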

Notice how, in the following code snippet, the customer is brought back asynchronously by using “FromCacheAsync”:

    private static void GetCustomerFromDatabaseAsync(NorthwindContext database)
    {
        CachingOptions options = new CachingOptions
        {
            QueryIdentifier = null,
            CreateDbDependency = false,
            StoreAs = StoreAs.Collection,
            Priority = Runtime.CacheItemPriority.Default
        };

        // Invoke the async extension method
        var task = (from customerDetail in database.Customers
                    where customerDetail.CustomerId == "TOMSP"
                    select customerDetail)
                   .FromCacheAsync(options);

        // Perform some other task until this one is complete
        TaskExhaustor(task);

        // Get the result
        IQueryable<Customers> result = task.Result.AsQueryable();
        …

Code 13: Asynchronous LINQ API

(c) Cache Only APIs for EF Core Caching Provider

NCache EF Core Cache Provider also offers APIs that deal with entities in the cache directly, without going to the database. Data may thus be inserted into or removed from the cache without modifying the underlying data source. There are 3 cache-only APIs, viz. “Insert”, “Remove”, and “RemoveByQueryIdentifier”. In order to utilize these, a cache handle must first be obtained via the “GetCache” extension method on your application’s class that extends DbContext.
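The three cache-only operations can be pictured with a tiny tagged dictionary. This is a sketch under stated assumptions: `TaggedCacheSketch` and its members are hypothetical, a plain string stands in for the query identifier, and nothing here reflects the real signatures of the NCache Cache class.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a cache handle; not the NCache Cache class.
public class TaggedCacheSketch
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();
    private readonly Dictionary<string, string> _tagOfKey = new Dictionary<string, string>();

    public int Count => _items.Count;

    // Adds the item, or replaces it when the key already exists (an "upsert").
    public void Insert(string key, object value, string tag = null)
    {
        _items[key] = value;
        if (tag != null) _tagOfKey[key] = tag;
        else _tagOfKey.Remove(key);
    }

    // Removes one item by key; the simulated database is never involved.
    public bool Remove(string key)
    {
        _tagOfKey.Remove(key);
        return _items.Remove(key);
    }

    // Removes every item stored under the given tag and returns how many were removed.
    public int RemoveByTag(string tag)
    {
        List<string> keys = _tagOfKey.Where(p => p.Value == tag).Select(p => p.Key).ToList();
        foreach (string key in keys) Remove(key);
        return keys.Count;
    }
}

public static class Program
{
    public static void Main()
    {
        var cache = new TaggedCacheSketch();
        cache.Insert("Customer:TOMSP", "Toms", "CustomerEntity");
        cache.Insert("Customer:ALFKI", "Alfreds", "CustomerEntity");
        cache.Insert("Customer:TOMSP", "Toms (updated)", "CustomerEntity");   // update, not a new item
        cache.Insert("Shipper:1", "Panda Express");

        Console.WriteLine(cache.Count);                          // 3
        Console.WriteLine(cache.RemoveByTag("CustomerEntity"));  // 2
        Console.WriteLine(cache.Remove("Shipper:1"));            // True
        Console.WriteLine(cache.Count);                          // 0
    }
}
```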

(1) Insert

The “Insert” method adds the entity directly to the cache without any dependency on the database. If the entity does not exist in the cache, “Insert” adds it; if the entity is already present in the cache, “Insert” updates it.

In the following code snippet, notice how an entity named “shipperPandaExpress” is added to the cache by the “Insert” method without querying the database:

    …
    string cacheKey;

    // Add them to cache (without querying the database)
    Cache cache = database.GetCache();
    cache.Insert(shipperPandaExpress, out cacheKey, options);
    …

Code 14: Using “Insert” method

(2) Remove

The “Remove” method removes entities from the cache without deleting them from the underlying database. The method comes in two overloads: one takes the entity to be removed as a parameter, while the other takes the cache key.

In the following code snippets, notice how the two overloads of the method are used to remove an entity from the cache:

    …
    // Remove it from cache without querying the database
    Cache cache = database.GetCache();
    cache.Remove(shipper);
    …
    String cacheKey;
    Cache cache = database.GetCache();
    cache.Remove(cacheKey); // cacheKey saved during Insert()/FromCache()/LoadIntoCache() calls
    …

Code 15: Using “Remove” method

(3) RemoveByQueryIdentifier

This method removes all entities from the cache based on the specified “QueryIdentifier” in “CachingOptions”.

Notice in the following code snippet all entities associated with the QueryIdentifier of “CustomerEntity” are removed from the cache:

    …
    CachingOptions options = new CachingOptions
    {
        QueryIdentifier = new Tag("CustomerEntity"),
    };

    Cache cache = database.GetCache(); // get NCache instance
    cache.RemoveByQueryIdentifier(options.QueryIdentifier);
    …

Code 16: Using “RemoveByQueryIdentifier” method

NCache Distributed Cache Architecture

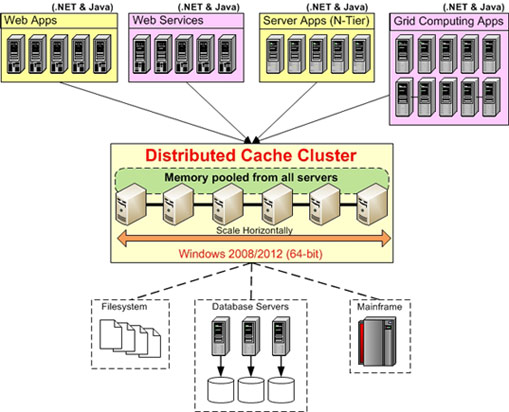

NCache distributed cache is quite a sophisticated cache that gives us a solution that is 100% native .NET and open source (under the Apache License). It offers an extremely fast and linearly scalable distributed cache that is not only a complete distributed caching solution for an enterprise in its current form but also continuously strives to improve itself in order to cater to the ever-changing needs of an enterprise. NCache addresses the needs of a High Availability (HA) production environment by aiming for 100% uptime through a self-healing dynamic cluster, whereby one may add or remove servers from the cluster at runtime without bringing down either the application layer or the cache layer.

NCache offers different configurations depending on your organization’s needs and budget. It provides different caching topologies, each needing a different number of servers, which are often dictated by the data storage strategy, data replication strategy, and client connection strategy; that is, a balancing act between a resilient solution with little to no data loss and a high-performing, scalable solution that is capable of handling any load thrown its way.

Without going into details, NCache provides the following four caching topologies:

- Mirrored Cache

- Replicated Cache

- Partitioned Cache

- Partitioned-Replica Cache

Figure 4: NCache Distributed Cache Architecture – Enterprise Solution.
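To give a feel for why a partitioned topology with replicas survives the loss of a server, here is a minimal sketch. Everything in it is an assumption made for illustration: the `PartitionReplicaSketch` class is hypothetical, each key’s backup is simply placed on the next node, and the hashing is deliberately simplistic. It does not describe NCache’s actual topology implementation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model: every key has an active copy on its owner node
// and a passive copy (replica) on the following node.
public class PartitionReplicaSketch
{
    private readonly Dictionary<string, string>[] _primary;
    private readonly Dictionary<string, string>[] _replica;
    private readonly bool[] _nodeUp;

    public PartitionReplicaSketch(int nodeCount)
    {
        if (nodeCount < 2) throw new ArgumentException("Need at least two nodes to hold a replica.");
        _primary = new Dictionary<string, string>[nodeCount];
        _replica = new Dictionary<string, string>[nodeCount];
        _nodeUp = new bool[nodeCount];
        for (int i = 0; i < nodeCount; i++)
        {
            _primary[i] = new Dictionary<string, string>();
            _replica[i] = new Dictionary<string, string>();
            _nodeUp[i] = true;
        }
    }

    // Deliberately simple, stable placement (sum of character codes modulo node count).
    public int OwnerOf(string key)
    {
        int sum = 0;
        foreach (char c in key) sum += c;
        return sum % _primary.Length;
    }

    public int BackupOf(int owner) => (owner + 1) % _primary.Length;

    public void Put(string key, string value)
    {
        int owner = OwnerOf(key);
        _primary[owner][key] = value;               // active copy
        _replica[BackupOf(owner)][key] = value;     // passive copy on a different node
    }

    public void TakeDown(int node) => _nodeUp[node] = false;

    // Reads from the owner when it is up, otherwise falls back to the replica.
    public bool TryGet(string key, out string value)
    {
        int owner = OwnerOf(key);
        if (_nodeUp[owner]) return _primary[owner].TryGetValue(key, out value);
        int backup = BackupOf(owner);
        if (_nodeUp[backup]) return _replica[backup].TryGetValue(key, out value);
        value = null;
        return false;
    }
}

public static class Program
{
    public static void Main()
    {
        var cache = new PartitionReplicaSketch(3);
        cache.Put("Customer:TOMSP", "Italy");
        int owner = cache.OwnerOf("Customer:TOMSP");

        cache.TakeDown(owner);                                   // lose the owner node
        bool stillReadable = cache.TryGet("Customer:TOMSP", out string value);
        Console.WriteLine($"owner down, readable: {stillReadable} ({value})");

        cache.TakeDown(cache.BackupOf(owner));                   // lose the backup as well
        bool readableNow = cache.TryGet("Customer:TOMSP", out _);
        Console.WriteLine($"owner and backup down, readable: {readableNow}");
    }
}
```

With one node down the value is still served from the replica; only when both the owner and its backup are lost does the read fail in this simplified model.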

Conclusion

In this article, we started off with some of the basic concepts surrounding caching and distributed caching. Then we saw how, despite making progress, Microsoft’s out-of-the-box (OOB) caching solutions fall short of handling extreme transaction processing in a high-volume environment where high availability, robust scalability, and high performance are always the driving factors in the success of any enterprise solution. As a result, several third-party vendors have come up with their own caching solutions, among which NCache stands tall because of the sophisticated features enumerated in this article.

We also saw how the NCache EF Core Cache Provider acts as a pluggable in-memory second-level cache for EF Core. We dived deeper into how the provider’s extension methods can be used from our EF Core code in order to leverage the caching capabilities of the NCache EF Core Provider effortlessly.

Finally, we discussed briefly how the NCache distributed cache is architected to suit the needs of different types of organizations, depending on the complexity of the project and the size of the budget.

So, if you are an architect or a decision maker in your organization and are in the market for a distributed cache system that is extremely fast, resilient, and is always ahead of the curve in terms of its compatibility with the latest version of Microsoft tools and technologies, then look no further and please explore NCache. I have heard they even give out a demo of their products and their after-sales support is excellent.

Before I sign off: we have clearly seen that if you are, say, an Amazon.com in the making, a slow-performing web application could lead to fewer visitors converting into customers, and a clogged system might result in the loss of thousands upon thousands of dollars in potential revenue. In that case, you would certainly not want to be conservative with the speed of your applications; rather, you should put the pedal to the metal and evaluate tools and techniques that provide the optimal solution to your technical problems. In an era where business is done at the speed of thought, corporations should be ever vigilant, because a single wrong decision in choosing a technological tool or technique can be critical for their survival in this hyper-competitive world.

'Til next time,

Rashid Khan
