Run Containers and VMs Together With KubeVirt

Wouldn’t it be great if you could run VMs as part of your Kubernetes environment?


Although many enterprises have deployed Kubernetes and containers, most also operate virtual machines. As a result, the two environments will likely co-exist for years, creating operational complexity and adding cost in time and infrastructure.

Without going into the pros and cons of one versus the other, it’s helpful to remember that each virtual machine (VM) contains its own instance of a full operating system and is intended to operate as if it were a standalone server, hence the name. By contrast, in a containerized environment, multiple containers share one instance of an operating system, almost always some flavor of Linux.

Not all application services run well in containers, resulting in a need to run both.

For example, a VM is often a better fit than a container for LDAP/Active Directory applications, tokenization applications, and applications with intensive GPU requirements. You may also have a legacy application that, for some reason (no source code, licensing, deprecated language, etc.), can’t be modernized and therefore has to run in a VM, possibly against a specific OS like Windows.

Whatever the reason your application requires VMs or containers, running and managing multiple environments increases the complexity of your operations, requiring separate control planes and possibly separate infrastructure stacks. That may not seem like a big deal if you need to run one or a small set of VMs to support a single instance of an otherwise containerized application. But what if you have many such applications? And what if you need to run multiple instances of those apps across different cloud environments? Your operations can become very complicated very quickly.

Wouldn’t it be great if you could run VMs as part of your Kubernetes environment?

This is exactly what KubeVirt enables you to do. In this blog, I’ll dig into what KubeVirt is, the benefits of using it, and how to integrate this technology so that you can get started using it right away.

What Is KubeVirt?

KubeVirt is a Kubernetes add-on that enables Kubernetes to provision, manage, and control VMs on the same infrastructure as containers. An open-source project under the auspices of the Cloud Native Computing Foundation (CNCF), KubeVirt is currently in the incubation phase.

This technology enables Kubernetes to schedule, deploy, and manage VMs using the same tools as containerized workloads, eliminating the need for a separate environment with different monitoring and management tools. This gives you the best of both worlds: VMs and Kubernetes working together.

With KubeVirt, you can declaratively:

- Create a VM

- Schedule a VM on a Kubernetes cluster

- Launch a VM

- Stop a VM

- Delete a VM

Your VMs run inside Kubernetes pods and utilize standard Kubernetes networking and storage.
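To make "declaratively" concrete, here is a minimal sketch in Python that builds a KubeVirt VirtualMachine manifest as a plain dictionary and prints it as JSON. The function name `build_vm`, the VM name `demo-vm`, the memory request, and the CirrOS demo container disk image are illustrative assumptions rather than anything prescribed above; check the field names against the KubeVirt version you have installed.

```python
import json


def build_vm(name: str, run_strategy: str = "Always") -> dict:
    """Return a KubeVirt VirtualMachine manifest as a plain dict (illustrative)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # "Always" asks KubeVirt to keep the VM running;
            # "Halted" keeps the VM defined but stopped.
            "runStrategy": run_strategy,
            "template": {
                "metadata": {"labels": {"kubevirt.io/vm": name}},
                "spec": {
                    "domain": {
                        "devices": {
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                        # Assumed size; a real guest OS usually needs more.
                        "resources": {"requests": {"memory": "128Mi"}},
                    },
                    "volumes": [
                        {
                            # Must match the disk name declared above.
                            "name": "rootdisk",
                            # Assumed demo image; substitute your own disk.
                            "containerDisk": {
                                "image": "quay.io/kubevirt/cirros-container-disk-demo"
                            },
                        }
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_vm("demo-vm"), indent=2))
```

Because kubectl accepts JSON as well as YAML, the output can be piped to `kubectl apply -f -` to create and launch the VM. Re-applying the manifest with `run_strategy="Halted"` stops it, and `kubectl delete vm demo-vm` removes it.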

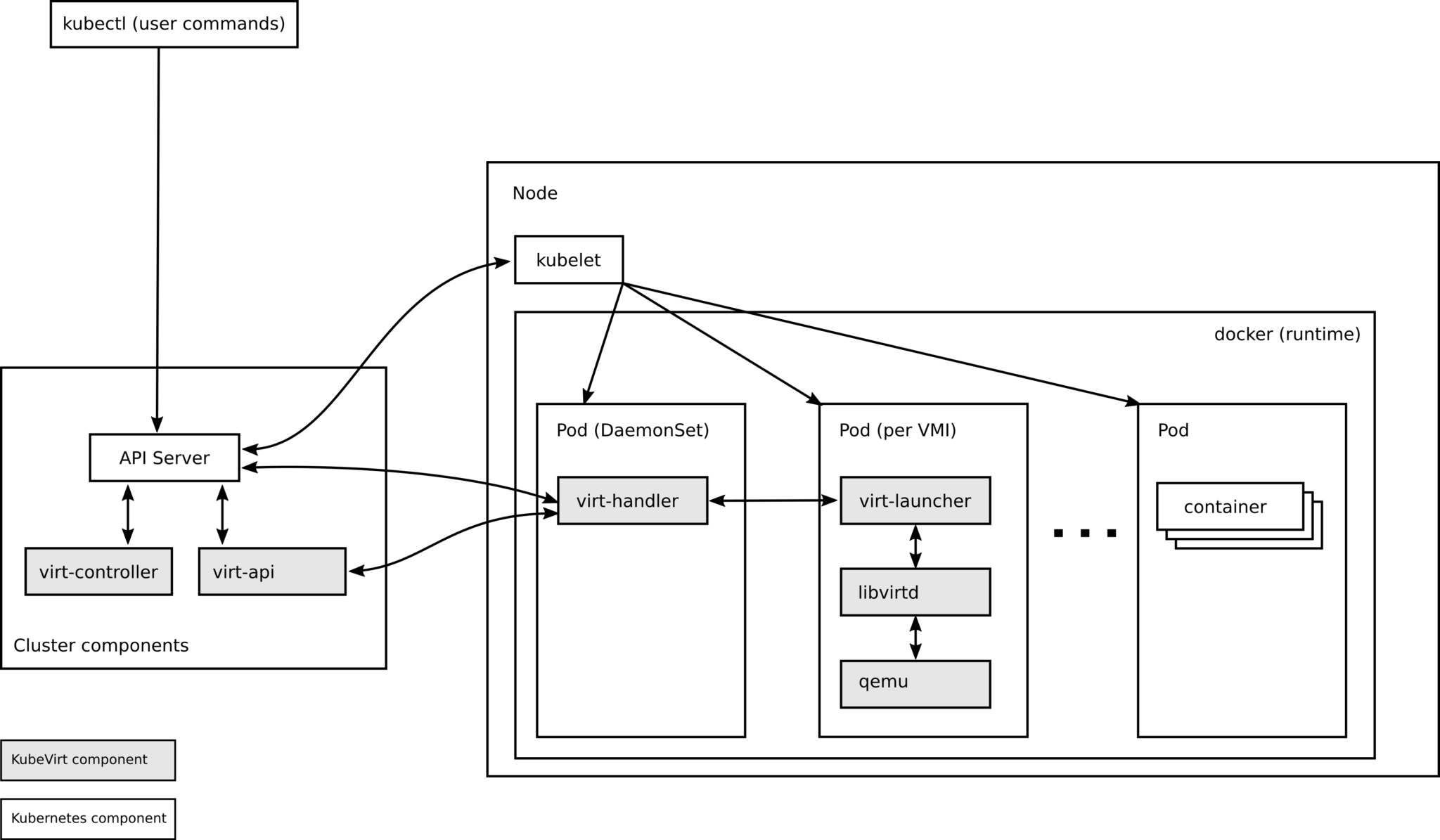

Source: https://kubevirt.io/user-guide/architecture/

For a deeper discussion of how KubeVirt works and the components involved, look at the blog Getting to Know KubeVirt on kubernetes.io.

What Are the Benefits of KubeVirt?

KubeVirt integrates with existing Kubernetes tools and practices such as monitoring, logging, alerting, and auditing, providing significant benefits including:

- Centralized management: Manage VMs and containers using a single set of tools.

- No hypervisor tax: Eliminate the need to license and run a hypervisor to run the VMs associated with your application.

- Predictable performance: KubeVirt uses the Kubernetes CPU manager to pin vCPUs and RAM to a VM for workloads that require predictable latency and performance.

- CI/CD for VMs: Develop application services that run in VMs and integrate and deliver them using the same CI/CD tools for containers.

- Authorization: KubeVirt comes with a set of predefined RBAC ClusterRoles that can be used to grant users permissions to access KubeVirt Resources.
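Two of these benefits map to small pieces of configuration, sketched here in Python as plain dictionaries. The helper names, the user `alice`, and the namespace `vm-team` are hypothetical. The `dedicatedCpuPlacement` field and the `kubevirt.io:view`, `kubevirt.io:edit`, and `kubevirt.io:admin` ClusterRole names reflect my understanding of the KubeVirt user guide, so confirm them against your installed version.

```python
import json


def dedicated_cpu_domain(cores: int, memory: str) -> dict:
    """Return a VM `domain` fragment that asks for dedicated (pinned) CPUs."""
    return {
        "cpu": {"cores": cores, "dedicatedCpuPlacement": True},
        # Equal memory requests and limits, on the assumption that the
        # resulting pod needs guaranteed QoS for CPU pinning to apply.
        "resources": {
            "requests": {"memory": memory},
            "limits": {"memory": memory},
        },
    }


def kubevirt_role_binding(user: str, namespace: str,
                          role: str = "kubevirt.io:edit") -> dict:
    """Return a RoleBinding that grants one KubeVirt ClusterRole in one namespace."""
    rbac_group = "rbac.authorization.k8s.io"
    return {
        "apiVersion": f"{rbac_group}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{user}-kubevirt", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": rbac_group}],
        "roleRef": {"kind": "ClusterRole", "name": role, "apiGroup": rbac_group},
    }


if __name__ == "__main__":
    print(json.dumps(dedicated_cpu_domain(2, "2Gi"), indent=2))
    print(json.dumps(kubevirt_role_binding("alice", "vm-team"), indent=2))
```

Using a RoleBinding rather than a ClusterRoleBinding limits the grant to a single namespace, which is usually the safer default.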

Centralizing management of VMs and containers simplifies your infrastructure stack and offers a variety of less obvious benefits. For instance, adopting KubeVirt reduces the load on your DevOps teams by eliminating the need for separate VM and container pipelines, speeding daily operations. In addition, as you migrate more VMs to Kubernetes, you can see savings in software and utility costs, not to mention the hypervisor tax. In the long term, you can decrease your infrastructure footprint by leveraging Kubernetes’ ability to package and schedule your virtual applications.

Kubernetes with KubeVirt provides faster time to market, reduced cost, and simplified management. Automating the lifecycle management of VMs using Kubernetes helps consolidate the CI/CD pipeline of your virtualized and containerized applications. With Kubernetes as an orchestrator, changes in either type of application can be similarly tested and safely deployed, reducing the risk of manual errors and enabling faster iteration.

KubeVirt: Challenges and Best Practices

There are a few things to keep in mind if you’re deploying KubeVirt. First, as I mentioned above, one of the reasons you may want to run a VM instead of a container is for specialized hardware like GPUs. If this applies to your workload, you’ll need to ensure that at least one node in your cluster contains the necessary hardware and then pin the pod containing the VM to the node(s) with that hardware.
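The pinning step can be sketched as a small Python helper that adds a node selector and a GPU device request to a VM's template spec. The helper name `pin_to_gpu_node`, the label `example.com/gpu`, and the resource name `example.com/HYPOTHETICAL_GPU` are placeholders I made up for illustration. The real values depend on how your nodes are labeled and on what your GPU device plugin advertises.

```python
import copy
import json


def pin_to_gpu_node(vmi_spec: dict, node_label: dict, gpu_resource: str) -> dict:
    """Return a copy of a VM template spec that targets labeled nodes and requests a GPU."""
    spec = copy.deepcopy(vmi_spec)  # leave the caller's spec untouched
    # Schedule the VM's pod only onto nodes carrying this label.
    spec["nodeSelector"] = dict(node_label)
    # Request one GPU by the resource name the device plugin exposes.
    devices = spec.setdefault("domain", {}).setdefault("devices", {})
    devices.setdefault("gpus", []).append(
        {"name": "gpu0", "deviceName": gpu_resource}
    )
    return spec


if __name__ == "__main__":
    base = {"domain": {"devices": {"disks": []}}}
    pinned = pin_to_gpu_node(
        base,
        {"example.com/gpu": "true"},      # hypothetical node label
        "example.com/HYPOTHETICAL_GPU",   # hypothetical device name
    )
    print(json.dumps(pinned, indent=2))
```

The returned dictionary would replace the `spec.template.spec` section of a VirtualMachine manifest. Labeling the GPU nodes consistently across clusters keeps the same manifest usable everywhere.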

As with any Kubernetes add-on, managing KubeVirt becomes more challenging when you have a fleet of clusters, possibly running in multiple, different environments. It’s essential to ensure KubeVirt is deployed the same way in each cluster, with any tailoring limited to the hardware available.

Finally, Kubernetes skills are in short supply. Running a VM on KubeVirt generally requires the ability to understand and edit YAML configuration files. You’ll need to ensure that everyone who needs to deploy VMs on KubeVirt, from developers to operators, has the necessary skills and tools.

Published at DZone with permission of Kyle Hunter. See the original article here.

