Request Routing Through Service Mesh for WebSphere Liberty Profile Container on Kubernetes

I will demonstrate how to route requests through a service mesh to a WebSphere Liberty Profile (WLP) application server running as a Docker container image on Kubernetes.

Introduction

I will demonstrate how to route requests through a service mesh to a WebSphere Liberty Profile (WLP) application server running as a Docker container image on Kubernetes. I will also describe the Istio ingress gateway and the Gateway and Virtual Service resources created in Istio to route traffic to the Docker image with WLP installed. At the end, we will use the Istio Kiali dashboard to view requests routed through the Istio ingress gateway as well as requests that hit the Kubernetes service directly.

Environment Requirements

1. Refer to the following pages on the official Kubernetes website to install kubeadm and create the cluster:

Installing kubeadm | Kubernetes

Creating a cluster with kubeadm | Kubernetes

To better illustrate the proof of concept covered here, the Kubernetes environment used in this article is a cluster of one master and two worker nodes created with kubeadm.

Log in to the master node and run kubectl get nodes to list the nodes in the Kubernetes cluster, including their version and status.

2. MetalLB: MetalLB hooks into your Kubernetes cluster and provides a network load-balancer implementation. In short, it allows you to create Kubernetes services of type LoadBalancer in clusters that don't run on a cloud provider and thus cannot simply hook into paid products to provide load balancers.

Refer to the official MetalLB website to set up MetalLB for use with the Kubernetes cluster.

MetalLB, bare metal load-balancer for Kubernetes (universe.tf)
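As a rough illustration of what the MetalLB setup involves, the sketch below defines an address pool and announces it in layer 2 mode. It assumes MetalLB 0.13 or later, where configuration is done through custom resources, and it assumes layer 2 mode; the article does not state which version or mode was used. The resource names and the address range are hypothetical placeholders and must be replaced with free addresses from your own network.

```yaml
# Assumption: MetalLB >= 0.13 installed in the metallb-system namespace.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: example-pool            # hypothetical name
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.240-192.168.1.250   # hypothetical range; use unused IPs on your LAN
---
# Announce the pool above using layer 2 (ARP/NDP) mode.
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example-l2              # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - example-pool
```

With a pool like this in place, any service of type LoadBalancer is assigned an external IP from the configured range.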

3. Istio (Service Mesh) Setup on Kubernetes: Istio extends Kubernetes to establish a programmable, application-aware network using the powerful Envoy service proxy. Working with both Kubernetes and traditional workloads, Istio brings standard, universal traffic management, telemetry, and security to complex deployments.

Refer to the official Istio website to set up Istio on Kubernetes.

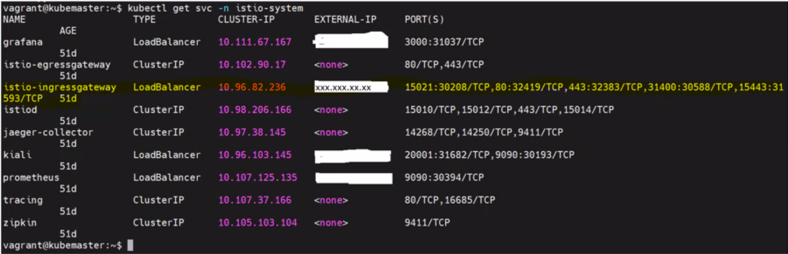

Log in to the master node and run the command shown in the snapshot to verify that Istio is installed with the Istio controller, the ingress and egress gateways, and the following add-ons: Grafana, Jaeger, Kiali, and Prometheus.

Distributed tracing is provided through integration with Jaeger. We can use Prometheus with Istio to record metrics that track the health of Istio and of the applications within the service mesh, and we can visualize those metrics using tools like Grafana and Kiali. Kiali is an observability console for Istio that visualizes the application traffic flow.

Deployment of WLP containers on Kubernetes

The WebSphere Liberty Profile application server container image is deployed on the Kubernetes cluster with 2 replicas using the following imperative commands.

Log in to the Kubernetes master node and run the following commands to create the deployment and expose it as a service of type LoadBalancer to access the application server.

kubectl create deployment libertyj --image=websphere-liberty --replicas=2

kubectl expose deployment libertyj --name libertyj1 --type LoadBalancer --protocol TCP --port 9088 --target-port 9080
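The two imperative commands above can also be expressed declaratively. The manifest below is a sketch of a roughly equivalent Deployment and Service, not the author's own file. It assumes the label app: libertyj and the container name websphere-liberty, which are the defaults kubectl create deployment derives from the deployment name and image; the containerPort entry is added here for documentation only.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: libertyj
  labels:
    app: libertyj               # assumed default label from kubectl create deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: libertyj
  template:
    metadata:
      labels:
        app: libertyj
    spec:
      containers:
        - name: websphere-liberty   # assumed default container name
          image: websphere-liberty
          ports:
            - containerPort: 9080   # port targeted by the service below
---
apiVersion: v1
kind: Service
metadata:
  name: libertyj1
spec:
  type: LoadBalancer            # MetalLB assigns the external IP
  selector:
    app: libertyj
  ports:
    - protocol: TCP
      port: 9088                # port exposed by the service
      targetPort: 9080          # port the container listens on
```

Saved to a file, this could be applied with kubectl apply -f in place of the two commands, which keeps the configuration under version control.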

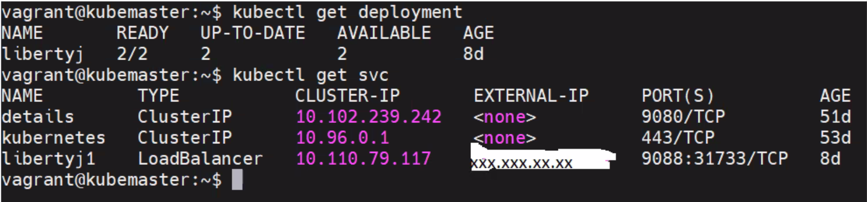

Run the command shown in the snapshot to see the deployment and load balancer status.

The service (libertyj1), being of type LoadBalancer, is published with an external IP by MetalLB. We can access the WebSphere Liberty Profile application server using the service's external IP and port, as shown below. The external IP in the above snapshot is masked as XXX.XXX.XX.XX for the rest of the article. With the URL below, we can directly access the WebSphere Liberty Profile application server via the Kubernetes LoadBalancer service libertyj1:

http://XXX.XXX.XX.XX:9088/index.html

However, our aim is to access the WebSphere Liberty Profile application server via the Istio service mesh and route the application traffic to the Kubernetes service. To route traffic from the Istio ingress gateway service to the service exposed for the WebSphere Liberty Profile application server, we need to create a gateway, a virtual service, and a destination rule in the Kubernetes default namespace.

YAML definition file for creating the gateway component:

    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: liberty-gateway
      namespace: default
    spec:
      selector:
        istio: ingressgateway
      servers:
      - hosts:
        - '*'
        port:
          name: http
          number: 80
          protocol: HTTP

YAML definition file for creating the Virtual Service component. In the virtual service definition, we need to reference the gateway created above and specify the route destination; a destination rule can optionally be defined for the same destination host.

apiVersion: networking.istio.io/v1beta1

kind: VirtualService

metadata:

name: httpbin

namespace: default

spec:

gateways:

- liberty-gateway

hosts:

- '*'

http:

- match:

- uri:

prefix: /index.html

route:

- destination:

host: libertyj1

port:

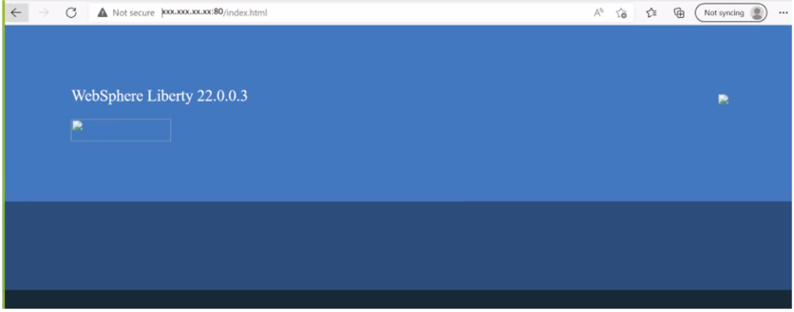

number: 9088Finally, by using istio service mesh ingress gateway, exposed external IP and port 80 mentioned in the gateway will be exposed as service to access the WebSphere liberty profile application server via the following URL http://XXX.XXX.XX.XX:80/index.html
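The destination rule mentioned earlier is not shown in the article. A minimal sketch of what one might look like for the libertyj1 service follows. The resource name and the round-robin load-balancing policy are assumptions chosen for illustration, not settings taken from the author's environment.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: libertyj1-destination   # hypothetical name
  namespace: default
spec:
  host: libertyj1               # the Kubernetes service the virtual service routes to
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN       # assumed policy; spreads requests across the 2 replicas
```

A destination rule applies after the virtual service has chosen the destination, so it is the place to set policies such as load balancing or connection limits for traffic sent to libertyj1.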

From the Kiali dashboard, we can visualize traffic reaching the libertyj1 service, which is exposed for the WebSphere Liberty Profile application server deployment, over two routes: one from the Istio ingress gateway (istio-ingressgateway) and the other directly through the LoadBalancer service exposed for the deployment.

Summary

WebSphere Liberty is built on Open Liberty. The combination of Open Liberty with traditional WebSphere compatibility features makes WebSphere Liberty an ideal target for modernizing existing applications. For example, when migrating existing monolithic applications to microservices, consider running WebSphere Liberty in containers orchestrated by Kubernetes, with intelligent traffic management provided by Istio (service mesh).
