Performance Comparison — Thread Pool vs. Virtual Threads (Project Loom) In Spring Boot Applications

This article compares different request-handling approaches in Spring Boot Applications: ThreadPool, WebFlux, Coroutines, and Virtual Threads (Project Loom).

In this article, we will briefly describe and roughly compare the performance of various request-handling approaches that can be used in Spring Boot applications.

Efficient request handling plays a crucial role in developing high-performance back-end applications. Traditionally, Spring Boot applications use embedded Tomcat, which processes requests with its default Thread Pool under the hood. However, alternative approaches have been gaining popularity recently: WebFlux, which handles requests reactively with an event loop, and Kotlin coroutines with their suspend functions.

Additionally, Project Loom, which introduces virtual threads, is scheduled to be released in Java 21. Although Java 21 has not been released yet, virtual threads have been available as a preview feature since Java 19, so we can already experiment with them. Therefore, in this article, we will also explore the use of virtual threads for request handling.

Furthermore, we will conduct a performance comparison of the different request-handling approaches using JMeter for high-load testing.

Performance Testing Model

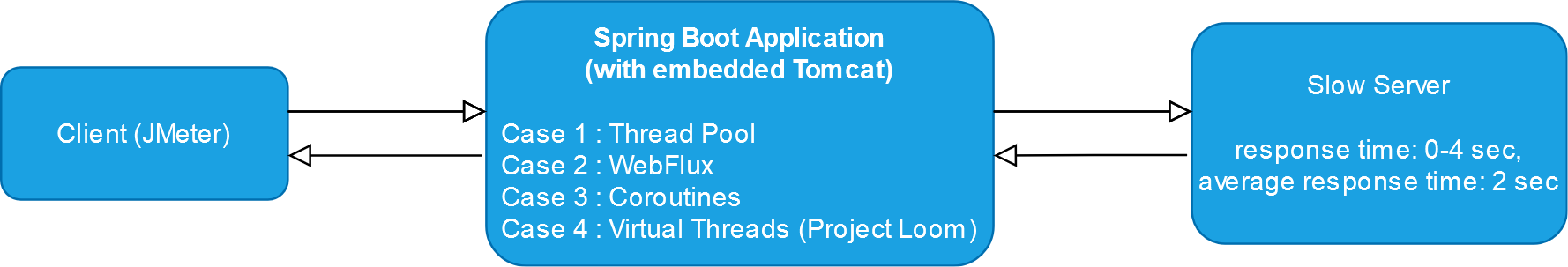

We will compare request-handling approaches using the following model:

The flow is simple:

- The client (JMeter) runs 500 threads in parallel. Each thread sends a request, waits for the response, and then immediately sends the next one. The request timeout is 10 seconds. The test lasts 30 seconds.

- The Spring Boot application under test receives requests from the client and, for each one, calls the slow server and waits for its response.

- The slow server responds after a random delay. The maximum response time is 4 seconds, and the average response time is 2 seconds.

The more requests that are handled, the better the performance results are.
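The slow server's code isn't shown here. As a rough idea of what it could look like, here is a minimal sketch built only on the JDK's `com.sun.net.httpserver` package. The class name `SlowServer`, the uniform delay distribution (which yields the stated 4-second maximum and 2-second average), and the JSON response body are assumptions; port 8000 matches the base URL used by the WebFlux client. It needs Java 21 for the virtual-thread executor.

```java
import com.sun.net.httpserver.HttpServer;

import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Random;
import java.util.concurrent.Executors;

public class SlowServer {

    // Assumption: delays are uniform in [0, 4000] ms, which gives a 2-second mean.
    static final int MAX_DELAY_MS = 4000;

    static int randomDelayMillis(Random random) {
        return random.nextInt(MAX_DELAY_MS + 1); // 0..4000 inclusive
    }

    public static void main(String[] args) throws IOException {
        Random random = new Random(); // java.util.Random is safe to share between threads
        // Port 8000 matches the WebClient base URL used in the WebFlux section.
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 8000), 1024);
        server.createContext("/", exchange -> {
            try {
                Thread.sleep(randomDelayMillis(random)); // simulate a slow downstream service
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            byte[] body = "{\"status\":\"ok\"}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        // One virtual thread per exchange (Java 21), so sleeping handlers never cap concurrency.
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        System.out.println("Slow server listening on http://127.0.0.1:8000/");
    }
}
```

Serving each exchange on its own virtual thread keeps the fake server itself from becoming the bottleneck of the test.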

1. Thread Pool

By default, Tomcat uses a Thread Pool for request handling.

The concept is simple: when a request arrives at Tomcat, it assigns a thread from the thread pool to handle the request. This allocated thread remains blocked until a response is generated and sent back to the client.

By default, the Thread Pool contains up to 200 threads, which means that at most 200 requests can be processed at the same time.

But this and other parameters are configurable.
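The effect of that limit can be reproduced without Tomcat. This hypothetical simulation runs blocking tasks on a fixed pool of platform threads, scaled down so it finishes quickly: each task sleeps the way a request thread would while waiting for the slow server, and the extra tasks simply queue until a thread frees up. The class name, pool size, and timings are assumptions.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class FixedPoolDemo {

    /** Runs blocking tasks on a fixed pool and returns the peak number running at once. */
    static int peakConcurrency(int poolSize, int requests, long blockMillis) {
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < requests; i++) {
            pool.submit(() -> {
                peak.accumulateAndGet(running.incrementAndGet(), Math::max);
                try {
                    Thread.sleep(blockMillis); // stands in for the blocking call to the slow server
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int peak = peakConcurrency(4, 12, 200);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        // Extra tasks queue up: 12 tasks on 4 threads need at least 3 x 200 ms.
        System.out.println("peak concurrency = " + peak + ", elapsed = " + elapsedMs + " ms");
    }
}
```

With 4 threads and 12 tasks, the peak concurrency never exceeds 4, and the run takes at least three sleep periods, which is the same queueing that 500 clients experience against 200 Tomcat threads.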

Default Thread Pool

Let's measure the performance of a simple Spring Boot application with embedded Tomcat and the default Thread Pool.

The Thread Pool holds 200 threads. On each request, the server makes a blocking call to another server with an average response time of two seconds, so each thread completes about one request every two seconds. We can therefore anticipate a throughput of about 200 / 2 = 100 requests per second.

| Total requests | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|
| 3356 | 91.2 | 4787 | 155 | 6645 | 7304 | 0.00 |

Remarkably, the actual result is quite close, with a measured throughput of 91.2 requests per second.
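The 100 req/sec estimate is an application of Little's law: throughput equals the number of requests in flight divided by the average latency. The sketch below (the class and method names are hypothetical, and the model ignores CPU and network overhead) also accounts for the client side: since each of the 500 JMeter threads keeps one request outstanding, no approach in this test can exceed about 500 / 2 = 250 req/sec.

```java
public class ThroughputEstimate {

    /**
     * Little's law: throughput = requests in flight / average latency.
     * Requests in flight are capped both by the server's worker threads and by the
     * client threads, each of which keeps exactly one request outstanding.
     */
    static double expectedThroughput(int serverWorkers, int clientThreads, double avgLatencySeconds) {
        int inFlight = Math.min(serverWorkers, clientThreads);
        return inFlight / avgLatencySeconds;
    }

    public static void main(String[] args) {
        System.out.println(expectedThroughput(200, 500, 2.0));               // 100.0 (default pool)
        System.out.println(expectedThroughput(400, 500, 2.0));               // 200.0 (increased pool)
        System.out.println(expectedThroughput(Integer.MAX_VALUE, 500, 2.0)); // 250.0 (client-bound)
    }
}
```

The measured values stay somewhat below these ceilings because real requests also spend time on networking and request processing.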

Increased Thread Pool

Let's increase the maximum number of threads in the Thread Pool to 400 using the application properties (YAML format):

```yaml
server:
  tomcat:
    threads:
      max: 400
```

Let's run the tests again:

| Total requests | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|
| 6078 | 176.7 | 2549 | 10 | 4184 | 4855 | 0.00 |

As expected, doubling the number of threads in the Thread Pool roughly doubled the throughput (from 91.2 to 176.7 requests per second).

But note that increasing the number of threads in a Thread Pool without considering the system's capacity and resource limitations can hurt performance, stability, and overall system behavior: every platform thread reserves memory for its stack and adds context-switching overhead. It is crucial to tune the Thread Pool size carefully based on the specific requirements and capabilities of the system.

2. WebFlux

Instead of assigning a dedicated thread per request, WebFlux employs an event loop model with a small number of threads, typically referred to as an event loop group. This allows it to handle a high number of concurrent requests with a limited number of threads. Requests are processed asynchronously, and the event loop can efficiently handle multiple requests concurrently by leveraging non-blocking I/O operations. WebFlux is well-suited for scenarios that require high scalability, such as handling a large number of long-lived connections or streaming data.
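The core idea can be illustrated with plain JDK classes. This is not WebFlux or Netty code; it is an analogy under the assumption that waiting for the slow server can be modeled as a timer. A single scheduler thread keeps thousands of requests in flight because nothing blocks while waiting: each request only registers a callback that runs when its simulated response is ready. The class and method names are hypothetical.

```java
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class EventLoopSketch {

    record Result(int completed, int threadsUsed, long elapsedMillis) {}

    static Result run(int requests, long latencyMillis) {
        // One thread plays the role of the event loop.
        ScheduledExecutorService loop = Executors.newSingleThreadScheduledExecutor();
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        AtomicInteger completed = new AtomicInteger();
        long start = System.nanoTime();
        try {
            CompletableFuture<?>[] responses = new CompletableFuture<?>[requests];
            for (int i = 0; i < requests; i++) {
                CompletableFuture<String> response = new CompletableFuture<>();
                // Nothing blocks while "waiting": we only register a callback that
                // completes the response once the simulated I/O latency has passed.
                loop.schedule(() -> {
                    threadNames.add(Thread.currentThread().getName());
                    completed.incrementAndGet();
                    response.complete("ok");
                }, latencyMillis, TimeUnit.MILLISECONDS);
                responses[i] = response;
            }
            CompletableFuture.allOf(responses).join();
        } finally {
            loop.shutdown();
        }
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        return new Result(completed.get(), threadNames.size(), elapsedMillis);
    }

    public static void main(String[] args) {
        // 10,000 pending "requests" with 2 s latency each, all served by one thread.
        System.out.println(run(10_000, 2_000));
    }
}
```

With 10,000 requests and a 2-second latency each, the run should finish in a little over 2 seconds on one thread, whereas a pool of 200 blocking threads would need about 100 seconds for the same work.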

Ideally, WebFlux applications should be written in a fully reactive way, which is not always easy. But our application is simple, so we can just use a WebClient for calls to the slow server.

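```java
import org.springframework.context.annotation.Bean;
import org.springframework.web.reactive.function.client.WebClient;

// Non-blocking HTTP client for calls to the slow server.
@Bean
public WebClient slowServerClient() {
    return WebClient.builder()
            .baseUrl("http://127.0.0.1:8000")
            .build();
}
```

In the context of Spring WebFlux, a RouterFunction is a functional alternative to annotation-based request mapping and handling: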

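```java
import static org.springframework.web.reactive.function.server.RequestPredicates.GET;
import static org.springframework.web.reactive.function.server.RouterFunctions.route;
import static org.springframework.web.reactive.function.server.ServerResponse.ok;

import org.springframework.context.annotation.Bean;
import org.springframework.web.reactive.function.client.WebClient;
import org.springframework.web.reactive.function.server.RouterFunction;
import org.springframework.web.reactive.function.server.ServerRequest;
import org.springframework.web.reactive.function.server.ServerResponse;

// GET / streams the slow server's response body back without blocking a thread.
@Bean
public RouterFunction<ServerResponse> routes(WebClient slowServerClient) {
    return route(GET("/"), (ServerRequest req) -> ok()
            .body(slowServerClient
                            .get()
                            .exchangeToFlux(resp -> resp.bodyToFlux(Object.class)),
                    Object.class));
}
```

But traditional annotated controllers could still be used instead.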

So, let's run the tests:

| Total requests | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|
| 7443 | 219.2 | 2068 | 12 | 3699 | 4381 | 0.00 |

The results are even better than with the increased Thread Pool. Still, both Thread Pool and WebFlux have their strengths and weaknesses, and the choice depends on the specific requirements, the nature of the workload, and the expertise of the development team.

3. Coroutines and WebFlux

Kotlin coroutines offer another way to handle requests concurrently without blocking threads.

Spring WebFlux supports coroutines for request handling, so let's try to write such a controller:

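```kotlin
import kotlinx.coroutines.flow.Flow
import org.springframework.web.bind.annotation.GetMapping
import org.springframework.web.reactive.function.client.awaitExchange
import org.springframework.web.reactive.function.client.bodyToFlow

// Inside a @RestController that has the slowServerClient WebClient injected.
@GetMapping
suspend fun callSlowServer(): Flow<Any> {
    return slowServerClient.get().awaitExchange().bodyToFlow(String::class)
}
```

A suspend function can wait for long-running operations, such as the call to the slow server, by suspending instead of blocking the underlying thread. The Kotlin Coroutine Fundamentals article describes the basics pretty well.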

So, let's run the tests again:

| Total requests | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|
| 7481 | 220.4 | 2064 | 5 | 3615 | 4049 | 0.00 |

Roughly speaking, the results do not differ from those of the WebFlux application without coroutines.

However, apart from the coroutines, the application still ran on the same WebFlux stack, so this test probably didn't reveal the full potential of coroutines. Next time, it's worth trying Ktor.

4. Virtual Threads (Project Loom)

Virtual threads, or fibers, are a concept introduced by Project Loom.

Virtual threads have a significantly lower memory footprint compared to native threads, allowing applications to create and manage a much larger number of threads without exhausting system resources. They can be created and switched between more quickly, reducing the overhead associated with thread creation.
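Creating a virtual thread uses the same `java.lang.Thread` API as creating a platform thread; only the builder differs. A minimal sketch, assuming Java 21 (on Java 19 and 20, these calls are preview APIs that need `--enable-preview`); the class and helper names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicBoolean;

public class VirtualThreadBasics {

    /** Starts a thread from the given builder, waits for it, and reports whether it ran as a virtual thread. */
    static boolean ranAsVirtual(Thread.Builder builder) {
        AtomicBoolean virtual = new AtomicBoolean();
        Thread thread = builder.start(() -> virtual.set(Thread.currentThread().isVirtual()));
        try {
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return virtual.get();
    }

    public static void main(String[] args) {
        // Same Thread API for both kinds of threads; only the builder differs.
        System.out.println(ranAsVirtual(Thread.ofVirtual().name("virtual-handler")));   // true
        System.out.println(ranAsVirtual(Thread.ofPlatform().name("platform-handler"))); // false
    }
}
```

Because the API is the same, existing thread-per-request code can move to virtual threads mostly by changing how threads are created, which is exactly what the Tomcat executor swap does.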

The switching of virtual thread execution is handled internally by the Java Virtual Machine (JVM) and can happen on:

- Voluntary suspension: A virtual thread can explicitly suspend its execution using methods like `Thread.sleep()` or `CompletableFuture.join()`. When a virtual thread suspends itself, its execution is temporarily paused, and the JVM can switch to executing another virtual thread.
- Blocking operation: When a virtual thread encounters a blocking operation, such as waiting for I/O or acquiring a lock, it can be automatically suspended. This allows the JVM to use the underlying native threads more efficiently by running other virtual threads that are ready. (In Java 21, however, blocking inside a `synchronized` block pins the carrier thread instead of releasing it.)
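Suspension is what lets a large number of virtual threads block at the same time. In this sketch (Java 21; the names are hypothetical), 10,000 tasks each sleep for one second on their own virtual thread. Because a sleeping virtual thread releases its carrier, and the default scheduler uses a small pool of carrier threads sized to the number of available processors, the batch should finish in roughly one second instead of queueing behind a fixed thread limit. Exact timings depend on the machine.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ManyVirtualThreads {

    /** Starts one virtual thread per task; each task just sleeps. Returns how many tasks finished. */
    static int sleepConcurrently(int tasks, Duration sleep) {
        AtomicInteger finished = new AtomicInteger();
        // close() at the end of the try block waits for all submitted tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        // The virtual thread unmounts while sleeping, freeing its carrier thread.
                        Thread.sleep(sleep);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    finished.incrementAndGet();
                });
            }
        }
        return finished.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int finished = sleepConcurrently(10_000, Duration.ofSeconds(1));
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(finished + " tasks finished in " + elapsedMs + " ms on a machine with "
                + Runtime.getRuntime().availableProcessors() + " processors");
    }
}
```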

If you're interested in the topic of virtual and carrier threads, read this nice article on DZone — Java Virtual Threads — Easy Introduction.

Virtual threads will finally be released in Java 21, but we can already test them since Java 19, where they are a preview feature. We just have to enable preview features explicitly, both when compiling and when running the application:

`--enable-preview`

Basically, all we have to do is replace Tomcat's Thread Pool with a virtual-thread-based executor:

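```java
import java.util.concurrent.Executors;

import org.springframework.boot.web.embedded.tomcat.TomcatProtocolHandlerCustomizer;
import org.springframework.context.annotation.Bean;

// Tomcat hands each request to a new virtual thread instead of a pooled platform thread.
@Bean
public TomcatProtocolHandlerCustomizer<?> protocolHandler() {
    return protocolHandler ->
            protocolHandler.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
}
```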

So, instead of the Thread Pool executor, we've started using virtual threads.

Let's run the tests:

| Total requests | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|
| 7427 | 219.3 | 2080 | 12 | 3693 | 4074 | 0.00 |

The results are practically the same as in the case of WebFlux, but we didn't utilize any reactive techniques at all. Even for calls to the slow server, a regular blocking RestTemplate was used. All we did was replace the Thread Pool with a virtual thread executor.
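The same effect can be sketched without Spring. Here, as an assumption, the JDK's blocking `HttpClient.send` stands in for `RestTemplate`, and a local fake slow server replies after a fixed delay. Each call is ordinary blocking code running on its own virtual thread; 500 calls mirror the 500 JMeter threads. All names are hypothetical, and Java 21 is required.

```java
import com.sun.net.httpserver.HttpServer;

import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class BlockingCallsOnVirtualThreads {

    /** Local stand-in for the slow server: answers every request after a fixed delay. */
    static HttpServer startSlowServer(long delayMillis) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 1024);
            server.createContext("/", exchange -> {
                try {
                    Thread.sleep(delayMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                byte[] body = "ok".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sends {@code calls} plain blocking GET requests, each on its own virtual thread. */
    static List<Integer> callConcurrently(URI uri, int calls) {
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        List<Future<Integer>> futures = new ArrayList<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < calls; i++) {
                // Ordinary blocking code: send() parks the virtual thread until the response arrives.
                futures.add(executor.submit(
                        () -> client.send(request, HttpResponse.BodyHandlers.ofString()).statusCode()));
            }
        }
        List<Integer> statuses = new ArrayList<>();
        for (Future<Integer> future : futures) {
            try {
                statuses.add(future.get());
            } catch (InterruptedException | ExecutionException e) {
                throw new IllegalStateException(e);
            }
        }
        return statuses;
    }

    public static void main(String[] args) {
        HttpServer server = startSlowServer(2_000);
        try {
            URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/");
            long start = System.nanoTime();
            List<Integer> statuses = callConcurrently(uri, 500);
            long elapsedMs = (System.nanoTime() - start) / 1_000_000;
            System.out.println(statuses.size() + " responses in " + elapsedMs + " ms");
        } finally {
            server.stop(0);
        }
    }
}
```

With a 2-second delay, the 500 calls should complete in a little over 2 seconds on a typical machine, even though no reactive API is involved.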

Conclusion

Let's gather the test results into one table:

| Request handler | Total requests in 30 seconds | Throughput, req/sec | Avg response time, ms | Min response time, ms | 90% line, ms | Max response time, ms | Error rate, % |
|---|---|---|---|---|---|---|---|
| Thread Pool (200 threads) | 3356 | 91.2 | 4787 | 155 | 6645 | 7304 | 0.00 |
| Increased Thread Pool (400 threads) | 6078 | 176.7 | 2549 | 10 | 4184 | 4855 | 0.00 |
| WebFlux | 7443 | 219.2 | 2068 | 12 | 3699 | 4381 | 0.00 |
| WebFlux + Coroutines | 7481 | 220.4 | 2064 | 5 | 3615 | 4049 | 0.00 |
| Virtual Threads (Project Loom) | 7427 | 219.3 | 2080 | 12 | 3693 | 4074 | 0.00 |

The performance tests conducted in this article are quite superficial, but we can draw some preliminary conclusions:

- The Thread Pool showed the weakest results. Increasing the number of threads can improve them, but only within the system's capacity and resource limitations. Nevertheless, a thread pool can still be a viable option, especially when dealing with numerous blocking operations.

- WebFlux demonstrated really good results. However, to benefit fully from its performance, the whole code base should be written in a reactive style.

- Using Coroutines in conjunction with WebFlux yielded similar performance results. Perhaps they should be tried with Ktor, a framework specifically designed to harness the power of coroutines.

- Using Virtual Threads (Project Loom) also yielded similar results. Notably, we didn't modify the application code or employ any reactive techniques; the only change was replacing the Thread Pool with a virtual thread executor. Despite this simplicity, throughput more than doubled compared to the default Thread Pool (219.3 vs. 91.2 requests per second).

Therefore, we can tentatively conclude that the release of virtual threads in Java 21 is likely to significantly change the approach to request handling in existing servers and frameworks.

The entire code, along with the JMeter test file, is available on GitHub as usual.

Opinions expressed by DZone contributors are their own.
