QA Approach for Data Migration

Data migration can be a pain. Read on to learn how about the common mistakes and how to avoid them in your next migration project.

Join the DZone community and get the full member experience.

Join For FreeSeveral systems are getting migrated to latest technologies these days. Cloud enablement is one of the major reasons for migration. The other reasons being cost reduction, productivity improvement, and flexibility in managing data. When a system gets migrated, the data held by the system cannot be ignored and needs to be migrated. The data migration process involves huge risks because of the high volume and criticality of the data to be migrated. The data consists of both business data and customer data. Business intelligence is contained in the business data, which is mainly the rules and parameters used for the successful processing of the application; whereas the customer data includes data such as buyer demographics and consumer ratings. There are several steps involved in the successful migration of data from one system to another.

First and foremost is the planning and determination of the scope of the migration. Once the scope is identified, we can to list out the components with the help of SMEs and people working on the system. Planning should be done for allocating the resources, including the tools and the phases of data migration. This includes the final step of the actual migration of production data, which may impact the availability or downtime of the system. Plan in such a way that the business continuity is interrupted at a minimum level.

A thorough analysis by the development team, testing team, and business analysts is required in order to understand the system. Create artifacts and have them reviewed by analysts to make sure that the teams have a shared and proper understanding of the existing system. Back up the data that is to be migrated. This can be used in the event of a major data migration failure.

There are two major things to be considered during data migration: data volume and data value.

Data volume: Both the initial data and the delta (or the rate of change in the data) is a major criterion for data migration. Initial data involves historical data as well, which needs to be loaded in a single step. We also need to consider a change in the architecture/design or parallel processing for loading huge volumes of data within a limited time. This will ensure reduced downtime of the system, which is critical in systems like banking and FinTech.

Data Value: Data value is all about the different types of data and its format. The different data types are character, numeric, float, double, etc. The format of the data is values such as, date, address, phone number, etc. Analysis is required to get the source and target data structure and map the corresponding fields before doing the migration process which is either automatic, manual, or a combination of both.

The following phases are involved in data migration.

- Identify and segregate the databases, fields, and programs used in the application.

- Validation of metadata, which includes the details of the fields and databases.

- Map the database fields with the corresponding program level fields.

- Identify the business rules performed and segregate the one required for data preparation.

- Find the possible values of each of the database fields using the mapped program level fields and their usage across the entire system.

- Identify the data base primary key, foreign keys, and indexes used.

- Analyze the program fields for possible relationships between the databases.

- Fields used across the databases and the key fields to be identified by analyzing the application.

- Analyzing the data validation programs for possible values of the database fields

Even if there is an exact match for the databases in the source and target systems, the target system may generate errors when executed. This is because the target system's design/language is entirely different from the source system. So, the handling of data in the target system should be different from that of the source system. One such case is the conditional logic issue which may happen due to EBCDIC and ASCII difference. So, the analysts or architects should foresee these kinds of errors before data migration and the data should be converted accordingly.

Challenges of Data Migration

Truncation and precision of data: In this case, the data to be migrated is partially moved to the target system. It can be challenging because of the additional padded spaces or a smaller number of bytes in the source data.

Data type mismatch: The data types must be mapped correctly. Numeric types should be mapped with integers, float types must be identified, and so on. Variable length fields should also be mapped correctly and handled properly.

Null data translation: Null data should be translated to the Target as null itself and not as spaces or default values. The application can have some checks for null validation. The target application should be modified to handle the null values, if it doesn’t support them. The handling of null values should be converted to the equivalents in the target architecture as well.

Incorrect translation or data corruption: There can be different data sources and data formats that are stored in multiple source tables. A thorough analysis of the data is required when the architecture or design changes from a legacy system to a modern system. An incorrect translation of data, or corrupted data, impacts the target system.

Misplaced data: This is data that has been lost while performing the migration. It happens mainly because of the process followed while backing up the data or because of some illogical analysis of the system.

Extra records: Duplicate records can come from different data sources. So, before loading the data to the target system, a filtering and transformation of the data needs to be done in order to remove the duplicate records.

Transformation logic errors: There can be logic errors that lead to the improper transformation of data. This can give unexpected results upon execution in the target system.

Hidden requirements: There are instances where some requirements are missed due to the absence of subject matter experts on the source system. SMEs in the system would be able to tell the reason behind the design. If that is not addressed during the build of the target system, it will affect the data migration project.

Numeric field precision: This is mostly valid in the case of calculated fields and reports. Field precision of numeric data used in reports needs to be used as it is in the target system. Invalid values in these may produce incorrect reports, as the values will come with incorrect field descriptions. For example, we gave a percentage field which should have a value between 0 and 100 only. The value should be between 0 and 1 while used in calculations. 25% or 0.25 should be used for percentage values in this example.

Rejected rows: Some data might get rejected during data extraction. This usually happens when an automated migration of data is done. We need to have a report on these rejected rows with the values as well. This will be useful to reload the rejected data back into the target system if required.

Data Cleansing: The process of preparing data for the migration. This is required mainly because the storage method and the architecture of the target system differs from the source system. Minor manipulation or transformation of the data is also involved in the cleansing process to make the data compatible with the target system.

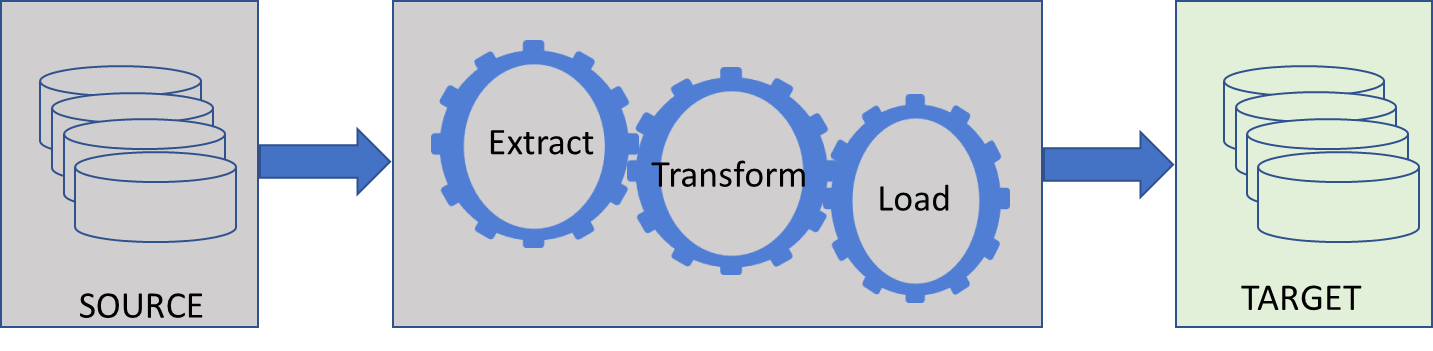

Data Quality: Execute some mock migrations and pilot migrations before the final migration. It may give some errors which we never expected during any of the previous phases. Customize the ETL tools to handle these kinds of errors in the transformation process. This is to ensure the quality of the migration.

Schema Validation: Automate processes to validate the data by comparing the source and the target systems. Scripts can be written in Perl, Python, or any language of choice to do it. Export both the source and target metadata into a table and execute the script against it to identify any mismatches in views, stored procedures, etc.

Data validation: Here each data element in the source needs to be compared with the target. Scripts need to be executed to compare the column values. A mismatch in data needs to be reported for reconciliation. The report should list the missing column and data with the key value, if any.

Once the data is migrated, have the scripts executed against the source and target databases to find any errors or missing data in the mapped fields. Rectify these errors using the back up data you created. Do a thorough testing to ensure that the data is loaded to the proper location and is accessible from various systems and applications as before.

Opinions expressed by DZone contributors are their own.

Comments