Pinterest's Transition to HTTP/3: A Boost in Performance and Reliability

Pinterest's adoption of HTTP/3 is a strategic move to enhance the platform's networking performance. It also helps to leverage the protocol's advanced features.


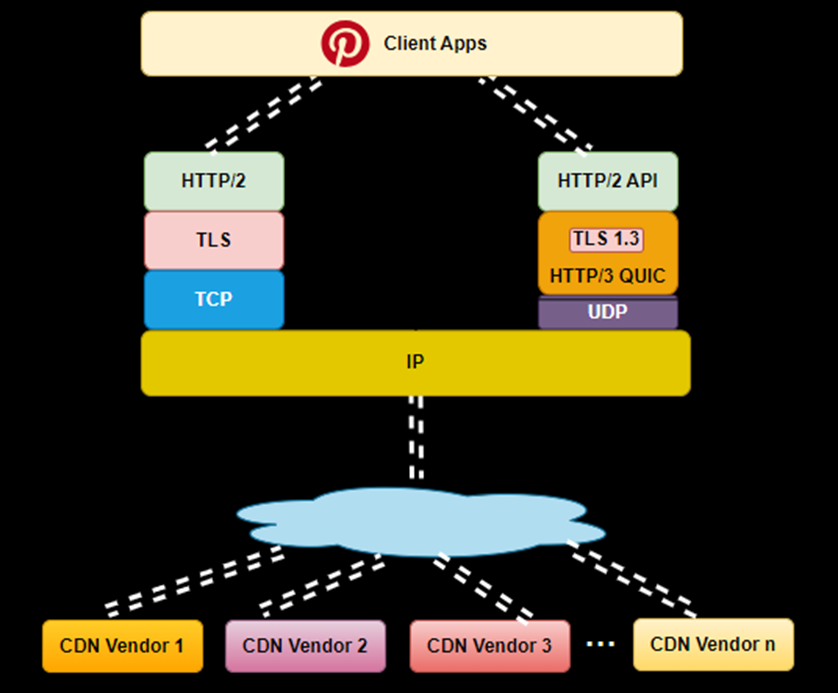

In a recent announcement, Pinterest revealed its successful migration from HTTP/2 to HTTP/3. This marked a significant improvement in its networking infrastructure. The aim was to enhance the user experience and improve critical business metrics by leveraging the capabilities of the modern HTTP/3 protocol.

The Journey to HTTP/3

Network performance, such as low latency and high throughput, is critical to Pinners’ experience.

In 2021, a group of client networking enthusiasts at Pinterest started thinking about adopting HTTP/3 for Pinterest, from traffic/CDN to client apps. They worked on it throughout 2022 and achieved the goal in early 2023.

How Does HTTP/3 Help?

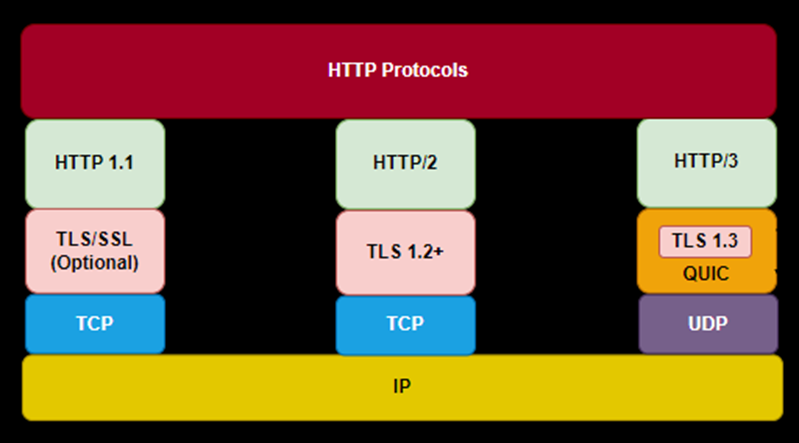

HTTP is the foundation of data communication on the World Wide Web.

If you don’t know what HTTP is and how it works, learn more here before proceeding further.

HTTP/3 is a modern HTTP protocol that has many advantages compared to HTTP/2, including but not limited to:

- No TCP head of line blocking problem in comparison to HTTP/2.

- Connection migration across IP addresses, which is good for mobile use cases.

- Ability to change/tune Loss Detection and Congestion Control parameters.

- Reduced connection time (0-RTT, while HTTP/2 still requires TCP 3-way handshakes).

- More efficient for large payload use cases, such as image downloading, video streaming, etc.

Let's take a closer look at each of these advantages to understand what they mean and how they help Pinterest.

1. Head-of-Line Blocking

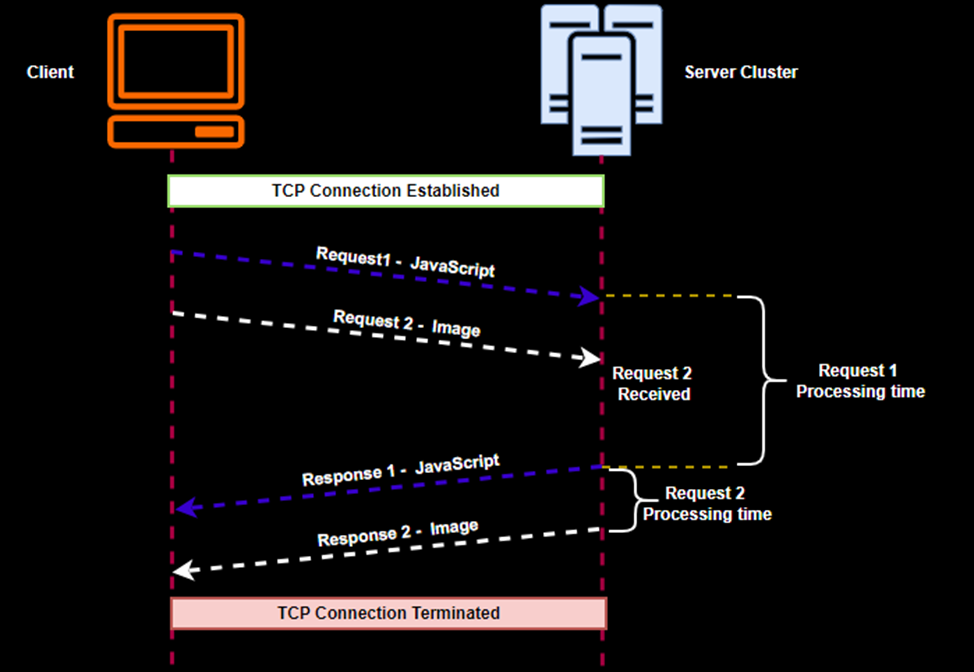

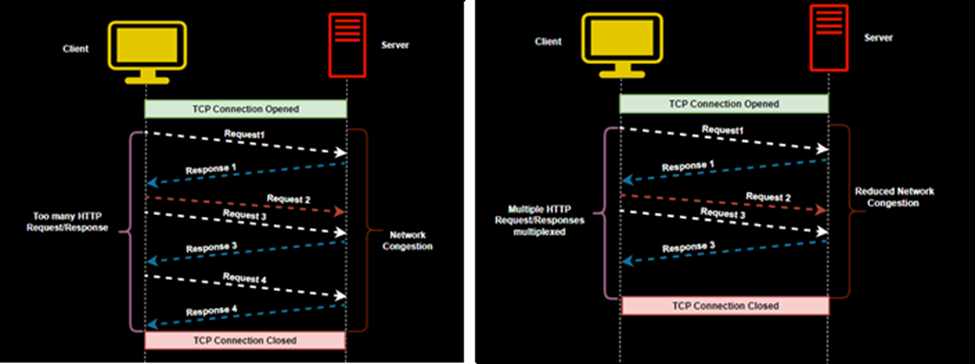

In HTTP/2 and earlier versions, a problem called "head-of-line blocking" can occur. Head-of-line blocking is a performance degradation phenomenon where one HTTP request blocks other requests from processing.

This means that if one resource (like an image or a script) encounters a delay in transmission, it can block other resources from being delivered, even if they are ready. This can impact the overall webpage loading time.

For example, let's consider a scenario where a web page consists of several resources: HTML, CSS, JavaScript, and an image. If the JavaScript file is large, it blocks the image requested after it until the JavaScript has fully arrived.


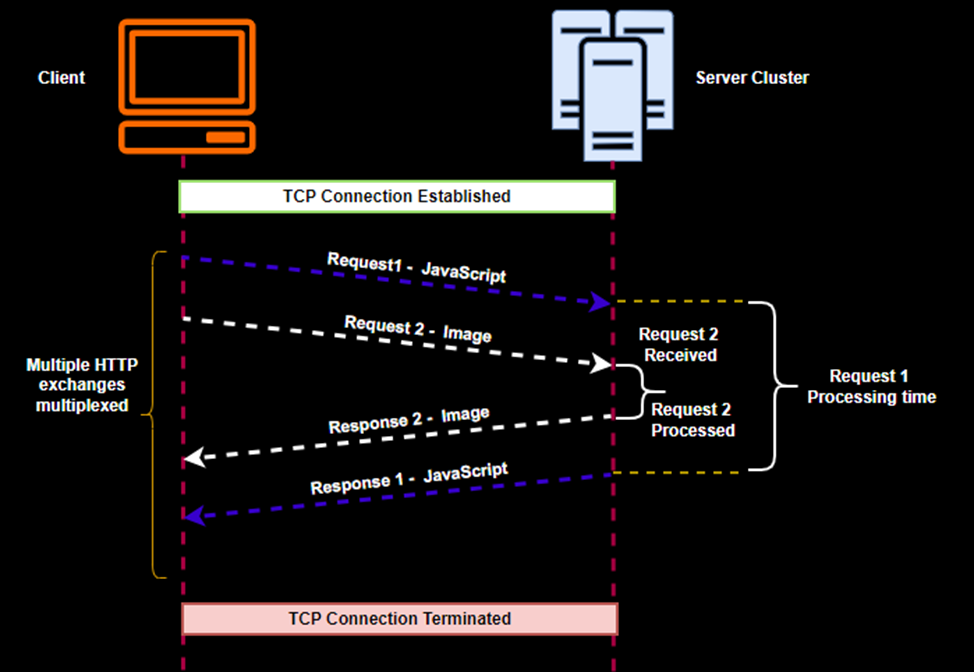

To overcome this, HTTP/2 introduced HTTP streams. A stream is an abstraction that allows different HTTP exchanges to be multiplexed onto the same TCP connection.

Streams don't need to be sent in order and are independent of other streams.

This eliminates head-of-line blocking at the application layer. But head-of-line blocking still exists at the TCP transport layer in HTTP/2: TCP delivers bytes strictly in order, so a single lost packet stalls every stream on the connection until it is retransmitted.

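HTTP/3 uses QUIC, which runs over UDP, instead of TCP as the transport protocol, thereby removing TCP head-of-line blocking at the transport layer. QUIC tracks each stream independently, so a lost packet delays only the stream it belongs to. This is particularly helpful on mobile networks, where packet loss is common.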
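The difference between connection-wide ordering and per-stream ordering can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real stack: the function name `delivery_times`, the one-packet-per-tick timing, and the fixed retransmission delay are all assumptions made for illustration.

```python
def delivery_times(packets, lost_seq, retransmit_delay, per_stream_ordering):
    """Return {stream: time at which its last packet reaches the application}.

    packets: list of (seq, stream) pairs; packet `seq` normally arrives at
    time `seq`. The packet numbered `lost_seq` is lost once and arrives
    `retransmit_delay` ticks late instead.
    """
    arrival = {
        seq: seq + retransmit_delay if seq == lost_seq else seq
        for seq, _ in packets
    }
    done = {}
    for seq, stream in packets:
        if per_stream_ordering:
            # QUIC-like: wait only for earlier packets of the same stream.
            blockers = [s for s, st in packets if st == stream and s <= seq]
        else:
            # TCP-like: wait for every earlier packet on the connection.
            blockers = [s for s, _ in packets if s <= seq]
        delivered_at = max(arrival[s] for s in blockers)
        done[stream] = max(done.get(stream, 0), delivered_at)
    return done


packets = [(0, "css"), (1, "js"), (2, "image"), (3, "js"), (4, "image")]
tcp_like = delivery_times(packets, lost_seq=1, retransmit_delay=10,
                          per_stream_ordering=False)
quic_like = delivery_times(packets, lost_seq=1, retransmit_delay=10,
                           per_stream_ordering=True)
print(tcp_like)   # image is held back until the lost js packet arrives
print(quic_like)  # image completes without waiting for js
```

In the TCP-like run, the image finishes at the same time as the delayed JavaScript; in the QUIC-like run, only the JavaScript stream pays for the loss.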

2. Connection Migration Across IP Addresses

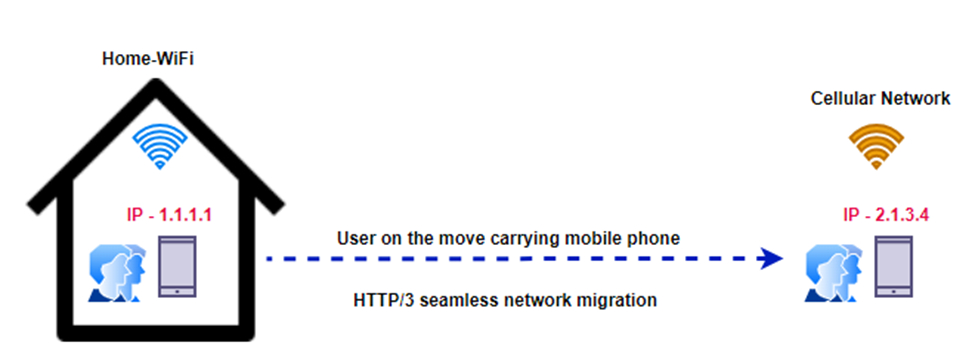

QUIC allows for connection migration, which means that if the client's IP address changes (common in mobile scenarios), the connection can be maintained. This is beneficial for mobile use cases, ensuring a more seamless transition even if the client's network conditions change.

For example, consider a user who is using a mobile device, such as a smartphone or tablet, to access Pinterest that relies on HTTP/3 for communication. The user decides to leave home and starts using cellular data while on the move. This transition involves a switch from the home Wi-Fi network to the cellular network, resulting in the device acquiring a new IP address associated with the cellular network.

HTTP/3's capability to migrate connections across IP addresses ensures a seamless transition during this switch between networks.
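One way to picture why this works: a TCP connection is identified by the client's address and port, whereas a QUIC connection is identified by a connection ID carried in each packet. The sketch below is a simplified assumption-laden model; the class names, the `handle` method, and the addresses are hypothetical, and real QUIC also validates the new network path before fully trusting it, which is omitted here.

```python
class TcpLikeServer:
    """Looks up sessions by the client's (ip, port) address."""

    def __init__(self):
        self._sessions = {}

    def handle(self, src_addr, payload):
        is_new = src_addr not in self._sessions
        self._sessions.setdefault(src_addr, []).append(payload)
        return is_new  # True means a fresh connection had to be set up


class QuicLikeServer:
    """Looks up sessions by connection ID, independent of the address."""

    def __init__(self):
        self._sessions = {}

    def handle(self, src_addr, connection_id, payload):
        is_new = connection_id not in self._sessions
        session = self._sessions.setdefault(
            connection_id, {"addr": src_addr, "data": []}
        )
        session["addr"] = src_addr  # remember the client's latest address
        session["data"].append(payload)
        return is_new


wifi = ("192.0.2.10", 50000)      # example address on home Wi-Fi
cellular = ("198.51.100.7", 41000)  # example address on cellular data

tcp = TcpLikeServer()
quic = QuicLikeServer()
print(tcp.handle(wifi, "GET /feed"), tcp.handle(cellular, "GET /pin/1"))
print(quic.handle(wifi, "c1", "GET /feed"),
      quic.handle(cellular, "c1", "GET /pin/1"))
```

After the switch to cellular, the address-keyed server sees an unknown client and must start over, while the connection-ID-keyed server continues the existing session.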

3. Able To Change/Tune Loss Detection and Congestion Control

HTTP/3 provides the ability to change and tune loss detection and congestion control mechanisms. QUIC is typically implemented in user space on top of UDP rather than in the operating system kernel, so applications can adjust these mechanisms themselves. This allows for customization based on specific use cases or network conditions. Fine-tuning these aspects contributes to better performance and reliability.

In networking, "loss detection" refers to the ability of a communication protocol to identify when data packets are lost or not successfully delivered to the intended recipient.

For example, consider a scenario where a mobile device experiences intermittent connectivity. In this case, the loss detection mechanism in HTTP/3 can be tuned to be more tolerant of brief connection drops, minimizing unnecessary retransmissions and optimizing data transfer.
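As a rough illustration of what "tuning" can mean, the sketch below follows the two loss rules described in RFC 9002 (a packet-reordering threshold and a time threshold) and exposes them as configuration. The defaults are the values RFC 9002 recommends; the names `LossDetectionConfig` and `is_lost` and the "tolerant" settings are hypothetical, and nothing here reflects Pinterest's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class LossDetectionConfig:
    packet_threshold: int = 3       # RFC 9002 recommended kPacketThreshold
    time_threshold: float = 9 / 8   # RFC 9002 recommended kTimeThreshold
    granularity_ms: float = 1.0     # RFC 9002 recommended kGranularity


def is_lost(packet_number, sent_time_ms, largest_acked, now_ms,
            smoothed_rtt_ms, latest_rtt_ms, config):
    """Decide whether an unacknowledged packet should be declared lost."""
    if packet_number > largest_acked:
        return False  # nothing sent after it has been acknowledged yet
    # Rule 1: enough later packets have already been acknowledged.
    reordered = largest_acked - packet_number >= config.packet_threshold
    # Rule 2: the packet has been outstanding for too long.
    loss_delay = max(
        config.time_threshold * max(smoothed_rtt_ms, latest_rtt_ms),
        config.granularity_ms,
    )
    timed_out = now_ms - sent_time_ms >= loss_delay
    return reordered or timed_out


default = LossDetectionConfig()
tolerant = LossDetectionConfig(packet_threshold=6, time_threshold=2.0)

# Packet 5 was sent 10 ms ago; packets up to 8 are acknowledged; RTT is 100 ms.
args = dict(packet_number=5, sent_time_ms=100, largest_acked=8, now_ms=110,
            smoothed_rtt_ms=100, latest_rtt_ms=100)
print(is_lost(config=default, **args))   # declared lost by the packet threshold
print(is_lost(config=tolerant, **args))  # still waiting under the looser config
```

The looser configuration waits longer before retransmitting, which trades slower recovery from real losses for fewer spurious retransmissions on a flaky link.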

Congestion control is a fundamental aspect of networking protocols to ensure that data is transmitted efficiently without causing network congestion. Congestion control algorithms determine how a sender adjusts its rate of data transmission based on network conditions. HTTP/3 provides the ability to change or tune its congestion control mechanisms.

For example, imagine a network that encounters occasional congestion during peak usage hours. HTTP/3 allows fine-tuning of congestion control algorithms, enabling the protocol to intelligently adjust the rate of data transmission based on network conditions. This adaptability ensures optimal performance even in varying congestion scenarios.
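To make the idea of tunable congestion control concrete, here is a generic additive-increase/multiplicative-decrease (AIMD) sketch. It is a textbook simplification rather than the algorithm any particular QUIC stack or Pinterest uses; the function names and parameter values are assumptions chosen for illustration.

```python
def next_cwnd(cwnd, loss, additive_increase=1.0,
              multiplicative_decrease=0.5, min_cwnd=2.0):
    """Return the next congestion window (in packets) after one round trip."""
    if loss:
        # Back off sharply when the network signals congestion.
        return max(cwnd * multiplicative_decrease, min_cwnd)
    # Otherwise probe gently for more bandwidth.
    return cwnd + additive_increase


def simulate(loss_events, initial_cwnd=10.0, **params):
    """Apply next_cwnd over a sequence of per-round-trip loss flags."""
    cwnd = initial_cwnd
    history = []
    for loss in loss_events:
        cwnd = next_cwnd(cwnd, loss, **params)
        history.append(cwnd)
    return history


events = [False, False, True, False]
print(simulate(events))                                # default back-off
print(simulate(events, multiplicative_decrease=0.75))  # gentler back-off
```

Changing `multiplicative_decrease` or `additive_increase` changes how aggressively the sender reacts to congestion, which is the kind of knob the paragraph above refers to.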


4. Reduced Connection Time (0-RTT, While HTTP/2 Still Requires TCP 3-Way Handshakes)

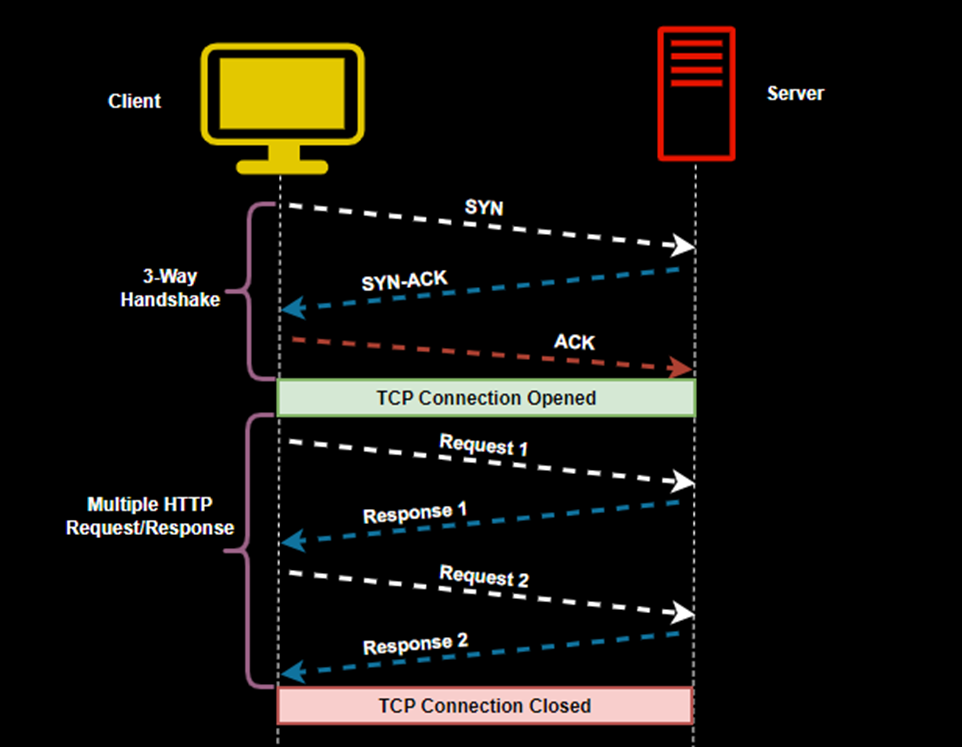

The three-way handshake is a process used by TCP to establish a reliable and connection-oriented communication channel between a client and a server. Since HTTP typically runs over TCP, the three-way handshake is relevant in the context of establishing a TCP connection for HTTP communication. It involves the following steps:

1. SYN (Synchronize): The client initiates the connection by sending a TCP segment with the SYN flag set to the server. This indicates the client's intention to establish a connection and also includes an initial sequence number.

2. SYN-ACK (Synchronize-Acknowledge): Upon receiving the SYN segment, the server responds with a TCP segment that has both the SYN and ACK flags set. The acknowledgment number is set to one more than the received client sequence number, and the server also chooses its initial sequence number.

3. ACK (Acknowledge): Finally, the client sends a TCP segment with the ACK flag set. The acknowledgment number is set to one more than the received server sequence number. This completes the three-way handshake, and both parties are now aware that the connection is established.

RTT (Round Trip Time) is the time a message takes to reach the server plus the time the response takes to come back. The three-way handshake therefore costs one full round trip before the client can send its first request. In the context of networking and web performance, RTT is a critical metric because it affects the responsiveness of applications. Lower RTT values indicate quicker communication and less delay, which is desirable for real-time applications, online gaming, and other scenarios where low latency is crucial.
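The sequence and acknowledgment numbers exchanged in the three handshake steps can be traced with a few lines of code. This is only a sketch of the bookkeeping: the function name and the dictionary representation of a segment are hypothetical, and the initial sequence numbers are arbitrary example values.

```python
SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


def three_way_handshake(client_isn, server_isn):
    """Return the SYN, SYN-ACK, and ACK segments as simple dictionaries."""
    syn = {"flags": {"SYN"}, "seq": client_isn}
    syn_ack = {
        "flags": {"SYN", "ACK"},
        "seq": server_isn,
        "ack": (syn["seq"] + 1) % SEQ_SPACE,  # client sequence number + 1
    }
    ack = {
        "flags": {"ACK"},
        "seq": (syn["seq"] + 1) % SEQ_SPACE,
        "ack": (syn_ack["seq"] + 1) % SEQ_SPACE,  # server sequence number + 1
    }
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(sorted(segment["flags"]), segment.get("seq"), segment.get("ack"))
```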

0-RTT is a notable feature of the QUIC protocol used by HTTP/3.

With 0-RTT, a client can send data to the server in the very first message of the connection, without waiting for a round-trip time (RTT).

This is achieved by using previously established session information or by resuming a previous session. It allows the client to include data in the initial request, improving the efficiency of the connection setup.

The use of 0-RTT in HTTP/3 significantly reduces the time needed for the initial connection setup. The client can start sending data right away, leading to faster loading times for web pages and resources.

This is particularly advantageous for scenarios where minimizing latency is crucial, such as loading web pages quickly or delivering real-time content, as in the case of Pinterest.
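A back-of-the-envelope calculation shows what the saved round trips are worth. The sketch assumes HTTP/2 over TCP with TLS 1.3 (one round trip for TCP plus one for TLS), a new QUIC connection (one combined round trip), and a resumed QUIC connection using 0-RTT. It ignores server processing time and other optimizations; the names and the 100 ms RTT are illustrative assumptions.

```python
# Round trips spent on connection setup before the first request can be sent.
SETUP_ROUND_TRIPS = {
    "http2_tcp_tls13": 2,  # TCP three-way handshake + TLS 1.3 handshake
    "http3_new": 1,        # QUIC combines transport and TLS 1.3 handshakes
    "http3_0rtt": 0,       # resumed session: request rides in the first flight
}


def time_to_first_response_ms(mode, rtt_ms):
    """Setup round trips plus one round trip for the request and response."""
    return (SETUP_ROUND_TRIPS[mode] + 1) * rtt_ms


for mode in SETUP_ROUND_TRIPS:
    print(mode, time_to_first_response_ms(mode, rtt_ms=100))
```

On a 100 ms mobile link, this simple model puts the first response at 300 ms for HTTP/2, 200 ms for a new HTTP/3 connection, and 100 ms for a resumed one.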

5. More Efficient for Large Payload Use Cases, Such as Image Downloading, Video Streaming, Etc.

HTTP/3 is more efficient for use-cases involving large payloads, such as downloading images or streaming videos. The protocol's design is well-suited to handle scenarios where substantial amounts of data need to be transmitted, contributing to improved performance in media-rich content environments.

HTTP/3 has refined flow control mechanisms that allow for better handling of large payloads. Flow control is essential for preventing overwhelming a receiver with too much data at once.
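Flow control of this kind is usually credit-based: the receiver advertises how many bytes it is willing to accept, and the sender stops once that limit is reached. The sketch below models a single stream with an absolute byte limit, in the spirit of QUIC's MAX_STREAM_DATA frames; the class and method names are hypothetical and the byte counts are arbitrary.

```python
class FlowControlledStream:
    """Sender-side view of one stream under receiver-advertised limits."""

    def __init__(self, initial_limit):
        self.limit = initial_limit  # highest byte offset the receiver allows
        self.sent = 0               # bytes sent so far

    def send(self, nbytes):
        """Send as much as the current limit allows; return bytes sent."""
        allowed = min(nbytes, self.limit - self.sent)
        self.sent += allowed
        return allowed

    def raise_limit(self, new_limit):
        """Receiver has consumed data and advertises a higher limit."""
        self.limit = max(self.limit, new_limit)  # limits never shrink


stream = FlowControlledStream(initial_limit=1000)
print(stream.send(1500))  # only part of the image fits in the window
print(stream.send(100))   # blocked until the receiver grants more credit
stream.raise_limit(1600)
print(stream.send(1000))  # the remaining credit is used
```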

HTTP/3's efficiency for large payload use-cases is rooted in its efficient multiplexing, reduced latency, improved flow control, and adaptive connection handling. These features collectively contribute to a better user experience when dealing with media-rich content on the web.

In short, multiplexing, 0-RTT, and easy connection migration, along with improved flow control, make HTTP/3 efficient for large payloads.

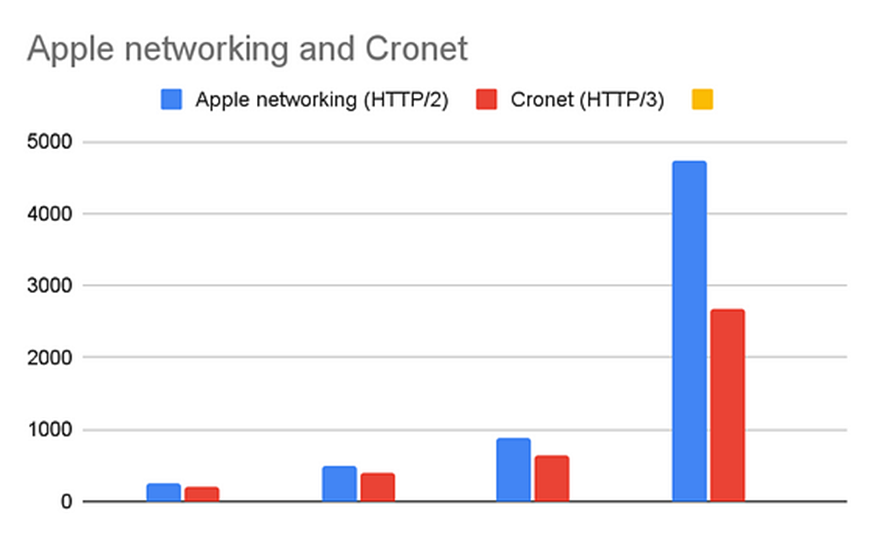

Analysis at Pinterest indicates that HTTP/3 has improved round-trip latency and reliability. Improved latency/throughput is critical to large media features (such as video and images). A faster and more reliable network is also able to move user engagement metrics.

The chart here shows the network request round-trip latency in milliseconds, before and after the move to HTTP/3, for data collected over a week across varying payload sizes.

In conclusion, Pinterest's adoption of HTTP/3 represents a strategic move to leverage the protocol's advanced features and enhance the platform's overall networking performance. The benefits, ranging from reduced latency to improved handling of large payloads, contribute to a more seamless and efficient user experience on the Pinterest platform. As the industry continues to embrace HTTP/3, Pinterest stands at the forefront of innovation, aligning its network infrastructure with the latest advancements in web protocols.

Published at DZone with permission of Roopa Kushtagi. See the original article here.

Opinions expressed by DZone contributors are their own.
