Path-Based Routing in Render With Kong API Gateway

When deploying microservices to Render, use path-based routing as a workaround to leverage an API gateway.

A key benefit of building a microservice-backed application is separating its concerns across individual microservices, each of which can scale independently and encapsulate different functionality. The frontend (typically a single-page application running in your user’s browser) will need access to the microservices that make up your web application. Each service could be directly accessible to the public web, but that adds security concerns.

An API gateway, however, allows for a centralized layer to handle concerns like authentication, traffic monitoring, or request and response transformations. API gateways are also a great way to leverage rate limiting and caching to improve the resilience and performance of your application.

Render is a one-stop-shop for deploying microservice-based web applications directly from an existing GitHub or GitLab repo. While Render provides many resources for standing up microservices and databases, one element that is not configurable out of the box is an API gateway—something along the lines of the AWS API Gateway or the Azure Application Gateway. Although access to an API gateway is not a one-click add-on with Render, it’s still possible to get one up and running.

In this post, we’re going to walk through how to set up Render for path-based routing so that we can use Kong Gateway in front of our microservices. Let’s start with a brief overview of our approach.

Overview of Our Mini-Project

We’ll deploy two simple microservice backends using Render. One will be a Python Flask service, and the other will be a Node.js service built on Express.

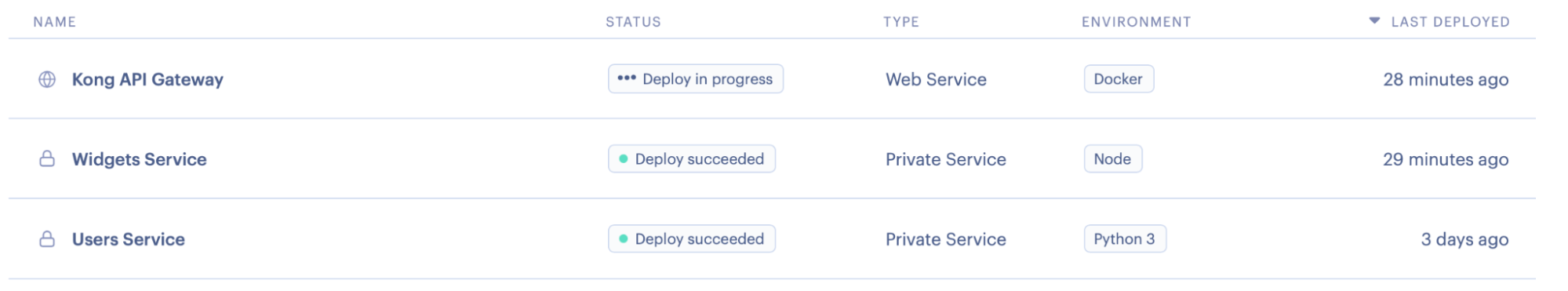

The anticipated end result is shown in the image above. We’ll have deployed two private services and one web service, Kong, that will accept and route requests to those private services. From the client’s perspective, they appear to be interacting with a single application. In reality, they are requesting resources across an ecosystem of microservices.

Microservices Deployed as Private Services

There are two main types of service deployments in Render: web services and private services. Web services are directly accessible to the public web. Private services, on the other hand, are only available within the private cloud inside your Render account’s ecosystem. This is a good thing because it allows you to better control the security and access within your microservice ecosystem.

Both of our microservices will be deployed as private services.

Kong Gateway Deployed as a Web Service

Kong is a highly performant, open-source API gateway used in many of the biggest web applications in the world today. While there are many choices for API gateways, Kong stands out for being cloud- and application-agnostic, highly configurable, and, perhaps most importantly, fast.

We’ll deploy Kong Gateway as a web service, accessible via the public web. Kong (and Kong alone) will have access to our two private microservices, and we’ll configure it to do appropriate request routing.

Deploying Microservices With Render

Let’s begin by setting up and deploying our two microservices.

“Users” Microservice With Python and Flask

Flask is a service framework for Python with a low barrier to entry. A single Python file is all we need to get a minimal API up and running with Flask. The code for this Flask service is available on GitHub. The following snippet creates a working service with a /users endpoint that returns a simple JSON response and status code:

from flask import Flask, jsonify

app = Flask(__name__)

# Return a fixed JSON payload with an explicit 200 status code.
@app.route("/users")
def root():
    return jsonify({'userId': 42}), 200

if __name__ == "__main__":
    # Bind to all interfaces so Render can reach the service.
    app.run(host='0.0.0.0')

An important detail: for Render to automatically expose the correct host and port for your service, you must bind your application to 0.0.0.0 and not localhost or 127.0.0.1. The difference between 0.0.0.0 and 127.0.0.1 is the scope from which incoming requests are accepted. 127.0.0.1 is the conventional loopback address, so only requests from the same machine are accepted. The 0.0.0.0 address accepts requests on any network interface, which is what Render needs in order to reach the service.
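As a rough illustration of the binding rule, the sketch below adds a small startup guard that rejects loopback-only addresses before the server starts. The helper names `is_loopback_only` and `check_bind_host` are hypothetical and are not part of Flask or Render; only the standard-library `ipaddress` module is used.

```python
import ipaddress

# Hostnames that conventionally resolve to the loopback interface.
LOOPBACK_NAMES = {"localhost"}


def is_loopback_only(host: str) -> bool:
    """Return True if binding to `host` would accept only same-machine traffic."""
    if host.lower() in LOOPBACK_NAMES:
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (some other hostname); this sketch does not resolve it.
        return False


def check_bind_host(host: str) -> str:
    """Hypothetical startup guard: refuse loopback-only bind addresses."""
    if is_loopback_only(host):
        raise ValueError(f"{host} is loopback-only; bind to 0.0.0.0 instead")
    return host
```

With a guard like this, the Flask service could start with `app.run(host=check_bind_host("0.0.0.0"))`, and a misconfigured `127.0.0.1` would fail at startup rather than deploy a service that nothing else can reach.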

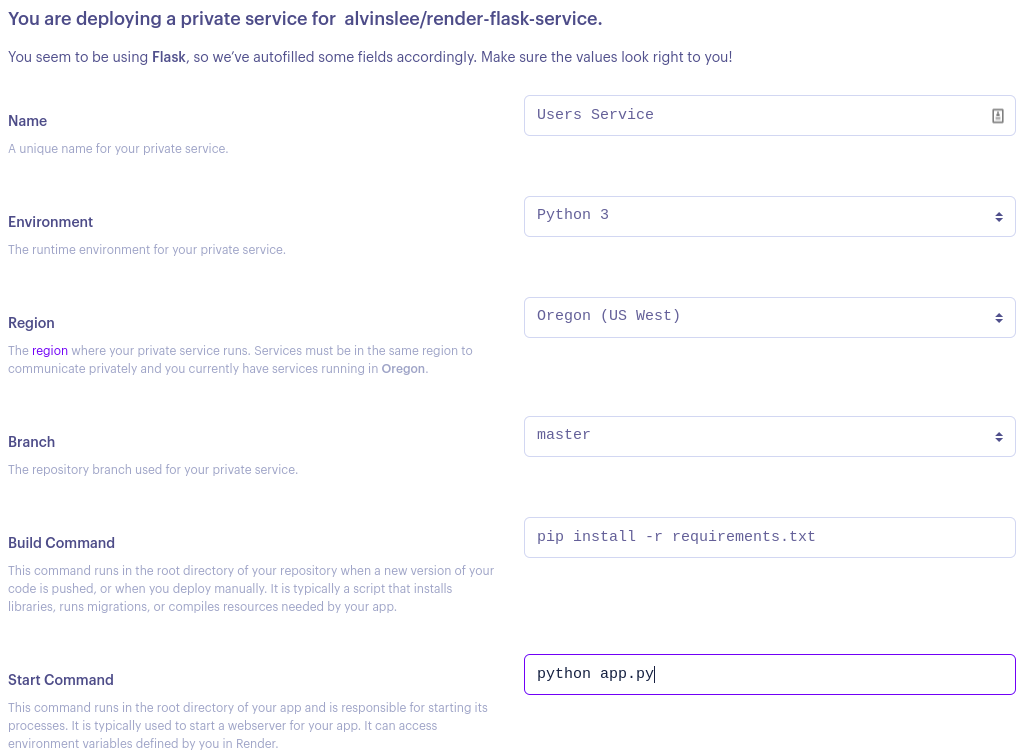

To deploy this as a private service in Render, first click the New button in your Render dashboard and select your git repo with the Flask app. Set the service Name and the Start Command. All other configuration options can be left as their default values. Alternatively, you can add a render.yaml file to your repository that configures how this service will be deployed. In our demo, though, we’ll walk through the user interface.

Render has free tiers all the way to enterprise-level hosting offerings. Choose the one that fits your needs. Select the branch that you wish to deploy and set up the build and start commands. Typically for a Python application, building the app just requires having all the proper dependencies in place. We can do that by running pip install -r requirements.txt. The command to boot up our service is python app.py.

Once you are satisfied with your selections, click Create Private Service. Within a few moments, your service will be up and running!

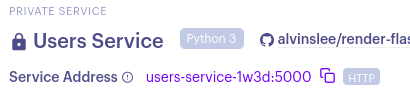

Notice the internal service address of your private service:

In this case, our service address is http://users-service-1w3d:5000. Remember that this is a private service, inaccessible outside of our Render account.

“Widgets” Microservice With Node.js and Express

Deploying the Node.js service is nearly the same as with the Python service, though the code required to stand up a Node.js project is more involved. We’ve built a simple “Widgets Service” with an endpoint at /widgets. The code for this Node.js service is available on GitHub.

As before, add a new private service from the Render dashboard and work through the options in the UI. Pay close attention to the build and start commands so that the proper scripts from the package.json file are used to build and start the application. For this service, the build command needs to install all the dependencies and then build the distribution bundle. This is done using two commands in sequence, like so: npm install && npm run build.

The double ampersand means that the first command must finish successfully before the second command begins. This is also an example of how to chain commands in Render forms to achieve multiple actions in a single step. After the build stage completes, we can start the service using the npm run start:prd script. Again, remember to bind your application to 0.0.0.0 in order for Render to automatically know how to connect to it internally. The port and IP that this service uses are defined in the src/constants.ts file and are currently set to 0.0.0.0:5001.

Setting up Kong Gateway

We’ll deploy Kong as a web service and configure it to route to our upstream private services based on the request path. Kong is often set up in tandem with a database such as PostgreSQL, which holds configuration data for the gateway. There is a simpler setup, though, which Kong calls the “DB-less declarative configuration.” In this approach, Kong does not need a database, and the configuration is loaded at the boot of the service and stored in its memory.

Below is a simple configuration file (kong.yaml) that configures Kong to route to our private services. All of our Kong-related files are available on GitHub.

_format_version: "2.1"
_transform: true

services:
  - name: user-service
    url: http://users-service-1w3d:5000
    routes:
      - name: user-routes
        paths:
          - /user-service
  - name: widget-service
    url: http://widgets-service:5001
    routes:
      - name: widget-routes
        paths:
          - /widget-service

The first two lines tell Kong which declarative format version this file uses and how to process the configuration.

The services block details all the destinations where we want Kong to route incoming traffic, and that routing is based on the paths set up in the paths block for each service. You can see here the services list contains the URLs for the two private services deployed to Render. For example, our web service (Kong) will listen for a request to the /user-service path and then forward that request to http://users-service-1w3d:5000.
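To make the routing rule concrete, here is a simplified Python model of the prefix matching described above. It is a sketch, not Kong's actual matcher, which also considers hosts, methods, regex paths, and priorities. It assumes each route's `strip_path` setting is left at its default of true, so the matched prefix is removed before the request is forwarded. The `ROUTES` table and `resolve_upstream` function are hypothetical names used only for illustration.

```python
from typing import Optional

# Path prefix -> upstream base URL, mirroring the two routes in kong.yaml.
ROUTES = {
    "/user-service": "http://users-service-1w3d:5000",
    "/widget-service": "http://widgets-service:5001",
}


def resolve_upstream(request_path: str, strip_path: bool = True) -> Optional[str]:
    """Return the upstream URL for a request path, or None if no route matches."""
    # Try longer prefixes first so the most specific route wins.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if request_path == prefix or request_path.startswith(prefix + "/"):
            # With strip_path, the matched prefix is removed before forwarding.
            remainder = request_path[len(prefix):] if strip_path else request_path
            return ROUTES[prefix] + (remainder or "/")
    return None
```

Under these assumptions, `resolve_upstream("/user-service/users")` yields `http://users-service-1w3d:5000/users`, which is why the Flask service only needs to define `/users` and never sees the `/user-service` prefix.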

Deploying Kong in a Docker Container

Deploying Kong on Render will be a little different from deploying our two microservices. We need to deploy it as a web service and use the custom Docker application option during configuration.

The following Dockerfile will provide a DB-less instance of Kong which will read in the static configuration above from a file named kong.yaml. This sets up port 8000 as the port where Kong will listen for incoming requests. If you use EXPOSE 8000, Render will automatically pick up that port from the Docker image to use with this service.

FROM kong:2.7.1-alpine

# Copy the declarative configuration into the image.
COPY kong.yaml /config/kong.yaml

USER root

# Listen on all interfaces, run without a database, and load the config file.
ENV KONG_PROXY_LISTEN=0.0.0.0:8000
ENV KONG_DATABASE=off
ENV KONG_DECLARATIVE_CONFIG=/config/kong.yaml
ENV PORT=8000

EXPOSE 8000

# CMD runs when the container starts; RUN would execute only at build time.
CMD ["kong", "docker-start"]

After connecting your repository with the Kong Dockerfile and configuration files, make sure you select a tier with at least 1 GB of RAM and 1 CPU. Kong performs erratically with limited resources on a shared CPU. The remaining default configurations can be left as is.

After deployment, your Render dashboard should contain three services: the two private microservices and the Kong web service.

Once Kong has successfully deployed, you can test this setup with curl or Postman. Issue the following request to verify routing to the Widgets service:

curl https://kong-gateway-lh8i.onrender.com/widget-service/widgets/10 \
  -i -H "kong-debug: 1"

The additional kong-debug header tells Kong to add some debugging information to the response headers. We can use that information to validate a successful setup. You should see something like the following in the response:

HTTP/2 200
content-type: application/json; charset=utf-8
kong-route-id: 8b2d449d-9589-5362-a2a1-3be5683a8f97
kong-route-name: widget-routes
kong-service-id: 6c8de697-474a-54cf-a59e-4ad086047749
kong-service-name: widget-service
via: kong/2.7.1
x-kong-proxy-latency: 61
x-kong-upstream-latency: 11
x-powered-by: Express

{"widget":"10"}

Note the Kong-prefixed headers detailing the route and service that were used to route the request to the proper upstream service.
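If you would rather script this check than read the headers by eye, a minimal sketch might look like the following. The function names `kong_debug_headers` and `routed_via` are hypothetical, and the `sample` mapping simply copies a few headers from the sample response above instead of making a live request.

```python
from typing import Dict, Mapping


def kong_debug_headers(headers: Mapping[str, str]) -> Dict[str, str]:
    """Return only the kong-* debug headers, with lowercased names."""
    return {
        name.lower(): value
        for name, value in headers.items()
        if name.lower().startswith("kong-")
    }


def routed_via(headers: Mapping[str, str], route: str, service: str) -> bool:
    """Check that the response was matched by the expected route and service."""
    debug = kong_debug_headers(headers)
    return (
        debug.get("kong-route-name") == route
        and debug.get("kong-service-name") == service
    )


# Headers copied from the sample response above.
sample = {
    "content-type": "application/json; charset=utf-8",
    "kong-route-name": "widget-routes",
    "kong-service-name": "widget-service",
    "via": "kong/2.7.1",
}
print(routed_via(sample, "widget-routes", "widget-service"))
```

Against a live deployment, the same functions could be given the `headers` attribute of a response returned by `urllib.request.urlopen`, provided the request includes the `kong-debug: 1` header.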

Similarly, you can test the User services routing with:

curl https://kong-gateway-lh8i.onrender.com/user-service/users \
  -i -H "kong-debug: 1"

Conclusion

In this article, we’ve explored the cloud hosting solutions provided by Render. Specifically, we walked through how to deploy Kong Gateway as a web service that handles path-based routing to microservices deployed to Render as private services. This deployment pattern can set you up for scalable and flexible production deployments of microservice-backed applications.

Published at DZone with permission of Alvin Lee. See the original article here.
