Open Source Software (OSS) Quality Assurance — A Milvus Case Study

We aim to provide the best possible user experience with Milvus by conducting various types of QA tests and leveraging multiple tools.


Quality assurance (QA) is a systematic process of determining whether a product or service meets specific requirements. A QA system is an indispensable part of the R&D process because, as its name suggests, it ensures the quality of the product.

This post introduces the QA framework adopted in developing the Milvus vector database, providing guidelines that help contributing developers and users participate in the process. It also covers the major test modules in Milvus, as well as methods and tools that can be leveraged to make QA testing more efficient.

A General Introduction to the Milvus QA System

Understanding the system architecture is critical to QA testing: the more familiar a QA engineer is with the system, the more likely they are to develop a reasonable and efficient test plan.

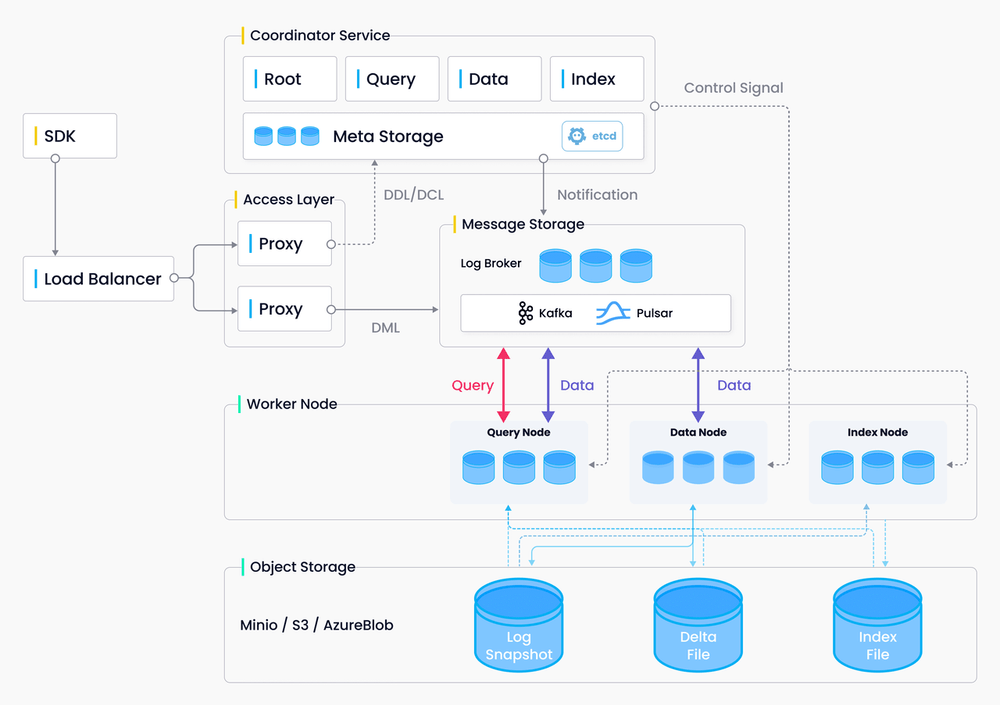

Milvus 2.0 adopts a cloud-native, distributed, and layered architecture, with the SDK as the main entry point for data flowing into Milvus. Milvus users rely on the SDK frequently, so functional testing on the SDK side is essential. Function tests on the SDK can also help detect internal issues within the Milvus system. Apart from function tests, other types of tests are also conducted on the vector database, including unit tests, deployment tests, reliability tests, stability tests, and performance tests.

A cloud-native, distributed architecture brings both convenience and challenges to QA testing. Unlike systems that are deployed and run locally, a Milvus instance deployed and run on a Kubernetes cluster ensures that software testing is carried out under the same conditions as software development. The downside, however, is that the complexity of a distributed architecture brings more uncertainty, which can make QA testing of the system even more complex and strenuous. For instance, Milvus 2.0 uses microservices for its different components, which increases the number of services and nodes and the likelihood of a system error. Consequently, a more sophisticated and comprehensive QA plan is needed for better testing efficiency.

QA Testing and Issue Management

QA in Milvus involves conducting tests and managing issues that emerge during software development.

QA Testing

Milvus conducts different types of QA testing according to Milvus features and user needs, in order of priority, as shown in the image below.

In descending order of priority, QA testing in Milvus covers the following aspects:

- Function: Verify if the functions and features work as originally designed.

- Deployment: Check whether a user can deploy, reinstall, and upgrade both the Milvus standalone version and the Milvus cluster with different methods (Docker Compose, Helm, APT, YUM, etc.).

- Performance: Test the performance of data insertion, indexing, vector search, and query in Milvus.

- Stability: Check if Milvus can run stably for 5-10 days under a normal workload level.

- Reliability: Test whether Milvus can still partially function when a system error occurs.

- Configuration: Verify whether Milvus works as expected under a given configuration.

- Compatibility: Test if Milvus is compatible with different types of hardware or software.

Issue Management

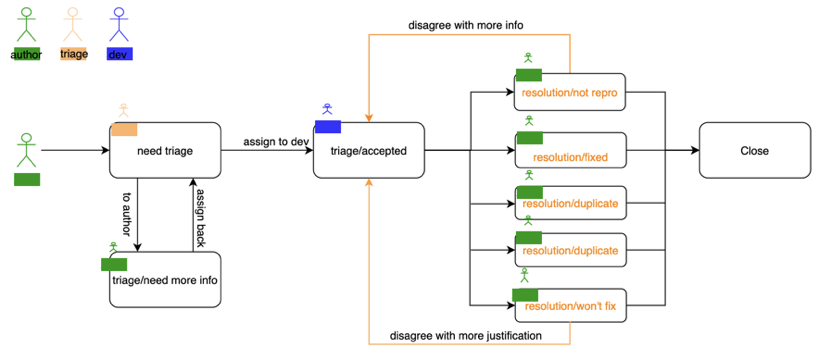

Many issues may emerge during software development. Issues are filed using templates, and their authors can be QA engineers themselves or Milvus users from the open-source community. The QA team is responsible for following up on these issues.

When an issue is created, it goes through triage first. During triage, new issues are examined to ensure that they provide sufficient details. If the issue is confirmed, it is accepted by the developers, who then try to fix it. Once development is done, the issue author needs to verify whether it is fixed. If so, the issue is closed.
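The issue lifecycle above can be read as a small state machine. The following is a minimal sketch in Python, not Milvus's actual tooling; the state names are illustrative, and reopening an issue after a failed verification is an assumption, since the text only describes the path to closing.

```python
from enum import Enum, auto


class IssueState(Enum):
    NEW = auto()       # just created, waiting for triage
    TRIAGED = auto()   # triage confirmed the issue has sufficient details
    ACCEPTED = auto()  # confirmed and accepted by developers
    FIXED = auto()     # developers finished the fix
    CLOSED = auto()    # the issue author verified the fix


# Allowed transitions. FIXED -> ACCEPTED (reopening after a failed
# verification) is an assumption; the text only describes the happy path.
TRANSITIONS = {
    IssueState.NEW: {IssueState.TRIAGED},
    IssueState.TRIAGED: {IssueState.ACCEPTED},
    IssueState.ACCEPTED: {IssueState.FIXED},
    IssueState.FIXED: {IssueState.CLOSED, IssueState.ACCEPTED},
    IssueState.CLOSED: set(),
}


class Issue:
    def __init__(self, title: str, author: str) -> None:
        self.title = title
        self.author = author
        self.state = IssueState.NEW
        self.history = [IssueState.NEW]

    def move_to(self, new_state: IssueState) -> None:
        """Advance the issue, rejecting transitions the workflow does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.name} to {new_state.name}")
        self.state = new_state
        self.history.append(new_state)

    def verify_fix(self, fixed: bool) -> None:
        """The issue author's verification step: close on success, reopen otherwise."""
        self.move_to(IssueState.CLOSED if fixed else IssueState.ACCEPTED)
```

Rejecting undefined transitions makes it impossible, for example, to close an issue that never went through triage.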

When Is QA Needed?

One common misconception is that QA and development are independent of each other. In truth, ensuring the quality of the system requires effort from both developers and QA engineers. Therefore, QA needs to be involved throughout the whole lifecycle.

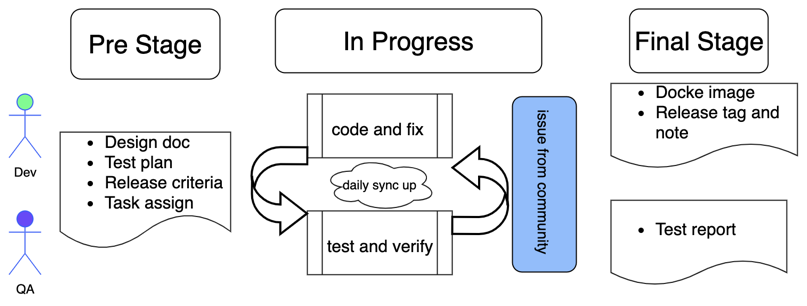

The figure above shows that a complete software R&D lifecycle includes three stages.

During the initial stage, the developers publish design documentation while QA engineers develop test plans, define release criteria, and assign QA tasks. Developers and QA engineers need to be familiar with both the design doc and the test plan so that the two teams share a mutual understanding of the objective of the release (in terms of features, performance, stability, bug convergence, etc.).

During R&D, development and QA testing interact frequently: features and functions are developed and verified, and bugs and issues reported by the open-source community are fixed.

If the release criteria are met during the final stage, a new Docker image of the new Milvus version is released. The official release also requires a release note focusing on new features and fixed bugs, as well as a release tag. The QA team then publishes a testing report on the release.

Test Modules in Milvus

There are several test modules in Milvus, and this section will explain each module in detail.

Unit Test

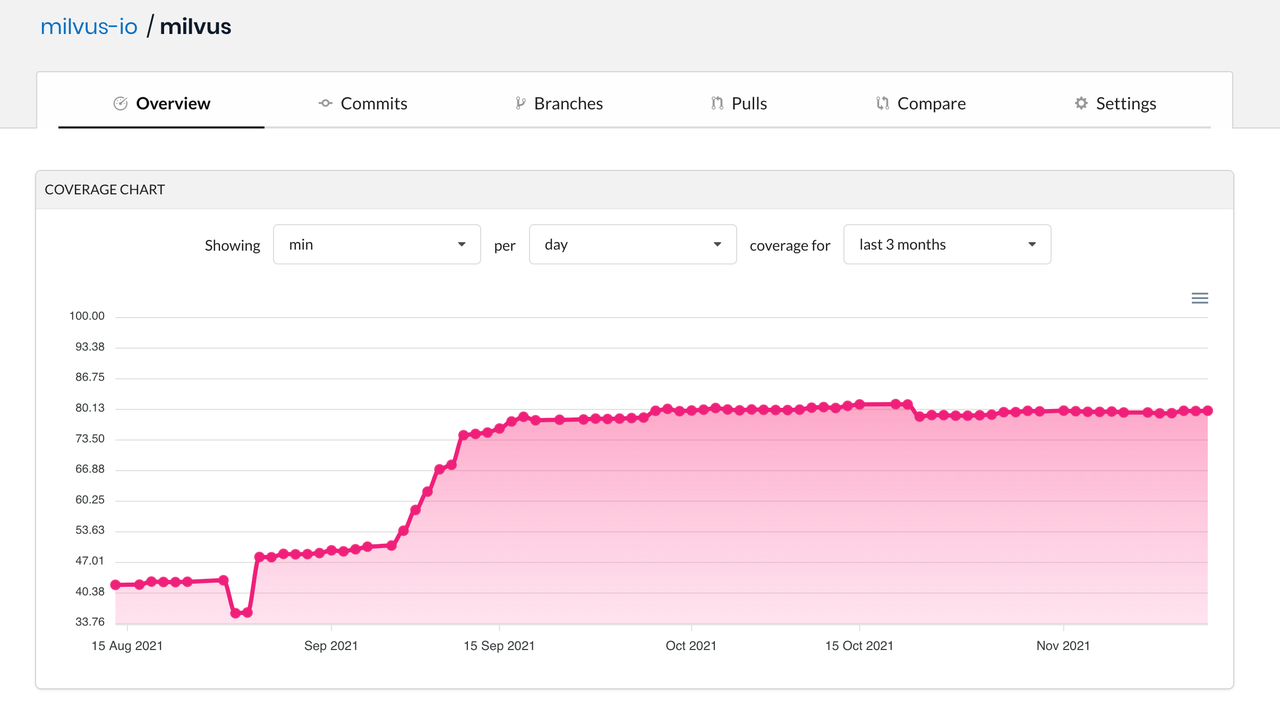

Unit tests can help identify software bugs at an early stage and provide verification criteria for code restructuring. According to the Milvus pull request (PR) acceptance criteria, unit test code coverage should reach 80%.
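As a rough illustration of the 80% criterion, the sketch below computes aggregated line coverage from per-file counts and compares it with the threshold. It assumes line coverage and reads the criterion as "at least 80%"; in a real CI pipeline the counts would come from a coverage tool rather than being passed in by hand, and the file names are hypothetical.

```python
from typing import Dict, Tuple


def coverage_ratio(covered_lines: int, total_lines: int) -> float:
    """Fraction of executable lines exercised by unit tests."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return covered_lines / total_lines


def meets_pr_criterion(per_file: Dict[str, Tuple[int, int]], threshold: float = 0.80) -> bool:
    """Aggregate (covered, total) line counts over all files and compare with the threshold.

    Treating 80% as a lower bound on aggregated line coverage is an assumption.
    """
    covered = sum(c for c, _ in per_file.values())
    total = sum(t for _, t in per_file.values())
    return coverage_ratio(covered, total) >= threshold
```

Aggregating line counts weights large files more heavily than averaging per-file percentages: for two hypothetical files with 300/400 and 500/600 covered lines, the aggregate is exactly 80%, while the mean of the per-file ratios is about 79%.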

Function Test

Function tests in Milvus are mainly organized around PyMilvus and SDKs. Their main purpose is to verify whether the interfaces work as designed. Function tests have two facets:

- Test if SDKs can return expected results when correct parameters are passed.

- Test if SDKs can handle errors and return reasonable error messages when incorrect parameters are passed.
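To make the two facets concrete, here is a minimal sketch of one positive and one negative test in plain Python. `FakeClient`, `ParamError`, and the naming and `dim` rules are hypothetical stand-ins, not the PyMilvus API or Milvus's real validation rules; under pytest, both `test_` functions would be collected automatically.

```python
import re


class ParamError(Exception):
    """Raised by the fake client when a parameter is invalid (hypothetical)."""


# Illustrative naming rule for the fake client only.
_NAME_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


class FakeClient:
    """In-memory stand-in for an SDK client; this is NOT the PyMilvus API."""

    def __init__(self) -> None:
        self.collections = {}

    def create_collection(self, name, dim):
        if not isinstance(name, str) or not _NAME_RE.fullmatch(name):
            raise ParamError(f"invalid collection name: {name!r}")
        if isinstance(dim, bool) or not isinstance(dim, int) or dim <= 0:
            raise ParamError(f"invalid dim: {dim!r}")
        self.collections[name] = {"dim": dim}
        return {"name": name, "dim": dim}


def test_valid_params_return_expected_result():
    # Facet 1: correct parameters produce the expected result.
    client = FakeClient()
    assert client.create_collection("book_vectors", 128) == {"name": "book_vectors", "dim": 128}


def test_invalid_params_return_reasonable_error():
    # Facet 2: incorrect parameters produce a clear error, not a crash or a silent success.
    client = FakeClient()
    for name, dim in [("1starts_with_digit", 128), ("book_vectors", -1), ("book_vectors", "128")]:
        try:
            client.create_collection(name, dim)
        except ParamError as exc:
            assert "invalid" in str(exc)
        else:
            raise AssertionError(f"expected ParamError for name={name!r}, dim={dim!r}")
    assert client.collections == {}
```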

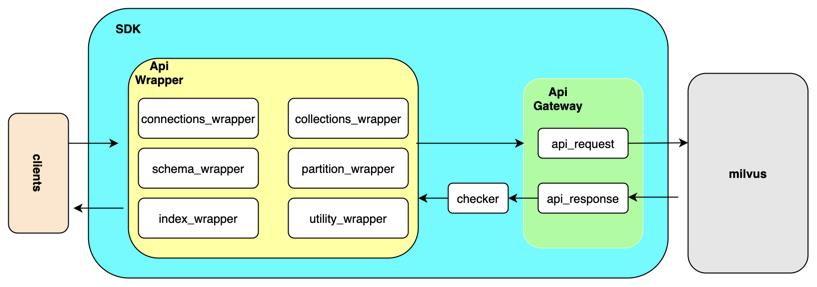

The figure below depicts the current framework for function tests based on the mainstream pytest framework. This framework adds a wrapper to PyMilvus and empowers testing with an automated testing interface.

Because a shared testing method is needed and some functions must be reused, this testing framework is adopted rather than calling the PyMilvus interface directly. In addition, a "check" module is included in the framework to make it easier to verify actual values against expected ones.

As many as 2,700 function test cases are incorporated into the `tests/python_client/testcases` directory, covering almost all PyMilvus interfaces. These function tests strictly safeguard the quality of each PR.

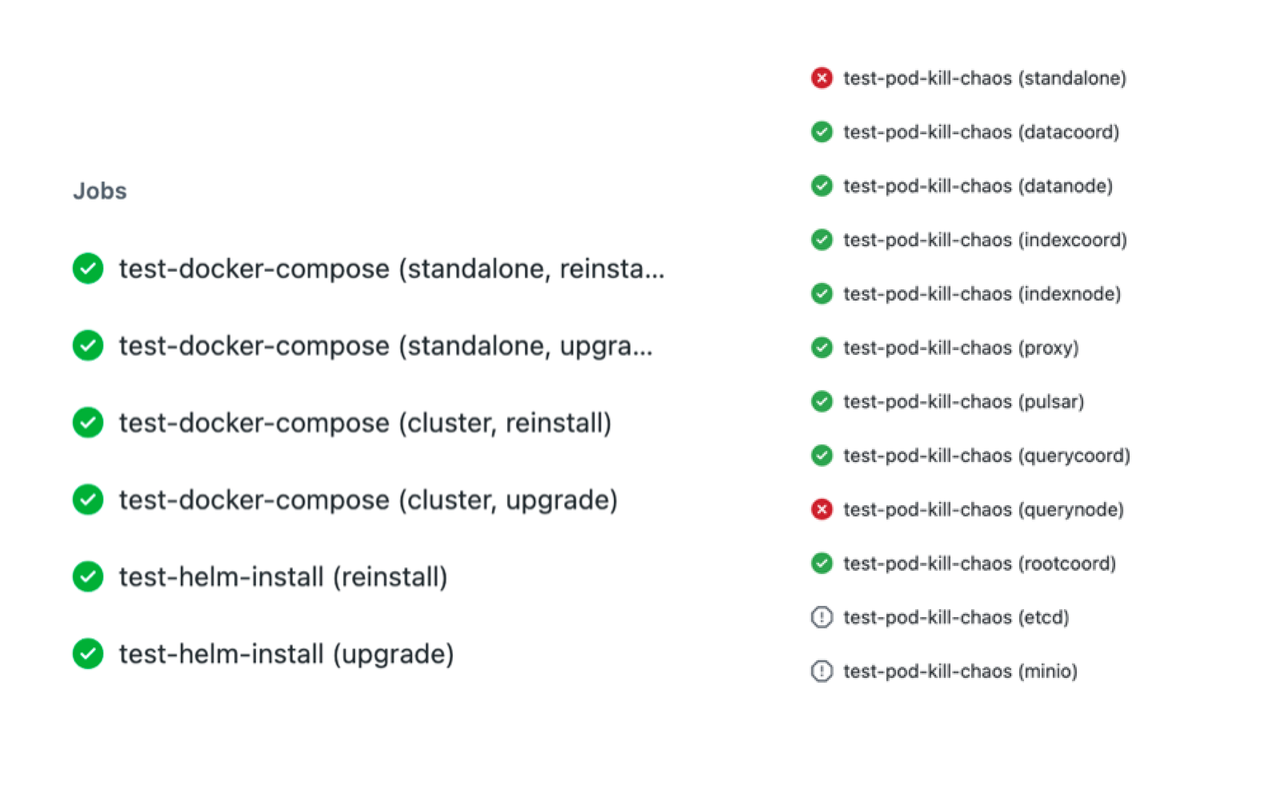

Deployment Test

Milvus comes in two modes: standalone and cluster. There are two main ways to deploy Milvus: using Docker Compose or Helm. After deploying Milvus, users can also restart or upgrade the Milvus service. Accordingly, there are two main categories of deployment tests: restart tests and upgrade tests.

Restart test refers to the process of testing data persistence, i.e., whether data are still available after a restart. Upgrade test refers to the process of testing data compatibility to prevent situations where incompatible formats of data are inserted into Milvus. The two types of deployment tests share the same workflow, as illustrated in the image below.

In a restart test, the two deployments use the same Docker image. In an upgrade test, however, the first deployment uses a Docker image of a previous version, while the second deployment uses a Docker image of a later version. The test results and data are saved in the Volumes file or persistent volume claim (PVC).

Multiple collections are created when running the first test, and different operations are performed on each collection. When running the second test, the main focus is on verifying whether the created collections are still available for CRUD operations and whether new collections can still be created.
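The two-phase flow can be sketched with a toy store whose data live in a directory that outlives the process, playing the role of the Volumes file or PVC. `FileBackedStore`, `first_deployment`, and `second_deployment` are hypothetical; a real test would deploy Milvus twice with Docker Compose or Helm and talk to it through the SDK, whereas here a fresh `FileBackedStore` instance stands in for the restarted or upgraded service.

```python
import json
import tempfile
from pathlib import Path


class FileBackedStore:
    """Toy stand-in for a deployment whose data are kept on a volume (hypothetical)."""

    def __init__(self, volume_dir: Path) -> None:
        self.path = volume_dir / "collections.json"
        self.collections = json.loads(self.path.read_text()) if self.path.exists() else {}

    def _persist(self) -> None:
        self.path.write_text(json.dumps(self.collections))

    def create(self, name: str) -> None:
        self.collections.setdefault(name, [])
        self._persist()

    def insert(self, name: str, rows: list) -> None:
        self.collections[name].extend(rows)
        self._persist()

    def count(self, name: str) -> int:
        return len(self.collections[name])


def first_deployment(volume: Path) -> dict:
    """Phase 1: create several collections and run different operations on each."""
    store = FileBackedStore(volume)
    expected = {}
    for i in range(3):
        name = f"restart_test_{i}"
        store.create(name)
        store.insert(name, [{"id": j} for j in range(10 * (i + 1))])
        expected[name] = store.count(name)
    return expected


def second_deployment(volume: Path, expected: dict) -> None:
    """Phase 2: old collections must still be usable and new ones creatable."""
    store = FileBackedStore(volume)  # fresh instance = restarted (or upgraded) service
    for name, n_rows in expected.items():
        assert store.count(name) == n_rows, f"{name} lost data"
        store.insert(name, [{"id": -1}])  # still writable
    store.create("created_after_restart")
    assert store.count("created_after_restart") == 0


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        volume = Path(tmp)                   # stands in for the volume / PVC
        expected = first_deployment(volume)  # first deployment
        second_deployment(volume, expected)  # restart (same image) or upgrade (newer image)
        print("restart/upgrade checks passed:", expected)
```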

Reliability Test

Reliability testing of cloud-native distributed systems usually adopts a chaos engineering method, whose purpose is to nip errors and system failures in the bud. In other words, in a chaos engineering test, we purposefully create system failures to identify issues in pressure tests and fix them before they cause real harm. For chaos tests in Milvus, we chose Chaos Mesh as the tool to create chaos. The following types of failures need to be created:

- Pod kill: a simulation of the scenario where nodes are down.

- Pod failure: a test of whether the whole system can continue to work if one of the worker node pods fails.

- Memory stress: a simulation of heavy memory and CPU resource consumption on the worker nodes.

- Network partition: since Milvus separates storage from computing, the system relies heavily on communication between its components. A simulation of the scenario where communication between different pods is partitioned is needed to test the interdependency of different Milvus components.
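The failure types above map onto Chaos Mesh resource kinds: `PodChaos` (actions `pod-kill` and `pod-failure`), `StressChaos`, and `NetworkChaos` (action `partition`). The sketch below builds such objects as Python dicts and prints one as JSON, which is also valid YAML. The namespace, the pod labels (for example `component: querynode`), the durations, and the stress sizes are assumptions to adapt to an actual deployment, and recurring injection (such as killing a pod every five seconds) is left out.

```python
import json
from typing import Dict, Optional

API_VERSION = "chaos-mesh.org/v1alpha1"


def _selector(namespace: str, labels: Dict[str, str]) -> dict:
    """Select target pods by namespace and labels."""
    return {"namespaces": [namespace], "labelSelectors": dict(labels)}


def chaos_manifest(failure: str, namespace: str, labels: Dict[str, str],
                   peer_labels: Optional[Dict[str, str]] = None) -> dict:
    """Build a Chaos Mesh object for one of the four failure types above."""
    selector = _selector(namespace, labels)
    if failure == "pod-kill":
        kind = "PodChaos"
        spec = {"action": "pod-kill", "mode": "one", "selector": selector}
    elif failure == "pod-failure":
        # pod-failure keeps the selected pod unavailable for `duration`
        kind = "PodChaos"
        spec = {"action": "pod-failure", "mode": "one", "duration": "2m", "selector": selector}
    elif failure == "memory-stress":
        kind = "StressChaos"
        spec = {"mode": "one", "selector": selector, "duration": "2m",
                "stressors": {"memory": {"workers": 4, "size": "1GB"},
                              "cpu": {"workers": 2, "load": 80}}}
    elif failure == "network-partition":
        if not peer_labels:
            raise ValueError("network-partition needs peer_labels for the other side")
        kind = "NetworkChaos"
        spec = {"action": "partition", "mode": "all", "selector": selector,
                "direction": "both", "duration": "2m",
                "target": {"mode": "all", "selector": _selector(namespace, peer_labels)}}
    else:
        raise ValueError(f"unknown failure type: {failure}")
    return {"apiVersion": API_VERSION, "kind": kind,
            "metadata": {"name": f"milvus-{failure}", "namespace": namespace},
            "spec": spec}


if __name__ == "__main__":
    # Assumed labels for the query node pods of a release named "milvus-chaos".
    querynode = {"app.kubernetes.io/instance": "milvus-chaos", "component": "querynode"}
    print(json.dumps(chaos_manifest("pod-kill", "chaos-testing", querynode), indent=2))
```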

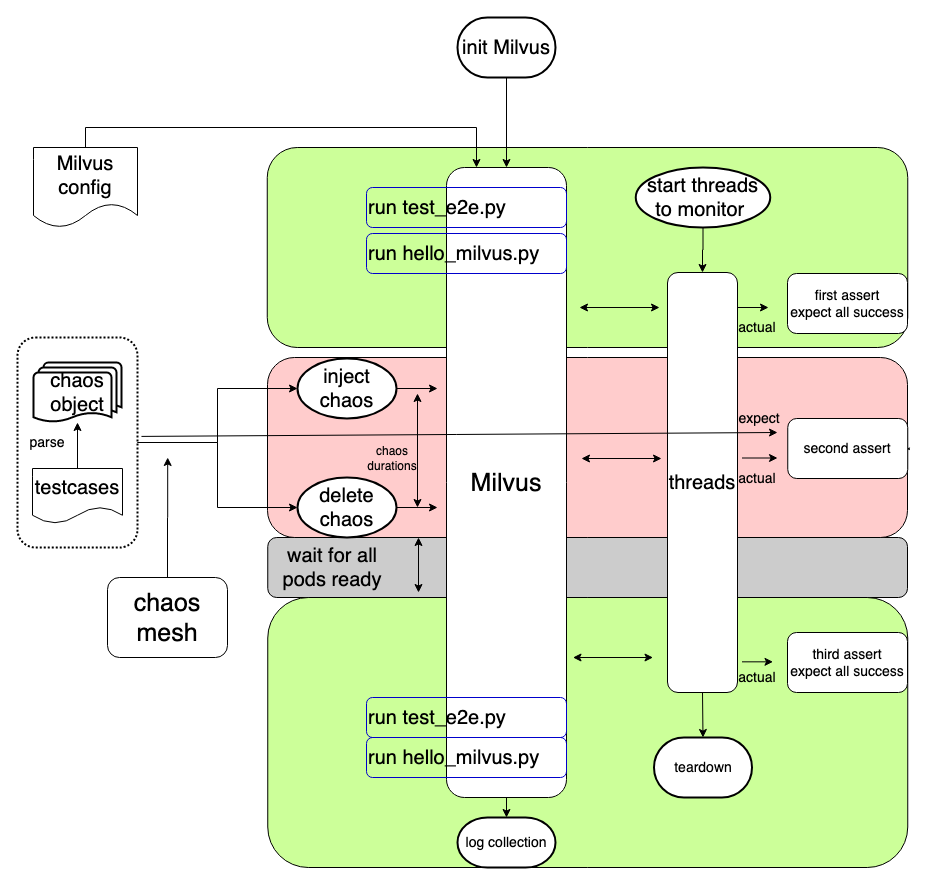

The figure above demonstrates the reliability test framework in Milvus that can automate chaos tests. The workflow of a reliability test is as follows:

- First, initialize a Milvus cluster by reading the deployment configurations.

- When the cluster is ready, run `test_e2e.py` to test whether the Milvus features are available.

- Run `hello_milvus.py` to test data persistence: create a collection named "hello_milvus" for data insertion, flush, index building, vector search, and query. This collection will not be released or dropped during the test.

- Create a monitoring object that starts six threads executing create, insert, flush, index, search, and query operations:

```python
# Op and the checker classes are defined in the Milvus chaos test code;
# each checker repeatedly runs its operation in its own thread.
checkers = {
    Op.create: CreateChecker(),
    Op.insert: InsertFlushChecker(),
    Op.flush: InsertFlushChecker(flush=True),
    Op.index: IndexChecker(),
    Op.search: SearchChecker(),
    Op.query: QueryChecker(),
}
```

- Make the first assertion: all operations succeed as expected.

- Introduce a system failure into Milvus by having Chaos Mesh parse the YAML file that defines the failure. For instance, the failure can be killing the query node every five seconds.

- Make the second assertion while the system failure is in effect: judge whether the results returned by Milvus operations during the failure match expectations.

- Eliminate the failure via Chaos Mesh.

- When the Milvus service has recovered (meaning all pods are ready), make the third assertion: all operations succeed as expected.

- Run `test_e2e.py` to test whether the Milvus features are available. During the chaos, some operations might be blocked, and some might remain blocked even after the chaos is eliminated, keeping the third assertion from succeeding as expected. This step therefore supports the third assertion and serves as the criterion for judging whether the Milvus service has recovered.

- Run `hello_milvus.py` to load the created collection and conduct CRUD operations on it. Then check whether the data that existed before the system failure are still available after recovery.

- Collect logs.
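The checker-thread pattern and the three assertions can be illustrated without a cluster. In the sketch below, each hypothetical `OpChecker` runs a fake operation in its own thread; the fake search and query operations fail while a simulated query node is down, whereas insert keeps working. This is not Milvus's checker implementation, and the choice of which operations are expected to fail during the chaos is an assumption made for the example.

```python
import threading
import time
from typing import Callable, Dict


class OpChecker:
    """Hypothetical checker: runs one operation in a loop on its own thread and
    counts successes and failures. This is not the Milvus checker implementation."""

    def __init__(self, op: Callable[[], None]) -> None:
        self._op = op
        self._lock = threading.Lock()
        self._stop = threading.Event()
        self.succ = 0
        self.fail = 0
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def _loop(self) -> None:
        while not self._stop.is_set():
            # Run and record under the lock so reset() never races with an in-flight call.
            with self._lock:
                try:
                    self._op()
                    self.succ += 1
                except Exception:
                    self.fail += 1
            time.sleep(0.001)

    def start(self) -> None:
        self._thread.start()

    def reset(self) -> None:
        with self._lock:
            self.succ = self.fail = 0

    def succ_rate(self) -> float:
        with self._lock:
            total = self.succ + self.fail
            return self.succ / total if total else 0.0

    def terminate(self) -> None:
        self._stop.set()
        self._thread.join()


def make_op(needs_query_node: bool, query_node_down: threading.Event) -> Callable[[], None]:
    """Fake operation that fails while the simulated query node is down."""
    def op() -> None:
        if needs_query_node and query_node_down.is_set():
            raise RuntimeError("query node unavailable")
    return op


def measure(checkers: Dict[str, OpChecker], window: float) -> Dict[str, float]:
    """Reset counters, let the checkers run for `window` seconds, report success rates."""
    for checker in checkers.values():
        checker.reset()
    time.sleep(window)
    return {name: checker.succ_rate() for name, checker in checkers.items()}


def run_chaos_scenario(window: float = 0.2):
    query_node_down = threading.Event()
    checkers = {
        "insert": OpChecker(make_op(False, query_node_down)),
        "search": OpChecker(make_op(True, query_node_down)),
        "query": OpChecker(make_op(True, query_node_down)),
    }
    for checker in checkers.values():
        checker.start()
    try:
        before = measure(checkers, window)  # first assertion: everything succeeds
        query_node_down.set()               # inject the failure
        during = measure(checkers, window)  # second assertion: compare with expectations
        query_node_down.clear()             # eliminate the failure
        after = measure(checkers, window)   # third assertion: everything succeeds again
    finally:
        for checker in checkers.values():
            checker.terminate()
    return before, during, after
```

Resetting the counters at the start of each window is what turns one long-running set of threads into three separate assertions; holding the lock while the operation runs ensures that no result from a previous phase leaks into the next one.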

Stability and Performance Test

The table below describes the purposes, test scenarios, and metrics of stability and performance tests.

| | STABILITY TEST | PERFORMANCE TEST |
|---|---|---|
| Purposes | Ensure that Milvus can work smoothly for a fixed period of time under a normal workload; make sure resources are consumed stably when the Milvus service starts. | Test the performance of all Milvus interfaces; find the optimal configuration with the help of performance tests; serve as the benchmark for future releases; find the bottlenecks that hamper better performance. |
| Scenarios | Offline read-intensive scenario, where data are barely updated after insertion and the request mix is 90% search, 5% insert, and 5% others; online write-intensive scenario, where data are inserted and searched simultaneously and the request mix is 50% insert, 40% search, and 10% others. | Data insertion; index building; vector search. |
| Metrics | Memory usage; CPU consumption; IO latency; status of Milvus pods; response time of the Milvus service; etc. | Data throughput during data insertion; time taken to build an index; response time during a vector search; queries per second (QPS); requests per second; recall rate; etc. |
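The request mixes in the stability scenarios can be reproduced with a weighted random generator. This is a minimal sketch, assuming that "others" can be treated as a single request type; seeding the generator keeps the load identical across runs, which helps when comparing releases.

```python
import random
from collections import Counter
from typing import Dict, List

# Request mixes taken from the stability-test scenarios in the table;
# "other" lumps together the remaining request types.
READ_INTENSIVE = {"search": 0.90, "insert": 0.05, "other": 0.05}
WRITE_INTENSIVE = {"insert": 0.50, "search": 0.40, "other": 0.10}


def request_stream(mix: Dict[str, float], n: int, seed: int = 0) -> List[str]:
    """Draw n request types whose frequencies follow `mix` (seeded for reproducibility)."""
    rng = random.Random(seed)
    kinds = list(mix)
    return rng.choices(kinds, weights=[mix[k] for k in kinds], k=n)


def observed_mix(requests: List[str]) -> Dict[str, float]:
    """Fraction of each request type actually generated."""
    counts = Counter(requests)
    return {kind: count / len(requests) for kind, count in counts.items()}


if __name__ == "__main__":
    stream = request_stream(READ_INTENSIVE, 10_000)
    print(observed_mix(stream))  # each fraction should be close to READ_INTENSIVE
```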

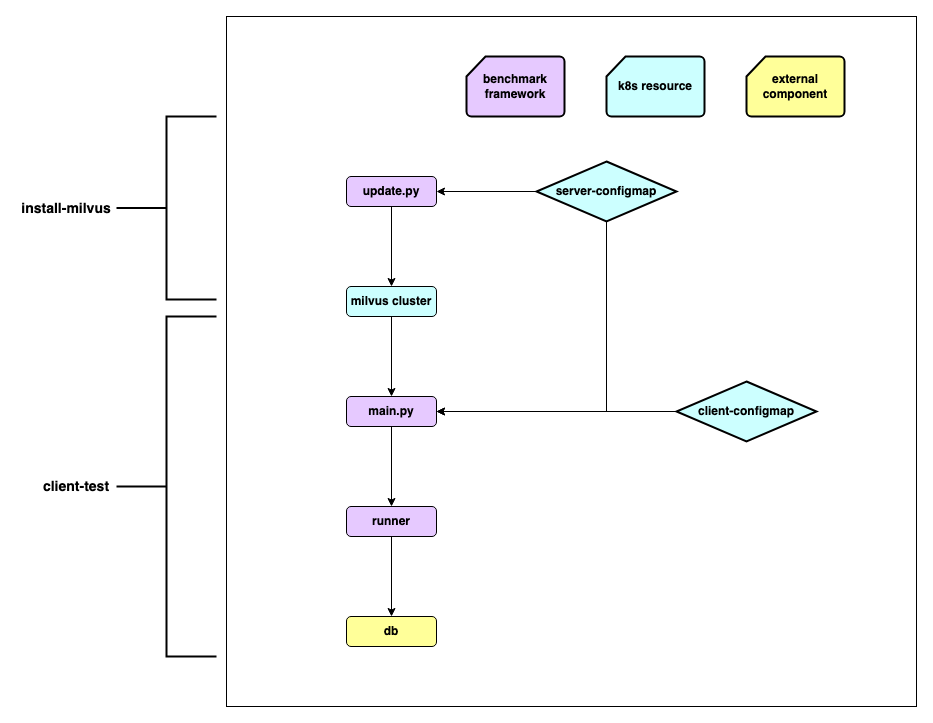

Stability tests and performance tests share the same workflow:

- Parse and update configurations, and define metrics. The `server-configmap` corresponds to the Milvus standalone or cluster configuration, while the `client-configmap` corresponds to the test case configurations.

- Configure the server and the client.

- Prepare data.

- Run request interactions between the server and the client.

- Report and display metrics.
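For the metrics step, the sketch below shows one common way to compute response-time percentiles, QPS, and recall rate from a query loop, using exact L2 search as a stand-in for the system under test. The helper names are hypothetical; the nearest-rank percentile and recall@k (the share of the true top-k neighbors that appear in the returned top-k) are standard choices, though not necessarily the ones the Milvus benchmark tooling uses. Queries run one at a time, so the QPS here is single-client throughput; load tests would issue requests concurrently.

```python
import math
import time
from typing import Callable, Dict, List, Sequence


def recall_at_k(retrieved: Sequence[int], ground_truth: Sequence[int], k: int) -> float:
    """Size of the intersection of returned and true top-k ids, divided by k."""
    return len(set(ground_truth[:k]).intersection(retrieved[:k])) / k


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def brute_force_search(data: List[List[float]], k: int) -> Callable[[Sequence[float]], List[int]]:
    """Exact L2 search over in-memory vectors; stands in for the system under test."""
    def search(query: Sequence[float]) -> List[int]:
        order = sorted(range(len(data)),
                       key=lambda i: sum((a - b) ** 2 for a, b in zip(data[i], query)))
        return order[:k]
    return search


def benchmark(search: Callable[[Sequence[float]], List[int]],
              queries: Sequence[Sequence[float]],
              ground_truth: Sequence[Sequence[int]], k: int) -> Dict[str, float]:
    """Run queries one at a time and report QPS, p99 latency, and mean recall@k."""
    latencies: List[float] = []
    recalls: List[float] = []
    start = time.perf_counter()
    for query, truth in zip(queries, ground_truth):
        t0 = time.perf_counter()
        ids = search(query)
        latencies.append(time.perf_counter() - t0)
        recalls.append(recall_at_k(ids, truth, k))
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return {
        "qps": len(latencies) / elapsed,
        "p99_latency_s": percentile(latencies, 99),
        "mean_recall": sum(recalls) / len(recalls),
    }
```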

Tools and Methods for Better QA Efficiency

As the module testing section shows, the procedure for most tests is almost the same, mainly involving modifying Milvus server and client configurations and passing API parameters. When there are multiple configurations, the more varied the combinations of configurations, the more testing scenarios these tests can cover. As a result, reusing code and procedures is all the more critical to improving testing efficiency.
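Enumerating configuration combinations is a Cartesian product, which makes it easy to reuse one test procedure across all of them. The option names and values below are illustrative assumptions (IVF_FLAT and HNSW are Milvus index types and L2 and IP are Milvus metric types, but the grid itself is hypothetical), and `run_all` simply applies the same procedure to every server/client pair.

```python
from itertools import product
from typing import Any, Callable, Dict, List

# Illustrative option grids; names and values are assumptions, not Milvus defaults.
SERVER_OPTIONS = {"deploy_mode": ["standalone", "cluster"], "query_node_replicas": [1, 2]}
CLIENT_OPTIONS = {"index_type": ["IVF_FLAT", "HNSW"], "metric_type": ["L2", "IP"], "nq": [1, 100]}


def expand(options: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Cartesian product of the option lists, one dict per combination."""
    keys = list(options)
    return [dict(zip(keys, values)) for values in product(*(options[k] for k in keys))]


def scenarios(server: Dict[str, List[Any]],
              client: Dict[str, List[Any]]) -> List[Dict[str, Dict[str, Any]]]:
    """Pair every server configuration with every client configuration."""
    return [{"server": s, "client": c} for s in expand(server) for c in expand(client)]


def run_all(procedure: Callable[[Dict[str, Any], Dict[str, Any]], Any],
            server: Dict[str, List[Any]], client: Dict[str, List[Any]]) -> List[Any]:
    """Reuse one test procedure for every configuration combination."""
    return [procedure(case["server"], case["client"]) for case in scenarios(server, client)]
```

With 4 server and 8 client configurations, one procedure already covers 32 scenarios, which is why the number of cases grows quickly as options are added.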

SDK Test Framework

To accelerate the testing process, we can add an API_request wrapper to the original testing framework and make it act like an API gateway. This gateway collects all API requests, passes them to Milvus, and collectively receives the responses, which are then passed back to the client. This design makes it much easier to capture log information such as parameters and returned results. In addition, the checker component in the SDK test framework verifies and examines the results from Milvus, and all checking methods can be defined within this component.
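A minimal sketch of the gateway idea, assuming nothing about the real Milvus test framework: every SDK call goes through one hypothetical `api_request` function that logs the parameters and the result or error, and a hypothetical `ResponseChecker` keeps all checking methods in one place.

```python
import logging
from typing import Any, Callable, Dict, Tuple

log = logging.getLogger("api_request")


def api_request(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, bool]:
    """Single gateway for SDK calls: logs parameters and results, never lets errors escape."""
    name = getattr(func, "__name__", repr(func))
    log.info("request %s args=%r kwargs=%r", name, args, kwargs)
    try:
        result = func(*args, **kwargs)
    except Exception as exc:  # the error object becomes the response to check
        log.info("error from %s: %r", name, exc)
        return exc, False
    log.info("response from %s: %r", name, result)
    return result, True


class ResponseChecker:
    """Keeps all checking methods in one component; check_<task> names are hypothetical."""

    def __init__(self, response: Any, succeeded: bool) -> None:
        self.response = response
        self.succeeded = succeeded

    def run(self, task: str, expected: Dict[str, Any]) -> bool:
        return getattr(self, f"check_{task}")(expected)

    def check_success(self, expected: Dict[str, Any]) -> bool:
        # Succeeded and, if a result is given, returned exactly that result.
        return self.succeeded and ("result" not in expected or self.response == expected["result"])

    def check_error(self, expected: Dict[str, Any]) -> bool:
        # Failed, with an error message containing the expected text.
        return not self.succeeded and expected["message"] in str(self.response)
```

Calling `logging.basicConfig(level=logging.INFO)` first makes the captured parameters and responses visible. Because a failing call returns the exception and `False` instead of raising, a negative test can verify the message with `run("error", {...})` in the same way a positive test uses `run("success", {...})`.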

With the SDK test framework, some crucial initialization processes can be wrapped into a single function, eliminating large chunks of tedious code.

It is also noteworthy that each individual test case uses its own unique collection to ensure data isolation.

When executing test cases, the pytest extension `pytest-xdist` can be leveraged to execute all individual test cases in parallel, greatly boosting efficiency.
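Because pytest-xdist runs test cases in separate worker processes, collection names must not collide across workers. A minimal sketch, assuming names made of letters, digits, and underscores: it combines the `PYTEST_XDIST_WORKER` environment variable, which pytest-xdist sets in each worker (for example `gw0`), with a random UUID. The function name and prefix are hypothetical.

```python
import os
import uuid


def unique_collection_name(prefix: str = "case") -> str:
    """Return a collection name that is unique per call and per xdist worker.

    PYTEST_XDIST_WORKER is set by pytest-xdist in each worker process (e.g. "gw0");
    outside xdist it is absent and "main" is used instead.
    """
    worker = os.environ.get("PYTEST_XDIST_WORKER", "main")
    # uuid4().hex is 32 lowercase hex characters, so the name stays within [A-Za-z0-9_].
    return f"{prefix}_{worker}_{uuid.uuid4().hex}"
```

With this in place, running the suite with `pytest -n auto` (or a fixed worker count such as `-n 4`) lets cases run in parallel without sharing collections.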

GitHub Actions

GitHub Actions is also adopted to improve QA efficiency because of the following characteristics:

- It is a native CI/CD tool deeply integrated with GitHub.

- It has a uniformly configured machine environment and pre-installed common software development tools, including Docker, Docker Compose, etc.

- It supports multiple operating systems and versions, including Ubuntu, macOS, Windows Server, etc.

- It has a marketplace that offers rich extensions and out-of-the-box functions.

- Its matrix strategy supports concurrent jobs that reuse the same test flow, improving efficiency.

Apart from the characteristics above, another reason for adopting GitHub Actions is that deployment tests and reliability tests require an independent and isolated environment. GitHub Actions is also ideal for daily inspection checks on small-scale datasets.

Tools for Benchmark Tests

To make QA tests more efficient, a number of tools are used.

- Argo: a set of open-source tools for Kubernetes to run workflows and manage clusters by scheduling tasks. It can also enable running multiple tasks in parallel.

- Kubernetes dashboard: a web-based Kubernetes user interface for visualizing `server-configmap` and `client-configmap`.

- NAS: Network-attached storage (NAS) is a file-level computer data storage server for keeping common ANN-benchmark datasets.

- InfluxDB and MongoDB: Databases for saving results of benchmark tests.

- Grafana: An open-source analytics and monitoring solution for monitoring server resource metrics and client performance metrics.

- Redash: A service that helps visualize your data and create charts for benchmark tests.

Published at DZone with permission of Wx Zhu. See the original article here.

