Open-Source Dapr for Spring Boot Developers

Using Dapr with Spring Boot simplifies the development of Dapr-enabled apps: run, test, and debug locally without the need to run inside a K8s cluster.

Every day, developers are pushed to evaluate and use different tools and cloud provider services, and to follow complex inner-development loops. In this article, we look at how the open-source Dapr project can help Spring Boot developers build more resilient and environment-agnostic applications while keeping their inner development loop intact.

Meeting Developers Where They Are

A couple of weeks ago at Spring I/O, we had the chance to meet the Spring community face-to-face in the beautiful city of Barcelona, Spain. At this conference, the Spring framework maintainers, core contributors, and end users meet yearly to discuss the framework's latest additions, news, upgrades, and future initiatives.

While I’ve seen many presentations covering topics such as Kubernetes, containers, and deploying Spring Boot applications to different cloud providers, these topics are always covered in a way that makes sense for Spring developers. Most tools presented in the cloud-native space, however, require adopting new tooling and changing the tasks developers perform, sometimes including complex configurations and remote environments.

Tools like the Dapr project, which can be installed on a Kubernetes cluster, push developers to add Kubernetes as part of their inner-development loop tasks. While some developers might be comfortable with extending their tasks to include Kubernetes for local development, some teams prefer to keep things simple and use tools like Testcontainers to create ephemeral environments where they can test their code changes for local development purposes.

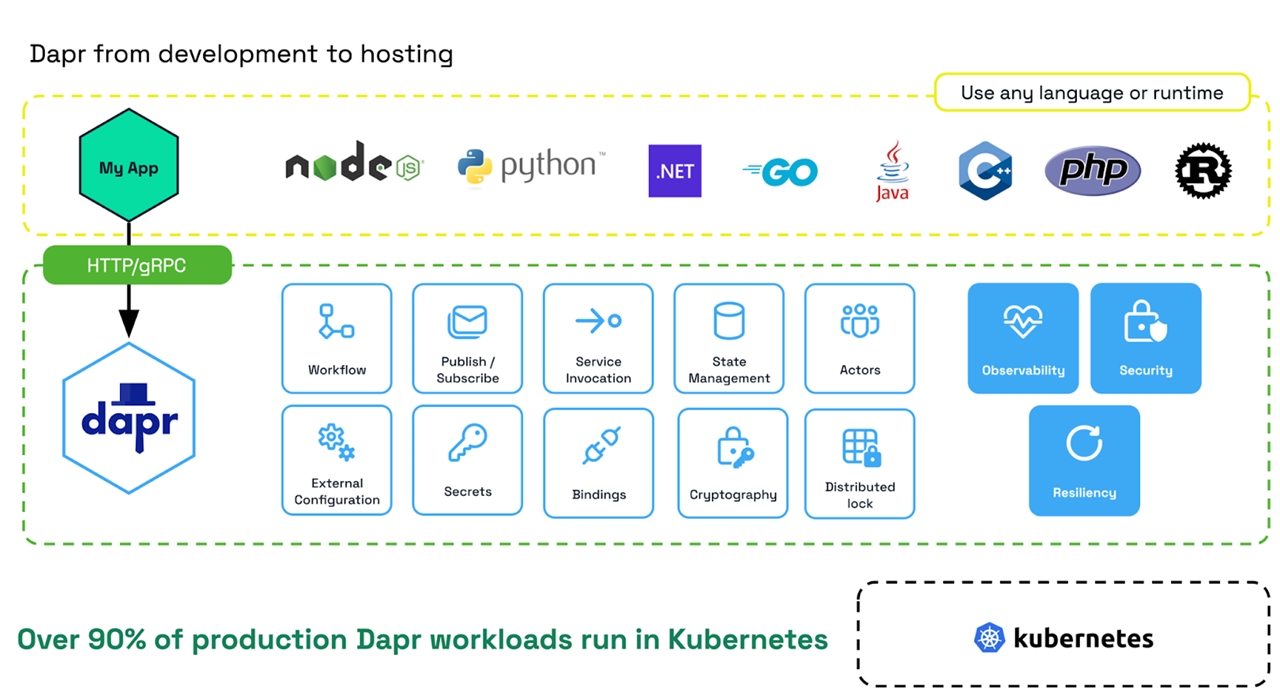

With Dapr, developers can rely on consistent APIs across programming languages. Dapr provides a set of building blocks (state management, publish/subscribe, service invocation, actors, and workflows, among others) that developers can use to code their application features.

Instead of spending too much time describing what Dapr is, in this article, we cover how the Dapr project and its integration with the Spring Boot framework can simplify the development experience for Dapr-enabled applications that can be run, tested, and debugged locally without the need to run inside a Kubernetes cluster.

Today, Kubernetes, and Cloud-Native Runtimes

Today, if you want to work with the Dapr project, no matter the programming language you are using, the easiest way is to install Dapr into a Kubernetes cluster. Kubernetes and container runtimes are the most common runtimes for our Java applications today. Asking Java developers to work and run their applications on a Kubernetes cluster for their day-to-day tasks might be way out of their comfort zone. Training a large team of developers on using Kubernetes can take a while, and they will need to learn how to install tools like Dapr on their clusters.

If you are a Spring Boot developer, you probably want to code, run, debug, and test your Spring Boot applications locally. For this reason, we created a local development experience for Dapr, teaming up with the Testcontainers folks, now part of Docker.

As a Spring Boot developer, you can use the Dapr APIs without a Kubernetes cluster or needing to learn how Dapr works in the context of Kubernetes.

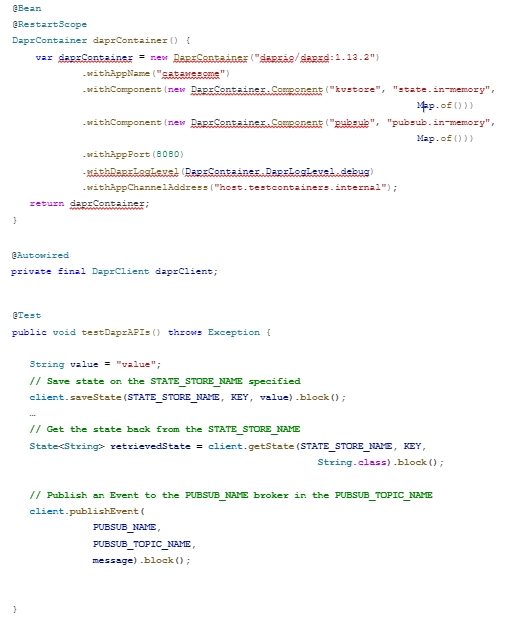

This test shows how Testcontainers provisions the Dapr runtime by using the @ClassRule annotation, which is in charge of bootstrapping the Dapr runtime so your application code can use the Dapr APIs to save/retrieve state, exchange asynchronous messages, retrieve configurations, create workflows, and use the Dapr actor model.

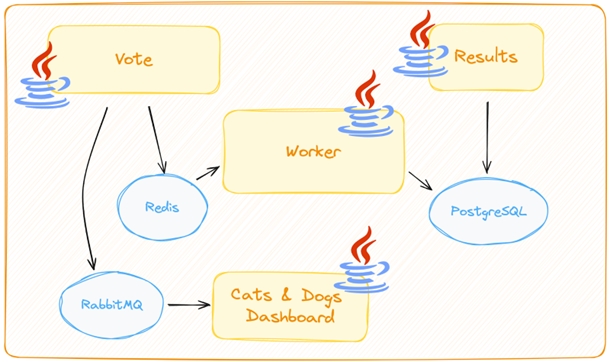

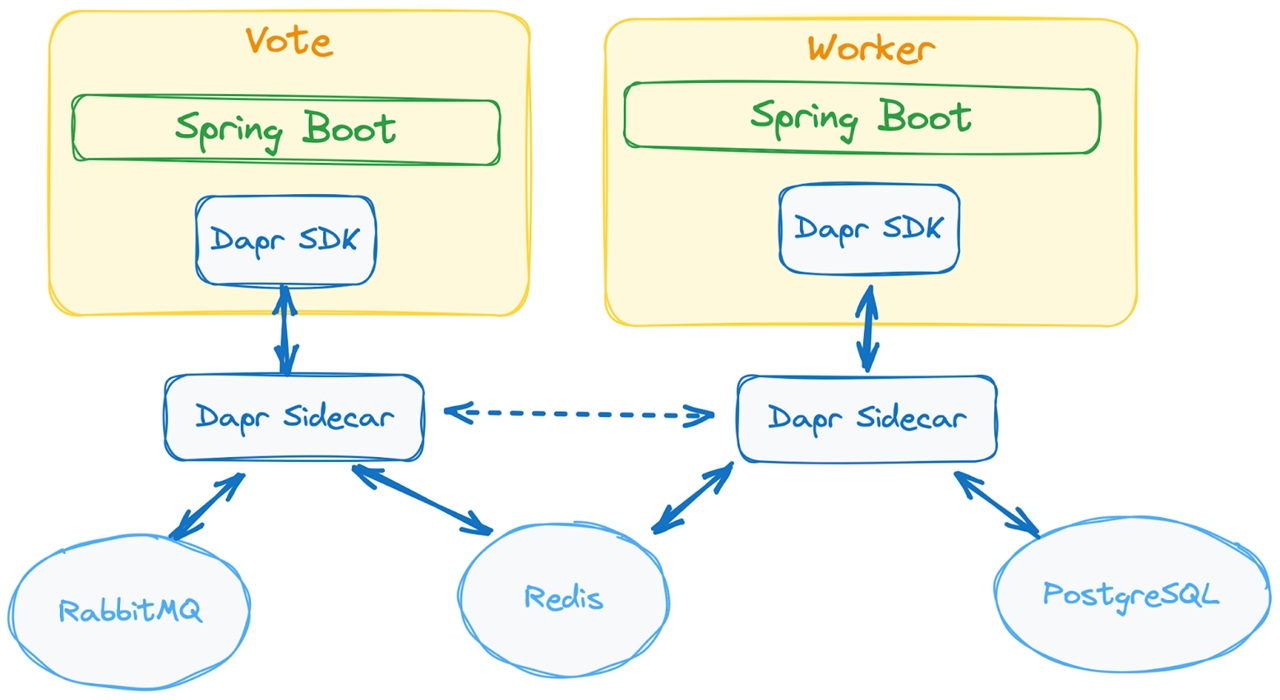

How does this compare to a typical Spring Boot application? Let’s say you have a distributed application that uses Redis, PostgreSQL, and RabbitMQ to persist and read state and Kafka to exchange asynchronous messages. You can find the code for this application here (under the java/ directory, you can find all the Java implementations).

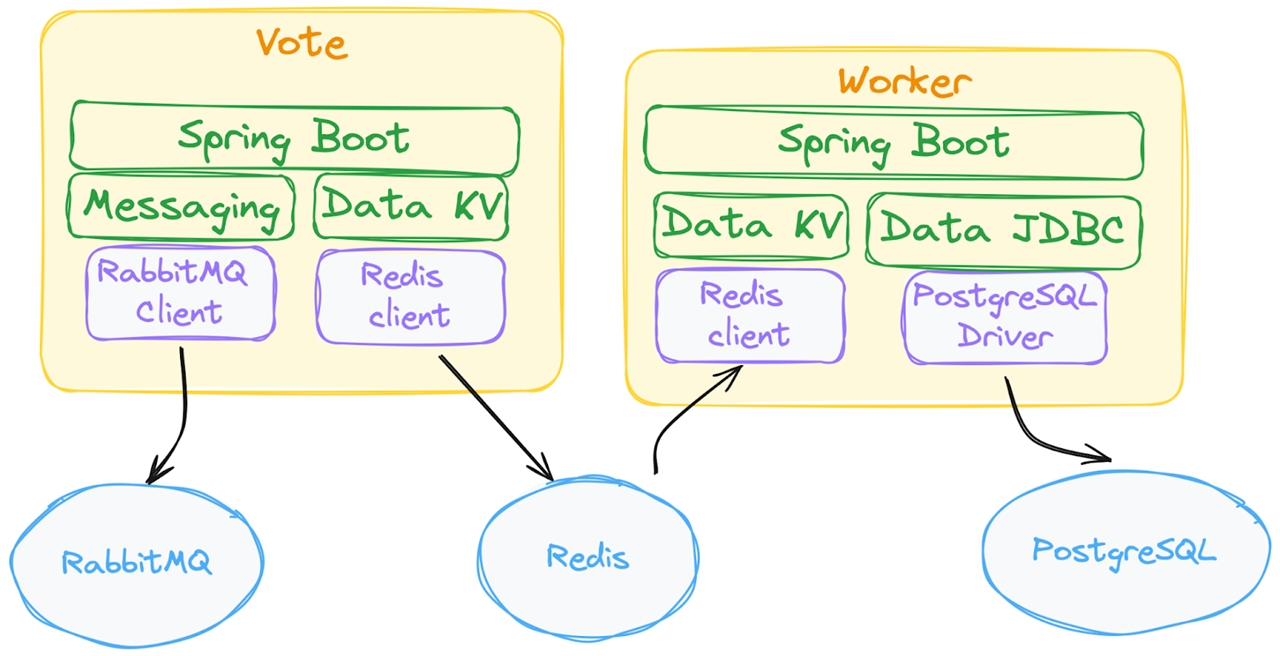

Your Spring Boot applications will need to have not only the Redis client but also the PostgreSQL JDBC driver and the RabbitMQ client as dependencies. On top of that, it is pretty standard to use Spring Boot abstractions, such as Spring Data KeyValue for Redis, Spring Data JDBC for PostgreSQL, and Spring Boot Messaging RabbitMQ. These abstractions and libraries elevate the basic Redis, relational database, and RabbitMQ client experiences to the Spring Boot programming model. Spring Boot will do more than just call the underlying clients. It will manage the underlying client lifecycle and help developers implement common use cases while promoting best practices under the covers.

If we look back at the test that showed how Spring Boot developers can use the Dapr APIs, the interactions will look like this:

In the second diagram, the Spring Boot application only depends on the Dapr APIs. In both the unit test using the Dapr APIs shown above and the previous diagram, instead of connecting to the Dapr APIs directly using HTTP or gRPC requests, we have chosen to use the Dapr Java SDK. No RabbitMQ, Redis clients, or JDBC drivers were included in the application classpath.
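To make the contrast with store-specific clients more concrete, here is a minimal sketch of what talking to the Dapr state API over plain HTTP could look like, using only the JDK's `java.net.http` classes. It assumes a Dapr sidecar listening on `localhost:3500` (Dapr's default HTTP port) and a state store component named `kvstore`. The class name `DaprStateRequests` and the sample key are hypothetical. The requests are only built here, not sent; the Dapr Java SDK hides this kind of call (over HTTP or gRPC) so you don't write it by hand.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds requests against the Dapr state HTTP API.
final class DaprStateRequests {

    // Assumption: the sidecar exposes its HTTP API on the default port 3500.
    static final String DAPR_BASE = "http://localhost:3500/v1.0";

    // The state API accepts a JSON array of key/value entries.
    // Sketch only: the key is not JSON-escaped and jsonValue must already be valid JSON.
    static String saveStateBody(String key, String jsonValue) {
        return "[{\"key\":\"" + key + "\",\"value\":" + jsonValue + "}]";
    }

    // POST /v1.0/state/{storeName}
    static HttpRequest saveState(String storeName, String key, String jsonValue) {
        return HttpRequest.newBuilder()
                .uri(URI.create(DAPR_BASE + "/state/" + storeName))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(saveStateBody(key, jsonValue)))
                .build();
    }

    // GET /v1.0/state/{storeName}/{key}
    static HttpRequest getState(String storeName, String key) {
        return HttpRequest.newBuilder()
                .uri(URI.create(DAPR_BASE + "/state/" + storeName + "/" + key))
                .GET()
                .build();
    }
}
```

Nothing in these requests mentions Redis or PostgreSQL. Which store backs `kvstore` is decided in the Dapr component configuration, not in the application.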

This approach of using Dapr has several advantages:

- The application has fewer dependencies, so it doesn’t need to include the Redis or RabbitMQ client. The application size is not only smaller but less dependent on concrete infrastructure components that are specific to the environment where the application is being deployed. Remember that these clients’ versions must match the component instance running on a given environment. With more and more Spring Boot applications deployed to cloud providers, it is pretty standard not to have control over which versions of components like databases and message brokers will be available across environments. Developers will likely run a local version of these components using containers, causing version mismatches with environments where the applications run in front of our customers.

- The application doesn’t create connections to Redis, RabbitMQ, or PostgreSQL. Because the configuration of connection pools and other details closely relate to the infrastructure and these components are pushed away from the application code, the application is simplified. All these concerns are now moved out of the application and consolidated behind the Dapr APIs.

- A new application developer doesn’t need to learn how RabbitMQ, PostgreSQL, or Redis works. The Dapr APIs are self-explanatory: if you want to save the application’s state, use the saveState() method. If you want to publish an event, use the publishEvent() method. Developers using an IDE can easily check which APIs are available for them to use.

- The teams configuring the cloud-native runtime can use their favorite tools to configure the available infrastructure. If they move from a self-managed Redis instance to Google Cloud Memorystore, they can swap their Redis instance without changing the application code. If they want to swap a self-managed Kafka instance for Google PubSub or Amazon SQS/SNS, they can shift Dapr configurations.
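The last point can be illustrated with a small sketch of a publish call against the Dapr pub/sub HTTP API, again using only JDK classes. It assumes a sidecar on `localhost:3500` and a pub/sub component registered under the logical name `pubsub`. The class name `DaprPublishRequests` and the topic `votes` are hypothetical, and the request is built but not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds a publish request against the Dapr pub/sub HTTP API.
final class DaprPublishRequests {

    // Assumption: the sidecar exposes its HTTP API on the default port 3500.
    static final String DAPR_BASE = "http://localhost:3500/v1.0";

    // POST /v1.0/publish/{pubsubName}/{topic} with the event payload as the body.
    static HttpRequest publish(String pubsubName, String topic, String jsonPayload) {
        return HttpRequest.newBuilder()
                .uri(URI.create(DAPR_BASE + "/publish/" + pubsubName + "/" + topic))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonPayload))
                .build();
    }
}
```

The application only refers to the logical component name and the topic. Whether `pubsub` is backed by Kafka, RabbitMQ, or a cloud provider service is a matter of Dapr component configuration, so swapping brokers should not require touching this code.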

But, you ask, what about those APIs, saveState/getState and publishEvent? What about subscriptions? How do you consume an event? Can we elevate these API calls to work better with Spring Boot so developers don’t need to learn new APIs?

Tomorrow, a Unified Cross-Runtime Experience

In contrast with most technical articles, the answer here is not “It depends.” Of course, the answer is YES. We can follow the Spring Data and Messaging approach to provide a richer Dapr experience that integrates seamlessly with Spring Boot. This, combined with a local development experience (using Testcontainers), can help teams design and code applications that can run quickly and without changes across environments (local, Kubernetes, cloud provider).

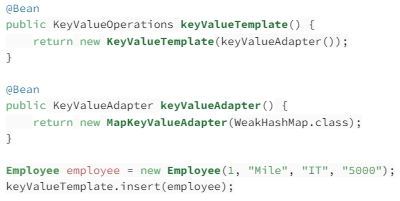

If you are already working with Redis, PostgreSQL, and/or RabbitMQ, you are most likely using Spring Boot abstractions such as Spring Data for persistence and Spring AMQP (RabbitMQ), Spring Kafka, or Spring Pulsar for asynchronous messaging.

For Spring Data KeyValue, check the post A Guide to Spring Data Key Value for more details.

To find an Employee by ID:

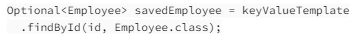

For asynchronous messaging, we can take a look at Spring Kafka, Spring Pulsar, and Spring AMQP (RabbitMQ) (see also Messaging with RabbitMQ), which all provide a way to produce and consume messages.

Producing messages with Kafka is this simple:

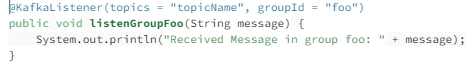

Consuming Kafka messages is extremely straightforward too:

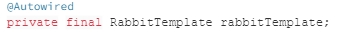

For RabbitMQ, we can do pretty much the same:

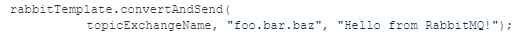

And then to send a message:

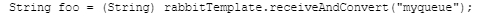

To consume a message from RabbitMQ, you can do:

Elevating Dapr to the Spring Boot Developer Experience

Now let’s take a look at how it would look with the new Dapr Spring Boot starters:

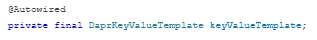

Let’s take a look at the DaprKeyValueTemplate:

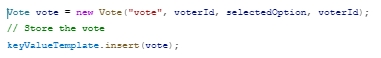

Let’s now store our Vote object using the KeyValueTemplate.

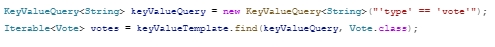

Let’s find all the stored votes by creating a query to the KeyValue store:

Now, why does this matter? The DaprKeyValueTemplate implements the KeyValueOperations interface provided by Spring Data KeyValue, which is implemented by tools like Redis, MongoDB, Memcached, PostgreSQL, and MySQL, among others. The big difference is that this implementation connects to the Dapr APIs and does not require any specific client. The same code can store data in Redis, PostgreSQL, MongoDB, and cloud provider-managed services such as AWS DynamoDB and Google Cloud Firestore. Over 30 data stores are supported in Dapr, and no changes to the application or its dependencies are needed.

Similarly, let’s take a look at the DaprMessagingTemplate.

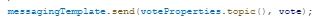

Let’s publish a message/event now:

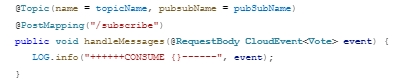

To consume messages/events, we can use the annotation approach similar to the Kafka example:

An important thing to notice is that, out of the box, Dapr uses CloudEvents to exchange events (other formats are also supported), regardless of the underlying implementation. Using the @Topic annotation, our application subscribes to all events published to a specified topic in a specific Dapr PubSub component.
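As a rough illustration of what such an envelope carries, the sketch below models the attributes required by the CloudEvents 1.0 specification (`id`, `source`, `specversion`, `type`) together with the optional `datacontenttype` and the `data` payload. The record name `CloudEventEnvelope`, the `wrap` helper, and the sample values are hypothetical and not part of the Dapr SDK; the envelope Dapr actually produces carries additional attributes.

```java
import java.util.UUID;

// Hypothetical model of a CloudEvents 1.0 envelope around an application payload.
record CloudEventEnvelope<T>(
        String id,              // unique identifier of this event
        String source,          // who produced the event
        String specversion,     // CloudEvents spec version, "1.0" here
        String type,            // event type chosen by the producer
        String datacontenttype, // media type of the payload
        T data) {               // the application payload itself

    // Wraps a payload with a random id and the 1.0 spec version.
    static <T> CloudEventEnvelope<T> wrap(String source, String type, T data) {
        return new CloudEventEnvelope<>(
                UUID.randomUUID().toString(), source, "1.0", type, "application/json", data);
    }
}
```

A subscriber written against an envelope like this reads the payload from `data()` and can use the surrounding metadata for routing or tracing, independently of which broker delivered the event.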

Once again, this code will work for all supported Dapr PubSub component implementations such as Kafka, RabbitMQ, Apache Pulsar, and cloud provider-managed services such as Azure Event Hubs, Google Cloud PubSub, and AWS SNS/SQS (see Dapr Pub/sub brokers documentation).

Combining the DaprKeyValueTemplate and DaprMessagingTemplate gives developers access to data manipulation and asynchronous messaging under a unified API, which doesn’t add application dependencies, and it is portable across environments, as you can run the same code against different cloud provider services.

While this looks much more like Spring Boot, more work is required. On top of Spring Data KeyValue, the Spring Repository interface can be implemented to provide a CrudRepository experience. There are also some rough edges for testing, and documentation is needed to ensure developers can get started with these APIs quickly.

Advantages and Trade-Offs

As with any new framework, project, or tool you add to the mix of technologies you are using, understanding trade-offs is crucial in measuring how a new tool will work specifically for you.

One thing that helped me understand the value of Dapr is the 80/20 rule, which goes as follows:

- 80% of the time, applications do simple operations against infrastructure components such as message brokers, key/value stores, configuration servers, etc. The application will need to store and retrieve state and emit and consume asynchronous messages just to implement application logic. For these scenarios, you can get the most value out of Dapr.

- 20% of the time, you need to build more advanced features that require deeper expertise on the specific message broker that you are using or to write a very performant query to compose a complex data structure. For these scenarios, it is okay not to use the Dapr APIs, as you probably require access to specific underlying infrastructure features from your application code.

It is common when we look at a new tool to generalize it to fit as many use cases as we can. With Dapr, we should focus on helping developers when the Dapr APIs fit their use cases. When the Dapr APIs don’t fit, or a use case requires provider-specific APIs, using provider-specific SDKs/clients is okay. By having a clear understanding of when the Dapr APIs might be enough to build a feature, a team can design and plan in advance what skills are needed to implement it. For example, do you need a RabbitMQ or Kafka expert, or a SQL and domain expert, to build some advanced queries?

Another mistake we should avoid is not considering the impact of tools on our delivery practices. The right tools reduce friction between environments and enable developers to create applications that run locally using the same APIs and dependencies required when running on a cloud provider.

With these points in mind, let’s look at the advantages and trade-offs:

Advantages

- Concise APIs to tackle cross-cutting concerns and access to common behavior required by distributed applications. This enables developers to delegate to Dapr concerns such as resiliency (retry and circuit breaker mechanisms), observability (using OpenTelemetry, logs, traces and metrics), and security (certificates and mTLS).

- With the new Spring Boot integration, developers can use their existing programming model to access Dapr functionality.

- With the Dapr and Testcontainers integration, developers don’t need to worry about running or configuring Dapr, or learning other tools that are external to their existing inner development loops. The Dapr APIs will be available for developers to build, test, and debug their features locally.

- The Dapr APIs can help developers save time when interacting with infrastructure. For example, instead of pushing every developer to learn about how Kafka/Pulsar/RabbitMQ works, they just need to learn how to publish and consume events using the Dapr APIs.

- Dapr enables portability across cloud-native environments, allowing your application to run against local or cloud-managed infrastructure without any code changes. Dapr provides a clear separation of concerns to enable operations/platform teams to wire infrastructure across a wide range of supported components.

Trade-Offs

Introducing abstraction layers, such as the Dapr APIs, always comes with some trade-offs.

- Dapr might not be the best fit for all scenarios. For those cases, nothing stops developers from separating more complex functionality that requires specific clients/drivers into separate modules or services.

- Dapr will be required in the target environment where the application will run. Your applications depend on Dapr being present and on the infrastructure they need being wired up correctly. If your operation/platform team is already using Kubernetes, Dapr should be easy to adopt as it is a quite mature CNCF project with over 3,000 contributors.

- Troubleshooting with an extra abstraction between our application and infrastructure components can become more challenging. The quality of the Spring Boot integration can be measured by how well errors are propagated to developers when things go wrong.

I know that advantages and trade-offs depend on your specific context and background. Feel free to reach out if you see something missing in this list.

Summary and Next Steps

Covering the Dapr Statestore (KeyValue) and PubSub (Messaging) is just the first step, as adding more advanced Dapr features into the Spring Boot programming model can help developers access more functionality required to create robust distributed applications. On our TODO list, Dapr Workflows for durable executions is coming next, as providing a seamless experience to develop complex, long-running orchestration across services is a common requirement.

One of the reasons why I was so eager to work on the Spring Boot and Dapr integration is that I know that the Java community has worked hard to polish their developer experiences focusing on productivity and consistent interfaces. I strongly believe that all this accumulated knowledge in the Java community can be used to take the Dapr APIs to the next level. By validating which use cases are covered by the existing APIs and finding gaps, we can build better integrations and automatically improve developers’ experiences across languages.

You can find all the source code for the example we presented at Spring I/O linked in the "Today, Kubernetes, and Cloud-Native Runtimes" section of this article.

We expect to merge the Spring Boot and Dapr integration code to the Dapr Java SDK to make this experience the default Dapr experience when working with Spring Boot. Documentation will come next.

If you want to contribute or collaborate with these projects and help us make Dapr even more integrated with Spring Boot, please contact us.

Published at DZone with permission of Thomas Vitale. See the original article here.
