On Git and Cognitive Load

Git is complex and translates to cognitive load for developers. Let's look at how to improve upon previous source control systems in a way that makes developers happy.

Join the DZone community and get the full member experience.

Join For FreeAny developer working in a modern engineering organization is likely working in git. Git, written by Linus Torvalds in 2005, has been since its creation, basically the ubiquitous tool for source control management. Git gained widespread adoption across engineering organizations as the successor to existing source control management such as Apache Subversion (SVN) and Concurrent Versions System (CVS), and this was mostly a byproduct of the timing.

Git predates cloud and improved network connectivity, and therefore the best solution to managing source control at scale was to decentralize and leverage local dev environments to power engineering organizations. Fast forward 17 years, this is no longer the case. Cloud is de facto, networking and internet connectivity is blazing fast, and this changes everything.

Git itself as a system, while it has its benefits, is known to come with a lot of complexity that ultimately translates to cognitive load for developers. This post is going to dive into that a bit, and how we can improve upon previous source control systems to deliver improved developer happiness in the future.

The Git State of the Union

One of my favorite websites is ohshitgit.com (hat tip to Katie Sylor-Miller, front end architect at Etsy for this), which despite being a developer for years, I visit regularly, because, well… Git commands are just hard and unintuitive. We can see this through many Git-related resources and ecosystem manifestations.

This comes from the fact that the Linux Foundation recently announced official Git certification (which in itself points to a problem… when you need to get certified that implies complexity), and there are other examples, such as the multitude of books and cheat sheets that are written about Git, the fact that five out of the top 10 ranked questions of all time on Stack Overflow are about Git, and that every company has its own Git master that everyone goes to for really serious Git debacles.

But it really doesn’t have to be that way. Git, or rather source control, is just a means to an end to delivers great software and shouldn’t come with so much overhead.

Exhibit 1:

Exhibit 2:

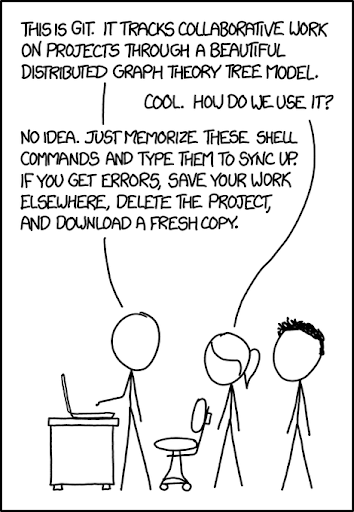

With a bonus XKCD:

This overhead comes at a time when developers are already overloaded with tasks and tools that have never really been their responsibility. There’s no doubt that it was much easier to be a programmer 20 years ago — all you really needed to know was… how to code. With all of the shift left movements coming from every single direction, developers are now expected to own basically every product-related discipline: from engineering to security, operations, support, testing, quality, and more.

All of these come with their own set of tools and frameworks from a diversity of clouds, containers, scanners and linters, config management and IaC, load testing and QA tools, and much more. Some of these even come with their own languages and syntax, where developers are expected to learn these very well — including the programming languages to the config languages, ops and scripting tools, module repos, NPM through PyPI, Terraform, and other IaC… and the list goes on.

This is the expectation of devs today, and all this while their primary source control system — Git — takes up a significant share of their mental capacity to get it right.

So it’s no surprise that developers move more slowly to avoid making mistakes (and the embarrassment that comes along with them), and are slowed down when mistakes happen and they need to fix them, sometimes because of something as basic as the wrong Git command.

But How Do Git Mistakes Still Happen?

This begs the question of how is this even possible?! With all of the existing resources and information available, how do these types of mistakes still happen with Git?

Because RTFM(ing) just doesn’t work anymore in a world of too much documentation, tooling, and frameworks. There just isn’t enough time to master each and every tool and read its documentation thoroughly with everything a developer is juggling on a daily basis.

What ends up happening is developers resort to searching for answers. (Remember our stack overflow questions?) And this can have disastrous outcomes sometimes.

At a company I worked at, a junior developer once searched for a Git command and decided to git push –force, which impacted all of the developers on the repo. You can imagine how the developer felt, but to be honest, this should not have happened in the first place.

Ultimately, your SCM should be a tool that serves to help you and provide you with the feeling of security that your work is always safe, and it should not be a constant source of headache and anxiety that you are one push away from disaster.

This is still the case because Git remains complicated — there’s too much to learn, and new engineers don’t become experts in Git for a while, which also makes it hard to adopt new tooling when you’re still learning Git for endless periods of time.

The Source of Git’s Complexity

There are a few elements to the Git architecture from where its complexity is derived. Let’s start with decentralization.

If we were to compare Git to other existing SCMs at the time — SVN or CVS — Git was built to break apart these tool’s centralization because a centralized repository and server required self-management and maintenance, and it was slow. To be able to create and switch branches and perform complicated merges, you needed blazing-fast connectivity and servers, and this just wasn’t available in 2005.

Connections and servers were slow, and branch and other operations would take unreasonable amounts of time. This resulted in workflows without branching, which impacted developer velocity significantly. So in order to improve this bottleneck in engineering, the best solution was to enable all developers to work locally (and cache their changes locally), and push their code to a central repository once the work was completed.

This decentralization and local work though is also the source of much of the heartache and frustration associated with Git, creating many conflicts in versions and branches that can be quite difficult to resolve (particularly with major code changes).

For example, in a centralized SCM, there’s only one copy of each branch. Either a commit exists on a branch, or it doesn’t. In Git, however, you have local and remote branches. Local branches can track remote branches with different names. You can have different (divergent) histories on the local and remote copies of your branch. This results in the need to work carefully when applying certain operations to a local copy of a branch, which is then pushed to the shared repository (e.g. rebasing) because of the way it might affect others’ work. Simple right? Or not… It’s all rather confusing.

Another source of confusion is the index, also called the staging area. It’s a snapshot of your files, which exists in-between your worktree and the committed version. Why is it needed? Some argue that it helps you split your work into separate commits. Perhaps. But it’s certainly not required.

For example, Mercurial (very similar to Git in most aspects) doesn’t have it, and you can still commit parts of your changes. What it certainly DOES result in is another layer of complexity. Now when comparing changes or resolving conflicts, you need to worry about the staging area and your worktree. This results in a plethora of Git command switches that nobody can memorize (e.g. git reset --soft/hard/mixed/merge *facepalm*) and complicated logic that is sometimes hard to predict.

Yet more confusion stems simply from what seems to be a lack of proper UX planning. Git’s CLI is notoriously confusing. There are operations that can be achieved in multiple ways (git branch, git checkout -b), commands that do different things depending on the context (see Git checkout), and command switches with completely unintuitive names (git diff --cached? git checkout --guess??). Things would have been simpler if Git’s developers would have given the interface more thought at the beginning. But, it seems that developer experience was not a priority in 2005.

GUI clients and IDE plugins have tried to bridge the DevEx gap, by making Git easier to use through their tools and platforms, but it can’t solve all issues. Eventually, you will inevitably need to use the command line to solve some kind of arcane problem or edge case.

At which point, when this occurs, many just bite the bullet and ask their Git guru how to fix the problem. The other option is to avoid the shame of being revealed as a novice (imposter syndrome anyone?), and to delete the repository (yes, yes including copying all of the local changes aside), clone the repo again, and try to resume work from the previous failure point (without triggering the same conflicts).

The Future of Source Control

The next generation of version control tools should be designed with developer experience in mind. It should be easy to use (easy != not powerful) with intuitive commands (when you need to use CLI) and fewer pitfalls so that it’s hard to make embarrassing or costly mistakes or meddle with others’ work.

It should give the developers exactly what they need to manage their code, not more and not less. It should not require you to read a book and understand its underlying data model prior to using it. It should also be usable by non-developers (most software projects today also include non-developers, but that’s a topic for another blog post).

Together with the expectation of better UX, one of the biggest changes that has happened since 2005 is the cloud. Having a centralized repository doesn’t mean managing your own servers anymore. Cloud-based services are scalable, fast, and (for the most part) easy to use. Think Dropbox, Google Docs, Asana, etc.

A new SCM could also take into account the modern DevOps needs, have powerful API capabilities, have deep and even native integrations with existing stacks, and be easily extensible, thus deriving the inherent benefits available in the cloud of customizability and flexibility. You could define your very own custom processes for pull request approval, CI processes pass/fail, pre-deployment checks, and much more. No two teams and companies are the same, as we know, and not all PRs were created equal. So how could there be a one-size-fits-all approach to PR management?

It’s time to rethink code management and rebuild it from the ground up as a cloud-based, modern, scalable, and powerful tool with a delightful developer experience. Our code management tools should help us save time, collaborate, and give a warm and pleasant feeling that our work is always safe — and not the opposite.

Opinions expressed by DZone contributors are their own.

Comments