O11y Guide, Cloud-Native Observability Pitfalls: Underestimating Cardinality

Continuing in this series examining the common pitfalls of cloud-native observability, take a look at how underestimating cardinality is the silent killer.


Are you looking at your organization's efforts to enter or expand into the cloud-native landscape and feeling a bit daunted by the vast expanse of information surrounding cloud-native observability? When you're moving so fast with agile practices across your DevOps, SRE, and platform engineering teams, it's no wonder this can seem a bit confusing.

Unfortunately, the choices being made have a great impact on your business, your budgets, and the ultimate success of your cloud-native initiatives, and hasty decisions upfront lead to big headaches very quickly down the road.

In the previous article, we talked about how focusing on cloud-native observability pillars has outlived its usefulness. Now it's time to move on to another common mistake organizations make: underestimating cardinality. By sharing common pitfalls in this series, the hope is that we can learn from them.

This article takes a look at how underestimating cardinality in our cloud-native observability can very quickly ruin our day.

Cardinality, the Silent Killer

The biggest problem facing our developers, engineers, and observability teams is that they are flooded with cloud-native data from their systems. Collecting all that we need versus exactly what we use to monitor our organization's applications and infrastructure is a constant struggle. This struggle leads to ever-increasing stress and frustration within teams trying to maintain some sense of order in the constant chaos.

The following quote is from an online forum where an SRE asked the community about dipping his feet into the tracing pond. Tracing is one form of gathering observability information across service calls, following the entire process end to end. He stated that he did not yet use tracing, but wanted to ask those who do why they do, and those who don't why not. Simple question, right?

Frustrations mounting?

The first answer was dripping with the frustration and sarcasm you find in any organization that is struggling with cloud-native observability:

"I don't yet collect spans/traces because I can hardly get our devs to care about basic metrics, let alone traces. This is a large enterprise with approx. 1000 developers."

Next thing you know, a new deployment in our organization triggers an explosion of data: a small metric change that starts collecting some unique value causes a rapid, multiplicative increase in cardinality. The result is a huge spike in observability data that is now causing delays in all queries and dashboards. On-call support staff are now being paged, and it plays out just like the 2023 Chronosphere Observability Report research showed: we spend on average 10 hours per week trying to triage and understand incidents. That's a quarter of our 40-hour work week!
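To make that explosion concrete, here is a rough back-of-the-envelope sketch. It assumes the worst case, where every combination of label values actually occurs, so the number of time series for one metric is the product of the distinct values per label. The label names and counts are made up for illustration and do not come from the report or from any specific system.

```python
from math import prod


def series_count(label_values: dict[str, int]) -> int:
    """Worst-case number of time series for one metric.

    Assumes every combination of label values occurs, so the total is
    the product of the distinct-value counts of each label.
    """
    return prod(label_values.values())


# Hypothetical metric before the deployment: three bounded labels.
before = {"service": 20, "endpoint": 15, "status_code": 5}

# The "small change": one extra label holding a unique value per user.
after = {**before, "user_id": 10_000}

print(series_count(before))  # 1500
print(series_count(after))   # 15000000
```

One added label turns 1,500 series into 15 million in this made-up case, which is why a change that looks harmless in code review can slow every query and dashboard.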

Cardinality spikes are another example of the cloud-native complexity that is leading our developers and engineers to feel like they are drowning. From the same observability report, 33% of those surveyed said the above-mentioned issues spill over and disrupt their personal lives, and 39% felt frequently stressed out.

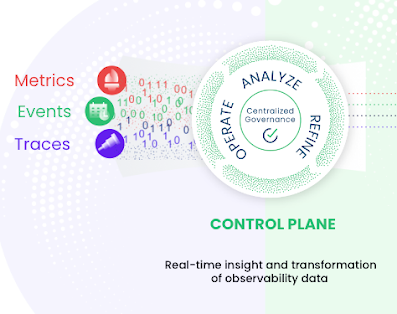

The only way to get a handle on this is to have more insights and control over how we are going to deal with high volumes of cloud-native observability data before it has the negative impact described above. We need a way to analyze, refine, and operate on our observability data live during ingestion and be able to take immediate action when problems arise, like cardinality issues.

When we have access to something like the control plane shown above, we can stop incoming cardinality issues before they overload our systems, preventing that data from flooding our backend storage until we can sort out what is going on. This ease of control gives on-call engineers powerful capabilities for taking immediate and decisive action, resulting in better outcomes.
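As a minimal sketch of the idea, and not how any particular product implements it, the following hypothetical `CardinalityGuard` sits in the ingestion path and drops a label once it has shown more distinct values than a configured budget. The class name, the `max_values_per_label` parameter, and the label names are all assumptions made for this example.

```python
from collections import defaultdict


class CardinalityGuard:
    """Hypothetical ingestion-time filter (illustrative only).

    Tracks distinct values seen per label and blocks any label that
    exceeds the budget, so it never reaches backend storage.
    """

    def __init__(self, max_values_per_label: int) -> None:
        self.max_values = max_values_per_label
        self.seen: dict[str, set[str]] = defaultdict(set)
        self.blocked: set[str] = set()

    def process(self, labels: dict[str, str]) -> dict[str, str]:
        """Return the labels that are still allowed for this sample."""
        kept = {}
        for name, value in labels.items():
            if name in self.blocked:
                continue  # label was already cut off
            self.seen[name].add(value)
            if len(self.seen[name]) > self.max_values:
                self.blocked.add(name)  # budget exceeded: drop from now on
                continue
            kept[name] = value
        return kept


guard = CardinalityGuard(max_values_per_label=3)
for i in range(5):
    out = guard.process({"service": "checkout", "user_id": f"u{i}"})

print(out)            # {'service': 'checkout'}
print(guard.blocked)  # {'user_id'}
```

A real control plane would also need to surface what was dropped and let an engineer reverse the decision, but even this small guard shows how acting during ingestion keeps one runaway label from flooding storage.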

The road to cloud-native success has many pitfalls, and understanding how to avoid the pillars, focusing instead on solutions for the phases of observability, will save much wasted time and energy.

Ignoring existing landscape?

Coming Up Next

Another pitfall organizations struggle with in cloud-native observability is ignoring their existing landscape. In the next article in this series, I'll share why this is a pitfall and how we can avoid it wreaking havoc on our cloud-native observability efforts.

Published at DZone with permission of Eric D. Schabell, DZone MVB. See the original article here.
